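Breaking Through the LVS Bottleneck: Deploying an LVS Cluster (OSPF + LVS)

Preface

Most readers will be familiar with LVS. This excellent open-source software has practically become synonymous with IP load balancing. In real production environments, however, an LVS director easily becomes a bottleneck under heavy load. The bottlenecks include the limits of the ipvs kernel module, CPU soft interrupts, and NIC performance. All of these can be tuned, and LVS tuning is covered in detail in a separate LVS tuning guide. Back to the topic: when a single LVS director inevitably hits a performance bottleneck, what can be done? This article describes how to scale LVS directors horizontally.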

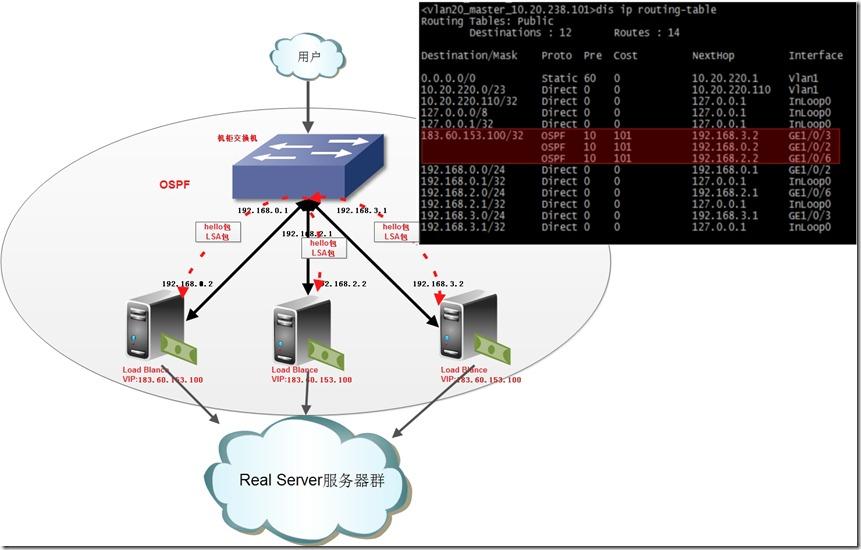

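Architecture diagram

As the routing table of the layer-3 device in the figure above shows, the VIP 183.60.153.100 has three next-hop addresses, which are the addresses of the three LVS directors. The resulting traffic path is: user -> VIP -> three LVS directors -> multiple RealServers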
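To picture how one VIP can be spread over three next hops, here is a minimal sketch. The function `pick_nexthop` and the use of `cksum` as the hash are my own assumptions for illustration; a real layer-3 device uses its own vendor-specific hash. The point is only that hashing a flow's source address and port keeps every packet of that flow on the same director.

```shell
#!/bin/sh
# Hypothetical illustration of per-flow equal-cost next-hop selection.
# The cksum hash and the function name are assumptions, not the switch's algorithm.

NEXTHOPS="192.168.0.2 192.168.2.2 192.168.3.2"   # LVS directors from this article

pick_nexthop() {
    # $1 = client source IP, $2 = client source port
    key="$1:$2"
    # cksum prints "<crc> <byte count>"; keep only the CRC
    crc=$(printf '%s' "$key" | cksum | cut -d' ' -f1)
    # Load the next hops into the positional parameters, then skip
    # (crc mod count) of them so that $1 is the chosen next hop
    set -- $NEXTHOPS
    shift $(( crc % $# ))
    printf '%s\n' "$1"
}
```

Calling `pick_nexthop 10.1.1.1 40000` twice prints the same director both times, while different clients or ports may land on different directors.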

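Architecture advantages

1. LVS directors scale freely and horizontally (up to 8, limited by the number of equal-cost routes the layer-3 device allows)

2. All LVS director resources are in use: all active, with no standby machine

Deployment

1. Hardware preparation

Layer-3 device: this article uses an H3C 5800 layer-3 switch

Three LVS directors: 192.168.0.2 192.168.2.2 192.168.3.2

Three RealServers: 183.60.153.101 183.60.153.102 183.60.153.103

2. OSPF configuration on the layer-3 device

- # Find the ports on the layer-3 switch that connect to the LVS directors; in this article they are g1/0/2, g1/0/3 and g1/0/6

- # Change g1/0/2 to a layer-3 (routed) port and assign it an IP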

- interface GigabitEthernet1/0/2

- port link-mode route

- ip address 192.168.0.1 255.255.255.0

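- # Configure the OSPF parameters: timer hello is the interval between hello packets, timer dead is the neighbor dead interval. The defaults are 10 and 40 seconds.

- # Hello packets maintain OSPF neighbor relationships. Here one is sent every second; if none arrives within 4 seconds, the neighbor is considered lost and the routes are updated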

- ospf timer hello 1

- ospf timer dead 4

- ospf dr-priority 100

- # Configure g1/0/3 and g1/0/6 in the same way

- interface GigabitEthernet1/0/3

- port link-mode route

- ip address 192.168.3.1 255.255.255.0

- ospf timer hello 1

- ospf timer dead 4

- ospf dr-priority 99

- interface GigabitEthernet1/0/6

- port link-mode route

- ip address 192.168.2.1 255.255.255.0

- ospf timer hello 1

- ospf timer dead 4

- ospf dr-priority 98

- # Configure OSPF

- ospf 1

- area 0.0.0.0

- network 192.168.0.0 0.0.0.255

- network 192.168.3.0 0.0.0.255

- network 192.168.2.0 0.0.0.255

3. OSPF configuration on the LVS directors

a. Install the software router quagga

- yum -y install quagga

b. Configure zebra.conf

vim /etc/quagga/zebra.conf

- hostname lvs-route-1

- password xxxxxx

- enable password xxxxxx

- log file /var/log/zebra.log

- service password-encryption

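c. Configure ospfd.conf

vim /etc/quagga/ospfd.conf

- # The OSPF configuration is similar to that of the layer-3 device above; note that the VIP (183.60.153.100) must be advertised

- # The router-id must be unique on each OSPF router, so adjust it (and the 192.168.x.0/24 network line) on every director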

- log file /var/log/ospf.log

- log stdout

- log syslog

- interface eth0

- ip ospf hello-interval 1

- ip ospf dead-interval 4

- router ospf

- ospf router-id 192.168.0.1

- log-adjacency-changes

- auto-cost reference-bandwidth 1000

- network 183.60.153.100/32 area 0.0.0.0

- network 192.168.0.0/24 area 0.0.0.0
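OSPF neighbors only form an adjacency when the hello and dead intervals agree on both ends, which is why the director uses 1 and 4 like the switch. As a rough sketch, the hypothetical helper `check_ospf_timers` below (the name and its arguments are my own, not part of quagga) reads an ospfd.conf and compares the two values against what the switch side uses.

```shell
#!/bin/sh
# Hypothetical helper: confirm that an ospfd.conf uses the same hello/dead
# intervals as the switch side (1 and 4 seconds in this article).

check_ospf_timers() {
    # $1 = path to ospfd.conf, $2 = expected hello, $3 = expected dead
    conf=$1
    want_hello=$2
    want_dead=$3
    # Take the first "ip ospf hello-interval N" / "ip ospf dead-interval N" line
    hello=$(awk '$1 == "ip" && $2 == "ospf" && $3 == "hello-interval" { print $4; exit }' "$conf")
    dead=$(awk '$1 == "ip" && $2 == "ospf" && $3 == "dead-interval" { print $4; exit }' "$conf")
    if [ "$hello" = "$want_hello" ] && [ "$dead" = "$want_dead" ]; then
        echo "timers match: hello=$hello dead=$dead"
        return 0
    fi
    echo "timer mismatch: hello=${hello:-unset} dead=${dead:-unset}" >&2
    return 1
}
```

On a director this could be run as `check_ospf_timers /etc/quagga/ospfd.conf 1 4` before starting ospfd. It only inspects the first interface stanza, which is enough for the single-interface setup here.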

d. Enable IP forwarding

- sed -i '/net.ipv4.ip_forward/d' /etc/sysctl.conf

- echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

- sysctl -p

e. Start the services

- /etc/init.d/zebra start

- /etc/init.d/ospfd start

- chkconfig zebra on

- chkconfig ospfd on
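Once both daemons are running, it is worth confirming that the director has actually reached the Full state with the switch. The sketch below is a hypothetical helper: it assumes the neighbor state is the third column of the `show ip ospf neighbor` output (for example `Full/DR`), so adjust the column if your quagga version prints a different layout.

```shell
#!/bin/sh
# Hypothetical helper: count OSPF neighbors in the Full state.
# Reads the text of "show ip ospf neighbor" on stdin; the third column is
# assumed to hold the neighbor state (e.g. "Full/DR").

count_full_neighbors() {
    awk '$3 ~ /^Full/ { n++ } END { print n + 0 }'
}
```

A possible use is `vtysh -c 'show ip ospf neighbor' | count_full_neighbors`; a result of 0 suggests the adjacency with the layer-3 device has not come up yet.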

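4. LVS keepalived configuration

In this architecture LVS can only be configured in DR mode. To use NAT mode, my idea is that the LVS directors would also need to run OSPF with the internal-network layer-3 device, in the same way as above. I have not verified this approach; if you have another solution, please let me know.

a. Edit the configuration file keepalived.conf. In the cluster architecture, all directors use the same configuration file

vim /etc/keepalived/keepalived.conf

- # keepalived global configuration

- global_defs {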

- notification_email {

- lxcong@gmail.com

- }

- notification_email_from lvs_notice@gmail.com

- smtp_server 127.0.0.1

- smtp_connect_timeout 30

- router_id Ospf_LVS_1

- }

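- # VRRP instance; in this architecture all LVS directors are configured as MASTER

- vrrp_instance LVS_Cluster { ## create the instance; the instance name is LVS_Cluster

- state MASTER # a classic master/backup pair would use BACKUP on the standby; here every director stays MASTER

- interface eth0 ## interface the VIP is bound to

- virtual_router_id 100 ## LVS_ID; must be unique within the same network

- priority 100 # election priority, the highest becomes MASTER (a classic standby would use 99)

- advert_int 1 # interval between VRRP advertisements, in seconds

- authentication { ## authentication settings; the type can be PASS or AH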

- auth_type PASS

- auth_pass 08856CD8

- }

- virtual_ipaddress {

- 183.60.153.100

- }

- }

- # LVS instance: this article uses DR mode with WRR scheduling. In fact, only DR mode can be used in this architecture

- virtual_server 183.60.153.100 80 {

- delay_loop 6

- lb_algo wrr

- lb_kind DR

- persistence_timeout 60

- protocol TCP

- real_server 183.60.153.101 80 {

- weight 1 # weight

- inhibit_on_failure # if this node fails, set its weight to 0 (the default is to remove it from the list)

- TCP_CHECK {

- connect_timeout 3

- nb_get_retry 3

- delay_before_retry 3

- connect_port 80

- }

- }

- real_server 183.60.153.102 80 {

- weight 1 # weight

- inhibit_on_failure # if this node fails, set its weight to 0 (the default is to remove it from the list)

- TCP_CHECK {

- connect_timeout 3

- nb_get_retry 3

- delay_before_retry 3

- connect_port 80

- }

- }

- real_server 183.60.153.103 80 {

- weight 1 # weight

- inhibit_on_failure # if this node fails, set its weight to 0 (the default is to remove it from the list)

- TCP_CHECK {

- connect_timeout 3

- nb_get_retry 3

- delay_before_retry 3

- connect_port 80

- }

- }

- }
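The three real_server blocks above differ only in their IP address, so with more RealServers it may be easier to generate them. The function below is a hypothetical sketch (the name `gen_real_servers` and the indentation are my own); it emits the same keywords and values used in the configuration above.

```shell
#!/bin/sh
# Hypothetical generator for the repeated real_server blocks in keepalived.conf.
# The function name and layout are illustrative; the keywords and values are
# the ones used in the configuration shown in this article.

gen_real_servers() {
    # $1 = service port, remaining arguments = RealServer IPs
    port=$1
    shift
    for ip in "$@"; do
        cat <<EOF
    real_server $ip $port {
        weight 1
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
            connect_port $port
        }
    }
EOF
    done
}
```

For example, `gen_real_servers 80 183.60.153.101 183.60.153.102 183.60.153.103` prints three blocks that can be pasted inside the virtual_server section.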

b. Start keepalived

- /etc/init.d/keepalived start

- chkconfig keepalived on
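After keepalived has started, the ipvs table should list the RealServers in DR mode, which `ipvsadm -Ln` shows as `Route` in the forwarding column. As a small sketch, the hypothetical helper below counts the RealServers that are in DR mode and still have a non-zero weight (with inhibit_on_failure, a failed node stays in the table with weight 0). It assumes the usual `-> address:port Forward Weight ...` column layout.

```shell
#!/bin/sh
# Hypothetical helper: count DR-mode RealServers with a non-zero weight.
# Reads "ipvsadm -Ln" output on stdin; assumes RealServer lines look like
#   -> 183.60.153.101:80  Route  1  0  0

count_active_realservers() {
    awk '$1 == "->" && $3 == "Route" && $4 > 0 { n++ } END { print n + 0 }'
}
```

A possible use on each director is `ipvsadm -Ln | count_active_realservers`, comparing the result with the number of RealServers you expect to be healthy.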

5. RealServer configuration

a. Add the init script /etc/init.d/lvs_realserver

Adjust the SNS_VIP variable in the script to suit your environment

- #!/bin/bash

- ### BEGIN INIT INFO

- # Provides: lvs_realserver

- # Default-Start: 3 4 5

- # Default-Stop: 0 1 6

- # Short-Description: LVS real_server service scripts

- # Description: LVS real_server start and stop controller

- ### END INIT INFO

- # Copyright 2013 kisops.com

- #

- # chkconfig: - 20 80

- #

- # Author: k_ops_yw@ijinshan.com

- # Multiple virtual IPs are separated by spaces

- SNS_VIP="183.60.153.100"

- . /etc/rc.d/init.d/functions

- if [[ -z "$SNS_VIP" ]];then

- echo 'Please set vips in '$0' with SNS_VIP!'

- exit 1

- fi

- start(){

- num=0

- for loop in $SNS_VIP

- do

- /sbin/ifconfig lo:$num $loop netmask 255.255.255.255 broadcast $loop

- /sbin/route add -host $loop dev lo:$num

- ((num++))

- done

- echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

- echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

- echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

- echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

- sysctl -e -p >/dev/null 2>&1

- }

- stop(){

- num=0

- for loop in $SNS_VIP

- do

- /sbin/ifconfig lo:$num down

- /sbin/route del -host $loop >/dev/null 2>&1

- ((num++))

- done

- echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

- echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

- echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

- echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

- sysctl -e -p >/dev/null 2>&1

- }

- case "$1" in

- start)

- start

- echo "RealServer Start OK"

- ;;

- stop)

- stop

- echo "RealServer Stopped"

- ;;

- restart)

- stop

- start

- ;;

- *)

- echo "Usage: $0 {start|stop|restart}"

- exit 1

- esac

- exit 0

b. Start the service

- service lvs_realserver start

- chkconfig lvs_realserver on
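In DR mode the RealServer must hold the VIP on lo without answering ARP for it, which is what the arp_ignore=1 and arp_announce=2 settings in the script are for. The sketch below is a hypothetical check (the function name and the optional base-directory argument are my own) that reads those four values back and reports any that differ.

```shell
#!/bin/sh
# Hypothetical helper: verify the ARP settings written by lvs_realserver.
# $1 optionally overrides the conf directory (defaults to the live /proc tree).

check_arp_settings() {
    base=${1:-/proc/sys/net/ipv4/conf}
    status=0
    for iface in lo all; do
        # A missing file is treated as an unset value
        ignore=$(cat "$base/$iface/arp_ignore" 2>/dev/null) || ignore=""
        announce=$(cat "$base/$iface/arp_announce" 2>/dev/null) || announce=""
        if [ "$ignore" != "1" ] || [ "$announce" != "2" ]; then
            echo "$iface: arp_ignore=${ignore:-?} arp_announce=${announce:-?} (expected 1 and 2)" >&2
            status=1
        fi
    done
    return $status
}
```

Running `check_arp_settings` with no argument after `service lvs_realserver start` returns 0 only when both lo and all carry the expected values.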

Summary

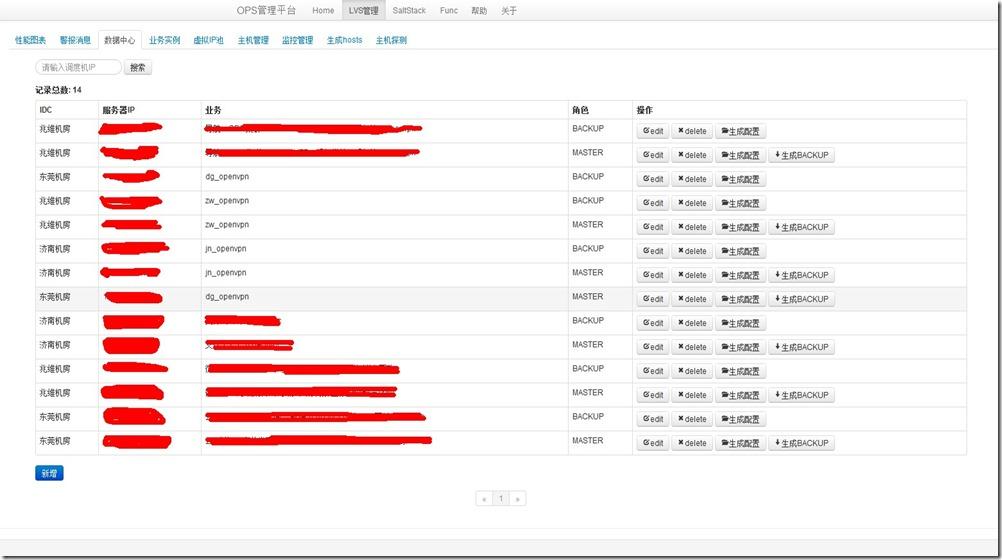

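That completes the deployment of the LVS Cluster architecture. If you know a better way to scale LVS, please leave a comment or contact me so we can exchange ideas (QQ: 83766787). I also built an LVS management platform some time ago, but it never turned out well, so I would be glad to hear from anyone with experience developing such platforms.

Original article: http://my.oschina.net/lxcong/blog/143904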