Analyzing a druid Connection Pool Bug Step by Step with Arthas

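One of our team's applications recently migrated its database from Oracle to TBase and then ran into a database connection leak that eventually prevented new connections from being created. This post walks through how the problem was analyzed.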

Symptoms

Application-side exception log

Frames unrelated to the problem have been removed from the stack trace for readability.

com.alibaba.druid.pool.GetConnectionTimeoutException: wait millis 5000, active 0, maxActive 30, creating 0, createErrorCount 13047

at com.alibaba.druid.pool.DruidDataSource.getConnectionInternal(DruidDataSource.java:1773)

at com.alibaba.druid.pool.DruidDataSource.getConnectionDirect(DruidDataSource.java:1427)

at com.alibaba.druid.filter.FilterChainImpl.dataSource_connect(FilterChainImpl.java:5059)

at com.alibaba.druid.filter.logging.LogFilter.dataSource_getConnection(LogFilter.java:917)

at com.alibaba.druid.filter.FilterChainImpl.dataSource_connect(FilterChainImpl.java:5055)

at com.alibaba.druid.filter.stat.StatFilter.dataSource_getConnection(StatFilter.java:726)

at com.alibaba.druid.filter.FilterChainImpl.dataSource_connect(FilterChainImpl.java:5055)

at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:1405)

at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:1397)

at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:100)

at org.springframework.jdbc.datasource.DataSourceTransactionManager.doBegin(DataSourceTransactionManager.java:204)

at org.springframework.transaction.support.AbstractPlatformTransactionManager.getTransaction(AbstractPlatformTransactionManager.java:373)

at org.springframework.transaction.interceptor.TransactionAspectSupport.createTransactionIfNecessary(TransactionAspectSupport.java:430)

at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:276)

at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:96)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179)

at org.springframework.aop.framework.JdkDynamicAopProxy.invoke(JdkDynamicAopProxy.java:213)

... ... // frames omitted

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.postgresql.util.PSQLException: FATAL: remaining connection slots are reserved for non-replication superuser connections

at org.postgresql.core.v3.ConnectionFactoryImpl.doAuthentication(ConnectionFactoryImpl.java:693)

at org.postgresql.core.v3.ConnectionFactoryImpl.tryConnect(ConnectionFactoryImpl.java:203)

at org.postgresql.core.v3.ConnectionFactoryImpl.openConnectionImpl(ConnectionFactoryImpl.java:258)

at org.postgresql.core.ConnectionFactory.openConnection(ConnectionFactory.java:54)

at org.postgresql.jdbc.PgConnection.<init>(PgConnection.java:263)

at org.postgresql.Driver.makeConnection(Driver.java:443)

at org.postgresql.Driver.connect(Driver.java:297)

at com.alibaba.druid.filter.FilterChainImpl.connection_connect(FilterChainImpl.java:156)

at com.alibaba.druid.filter.FilterAdapter.connection_connect(FilterAdapter.java:787)

at com.alibaba.druid.filter.FilterEventAdapter.connection_connect(FilterEventAdapter.java:38)

at com.alibaba.druid.filter.FilterChainImpl.connection_connect(FilterChainImpl.java:150)

at com.alibaba.druid.filter.stat.StatFilter.connection_connect(StatFilter.java:251)

at com.alibaba.druid.filter.FilterChainImpl.connection_connect(FilterChainImpl.java:150)

at com.alibaba.druid.pool.DruidAbstractDataSource.createPhysicalConnection(DruidAbstractDataSource.java:1659)

at com.alibaba.druid.pool.DruidAbstractDataSource.createPhysicalConnection(DruidAbstractDataSource.java:1723)

at com.alibaba.druid.pool.DruidDataSource$CreateConnectionThread.run(DruidDataSource.java:2838)

The stack trace shows that org.postgresql.util.PSQLException caused com.alibaba.druid.pool.GetConnectionTimeoutException, so the PSQLException is the root cause: FATAL: remaining connection slots are reserved for non-replication superuser connections. This error means the TBase server has no connection slots left for non-superuser connections, i.e. the connection limit has been reached. Next, let's look at the connection count on the TBase server.
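Reading the "Caused by" chain by eye works for a single log entry; the same reasoning can be sketched in code. The example below is a minimal illustration, not part of druid or the PostgreSQL driver: the class name RootCauseDemo is hypothetical, and plain java.sql.SQLException instances stand in for GetConnectionTimeoutException and PSQLException.

```java
import java.sql.SQLException;

class RootCauseDemo {
    /** Follows getCause() until the innermost throwable is reached. */
    static Throwable rootCause(Throwable t) {
        Throwable current = t;
        while (current.getCause() != null) {
            current = current.getCause();
        }
        return current;
    }

    public static void main(String[] args) {
        // Stand-in for org.postgresql.util.PSQLException (assumption: same message text as in the log).
        SQLException driverError = new SQLException(
                "FATAL: remaining connection slots are reserved for non-replication superuser connections");
        // Stand-in for com.alibaba.druid.pool.GetConnectionTimeoutException.
        SQLException poolTimeout = new SQLException("wait millis 5000, active 0, maxActive 30", driverError);
        // Prints the driver-level message, i.e. the root cause.
        System.out.println(rootCause(poolTimeout).getMessage());
    }
}
```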

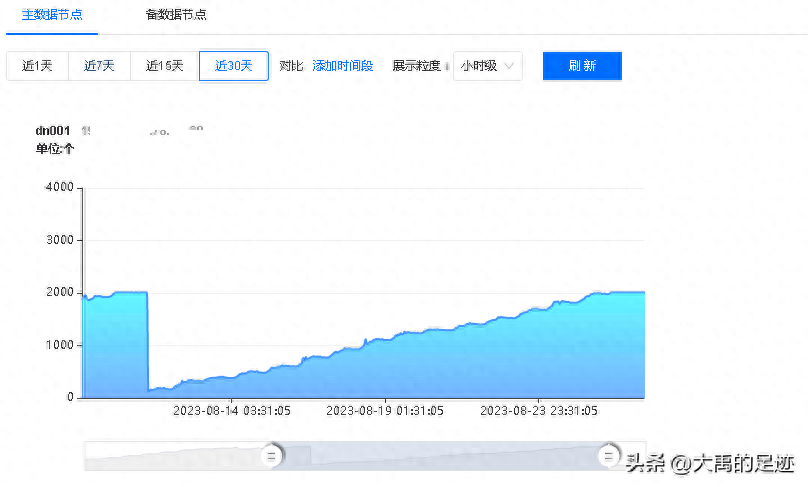

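TBase server-side connection count

Metrics data

The chart above shows that:

- The application could not create connections because the TBase server had reached its limit of 2000 connections;

- The connection count grew slowly while the application was running, which clearly points to a connection leak.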

Basic information

The application uses the druid connection pool, version druid-1.2.8; the TBase JDBC driver is postgresql-42.6.0; the TBase server version is 10.0 @ Tbase_v5.06.4.3 (commit: 6bd7f61dc). JDK version:

java version "1.8.0_65"

Java(TM) SE Runtime Environment (build 1.8.0_65-b17)

Java HotSpot(TM) 64-Bit Server VM (build 25.65-b01, mixed mode)

druid configuration

The druid configuration has two parts: Filter and DataSource.

Filter

<bean id="filter-log4j2" class="com.alibaba.druid.filter.logging.Log4j2Filter">

<property name="connectionLogEnabled" value="true" />

<property name="statementLogEnabled" value="true" />

<property name="statementExecutableSqlLogEnable" value="true" />

<property name="resultSetLogEnabled" value="false" />

</bean>

DataSource

<bean id="dataSource6" class="com.alibaba.druid.pool.DruidDataSource" init-method="init" destroy-method="close">

<property name="driverClassName" value="org.postgresql.Driver" />

<property name="url" value="" />

<property name="xxx" value="" />

<property name="xxx" value="" />

<property name="initialSize" value="2" />

<property name="minIdle" value="5" />

<property name="maxActive" value="30" />

<property name="maxWait" value="5000" />

<property name="timeBetweenEvictionRunsMillis" value="60000" />

<property name="minEvictableIdleTimeMillis" value="300000" />

<property name="testWhileIdle" value="true" />

<property name="testOnBorrow" value="false" />

<property name="testOnReturn" value="false" />

<property name="phyTimeoutMillis" value="1800000" />

<property name="poolPreparedStatements" value="false" />

<property name="maxPoolPreparedStatementPerConnectionSize" value="20" />

<!-- Automatically close leaked connections -->

<!-- Enable the removeAbandoned feature -->

<property name="removeAbandoned" value="true" />

<!-- 60 seconds, i.e. 1 minute -->

<property name="removeAbandonedTimeout" value="60" />

<!-- Log an error when an abandoned connection is closed -->

<property name="logAbandoned" value="true" />

<!-- Filters used for monitoring and statistics interception -->

<property name="filters" value="mergeStat" />

<property name="proxyFilters">

<list>

<ref bean="filter-log4j2" />

</list>

</property>

<property name="connectionProperties" value="druid.stat.slowSqlMillis=5000" />

</bean>

Problem analysis

removeAbandoned

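With a database connection leak, the first suspicion is that the application borrows connections from the pool and does not return them after use. The exception message rules this out, because active is 0 (no connections are currently in use). To make this easier to follow, the relevant druid logic is analyzed below. As shown above, the removeAbandoned settings in the druid configuration are: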

<!-- Automatically close leaked connections -->

<!-- Enable the removeAbandoned feature -->

<property name="removeAbandoned" value="true" />

<!-- 60 seconds, i.e. 1 minute -->

<property name="removeAbandonedTimeout" value="60" />

<!-- Log an error when an abandoned connection is closed -->

<property name="logAbandoned" value="true" />

Acquiring a connection

The following code is from the getConnectionDirect method of the DruidDataSource class.

DruidPooledConnection poolableConnection ;

... ...

if (removeAbandoned) {

StackTraceElement[] stackTrace = Thread.currentThread().getStackTrace();

poolableConnection.connectStackTrace = stackTrace;

poolableConnection.setConnectedTimeNano();

poolableConnection.traceEnable = true;

activeConnectionLock.lock();

try {

activeConnections.put(poolableConnection, PRESENT);

} finally {

activeConnectionLock.unlock();

}

}

Releasing a connection

The following code is from the recycle method of the DruidDataSource class, which is called when the application returns a connection to the pool.

DruidPooledConnection pooledConnection;

... ...

if (pooledConnection.traceEnable) {

Object oldInfo = null;

activeConnectionLock.lock();

try {

if (pooledConnection.traceEnable) {

oldInfo = activeConnections.remove(pooledConnection);

pooledConnection.traceEnable = false;

}

} finally {

activeConnectionLock.unlock();

}

if (oldInfo == null) {

if (LOG.isWarnEnabled()) {

LOG.warn("remove abandonded failed. activeConnections.size " + activeConnections.size());

}

}

}

If the application does not release a connection, activeConnections still holds a reference to it.

Releasing leaked connections

DruidDataSource has a thread named Druid-ConnectionPool-Destroy-XX that is responsible for releasing connections:

protected void createAndStartDestroyThread() {

destroyTask = new DestroyTask();

if (destroyScheduler != null) {

long period = timeBetweenEvictionRunsMillis;

if (period <= 0) {

period = 1000;

}

destroySchedulerFuture = destroyScheduler.scheduleAtFixedRate(destroyTask, period, period,

TimeUnit.MILLISECONDS);

initedLatch.countDown();

return;

}

String threadName = "Druid-ConnectionPool-Destroy-" + System.identityHashCode(this);

destroyConnectionThread = new DestroyConnectionThread(threadName);

destroyConnectionThread.start();

}

Because destroyScheduler is null, DestroyConnectionThread is used, and it runs the release task once every timeBetweenEvictionRunsMillis.

public class DestroyConnectionThread extends Thread {

public DestroyConnectionThread(String name){

super(name);

this.setDaemon(true);

}

public void run() {

initedLatch.countDown();

for (;;) {

// remove starting from the front

try {

if (closed || closing) {

break;

}

if (timeBetweenEvictionRunsMillis > 0) {

Thread.sleep(timeBetweenEvictionRunsMillis);

} else {

Thread.sleep(1000); //

}

if (Thread.interrupted()) {

break;

}

destroyTask.run();

} catch (InterruptedException e) {

break;

}

}

}

}

public class DestroyTask implements Runnable {

public DestroyTask() {

}

@Override

public void run() {

shrink(true, keepAlive);

if (isRemoveAbandoned()) {

removeAbandoned();

}

}

}

public int removeAbandoned() {

int removeCount = 0;

long currrentNanos = System.nanoTime();

List<DruidPooledConnection> abandonedList = new ArrayList<DruidPooledConnection>();

activeConnectionLock.lock();

try {

Iterator<DruidPooledConnection> iter = activeConnections.keySet().iterator();

for (; iter.hasNext();) {

DruidPooledConnection pooledConnection = iter.next();

if (pooledConnection.isRunning()) {

continue;

}

long timeMillis = (currrentNanos - pooledConnection.getConnectedTimeNano()) / (1000 * 1000);

if (timeMillis >= removeAbandonedTimeoutMillis) {

iter.remove();

pooledConnection.setTraceEnable(false);

abandonedList.add(pooledConnection);

}

}

} finally {

activeConnectionLock.unlock();

}

if (abandonedList.size() > 0) {

for (DruidPooledConnection pooledConnection : abandonedList) {

final ReentrantLock lock = pooledConnection.lock;

lock.lock();

try {

if (pooledConnection.isDisable()) {

continue;

}

} finally {

lock.unlock();

}

// release the connection

JdbcUtils.close(pooledConnection);

pooledConnection.abandond();

removeAbandonedCount++;

removeCount++;

// write the log entry

if (isLogAbandoned()) {

StringBuilder buf = new StringBuilder();

buf.append("abandon connection, owner thread: ");

buf.append(pooledConnection.getOwnerThread().getName());

buf.append(", connected at : ");

buf.append(pooledConnection.getConnectedTimeMillis());

buf.append(", open stackTrace\n");

StackTraceElement[] trace = pooledConnection.getConnectStackTrace();

for (int i = 0; i < trace.length; i++) {

buf.append("\tat ");

buf.append(trace[i].toString());

buf.append("\n");

}

buf.append("ownerThread current state is " + pooledConnection.getOwnerThread().getState()

+ ", current stackTrace\n");

trace = pooledConnection.getOwnerThread().getStackTrace();

for (int i = 0; i < trace.length; i++) {

buf.append("\tat ");

buf.append(trace[i].toString());

buf.append("\n");

}

LOG.error(buf.toString());

}

}

}

return removeCount;

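}

This shows that even if the application does not release a connection, the Druid-ConnectionPool-Destroy thread will release the connections in activeConnections (unless a business thread is blocked indefinitely and the connection stays in the running==true state, which the thread dumps ruled out).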
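The decision that removeAbandoned makes for each tracked connection can be isolated into a small predicate. The sketch below mirrors the condition in the method above; the class AbandonCheck and its parameters are hypothetical names for illustration, and the 60000 ms timeout assumes the removeAbandonedTimeout of 60 seconds from the configuration, expressed in milliseconds.

```java
class AbandonCheck {
    /**
     * Returns true when a borrowed connection would be treated as abandoned:
     * it is not currently running a statement and it has been held for at
     * least timeoutMillis since it was handed out.
     */
    static boolean isAbandoned(boolean running, long connectedNano, long nowNano, long timeoutMillis) {
        if (running) {
            return false; // connections that are executing are skipped
        }
        long heldMillis = (nowNano - connectedNano) / (1000 * 1000);
        return heldMillis >= timeoutMillis;
    }

    public static void main(String[] args) {
        long timeoutMillis = 60_000L;            // assumed: removeAbandonedTimeout = 60 seconds
        long borrowedAt = 0L;
        long now = 61_000_000_000L;              // 61 seconds later, in nanoseconds
        System.out.println(isAbandoned(false, borrowedAt, now, timeoutMillis)); // idle for 61 s
        System.out.println(isAbandoned(true, borrowedAt, now, timeoutMillis));  // still running
    }
}
```

With these inputs the first call reports the connection as abandoned and the second does not, which is why a permanently running connection is the only case that escapes the Destroy thread.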

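Were the leaked connections created outside the connection pool?

Another possible cause is that the leaked connections were not obtained from the pool but created directly by the application and never closed. The approach is to capture the stack trace of every connection creation and analyze where connections are created. We use the Arthas watch command for this.

// capture the local port and stack trace of each PgConnection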

watch org.postgresql.core.v3.ConnectionFactoryImpl openConnectionImpl "{returnObj.pgStream.connection.impl.localport,@org.apache.commons.lang3.exception.ExceptionUtils@getStackTrace(new java.lang.Exception())}" -n 99999999 > /xxx/xxx/xxx/xxx.txt &

The TBase database connection object is org.postgresql.jdbc.PgConnection, and its initialization calls org.postgresql.core.v3.ConnectionFactoryImpl.openConnectionImpl. The generated file records the local port and the stack trace of every database connection. Analysis of the stack traces showed that all database connections were created through the connection pool. Below is the stack trace of a database connection being created:

at org.postgresql.core.v3.ConnectionFactoryImpl.openConnectionImpl(ConnectionFactoryImpl.java:330)

at org.postgresql.core.ConnectionFactory.openConnection(ConnectionFactory.java:54)

at org.postgresql.jdbc.PgConnection.<init>(PgConnection.java:263)

at org.postgresql.Driver.makeConnection(Driver.java:443)

at org.postgresql.Driver.connect(Driver.java:297)

at com.alibaba.druid.filter.FilterChainImpl.connection_connect(FilterChainImpl.java:156)

at com.alibaba.druid.filter.FilterAdapter.connection_connect(FilterAdapter.java:787)

at com.alibaba.druid.filter.FilterEventAdapter.connection_connect(FilterEventAdapter.java:38)

at com.alibaba.druid.filter.FilterChainImpl.connection_connect(FilterChainImpl.java:150)

at com.alibaba.druid.filter.stat.StatFilter.connection_connect(StatFilter.java:251)

at com.alibaba.druid.filter.FilterChainImpl.connection_connect(FilterChainImpl.java:150)

at com.alibaba.druid.pool.DruidAbstractDataSource.createPhysicalConnection(DruidAbstractDataSource.java:1659)

at com.alibaba.druid.pool.DruidAbstractDataSource.createPhysicalConnection(DruidAbstractDataSource.java:1723)

at com.alibaba.druid.pool.DruidDataSource$CreateConnectionThread.run(DruidDataSource.java:2838)

Since all database connections are created through the connection pool, they should all be managed by the pool.

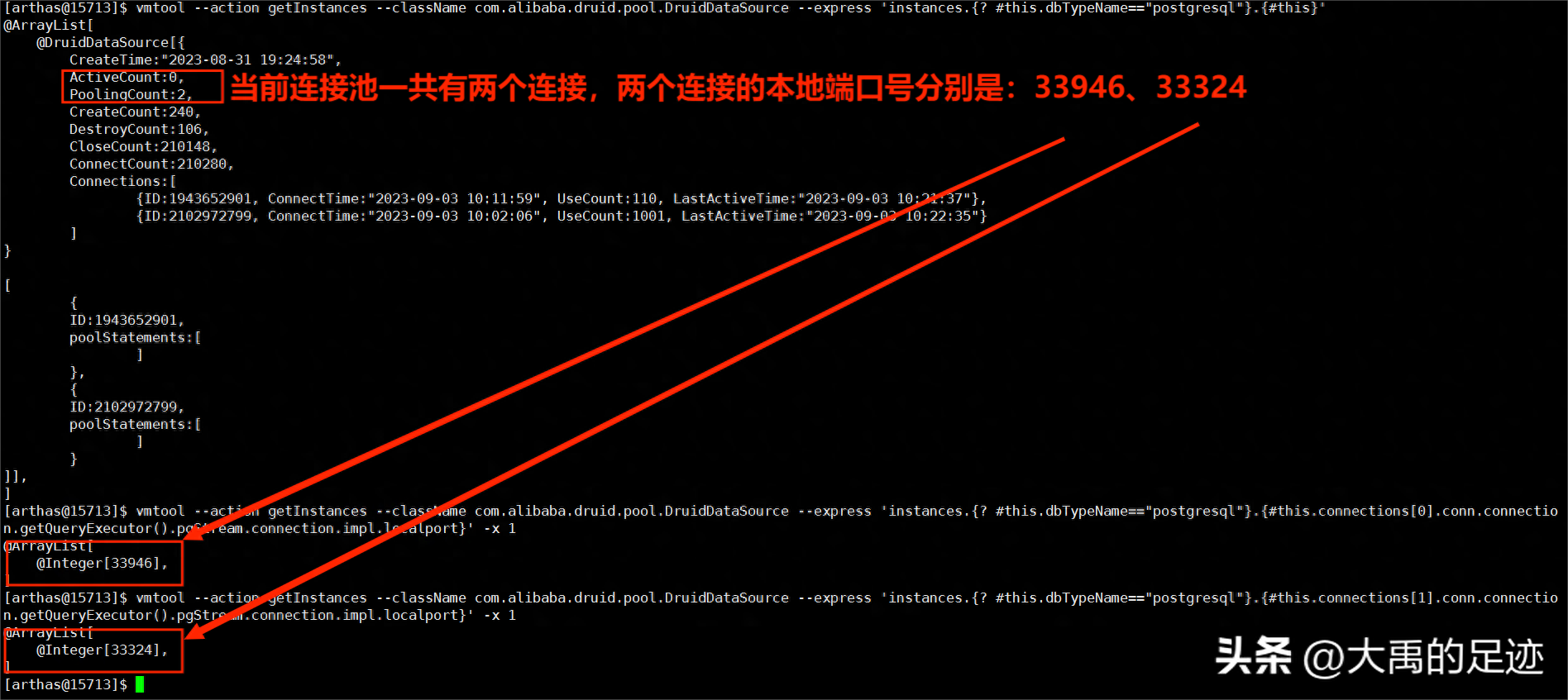

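The connections in the pool do not match what netstat shows

Since the connections are all managed by the pool, let's look at the connection information inside the pool:

Connection information in the pool

// show the connection pool information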

vmtool --action getInstances --className com.alibaba.druid.pool.DruidDataSource --express 'instances.{? #this.dbTypeName=="postgresql"}.{#this}'

// show the local ports of the connections in the pool

vmtool --action getInstances --className com.alibaba.druid.pool.DruidDataSource --express 'instances.{? #this.dbTypeName=="postgresql"}.{#this.connections[0].conn.connection.getQueryExecutor().pgStream.connection.impl.localport}' -x 1

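Metrics data

netstat connection information

Network connections

netstat reported 8 connections, of which only 33946 and 33324 were in the connection pool; the other 6 were not. At this point it is certain that connections are leaking, i.e. some connections are never closed. So where are connections closed?

What are the paths that close a connection?

The creation path of every PgConnection has already been captured. Now we capture the close path of every PgConnection: if a PgConnection was created but never closed, it is a leaked connection.

// capture the local port and stack trace of each PgConnection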

watch org.postgresql.jdbc.PgConnection close "{target.getQueryExecutor().pgStream.connection.impl.localport,@org.apache.commons.lang3.exception.ExceptionUtils@getStackTrace(new java.lang.Exception()),throwExp}" -b -n 99999999 > /xxx/xxx/xxx/xxx.txt &

The captured data shows two main paths that close a connection.

Through the business path:

at org.postgresql.jdbc.PgConnection.close(PgConnection.java:857)

at com.alibaba.druid.filter.FilterChainImpl.connection_close(FilterChainImpl.java:186)

at com.alibaba.druid.filter.FilterAdapter.connection_close(FilterAdapter.java:777)

at com.alibaba.druid.filter.logging.LogFilter.connection_close(LogFilter.java:481)

at com.alibaba.druid.filter.FilterChainImpl.connection_close(FilterChainImpl.java:181)

at com.alibaba.druid.filter.stat.StatFilter.connection_close(StatFilter.java:294)

at com.alibaba.druid.filter.FilterChainImpl.connection_close(FilterChainImpl.java:181)

at com.alibaba.druid.proxy.jdbc.ConnectionProxyImpl.close(ConnectionProxyImpl.java:114)

at com.alibaba.druid.util.JdbcUtils.close(JdbcUtils.java:85)

at com.alibaba.druid.pool.DruidDataSource.discardConnection(DruidDataSource.java:1546)

at com.alibaba.druid.pool.DruidDataSource.recycle(DruidDataSource.java:2011)

at com.alibaba.druid.pool.DruidPooledConnection.recycle(DruidPooledConnection.java:351)

at com.alibaba.druid.filter.FilterChainImpl.dataSource_recycle(FilterChainImpl.java:5049)

at com.alibaba.druid.filter.logging.LogFilter.dataSource_releaseConnection(LogFilter.java:907)

at com.alibaba.druid.filter.FilterChainImpl.dataSource_recycle(FilterChainImpl.java:5045)

at com.alibaba.druid.filter.stat.StatFilter.dataSource_releaseConnection(StatFilter.java:711)

at com.alibaba.druid.filter.FilterChainImpl.dataSource_recycle(FilterChainImpl.java:5045)

at com.alibaba.druid.pool.DruidPooledConnection.syncClose(DruidPooledConnection.java:324)

at com.alibaba.druid.pool.DruidPooledConnection.close(DruidPooledConnection.java:270)

at org.springframework.jdbc.datasource.DataSourceUtils.doCloseConnection(DataSourceUtils.java:341)

at org.springframework.jdbc.datasource.DataSourceUtils.doReleaseConnection(DataSourceUtils.java:328)

at org.springframework.jdbc.datasource.DataSourceUtils.releaseConnection(DataSourceUtils.java:294)

at org.springframework.jdbc.datasource.DataSourceTransactionManager.doCleanupAfterCompletion(DataSourceTransactionManager.java:329)

at org.springframework.transaction.support.AbstractPlatformTransactionManager.cleanupAfterCompletion(AbstractPlatformTransactionManager.java:1016)

at org.springframework.transaction.support.AbstractPlatformTransactionManager.processCommit(AbstractPlatformTransactionManager.java:811)

at org.springframework.transaction.support.AbstractPlatformTransactionManager.commit(AbstractPlatformTransactionManager.java:730)

at org.springframework.transaction.interceptor.TransactionAspectSupport.commitTransactionAfterReturning(TransactionAspectSupport.java:487)

at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:291)

at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:96)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:179)

at org.springframework.aop.framework.JdkDynamicAopProxy.invoke(JdkDynamicAopProxy.java:213)

at com.sun.proxy.$Proxy303.xxxxxxxBusinessMethod(Unknown Source)

at sun.reflect.GeneratedMethodAccessor149.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at com.taobao.hsf.remoting.provider.ReflectInvocationHandler.handleRequest0(ReflectInvocationHandler.java:83)

Through the druid Destroy thread path:

at org.postgresql.jdbc.PgConnection.close(PgConnection.java:857)

at com.alibaba.druid.filter.FilterChainImpl.connection_close(FilterChainImpl.java:186)

at com.alibaba.druid.filter.FilterAdapter.connection_close(FilterAdapter.java:777)

at com.alibaba.druid.filter.logging.LogFilter.connection_close(LogFilter.java:481)

at com.alibaba.druid.filter.FilterChainImpl.connection_close(FilterChainImpl.java:181)

at com.alibaba.druid.filter.stat.StatFilter.connection_close(StatFilter.java:294)

at com.alibaba.druid.filter.FilterChainImpl.connection_close(FilterChainImpl.java:181)

at com.alibaba.druid.proxy.jdbc.ConnectionProxyImpl.close(ConnectionProxyImpl.java:114)

at com.alibaba.druid.util.JdbcUtils.close(JdbcUtils.java:85)

at com.alibaba.druid.pool.DruidDataSource.shrink(DruidDataSource.java:3194)

at com.alibaba.druid.pool.DruidDataSource$DestroyTask.run(DruidDataSource.java:2938)

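at com.alibaba.druid.pool.DruidDataSource$DestroyConnectionThread.run(DruidDataSource.java:2922)

If a local port in the captured PgConnection creations does not appear among the captured PgConnection closes, the connection on that port was never closed. These ports matched the ports reported by netstat, so the guess was that either PgConnection.close was never called or it threw an exception.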
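Comparing the two capture files is a set difference over local ports. The sketch below shows the idea, assuming the ports have already been parsed out of the two watch output files; the class LeakedPortFinder and the port numbers are hypothetical and only for illustration. Local ports can be reused over a long capture, so a match by port alone is an approximation.

```java
import java.util.Arrays;
import java.util.Set;
import java.util.TreeSet;

class LeakedPortFinder {
    /** Returns the ports seen in the "created" capture that never appear in the "closed" capture. */
    static Set<Integer> leaked(Set<Integer> created, Set<Integer> closed) {
        Set<Integer> result = new TreeSet<>(created);
        result.removeAll(closed);
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical ports; real values would come from the two watch output files.
        Set<Integer> created = new TreeSet<>(Arrays.asList(40001, 40002, 40003, 40004));
        Set<Integer> closed = new TreeSet<>(Arrays.asList(40001, 40003));
        System.out.println(leaked(created, closed)); // prints [40002, 40004]
    }
}
```

The resulting set is what gets compared against the ports that netstat reports.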

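Did the connection pool actually release the connections?

This question is examined from two angles: aggregate statistics and an individual unclosed connection.

Aggregate statistics

druid records many metrics: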

vmtool --action getInstances --className com.alibaba.druid.pool.DruidDataSource --express 'instances.{? #this.dbTypeName=="postgresql"}.{#this}'

vmtool --action getInstances --className com.alibaba.druid.pool.DruidDataSource --express 'instances.{? #this.dbTypeName=="postgresql"}.{#this.discardCount}'

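Metrics data

The key fields in the figure are explained below:

| No. | Field | Description |
|---|---|---|
| 1 | ActiveCount | Number of connections currently in use by the application |
| 2 | PoolingCount | Number of idle connections currently in the pool |
| 3 | CreateCount | Number of physical connections the pool has created |
| 4 | DestroyCount | Number of physical connections the pool has closed |
| 5 | ConnectCount | Number of logical connections the application has requested from the pool |
| 6 | CloseCount | Number of logical connections the application has returned to the pool |
| 7 | discardCount | Number of physical connections closed; see the logic in the discardConnection and shrink methods for details |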

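Two relationships are checked here:

Physical connection creation and closing

From the druid logic we get the formula CreateCount = ActiveCount + PoolingCount + DestroyCount + discardCount. Plugging in the data gives 243 = 0 + 2 + 107 + 134.

Logical connection acquisition and release

From the druid logic we get the formula ConnectCount = ActiveCount + CloseCount + discardCount. Plugging in the data gives 212586 = 0 + 212452 + 134. Both identities hold, so from the pool's point of view the connections have been released.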
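The two balance checks are simple arithmetic, and writing them down makes them easy to repeat against fresh vmtool readings. The sketch below uses the figures quoted above; the class PoolAccounting and its method names are hypothetical helpers, not druid APIs.

```java
class PoolAccounting {
    /** CreateCount = ActiveCount + PoolingCount + DestroyCount + discardCount */
    static boolean physicalBalanced(long create, long active, long pooling, long destroy, long discard) {
        return create == active + pooling + destroy + discard;
    }

    /** ConnectCount = ActiveCount + CloseCount + discardCount */
    static boolean logicalBalanced(long connect, long active, long close, long discard) {
        return connect == active + close + discard;
    }

    public static void main(String[] args) {
        // Values taken from the metrics quoted in the article.
        System.out.println(physicalBalanced(243, 0, 2, 107, 134));   // true: 0 + 2 + 107 + 134 = 243
        System.out.println(logicalBalanced(212586, 0, 212452, 134)); // true: 0 + 212452 + 134 = 212586
    }
}
```

If either check came back false, the pool itself would be aware of a connection it had neither closed nor handed back, which is not what the data shows here.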

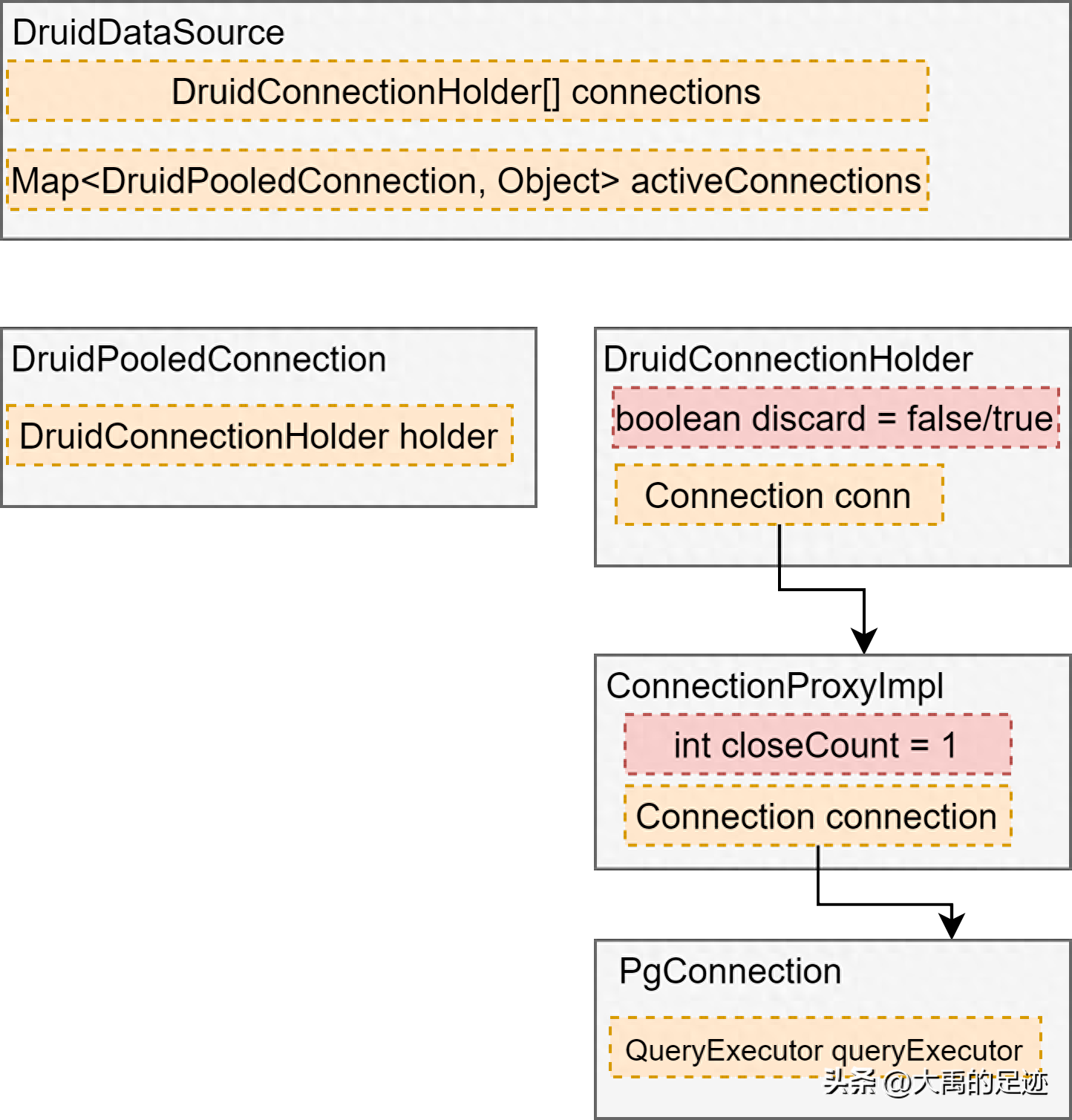

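An individual unclosed connection

First, the relationship between a few key objects in the pool needs to be clear. When the application requests a connection, the pool hands back a DruidPooledConnection; when the application returns the connection, it calls DruidPooledConnection.close(); under certain conditions the physical connection is closed.

The application acquires a connection from the pool

Acquiring a connection

The application returns a connection to the pool

Releasing a connection

When a connection is returned, the code does not reach the com.alibaba.druid.util.JdbcUtils.close logic.

Closing a connection

Closing a connection

When a PgConnection is closed, the state of the objects that were associated with it changes. Below we examine the state of the objects related to the PgConnection whose local port is 34802.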

DruidPooledConnection

vmtool --action getInstances --className com.alibaba.druid.pool.DruidPooledConnection --express 'instances.{? #this.conn!=null && #this.conn.dataSource.dbTypeName=="postgresql" && #this.conn.connection.getQueryExecutor().pgStream.connection.impl.localport==34802}.{#this.disable}' --limit 200000 -x 2

No matching object was found, which means DruidPooledConnection.close() had already been called, because close sets the object's conn to null.

DruidConnectionHolder

vmtool --action getInstances --className com.alibaba.druid.pool.DruidConnectionHolder --express 'instances.{? #this.dataSource.dbTypeName=="postgresql" && #this.conn.connection.getQueryExecutor().pgStream.connection.impl.localport==34802}.{#this.discard}' --limit 20000

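DruidConnectionHolder.discard=false. A connection can be closed through two paths:

- Closing through the DestroyTask.run path does not set DruidConnectionHolder.discard to true. The discard field of every leaked connection was false, so the leaked connections were handled through this path;

- Closing through the DruidPooledConnection.close() path sets DruidConnectionHolder.discard to true.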

ConnectionProxyImpl

@Override

public void close() throws SQLException {

FilterChainImpl chain = createChain();

chain.connection_close(this);

closeCount++;

recycleFilterChain(chain);

}

vmtool --action getInstances --className com.alibaba.druid.proxy.jdbc.ConnectionProxyImpl --express 'instances.{? #this.dataSource.dbTypeName=="postgresql" && #this.connection.getQueryExecutor().pgStream.connection.impl.localport==34802}.{#this.closeCount}' --limit 20000

ConnectionProxyImpl.closeCount=0; if the pool had closed the connection properly it would be 1.

PgConnection

@Override

public void close() throws SQLException {

if (queryExecutor == null) {

return;

}

if (queryExecutor.isClosed()) {

return;

}

releaseTimer();

queryExecutor.close();

openStackTrace = null;

}

// queryExecutor.close()

@Override

public void close() {

if (closed) {

return;

}

try {

LOGGER.log(Level.FINEST, " FE=> Terminate");

sendCloseMessage();

pgStream.flush();

pgStream.close();

} catch (IOException ioe) {

LOGGER.log(Level.FINEST, "Discarding IOException on close:", ioe);

}

closed = true;

}

// pgStream.close()

@Override

public void close() throws IOException {

if (encodingWriter != null) {

encodingWriter.close();

}

pgOutput.close();

pgInput.close();

connection.close();

}

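PgConnection.isClosed()=false; if the connection had been closed properly it would be true.

Further analysis

The analysis so far shows that DruidConnectionHolder.discard is false for every leaked connection, so the faulty code is most likely in the DestroyTask.run() method. That method consists mainly of shrink and removeAbandoned. removeAbandoned was analyzed earlier, so the focus here is the shrink logic, which has three main stages (our configuration does not involve the keepAlive handling): (1) select the evictConnections and keepAliveConnections; (2) remove those connections from connections; (3) process the evictConnections and keepAliveConnections.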

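Did the pool remove the connection without closing it?

The first suspicion was the stage that processes the evictConnections and keepAliveConnections: an exception during close could leave the connection removed from the pool but not fully closed. So Arthas watch was used to check whether any exception occurred during close: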

watch com.alibaba.druid.proxy.jdbc.ConnectionProxyImpl close "{target.connection.getQueryExecutor().pgStream.connection.impl.localport,@org.apache.commons.lang3.exception.ExceptionUtils@getStackTrace(new java.lang.Exception())}" 'target.dataSource.dbTypeName=="postgresql"' -e > /xxx/xxx/xxx/error.txt &

Nothing was captured. Looking at it from the PgConnection side: if close had been called on a PgConnection, the pgStream field of its associated org.postgresql.core.QueryExecutorCloseAction would have been set to null through PG_STREAM_UPDATER, but it had not been. Below is the method in QueryExecutorCloseAction:

@Override

public void close() throws IOException {

... ...

PGStream pgStream = this.pgStream;

if (pgStream == null || !PG_STREAM_UPDATER.compareAndSet(this, pgStream, null)) {

// The connection has already been closed

return;

}

sendCloseMessage(pgStream);

if (pgStream.isClosed()) {

return;

}

pgStream.flush();

pgStream.close();

}

In summary, close was never called on the leaked connections at all.

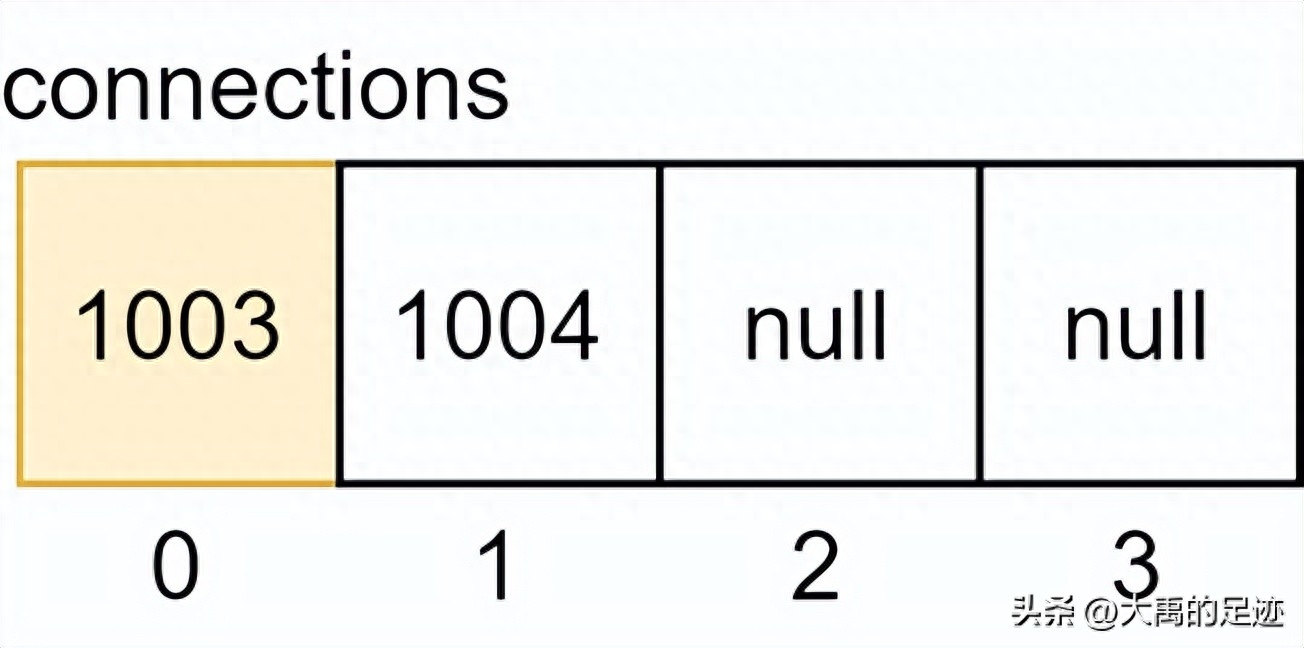

Was the connection lost in the stage that removes the evictConnections and keepAliveConnections from connections?

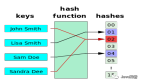

Code analysis and a search through the druid issues point to the following code:

int removeCount = evictCount + keepAliveCount;

if (removeCount > 0) {

System.arraycopy(connections, removeCount, connections, 0, poolingCount - removeCount);

Arrays.fill(connections, poolingCount - removeCount, poolingCount, null);

poolingCount -= removeCount;

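}

The problem goes roughly like this:

1. Suppose connections holds four connections, 1001, 1002, 1003 and 1004, of which 1001 and 1003 are identified as evictConnections.

2. The evictConnections are removed from connections. The code above assumes the connections to remove occupy the first removeCount slots, so with removeCount = 2 it drops 1001 and 1002 and keeps 1003 and 1004.

As the figures above show, connection 1002 ends up lost: it is no longer in the pool and was never put in the evict list, so close is never called on it, which is the connection leak.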
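The loss of 1002 can be reproduced outside druid with a plain array. The sketch below applies the same arraycopy and fill calls as the snippet above to the four example connections; the class ShrinkBugDemo and the use of Integer ids in place of connection holders are assumptions made for illustration.

```java
import java.util.Arrays;

class ShrinkBugDemo {
    /**
     * Applies the compaction from the snippet above to a copy of the pool array.
     * It silently assumes the removed entries are exactly the first removeCount slots.
     */
    static Integer[] compactAssumingPrefix(Integer[] connections, int poolingCount, int removeCount) {
        Integer[] pool = Arrays.copyOf(connections, connections.length);
        System.arraycopy(pool, removeCount, pool, 0, poolingCount - removeCount);
        Arrays.fill(pool, poolingCount - removeCount, poolingCount, null);
        return pool;
    }

    public static void main(String[] args) {
        Integer[] connections = {1001, 1002, 1003, 1004};
        // 1001 and 1003 were selected for eviction, so removeCount is 2.
        Integer[] after = compactAssumingPrefix(connections, 4, 2);
        System.out.println(Arrays.toString(after)); // prints [1003, 1004, null, null]
    }
}
```

After the call, 1002 is in neither the pool nor the evict list, so nothing will ever close it, while 1003 stays in the pool even though it was selected for eviction.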

Solution

The shrink logic was reworked starting with druid 1.2.18, so upgrading to a newer version is worth trying.
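For comparison, a compaction that cannot lose a connection keeps entries based on a per-slot flag instead of assuming the removed entries form a prefix. The sketch below is only an illustration of that idea and is not the code druid 1.2.18 ships; the class SafeCompactionDemo, the evict flag array, and the Integer ids are all hypothetical.

```java
import java.util.Arrays;

class SafeCompactionDemo {
    /**
     * Compacts the pool in place: every slot whose evict flag is false is kept,
     * in order, and the freed tail is set to null. Returns the new pooling count.
     */
    static int compact(Integer[] connections, boolean[] evict, int poolingCount) {
        int kept = 0;
        for (int i = 0; i < poolingCount; i++) {
            if (!evict[i]) {
                connections[kept++] = connections[i];
            }
        }
        Arrays.fill(connections, kept, poolingCount, null);
        return kept;
    }

    public static void main(String[] args) {
        Integer[] connections = {1001, 1002, 1003, 1004};
        boolean[] evict = {true, false, true, false}; // 1001 and 1003 are to be evicted
        int poolingCount = compact(connections, evict, 4);
        System.out.println(poolingCount + " " + Arrays.toString(connections)); // prints 2 [1002, 1004, null, null]
    }
}
```

With the same input as the failing example, 1002 and 1004 remain in the pool and only the flagged connections leave it.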