ChatGPT | CoT and ReAct in Prompts

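When writing prompts, we often run into inaccurate answers, or into the model's inability to access external knowledge. This article explains how CoT and ReAct work and how they improve the accuracy of large language models.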

Part 1: CoT (Chain of Thought)

1. What is CoT

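A standard prompt:

Q: Roger has 5 tennis balls. He buys 2 cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

A: The answer is 11.

Q: The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have now?

# Output

A: The answer is 29.

A CoT prompt:

Q: Roger has 5 tennis balls. He buys 2 cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

A: Roger started with 5 tennis balls. 2 cans of 3 tennis balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.

Q: The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have now?

# Output

The cafeteria had 23 apples.

They used 20 to make lunch.

They bought 6 more.

How many apples do they have now?

23 - 20 + 6 = 9

The answer is 9. (asked via 文心一言 / ERNIE Bot)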

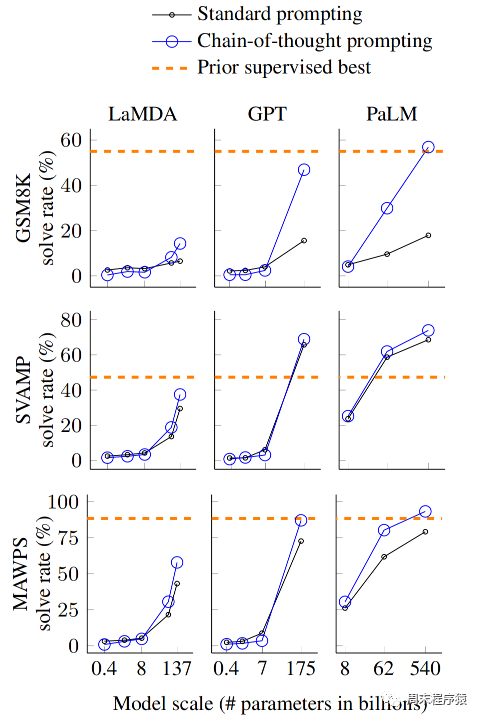

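CoT works by having GPT follow the intermediate reasoning steps shown in the prompt when it answers a similar question. This makes the model's reasoning transparent and yields higher accuracy than asking the question directly. Comparing the two prompts above, only the CoT prompt produces the correct answer; the standard prompt's answer is wrong. Jason Wei, Xuezhi Wang et al. proposed CoT in the paper https://arxiv.org/abs/2201.11903. They showed that intermediate reasoning steps enable complex reasoning, and they reported the following experimental data:

(Figure: CoT comparison data)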
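The two prompts above differ only in what each exemplar's answer contains. The minimal Python sketch below builds both variants from the same exemplar. The `Exemplar` record and the `build_prompt` helper are hypothetical names chosen for illustration; they do not come from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exemplar:
    question: str
    rationale: str  # intermediate reasoning steps, used only by CoT
    answer: str


def build_prompt(exemplars: List[Exemplar], question: str, cot: bool) -> str:
    """Join Q/A exemplars with blank lines, then append the new question."""
    parts = []
    for ex in exemplars:
        if cot:
            # CoT: the exemplar answer spells out the reasoning before the result
            answer = f"{ex.rationale} The answer is {ex.answer}."
        else:
            # Standard prompting: the exemplar answer is only the final result
            answer = f"The answer is {ex.answer}."
        parts.append(f"Q: {ex.question}\nA: {answer}")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


roger = Exemplar(
    question=("Roger has 5 tennis balls. He buys 2 cans of tennis balls. "
              "Each can has 3 tennis balls. How many tennis balls does he have now?"),
    rationale=("Roger started with 5 tennis balls. 2 cans of 3 tennis balls "
               "each is 6 tennis balls. 5 + 6 = 11."),
    answer="11",
)
cafeteria = ("The cafeteria had 23 apples. If they used 20 to make lunch and "
             "bought 6 more, how many apples do they have now?")

print(build_prompt([roger], cafeteria, cot=False))
print("-----")
print(build_prompt([roger], cafeteria, cot=True))
```

In the comparison above, the first kind of prompt produced a bare (and wrong) number. The second produced a worked solution.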

2. When to use CoT

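- CoT does little for small models. A model needs at least about 10B parameters before CoT has any effect, and about 100B before the effect is pronounced. So if you are training a private large model, you can try training it on data that includes reasoning steps.

- CoT pays off most on complex problems. Simple problems are already easy for a large model to reason through, so complex problems show its value better.

- CoT has limitations. The reasoning path it produces is not necessarily correct, and the person asking has to judge it for themselves.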
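Because a CoT rationale can be wrong, one cheap check is to re-verify the arithmetic the model writes out. The sketch below is a hypothetical helper. It assumes each step is written as an integer expression using only `+` and `-`, such as `23 - 20 + 6 = 9`. It only catches calculation slips. A chain that skips a step entirely can still pass.

```python
import re
from typing import List, Tuple

# An arithmetic step such as "23 - 20 + 6 = 9" (assumption: integers, + and - only)
STEP_RE = re.compile(r"(\d+(?:\s*[-+]\s*\d+)+)\s*=\s*(\d+)")
# Signed terms inside an expression: "23", "- 20", "+ 6"
TERM_RE = re.compile(r"[-+]?\s*\d+")


def evaluate(expr: str) -> int:
    """Evaluate a +/- expression by summing its signed terms."""
    return sum(int(term.replace(" ", "")) for term in TERM_RE.findall(expr))


def check_steps(rationale: str) -> List[Tuple[str, int, bool]]:
    """Return (expression, claimed result, claim is correct) for each step found."""
    return [(expr, int(claimed), evaluate(expr) == int(claimed))
            for expr, claimed in STEP_RE.findall(rationale)]


for step in check_steps("23 - 20 + 6 = 9. Then 5 + 6 = 12."):
    print(step)
# ('23 - 20 + 6', 9, True)
# ('5 + 6', 12, False)
```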

3. Building a simple Auto-CoT

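There is an open-source project for CoT, Auto-CoT (https://github.com/amazon-science/auto-cot). It automatically constructs CoT demonstrations and is built around the GPT-3 completion models (not GPT-3.5). If you are interested, take a look at its source code. I localized the project's prompts and ran its demo; the output is as follows: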

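```
========== manual_cot
2023/09/26 22:33:22
*****************************
Test Question:
A waiter had 14 customers to serve. If 3 customers left and he got another 39 customers, how many customers does he have in total now?
*****************************
Prompted Input:
Question: There are 15 trees in the grove. Grove workers will plant trees in the grove today. After they are done, there will be 21 trees. How many trees did the grove workers plant today?
Answer: There were 15 trees originally. After some more were planted, there were 21. So there must have been 21-15=6 trees planted. The answer is 6.
Question: If there are 3 cars in the parking lot and 2 more cars arrive, how many cars are in the parking lot?
Answer: There were originally 3 cars. 2 more cars arrived. 3+2=5 cars. The answer is 5.
Q: A waiter had 14 customers to serve. If 3 customers left and he got another 39 customers, how many customers does he have in total now?
A:
*****************************
Output:
There were 14 customers originally. Another 39 customers came. 14+39=53 customers. The answer is 53.
*****************************
========== auto_cot
2023/09/26 22:33:26
*****************************
Test Question:
A waiter had 14 customers to serve. If 3 customers left and he got another 39 customers, how many customers does he have in total now?
*****************************
Prompted Input:
Question: Wendy uploaded 45 pictures to Facebook. She put 27 pictures into one album and put the rest into 9 different albums. How many pictures were in each album?
Answer: Let's think step by step. First, we know that Wendy uploaded 45 pictures in total. Second, we know that Wendy put 27 pictures into one album. That means Wendy put the remaining 18 pictures into 9 different albums. That means each album has 2 pictures. The answer is 2.
Question: On Halloween, Katie and her sister combined the candy they received. Katie had 8 pieces of candy while her sister had 23. If they ate 8 pieces the first night, how many pieces do they have left?
Answer: Let's think step by step. Katie and her sister had 8+23=31 pieces of candy in total. If they ate 8 pieces the first night, they have 23 pieces left. The answer is 23.
Q: A waiter had 14 customers to serve. If 3 customers left and he got another 39 customers, how many customers does he have in total now?
A: Let's think step by step.
*****************************
Output:
He started with 14 customers. Then 3 customers left, so he had only 11 customers left. Then he got another 39 customers, so he now has 50 customers in total. The answer is 50.
*****************************
```

The manual_cot answer (53) is wrong because it ignores the 3 customers who left. The auto_cot answer, 14 - 3 + 39 = 50, is correct. The full code is as follows: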

```python
# -*- coding: utf-8 -*-
import argparse
import json
import time

import requests

TOKEN = "{your TOKEN}"

# Demos for auto_cot: rationales generated automatically (zero-shot CoT style)
multiarith_auto_json = {
    "demo": [
        {
            "question": ("Question: Wendy uploaded 45 pictures to Facebook. She put 27 pictures into one album "
                         "and put the rest into 9 different albums. How many pictures were in each album?\nAnswer:"),
            "rationale": ("Let's think step by step. First, we know that Wendy uploaded 45 pictures in total. "
                          "Second, we know that Wendy put 27 pictures into one album. That means Wendy put the "
                          "remaining 18 pictures into 9 different albums. That means each album has 2 pictures."),
            "pred_ans": "2",
            "gold_ans": "2",
        },
        {
            "question": ("Question: On Halloween, Katie and her sister combined the candy they received. Katie had "
                         "8 pieces of candy while her sister had 23. If they ate 8 pieces the first night, "
                         "how many pieces do they have left?\nAnswer:"),
            "rationale": ("Let's think step by step. Katie and her sister had 8+23=31 pieces of candy in total. "
                          "If they ate 8 pieces the first night, they have 23 pieces left."),
            "pred_ans": "23",
            "gold_ans": "23",
        },
    ]
}

# Demos for manual_cot: hand-written rationales
multiarith_manual_json = {
    "demo": [
        {
            "question": ("Question: There are 15 trees in the grove. Grove workers will plant trees in the grove "
                         "today. After they are done, there will be 21 trees. How many trees did the grove "
                         "workers plant today?\nAnswer:"),
            "rationale": ("There were 15 trees originally. After some more were planted, there were 21. "
                          "So there must have been 21-15=6 trees planted."),
            "pred_ans": "6",
        },
        {
            "question": ("Question: If there are 3 cars in the parking lot and 2 more cars arrive, "
                         "how many cars are in the parking lot?\nAnswer:"),
            "rationale": "There were originally 3 cars. 2 more cars arrived. 3+2=5 cars.",
            "pred_ans": "5",
        },
    ]
}


def request_api(prompt, engine, max_tokens, temperature, stop, top_p, frequency_penalty, presence_penalty):
    """POST to an OpenAI-compatible /v1/completions endpoint (here via a proxy)."""
    url = "https://{your proxy address}/v1/completions"
    headers = {
        "Content-Type": "application/json",
        "QPilot-ID": "246",  # not an OpenAI header; presumably required by the author's proxy
        "Authorization": "Bearer " + TOKEN,
    }
    params = {
        "model": engine,
        "prompt": prompt,
        "temperature": temperature,
        "max_tokens": max_tokens,
        "stop": stop,
        "top_p": top_p,
        "frequency_penalty": frequency_penalty,
        "presence_penalty": presence_penalty,
    }
    result = requests.post(url, data=json.dumps(params), headers=headers, timeout=60)
    result.raise_for_status()
    return result.json()


def decoder_for_gpt3(args, input, max_length):
    # The GPT-3 API allowed each user about 60 calls per minute, so throttle
    time.sleep(args.api_time_interval)

    # Map the --model option to an (Instruct) GPT-3 engine name
    if args.model == "gpt3":
        engine = "text-ada-001"
    elif args.model == "gpt3-medium":
        engine = "text-babbage-001"
    elif args.model == "gpt3-large":
        engine = "text-curie-001"
    elif args.model == "gpt3-xl":
        engine = "text-davinci-002"
    elif args.model == "text-davinci-001":
        engine = "text-davinci-001"
    elif args.model == "code-davinci-002":
        engine = "code-davinci-002"
    else:
        raise ValueError("model is not properly defined ...")

    # With few-shot demos on code-davinci-002, stop at the first newline
    use_newline_stop = ("few_shot" in args.method or "auto" in args.method) and engine == "code-davinci-002"
    response = request_api(
        engine=engine,
        prompt=input,
        max_tokens=max_length,
        temperature=args.temperature,
        top_p=1,
        frequency_penalty=0,
        presence_penalty=0,
        stop=["\n"] if use_newline_stop else None,
    )
    return response["choices"][0]["text"]


def create_demo_text(args, cot_flag):
    """Concatenate the demos into the few-shot prefix of the prompt."""
    demo_text = ""
    for line in args.demo_content["demo"]:
        x, z, y = line["question"], line["rationale"], line["pred_ans"]
        if cot_flag:
            # question + rationale + "The answer is" + answer
            demo_text += x + " " + z + " " + args.direct_answer_trigger_for_fewshot + " " + y + ".\n\n"
        else:
            demo_text += x + " " + args.direct_answer_trigger_for_fewshot + " " + y + ".\n\n"
    return demo_text


class Decoder:
    def decode(self, args, input, max_length):
        return decoder_for_gpt3(args, input, max_length)


def cot(method, question):
    args = parse_arguments()
    decoder = Decoder()
    args.method = method

    # Every method except zero_shot_cot gets a demo prefix
    if args.method != "zero_shot_cot":
        if args.method == "auto_cot":
            args.demo_content = multiarith_auto_json
        else:
            args.demo_content = multiarith_manual_json
        demo = create_demo_text(args, cot_flag=True)
    else:
        demo = None

    x = "Q: " + question + "\n" + "A:"
    print('*****************************')
    print("Test Question:")
    print(question)
    print('*****************************')

    if args.method == "zero_shot":
        x = x + " " + args.direct_answer_trigger_for_zeroshot
    elif args.method == "zero_shot_cot":
        x = x + " " + args.cot_trigger
    elif args.method == "manual_cot":
        x = demo + x
    elif args.method == "auto_cot":
        x = demo + x + " " + args.cot_trigger
    else:
        raise ValueError("method is not properly defined ...")

    print("Prompted Input:")
    print(x.replace("\n\n", "\n").strip())
    print('*****************************')

    max_length = args.max_length_cot if "cot" in args.method else args.max_length_direct
    z = decoder.decode(args, x, max_length)
    z = z.replace("\n\n", "\n").replace("\n", "").strip()

    if args.method == "zero_shot_cot":
        # Second call: append the answer trigger to extract the final answer
        z2 = x + z + " " + args.direct_answer_trigger_for_zeroshot_cot
        pred = decoder.decode(args, z2, args.max_length_direct)
        print("Output:")
        print(z + " " + args.direct_answer_trigger_for_zeroshot_cot + " " + pred)
    else:
        print("Output:")
        print(z)
    print('*****************************')


def parse_arguments():
    parser = argparse.ArgumentParser(description="Zero-shot-CoT")
    parser.add_argument("--max_num_worker", type=int, default=0,
                        help="maximum number of workers for dataloader")
    parser.add_argument("--model", type=str, default="gpt3-xl",
                        help="model used for decoding. Note that 'gpt3' are the smallest models.")
    parser.add_argument("--method", type=str, default="auto_cot",
                        choices=["zero_shot", "zero_shot_cot", "few_shot", "few_shot_cot",
                                 "manual_cot", "auto_cot"],
                        help="method")
    parser.add_argument("--cot_trigger_no", type=int, default=1,
                        help="A trigger sentence that elicits a model to execute chain of thought")
    parser.add_argument("--max_length_cot", type=int, default=256,
                        help="maximum length of output tokens by model for reasoning extraction")
    parser.add_argument("--max_length_direct", type=int, default=32,
                        help="maximum length of output tokens by model for answer extraction")
    parser.add_argument("--api_time_interval", type=float, default=1.0,
                        help="seconds to sleep between API calls")
    parser.add_argument("--temperature", type=float, default=0, help="sampling temperature")
    args = parser.parse_args()

    # Trigger phrases used when building the prompts
    args.direct_answer_trigger_for_fewshot = "The answer is"
    args.direct_answer_trigger_for_zeroshot = "The answer is"
    args.direct_answer_trigger_for_zeroshot_cot = "The answer is"
    args.cot_trigger = "Let's think step by step."
    return args


if __name__ == "__main__":
    ans = ("A waiter had 14 customers to serve. If 3 customers left and he got another "
           "39 customers, how many customers does he have in total now?")
    print("========== manual_cot")
    cot("manual_cot", ans)
    print("========== auto_cot")
    cot("auto_cot", ans)
```

Part 2: ReAct (Reasoning + Acting)

1. What is ReAct

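Before getting into ReAct, let's start with an example:

Question What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into?

Thought 1 I need to search Colorado orogeny, find the area that the eastern sector of the Colorado orogeny extends into, then find the elevation range of that area.

Action 1 Search[Colorado orogeny]

Observation 1 The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas.

Thought 2 It does not mention the eastern sector. So I need to look up eastern sector.

Action 2 Lookup[eastern sector]

Observation 2 (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny.

Thought 3 The eastern sector of the Colorado orogeny extends into the High Plains. So I need to search High Plains and find its elevation range.

Action 3 Search[High Plains]

Observation 3 High Plains refers to one of two distinct land regions.

Thought 4 I need to instead search High Plains (United States).

Action 4 Search[High Plains (United States)]

Observation 4 The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3]

Thought 5 The High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft.

Action 5 Finish[1,800 to 7,000 ft]

... (here, the searches are performed by a GPT plugin that looks things up in a search engine)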

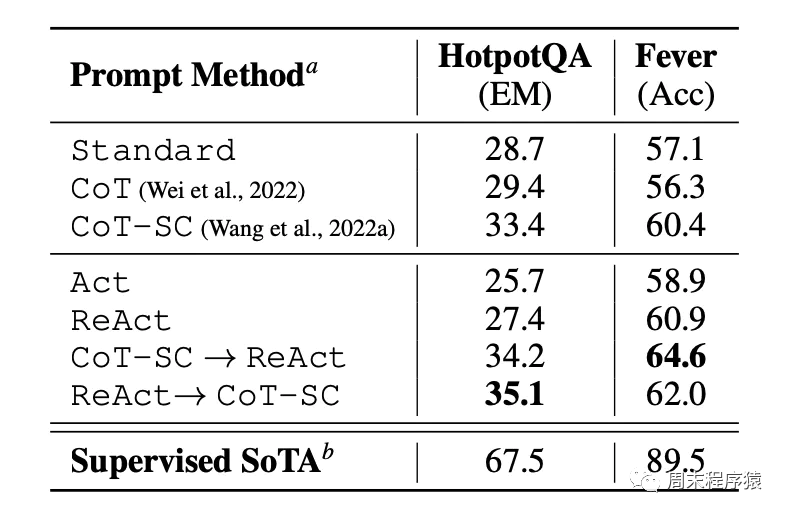

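Shunyu Yao, Jeffrey Zhao et al. proposed ReAct in https://arxiv.org/abs/2210.03629. In ReAct, LLMs generate reasoning traces and task-specific actions in an interleaved manner. On language and decision-making tasks, this outperforms several state-of-the-art baselines, and it also improves the human interpretability and trustworthiness of LLMs. ReAct combines the CoT approach described above with tools, so the reasoning process draws on both the model's internal knowledge and external information.

The paper's results figure compares different prompting methods on HotpotQA (knowledge-intensive reasoning tasks such as question answering) and Fever (fact verification). ReAct generally outperforms Act (actions only). ReAct also beats CoT on Fever, but it trails CoT on HotpotQA. The paper includes a detailed error analysis. In summary:

- CoT suffers from fact hallucination.

- ReAct's structural constraints reduce its flexibility in formulating reasoning steps.

- ReAct depends heavily on the information it retrieves.

Source: https://arxiv.org/abs/2210.03629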
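The minimal Python sketch below makes the interleaving concrete. It is not the paper's or LangChain's code. The `search` tool is a hypothetical dictionary lookup standing in for a real search engine. `scripted_llm` is a fake model that replays fixed Thought/Action lines instead of calling an LLM. On each turn, the loop appends the model's Thought/Action to the transcript, runs the named tool, and appends the tool's Observation. The loop ends when the model emits `Finish[...]`.

```python
import re
from typing import Callable, Optional, Tuple

# Matches lines such as "Action: Search[High Plains]" or "Action: Finish[...]"
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")

# Hypothetical toy "search engine": a dict standing in for a real search tool
PAGES = {
    "Colorado orogeny": ("The Colorado orogeny was an episode of mountain building in Colorado "
                         "and surrounding areas. The eastern sector extends into the High Plains."),
    "High Plains (United States)": ("From east to west, the High Plains rise in elevation "
                                    "from around 1,800 to 7,000 ft."),
}


def search(term: str) -> str:
    return PAGES.get(term, f"Could not find [{term}].")


def react(question: str, llm: Callable[[str], str],
          max_steps: int = 5) -> Tuple[Optional[str], str]:
    """Interleave Thought/Action (from the model) with Observation (from a tool)."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model emits "Thought: ...\nAction: Tool[arg]"
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            break  # the model did not follow the expected format
        tool, arg = match.groups()
        if tool == "Finish":
            return arg, transcript
        observation = search(arg) if tool == "Search" else f"Unknown tool: {tool}"
        transcript += f"Observation: {observation}\n"
    return None, transcript


def scripted_llm(steps):
    """Fake model that replays fixed steps and ignores the prompt (demo only)."""
    replies = iter(steps)
    return lambda prompt: next(replies)


fake_llm = scripted_llm([
    "Thought: I need to search Colorado orogeny.\nAction: Search[Colorado orogeny]",
    "Thought: The eastern sector extends into the High Plains.\n"
    "Action: Search[High Plains (United States)]",
    "Thought: The High Plains rise from 1,800 to 7,000 ft.\nAction: Finish[1,800 to 7,000 ft]",
])
answer, transcript = react("What is the elevation range for the area that the eastern "
                           "sector of the Colorado orogeny extends into?", fake_llm)
print(transcript)
print("Answer:", answer)  # Answer: 1,800 to 7,000 ft
```

With a real model, `llm` would send the few-shot examples plus the transcript to the API. It would typically also set a stop sequence before "Observation:", so that the model does not invent observations itself.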

2. Testing ReAct

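Using LangChain's existing toolchain, let's test the question "What is the name of the peak next to Mount Everest, to its northwest? Who has successfully climbed it? Please answer in Chinese." First, we ask GPT-3.5 directly:

(Screenshot: GPT-3.5's answer to the ReAct test question)

The result clearly misses the point, so we try ReAct instead (using text-davinci-003). The code is as follows: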

```python
# Update or install the required libraries:
# pip install --upgrade openai
# pip install --upgrade langchain
# pip install google-search-results
import os

from langchain.llms import OpenAI
from langchain.agents import load_tools
from langchain.agents import initialize_agent

os.environ["OPENAI_API_KEY"] = "{your TOKEN}"
os.environ["OPENAI_API_BASE"] = "{your proxy address}"
# https://serpapi.com/manage-api-key
os.environ["SERPAPI_API_KEY"] = "{key obtained by registering at serpapi.com}"

llm = OpenAI(model_name="text-davinci-003", temperature=0)
# Tools: SerpAPI web search plus an LLM-backed calculator
tools = load_tools(["serpapi", "llm-math"], llm=llm)
agent = initialize_agent(
    tools, llm, agent="zero-shot-react-description", verbose=True)

agent.run("What is the name of the peak next to Mount Everest, to its northwest? "
          "Who has successfully climbed it? Please answer in Chinese.")
```

Its output, showing the process and the result:

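```
> Entering new AgentExecutor chain...
I need to find out who successfully climbed the mountain and then calculate their age cubed
Action: Search
Action Input: "who successfully climbed the mountain near Mount Everest northwest"
Observation: Edmund Hillary (left) and Sherpa Tenzing Norgay reached the 29,035-foot summit of Everest on May 29, 1953, becoming the first people to stand atop the world's highest mountain.
Thought: I need to find out Edmund Hillary's age
Action: Search
Action Input: "Edmund Hillary age"
Observation: 88 years
Thought: I need to calculate 88 cubed
Action: Calculator
Action Input: 88^3
Observation: Answer: 681472
Thought: I now know the final answer
Final Answer: 意蒙德·希拉里 (Edmund Hillary) successfully summited the peak to the northwest next to Mount Everest, and his age cubed is 681472.
> Finished chain.
```

The trace shows the ReAct loop at work. The agent alternates Thought, Action (a search or a calculator call) and Observation, and in doing so it pulls in external information the bare model lacks. The answer itself is still unreliable, however. The agent set itself a sub-task the question never asked for (cubing Hillary's age). The search result it relied on also describes the first ascent of Everest itself rather than of a neighboring peak. Even so, this suggests that combining ReAct with other models and tools may lead to more capable systems.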

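3. How is ReAct implemented?

In LangChain's source code, the ReAct implementation is fairly simple: it is still built on a prompt template. Let's look at the Python code under langchain/agents/react: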

```python
from langchain.prompts import PromptTemplate

EXAMPLES = [
    """Question: What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into?
Thought: I need to search Colorado orogeny, find the area that the eastern sector of the Colorado orogeny extends into, then find the elevation range of the area.
Action: Search[Colorado orogeny]
Observation: The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas.
Thought: It does not mention the eastern sector. So I need to look up eastern sector.
Action: Lookup[eastern sector]
Observation: (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny.
Thought: The eastern sector of Colorado orogeny extends into the High Plains. So I need to search High Plains and find its elevation range.
Action: Search[High Plains]
Observation: High Plains refers to one of two distinct land regions
Thought: I need to instead search High Plains (United States).
Action: Search[High Plains (United States)]
Observation: The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3]
Thought: High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft.
Action: Finish[1,800 to 7,000 ft]""",
    """Question: Musician and satirist Allie Goertz wrote a song about the "The Simpsons" character Milhouse, who Matt Groening named after who?
Thought: The question simplifies to "The Simpsons" character Milhouse is named after who. I only need to search Milhouse and find who it is named after.
Action: Search[Milhouse]
Observation: Milhouse Mussolini Van Houten is a recurring character in the Fox animated television series The Simpsons voiced by Pamela Hayden and created by Matt Groening.
Thought: The paragraph does not tell who Milhouse is named after, maybe I can look up "named after".
Action: Lookup[named after]
Observation: (Result 1 / 1) Milhouse was named after U.S. president Richard Nixon, whose middle name was Milhous.
Thought: Milhouse was named after U.S. president Richard Nixon, so the answer is Richard Nixon.
Action: Finish[Richard Nixon]""",
    """Question: Which documentary is about Finnish rock groups, Adam Clayton Powell or The Saimaa Gesture?
Thought: I need to search Adam Clayton Powell and The Saimaa Gesture, and find which documentary is about Finnish rock groups.
Action: Search[Adam Clayton Powell]
Observation: Could not find [Adam Clayton Powell]. Similar: [’Adam Clayton Powell III’, ’Seventh Avenue (Manhattan)’, ’Adam Clayton Powell Jr. State Office Building’, ’Isabel Washington Powell’, ’Adam Powell’, ’Adam Clayton Powell (film)’, ’Giancarlo Esposito’].
Thought: To find the documentary, I can search Adam Clayton Powell (film).
Action: Search[Adam Clayton Powell (film)]
Observation: Adam Clayton Powell is a 1989 American documentary film directed by Richard Kilberg. The film is about the rise and fall of influential African-American politician Adam Clayton Powell Jr.[3][4] It was later aired as part of the PBS series The American Experience.
Thought: Adam Clayton Powell (film) is a documentary about an African-American politician, not Finnish rock groups. So the documentary about Finnish rock groups must instead be The Saimaa Gesture.
Action: Finish[The Saimaa Gesture]""",
    """Question: What profession does Nicholas Ray and Elia Kazan have in common?
Thought: I need to search Nicholas Ray and Elia Kazan, find their professions, then find the profession they have in common.
Action: Search[Nicholas Ray]
Observation: Nicholas Ray (born Raymond Nicholas Kienzle Jr., August 7, 1911 - June 16, 1979) was an American film director, screenwriter, and actor best known for the 1955 film Rebel Without a Cause.
Thought: Professions of Nicholas Ray are director, screenwriter, and actor. I need to search Elia Kazan next and find his professions.
Action: Search[Elia Kazan]
Observation: Elia Kazan was an American film and theatre director, producer, screenwriter and actor.
Thought: Professions of Elia Kazan are director, producer, screenwriter, and actor. So profession Nicholas Ray and Elia Kazan have in common is director, screenwriter, and actor.
Action: Finish[director, screenwriter, actor]""",
    """Question: Which magazine was started first Arthur’s Magazine or First for Women?
Thought: I need to search Arthur’s Magazine and First for Women, and find which was started first.
Action: Search[Arthur’s Magazine]
Observation: Arthur’s Magazine (1844-1846) was an American literary periodical published in Philadelphia in the 19th century.
Thought: Arthur’s Magazine was started in 1844. I need to search First for Women next.
Action: Search[First for Women]
Observation: First for Women is a woman’s magazine published by Bauer Media Group in the USA.[1] The magazine was started in 1989.
Thought: First for Women was started in 1989. 1844 (Arthur’s Magazine) < 1989 (First for Women), so Arthur’s Magazine was started first.
Action: Finish[Arthur’s Magazine]""",
    """Question: Were Pavel Urysohn and Leonid Levin known for the same type of work?
Thought: I need to search Pavel Urysohn and Leonid Levin, find their types of work, then find if they are the same.
Action: Search[Pavel Urysohn]
Observation: Pavel Samuilovich Urysohn (February 3, 1898 - August 17, 1924) was a Soviet mathematician who is best known for his contributions in dimension theory.
Thought: Pavel Urysohn is a mathematician. I need to search Leonid Levin next and find its type of work.
Action: Search[Leonid Levin]
Observation: Leonid Anatolievich Levin is a Soviet-American mathematician and computer scientist.
Thought: Leonid Levin is a mathematician and computer scientist. So Pavel Urysohn and Leonid Levin have the same type of work.
Action: Finish[yes]""",
]

SUFFIX = """\nQuestion: {input}
{agent_scratchpad}"""

WIKI_PROMPT = PromptTemplate.from_examples(
    EXAMPLES, SUFFIX, ["input", "agent_scratchpad"]
)
```

The implementation flow is as follows:

(1) The prompt feeds the model worked examples in the format of the template above: Thought: xxx Action: Search[xxx].

(2) Following the same pattern as the template, the LLM reasons step by step in the CoT style and fetches external knowledge.

(3) When Action: Finish returns the final result, the process ends.
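The template mechanics can be approximated in plain Python without LangChain. The sketch below rests on two assumptions. First, following our understanding of `PromptTemplate.from_examples`, it joins the examples and the suffix with a blank line. Second, the scratchpad format (each step's model output, then an `Observation:` line, then a trailing `Thought:` cue) is our own guess and not LangChain's exact code. `build_scratchpad` and `render_prompt` are hypothetical helpers.

```python
from typing import List, Tuple

SUFFIX = "\nQuestion: {input}\n{agent_scratchpad}"


def build_scratchpad(steps: List[Tuple[str, str]]) -> str:
    """Turn (model output, tool observation) pairs into the running scratchpad."""
    scratchpad = ""
    for model_output, observation in steps:
        scratchpad += f"{model_output}\nObservation: {observation}\n"
    return scratchpad + "Thought:"  # cue the model to write its next thought


def render_prompt(examples: List[str], suffix: str, question: str,
                  steps: List[Tuple[str, str]]) -> str:
    """Join examples and suffix with blank lines, then fill in the variables."""
    template = "\n\n".join([*examples, suffix])
    return template.format(input=question, agent_scratchpad=build_scratchpad(steps))


# Hypothetical, shortened example in the same format as EXAMPLES
examples = [
    "Question: Who was Milhouse named after?\n"
    "Thought: I need to search Milhouse.\n"
    "Action: Search[Milhouse]\n"
    "Observation: Milhouse was named after U.S. president Richard Nixon.\n"
    "Thought: So the answer is Richard Nixon.\n"
    "Action: Finish[Richard Nixon]",
]
steps = [("Thought: I need to search Nicholas Ray.\nAction: Search[Nicholas Ray]",
          "Nicholas Ray was an American film director, screenwriter, and actor.")]
print(render_prompt(examples, SUFFIX,
                    "What profession does Nicholas Ray and Elia Kazan have in common?", steps))
```

The template is filled with `str.format`, so any literal `{` or `}` inside the examples would need to be doubled.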

References

(1) https://arxiv.org/abs/2201.11903

(2) https://arxiv.org/abs/2210.03629

Reprinted from 周末程序猿 (Weekend Programmer); author: 周末程序猿.