AI solves your "TL;DR" problem: how to build a deep abstractive summarization model

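Over the past few decades, we have lived through a series of fundamental changes and challenges related to information. Today, access to information is no longer the bottleneck. The real difficulty is digesting the sheer volume of it. Most of us have felt this firsthand: we have to read more and more just to keep up with work, the news, and social media. To address this challenge, we began studying how AI can help people cope with the flood of information. One promising approach is to use algorithms to automatically summarize long texts.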

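However, training a model that can produce long, coherent, and meaningful summaries remains an open research problem. Generating long text is difficult even for the most advanced deep learning algorithms. To make summarization work, we introduce two separate key improvements: a more contextual word-generation model, and a new way of training summarization models with reinforcement learning (RL).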

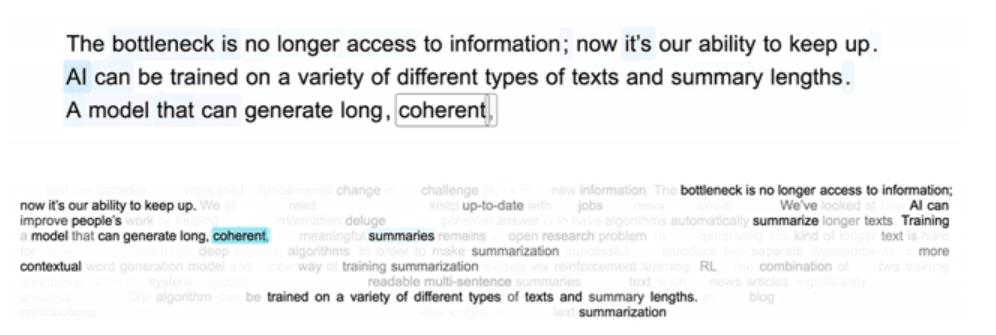

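Combining these two methods lets the overall system condense long texts, such as news articles, into relevant and highly readable multi-sentence summaries, with results much better than previous approaches. Our algorithm can be trained on different types of text and different summary lengths. In this post we present the main breakthroughs of the model and discuss the challenges of summarizing natural language text.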

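Figure 1: How our model generates a multi-sentence summary from a news article. For each generated word, the model attends to specific words of the input and to the words it has already output.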

Extractive vs. abstractive summarization

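Automatic summarization models take one of two approaches: extractive or abstractive. Extractive models perform "copy-and-paste" operations: they select relevant phrases from the input document and concatenate them to form a summary. They are quite robust because they reuse natural-language phrases taken directly from the input, but they lack flexibility because they cannot use new words or connectors. They also sometimes read differently from how a human would phrase things. Abstractive models, on the other hand, generate a summary from the actual "abstracted" content and can use words that do not appear in the input document at all. This gives them the potential to produce more fluent and coherent summaries, but it also makes them much harder to build, because the model must be able to generate coherent phrases and connectors on its own.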

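Although abstractive models are more powerful in theory, they often make mistakes in practice. Typical errors include incoherent, irrelevant, or repeated phrases in the generated summary, and these problems become more pronounced when the model tries to produce longer outputs. Such models have also tended to lack overall coherence, flow, and readability. To tackle these problems, we need an abstractive summarization model that is both more powerful and more coherent.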

To understand our new abstractive model, we first define its basic building blocks and then explain our new training scheme.

Reading and generating text with encoder-decoder models

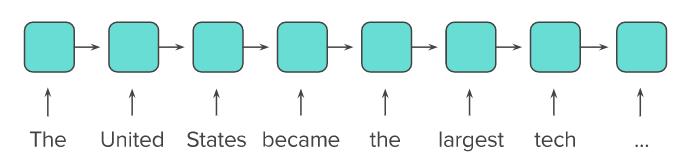

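Recurrent neural networks (RNNs) are deep learning models that can process sequences of variable length (such as text) and compute a useful representation, or hidden state, for each element. They process the elements of the sequence (here, the words) one at a time; for each new input, the network computes a new hidden state as a function of that input and the previous hidden state. As a result, the hidden state computed at each word is a function of all the words read up to that point.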

Figure 2: A recurrent neural network reads an input sentence by applying the same function (green box) at each word.
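To make the recurrence concrete, here is a minimal sketch of an RNN encoder in plain Python. It assumes a simple (Elman-style) cell with a tanh update, toy dimensions, and random weights; the post does not say which recurrent cell the model actually uses. The same `rnn_step` function is applied at every word, so each hidden state depends on the current word and on everything read before it.

```python
import math
import random


def matvec(W, v):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_step(x, h_prev, W_x, W_h, b):
    """One simple RNN step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    pre = [a + c + d for a, c, d in zip(matvec(W_x, x), matvec(W_h, h_prev), b)]
    return [math.tanh(z) for z in pre]


def encode(inputs, W_x, W_h, b):
    """Apply the same step function to every word vector; return all hidden states."""
    h = [0.0] * len(b)  # initial hidden state
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)  # depends on this word and everything read before
        states.append(h)
    return states


# Toy, randomly initialised parameters (hypothetical sizes: 3-dim word vectors, 4-dim state).
rng = random.Random(0)
d_in, d_h = 3, 4
W_x = [[rng.uniform(-0.5, 0.5) for _ in range(d_in)] for _ in range(d_h)]
W_h = [[rng.uniform(-0.5, 0.5) for _ in range(d_h)] for _ in range(d_h)]
b = [0.0] * d_h
sentence = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(5)]  # five "words"
hidden_states = encode(sentence, W_x, W_h, b)
```

Because the same function is reused at every position, the first hidden state depends only on the first word, while changing the first word also changes the final hidden state.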

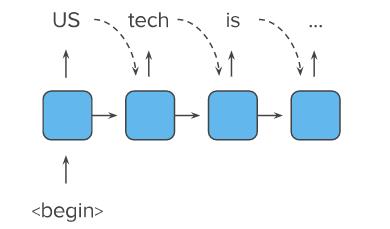

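RNNs can also generate output sequences in a similar way. At each step, the RNN's hidden state is used to generate a new word, which is appended to the final output and also fed back in as the next input.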

Figure 3: An RNN can generate an output sequence, reusing each output word as the input for the next step.
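The generation loop can be sketched the same way. This toy greedy decoder picks the highest-scoring word at each step, appends it to the output, and feeds it back in as the next input. The vocabulary ids, the `<bos>`/`<eos>` conventions, and greedy selection are assumptions of this sketch rather than details from the post.

```python
import math
import random


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_step(x, h_prev, W_x, W_h):
    """Simple RNN step without bias: h_t = tanh(W_x x_t + W_h h_{t-1})."""
    return [math.tanh(a + c) for a, c in zip(matvec(W_x, x), matvec(W_h, h_prev))]


def greedy_generate(h0, embed, W_x, W_h, W_out, bos_id, eos_id, max_len=10):
    """Generate word ids; each chosen word is appended to the output and fed back in."""
    h, word, output = h0, bos_id, []
    for _ in range(max_len):
        h = rnn_step(embed[word], h, W_x, W_h)  # previous output word is the next input
        logits = matvec(W_out, h)  # one score per vocabulary entry
        word = max(range(len(logits)), key=logits.__getitem__)  # greedy choice
        if word == eos_id:
            break
        output.append(word)
    return output


# Hypothetical toy vocabulary of 6 ids (0 = <bos>, 1 = <eos>) with random weights.
rng = random.Random(1)
V, d_e, d_h = 6, 3, 4
embed = [[rng.uniform(-1, 1) for _ in range(d_e)] for _ in range(V)]
W_x = [[rng.uniform(-1, 1) for _ in range(d_e)] for _ in range(d_h)]
W_h = [[rng.uniform(-1, 1) for _ in range(d_h)] for _ in range(d_h)]
W_out = [[rng.uniform(-1, 1) for _ in range(d_h)] for _ in range(V)]
generated = greedy_generate([0.0] * d_h, embed, W_x, W_h, W_out, bos_id=0, eos_id=1)
```

Greedy choice keeps the sketch short; practical systems often search over several candidate continuations instead.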

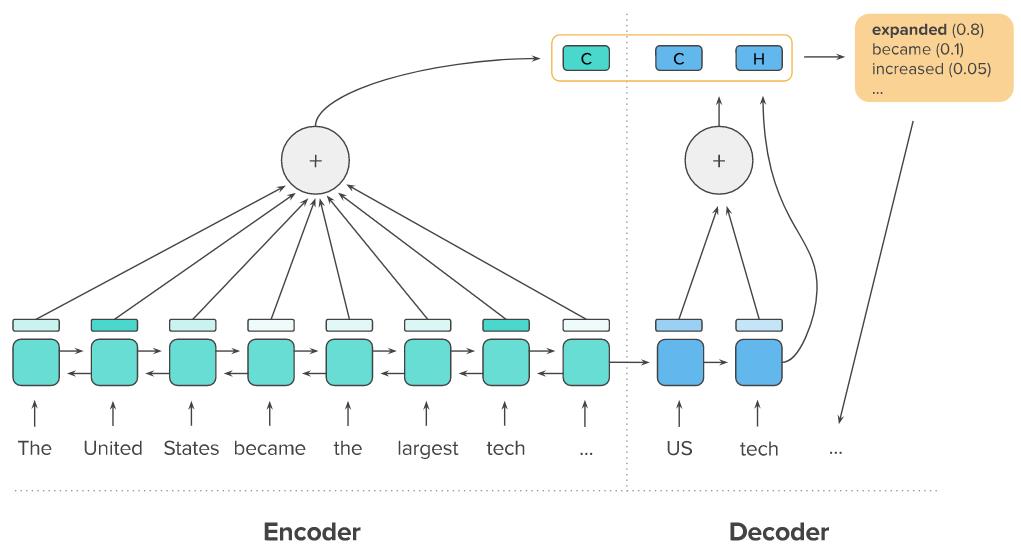

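The input (reading) and output (generating) RNNs can be combined into a joint model in which the final hidden state of the input RNN is used as the initial hidden state of the output RNN. Combined this way, the joint model can read any text and generate different text from it. This framework is called an encoder-decoder RNN (or Seq2Seq), and it is the basis of our summarization model. In addition, we replace the traditional encoder RNN with a bidirectional encoder, which reads the input sequence with two different RNNs: one reads the text from left to right (as shown in Figure 4), and the other from right to left. This helps our model build a better representation of each input word from the context on both sides.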

Figure 4: An encoder-decoder RNN model can be used for sequence-to-sequence tasks in natural language, such as summarization.
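Here is a minimal sketch of the bidirectional encoder described above: one RNN reads left to right, another reads right to left, and the two states for each word are concatenated. How the decoder is initialised from a bidirectional encoder is not specified in the post; this sketch assumes the decoder state has twice the encoder size and is initialised with the final state of each direction concatenated.

```python
import math
import random


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def run_rnn(inputs, W_x, W_h):
    """Unidirectional tanh RNN over `inputs`; returns one hidden state per position."""
    h, states = [0.0] * len(W_h), []
    for x in inputs:
        h = [math.tanh(a + c) for a, c in zip(matvec(W_x, x), matvec(W_h, h))]
        states.append(h)
    return states


def bidirectional_encode(inputs, fwd_params, bwd_params):
    """Return per-word states [forward; backward] and an initial decoder state."""
    fwd = run_rnn(inputs, *fwd_params)               # reads left to right
    bwd = run_rnn(inputs[::-1], *bwd_params)[::-1]   # reads right to left, re-aligned
    states = [f + b for f, b in zip(fwd, bwd)]       # list concatenation = vector concat
    # Assumption: the decoder state has size 2*d, so the final state of each direction
    # (last word for forward, first word for backward) can initialise it directly.
    h0_decoder = fwd[-1] + bwd[0]
    return states, h0_decoder


rng = random.Random(2)
d_in, d = 3, 4


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


fwd_params = (rand_matrix(d, d_in), rand_matrix(d, d))
bwd_params = (rand_matrix(d, d_in), rand_matrix(d, d))
words = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(5)]
states, h0_decoder = bidirectional_encode(words, fwd_params, bwd_params)
```

With this layout, the backward half of the first word's state already reflects the last word of the input, which a left-to-right encoder alone cannot provide.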

A new attention and decoding mechanism

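To make our model's output more coherent, we use a technique called temporal attention, which lets the decoder look back at parts of the input document when it generates a new word. Instead of relying entirely on its own hidden state, the decoder can incorporate contextual information about different parts of the input through an attention function. This attention is then modulated so that the model uses different parts of the input when generating the output text, which increases the information coverage of the summary.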
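The post does not spell out the attention equations. The sketch below follows one formulation of temporal attention as we understand it: a bilinear score between the current decoder state and each encoder state is exponentiated and then divided by the sum of the exponentiated scores that the same input position received at earlier decoding steps, before normalising. Treat the bilinear matrix `W_attn` and this exact normalisation as assumptions.

```python
import math


def temporal_attention(dec_h, enc_states, W_attn, history):
    """One step of temporal attention over the encoder states.

    history: list of exp(score) vectors from earlier decoding steps (mutated in place).
    """
    # Bilinear score e_ti = dec_h^T W_attn h_i for every input position i.
    proj = [sum(dec_h[r] * W_attn[r][c] for r in range(len(dec_h)))
            for c in range(len(W_attn[0]))]
    exp_scores = [math.exp(sum(p * h for p, h in zip(proj, h_i))) for h_i in enc_states]
    if history:
        # Divide by the attention each position already received, so inputs that
        # were heavily attended to in earlier steps are down-weighted now.
        past = [sum(step[i] for step in history) for i in range(len(enc_states))]
        weights = [e / p for e, p in zip(exp_scores, past)]
    else:
        weights = exp_scores  # first decoding step: plain softmax
    total = sum(weights)
    alpha = [w / total for w in weights]
    context = [sum(a * h_i[k] for a, h_i in zip(alpha, enc_states))
               for k in range(len(enc_states[0]))]
    history.append(exp_scores)
    return context, alpha


enc_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
W_attn = [[1.0, 0.0], [0.0, 1.0]]
history = []
_, alpha1 = temporal_attention([3.0, 0.0], enc_states, W_attn, history)
_, alpha2 = temporal_attention([3.0, 0.0], enc_states, W_attn, history)
```

Calling it twice with the same decoder state shows the effect: the first call concentrates on the first input position, while the second spreads attention uniformly, because each position's score is divided by the attention it already received.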

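In addition, to keep the model from repeating itself, we also let it look back at the decoder's earlier hidden states. We define an intra-decoder attention function that attends over the previous hidden states of the decoder RNN. Finally, the decoder combines the context vector from temporal attention with the context vector from intra-decoder attention to generate the next word of the output summary. Figure 5 shows how the two attention functions are combined at a given decoding step.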

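Figure 5: Two context vectors (labeled "C") are computed by attending over the encoder hidden states and over the decoder hidden states. These two context vectors are combined with the current decoder hidden state (labeled "H") to generate a new word (right), which is appended to the output sequence.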
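A minimal sketch of the intra-decoder attention step and of combining the two context vectors with the decoder state, as in Figure 5. The bilinear score `W_d`, the zero context on the first step, and the single linear-plus-softmax output layer over the concatenation [H; C_enc; C_dec] are assumptions for illustration; the post only states that the three vectors are combined.

```python
import math


def softmax(xs):
    """Numerically stable softmax (subtracts the max before exponentiating)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def intra_decoder_attention(dec_h, prev_dec_states, W_d):
    """Attend over earlier decoder hidden states; zero context on the first step.

    W_d is assumed to be a square (d x d) bilinear weight matrix.
    """
    if not prev_dec_states:
        return [0.0] * len(dec_h)
    proj = [sum(dec_h[r] * W_d[r][c] for r in range(len(dec_h))) for c in range(len(dec_h))]
    scores = [sum(p * h for p, h in zip(proj, h_prev)) for h_prev in prev_dec_states]
    alpha = softmax(scores)
    return [sum(a * h[k] for a, h in zip(alpha, prev_dec_states)) for k in range(len(dec_h))]


def next_word_distribution(dec_h, enc_context, dec_context, W_out, b_out):
    """Combine H, the encoder context and the decoder context into p(next word)."""
    features = dec_h + enc_context + dec_context  # concatenation [H; C_enc; C_dec]
    logits = [sum(w * f for w, f in zip(row, features)) + bias
              for row, bias in zip(W_out, b_out)]
    return softmax(logits)
```

Attending over its own earlier states gives the decoder direct access to what it has already written, which is the information it needs to avoid repeating a phrase.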

How do we train the model? Supervised learning vs. reinforcement learning

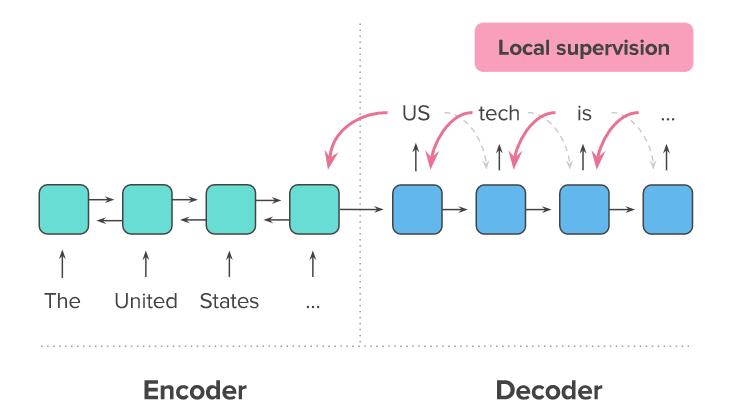

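The most common way to train this model on real-world data such as news articles is the teacher forcing algorithm: the model generates a summary while using a reference summary, and it receives a word-by-word error signal (or "local supervision", as shown in Figure 6) each time it generates a new word.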

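Figure 6: Training the model with supervised learning. Each generated word receives a training signal computed by comparing it with the ground-truth summary word at the same position.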
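Teacher forcing can be sketched as follows. The decoder is fed the reference word at each step rather than its own prediction, and every position contributes one negative log-likelihood term. `step_fn` is a hypothetical stand-in for a decoder step that returns a new state and a probability distribution over the vocabulary.

```python
import math


def teacher_forcing_loss(step_fn, h0, bos_id, reference_ids):
    """Sum of per-word negative log-likelihoods of the reference summary.

    step_fn(h, prev_word) -> (new_h, probs) is a hypothetical decoder step.
    """
    h, prev, losses = h0, bos_id, []
    for y_true in reference_ids:
        h, probs = step_fn(h, prev)
        losses.append(-math.log(probs[y_true]))  # local supervision at this position
        prev = y_true  # feed the reference word, not the model's own prediction
    return sum(losses), losses


# Toy step function: uniform distribution over 4 words; records the inputs it receives.
seen_inputs = []


def uniform_step(h, prev_word):
    seen_inputs.append(prev_word)
    return h, [0.25, 0.25, 0.25, 0.25]


total, per_word = teacher_forcing_loss(uniform_step, None, bos_id=0, reference_ids=[2, 3, 1])
```

The recorded inputs are the start token followed by the reference words, which is exactly what "forcing" means here.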

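This method can train any RNN-based sequence generation model, and the results are quite satisfactory. For our particular task, however, a summary does not need to match a reference sequence word for word in order to be correct. Given the same news article, two human editors may write very different summaries, using different styles, wording, or sentence order, and both can still be good summaries. The problem with teacher forcing is that after the first few words are generated, training is misguided: it insists on sticking to the official summary and cannot adapt to a beginning that is correct but phrased differently.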
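A toy illustration of why position-by-position comparison is so strict. The two sentences are hypothetical, and a hard exact-match rate is a simplified proxy (teacher forcing actually scores probabilities, not exact matches), but it shows the mismatch: a reordered paraphrase contains every reference word yet matches none of them position by position.

```python
from collections import Counter


def positional_match_rate(candidate, reference):
    """Fraction of reference positions where the candidate has the same word."""
    return sum(c == r for c, r in zip(candidate, reference)) / len(reference)


def unigram_recall(candidate, reference):
    """Fraction of reference words that also appear in the candidate (clipped counts)."""
    overlap = sum((Counter(candidate) & Counter(reference)).values())
    return overlap / len(reference)


reference = "police arrested the suspect on monday".split()
paraphrase = "on monday police arrested the suspect".split()
print(positional_match_rate(paraphrase, reference))  # 0.0
print(unigram_recall(paraphrase, reference))  # 1.0
```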

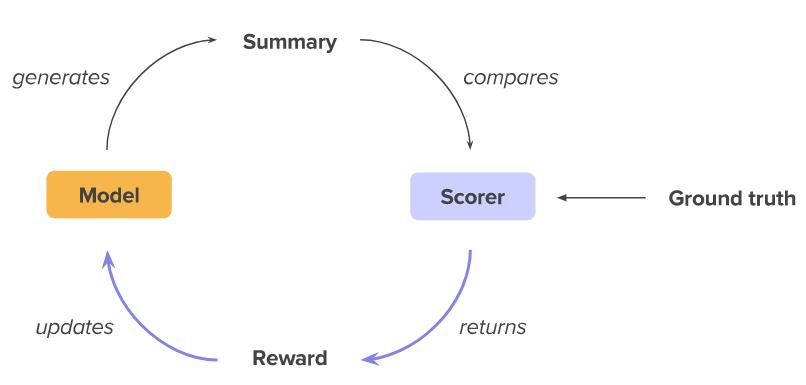

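With this in mind, we need something better than teacher forcing alone. We turn to an entirely different type of training called reinforcement learning (RL). First, the RL algorithm makes the model generate a summary on its own, and then uses an external scorer to compare the generated summary with the ground-truth reference. The score tells the model how good its summary is. If the score is high, the model updates itself so that the choices it made in this summary become more likely in the future. If the score is low, the model is penalized and adjusts its generation process to avoid producing similar summaries. This approach is very good at increasing a score that evaluates the sequence as a whole, rather than judging the summary word by word.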

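Figure 7: With reinforcement learning, the model does not receive local supervision for each word; instead, it is guided by how its whole output compares with the reference.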
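The RL loop described above can be sketched with a REINFORCE-style policy-gradient update. To fit in a few lines, the "summary" here is a single choice among three candidates with hypothetical reward values, and the baseline is the reward of the model's own greedy choice (a self-critical baseline; this exact variant is our assumption). In a real summarizer the sampled output is a whole word sequence and its log-probability is summed over words.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(logits, reward_fn, rng, lr=0.5):
    """One REINFORCE update using the greedy choice's reward as the baseline.

    Toy setting: the whole "summary" is one choice among len(logits) candidates.
    """
    probs = softmax(logits)
    sampled = rng.choices(range(len(logits)), weights=probs)[0]  # model produces its own output
    greedy = max(range(len(logits)), key=logits.__getitem__)
    advantage = reward_fn(sampled) - reward_fn(greedy)  # external score relative to baseline
    # d log p(sampled) / d logits = onehot(sampled) - probs, so a positive advantage
    # raises the sampled choice's probability and a negative one lowers it.
    return [logit + lr * advantage * ((1.0 if i == sampled else 0.0) - p)
            for i, (logit, p) in enumerate(zip(logits, probs))]


rewards = [0.1, 0.9, 0.3]  # hypothetical scores (e.g. ROUGE) of three candidate outputs
rng = random.Random(0)
logits = [0.0, 0.0, 0.0]
for _ in range(300):
    logits = policy_gradient_step(logits, rewards.__getitem__, rng)
final_probs = softmax(logits)
```

Over the updates, probability mass moves to the highest-scoring candidate, even though no per-word target was ever provided.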

How do we evaluate summary quality?

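So what exactly is this scorer, and how does it judge the quality of a summary? Since asking humans to evaluate millions of summaries by hand is practically impossible, we use a metric called ROUGE (Recall-Oriented Understudy for Gisting Evaluation). ROUGE evaluates a generated summary by comparing its sub-phrases with the sub-phrases of the reference, without requiring the two to match exactly. The different variants of ROUGE (ROUGE-1, ROUGE-2, and ROUGE-L) all work on the same principle but use different subsequences: ROUGE-1 and ROUGE-2 count overlapping single words and word pairs, while ROUGE-L uses the longest common subsequence.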
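A simplified sketch of ROUGE-N and ROUGE-L as F1 scores. It uses plain whitespace tokenization with no stemming or other preprocessing, so its numbers will not exactly match the official ROUGE toolkit; it only illustrates how sub-phrase overlap is counted.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f1(overlap, cand_total, ref_total):
    """Harmonic mean of precision and recall (0.0 when nothing overlaps)."""
    if overlap == 0:
        return 0.0
    precision, recall = overlap / cand_total, overlap / ref_total
    return 2 * precision * recall / (precision + recall)


def rouge_n(candidate, reference, n):
    """ROUGE-N F1: clipped n-gram overlap between candidate and reference."""
    cand, ref = ngrams(candidate.split(), n), ngrams(reference.split(), n)
    overlap = sum((cand & ref).values())
    return f1(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a, b):
    """Length of the longest common subsequence (dynamic programming)."""
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            dp[i][j] = dp[i - 1][j - 1] + 1 if x == y else max(dp[i - 1][j], dp[i][j - 1])
    return dp[-1][-1]


def rouge_l(candidate, reference):
    """ROUGE-L F1: based on the longest in-order (not necessarily contiguous) match."""
    c, r = candidate.split(), reference.split()
    return f1(lcs_length(c, r), len(c), len(r))


cand = "the cat sat on the mat"
ref = "the cat is on the mat"
print(round(rouge_n(cand, ref, 1), 3))  # 0.833
print(round(rouge_n(cand, ref, 2), 3))  # 0.6
print(round(rouge_l(cand, ref), 3))     # 0.833
```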

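Although ROUGE scores correlate well with human judgment overall, the summaries with the highest ROUGE scores are not necessarily the most readable or natural. When we train the model with RL alone, maximizing ROUGE becomes the sole objective, which creates problems of its own. In fact, when we inspected the summaries with the highest ROUGE scores, we found that some of them were almost completely unreadable.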
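A toy example of how an overlap metric can reward unreadable text. The sentences are hypothetical: scrambling the reference's words keeps a perfect unigram score. A bigram score catches this particular case, but no n-gram count fully measures readability.

```python
from collections import Counter


def rouge_n_f1(candidate, reference, n):
    """Simplified ROUGE-N F1 with whitespace tokenization."""
    def grams(tokens):
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand, ref = grams(candidate.split()), grams(reference.split())
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    p, r = overlap / sum(cand.values()), overlap / sum(ref.values())
    return 2 * p * r / (p + r)


reference = "the minister resigned after the vote"
scrambled = "vote the after resigned minister the"  # same words, unreadable order
print(rouge_n_f1(scrambled, reference, 1))  # 1.0
print(rouge_n_f1(scrambled, reference, 2))  # 0.0
```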

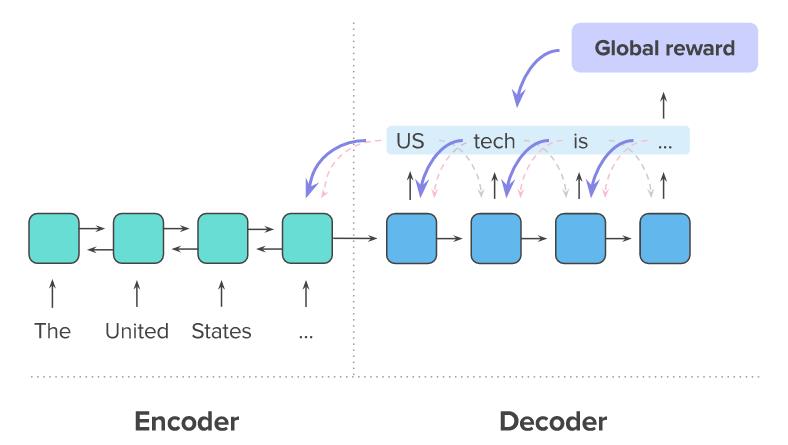

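To get the best of both worlds, we train our model with both teacher forcing and reinforcement learning, combining word-level supervision with guidance on the whole summary to make the output as coherent and readable as possible. Specifically, we found that ROUGE-optimized RL significantly improves recall (that is, making sure all the important information is actually included in the summary), while word-level supervised learning improves language fluency, making the output more coherent and readable.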

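Figure 8: Combining supervised learning (red arrows) and reinforcement learning (purple arrows): our model uses both local and global feedback to optimize readability and the overall ROUGE score.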
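One way to express this combined training is a weighted sum of the two losses. The sketch assumes a single weight `gamma` in [0, 1] and a self-critical policy-gradient term for the RL part; the post does not give the exact weighting, so `gamma` is a hypothetical hyperparameter here.

```python
import math


def mixed_loss(ref_log_probs, sample_log_probs, sample_reward, baseline_reward, gamma):
    """gamma * L_rl + (1 - gamma) * L_ml, with gamma in [0, 1]."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    l_ml = -sum(ref_log_probs)  # teacher forcing: word-level supervision
    l_rl = -(sample_reward - baseline_reward) * sum(sample_log_probs)  # whole-summary reward
    return gamma * l_rl + (1.0 - gamma) * l_ml


ref_lp = [math.log(0.5), math.log(0.25)]    # log-probs of the reference words
sample_lp = [math.log(0.4), math.log(0.5)]  # log-probs of a sampled summary
loss = mixed_loss(ref_lp, sample_lp, sample_reward=0.42, baseline_reward=0.40, gamma=0.5)
```

Setting `gamma` to 0 recovers pure teacher forcing and setting it to 1 recovers pure RL, so the weight directly trades fluency against the optimized score.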

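Until recently, the highest ROUGE-1 score for abstractive summarization on the CNN/Daily Mail dataset was 35.46. With our combined supervised learning and RL training, our intra-decoder attention RNN model raises that score to 39.87, and to 41.16 when trained with RL alone. Figure 9 shows the scores of other existing models alongside ours. Although our RL-only model achieves higher ROUGE scores, our supervised+RL model produces more readable summaries because its output is more relevant. Note that See et al. used a different data format, so their results cannot be compared directly with ours or with the other models; they are included for reference only.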

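| Model | ROUGE-1 | ROUGE-L |
|---|---|---|
| Previous abstractive state of the art | 35.46 | 32.65 |
| | 39.2 | 35.5 |
| | 39.6 | 35.3 |
| See et al.* | 39.53* | 36.38* |
| Our model (RL only) | 41.16 | 39.08 |
| Our model (supervised + RL) | 39.87 | 36.90 |

\* Different data format; not directly comparable.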

Figure 9: Summarization results on the CNN/Daily Mail dataset, comparing our model with several existing extractive and abstractive approaches.

Example outputs

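What does such a large improvement look like in actual summaries? Below are a few multi-sentence summaries generated from splits of the dataset. Our model and its simpler baselines, trained on the CNN/Daily Mail dataset, produce the following results. As you can see, the summaries have improved significantly, but they are still far from perfect.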

| Article | Summary (reference) | Summary (our model) |
|---|---|---|
| Google Wallet says it has changed its policy when storing users' funds as they will now be federally-insured (file photo) For those who use Google Wallet, their money just became safer with federal-level insurance. Google confirmed to Yahoo Finance in a statement that its current policy changed - meaning the company will store the balances for users of the mobile transfer service (similar to PayPal and Venmo) in multiple federally-insured banking institutions. This is good news for people who place large amounts of money in their Wallet Balance because the Federal Deposit Insurance Corporation insures funds for banking institutions up to $250,000. Currently, Google's user agreement says funds are not protected by the FDIC. However, a Google spokesperson told Yahoo Finance that the current policy has changed. (...) | Google spokesperson confirmed current policy changed meaning funds will be protected by the federal deposit insurance corporation. As a non-banking institution, Google Wallet, along with competitors PayPal and Venmo, is not legally required to be federally insured. With the new change to its policy, funds in wallet balance are protected if anything were to happen to the company like bankruptcy. | Google confirmed to Yahoo Finance in a statement that its current policy changed. The company will store the balances for users of the mobile transfer service (similar to PayPal and Venmo) in multiple federally-insured banking institutions. Google's user agreement says funds are not protected by the federal deposit insurance corporation. |
| Talk about a chain reaction! This is the moment a billiards player performs a complex trick shot by setting up a domino train to pot four balls. Video footage shows a white ball being rolled down a positioned cue. It then bounces off one side of the red-clothed table and hits the first in a long line of dominoes. One by one the small counters fall down, tapping balls into various pockets as they go. First a yellow, then a blue, then a red. Finally, the last domino gently hits an orange ball, causing it to roll down another positioned cue lying on the table. The orb then knocks a green ball into the center pocket. In less than 30 seconds the stunt comes to a close. (...) | The clip was uploaded by youtube user honda4ridered. In another upload the skilled billiards player shows viewers how to pocket four balls in a single shot-and for those who miss it there's a slow motion version. | Video footage shows a white ball being rolled down a jumper. It then bounces off one side of the red-clothed table and hits the first in a long line of dominoes. One by one the small counters fall down, tapping balls into pockets as they go-first a yellow. It comes to a close. The clip was uploaded by youtube user honda4ridered. |
| Kelly Osbourne didn't always want to grow up to be like her famous mom - but in a letter published in the new book A Letter to My Mom, the TV personality admitted that she is now proud to be Sharon Osbourne's daughter. For author Lisa Erspamer's third collection of tributes, celebrities such as Melissa Rivers, Shania Twain, will.i.am, Christy Turlington Burns, and Kristin Chenoweth all composed messages of love and gratitude to the women who raised them. And the heartwarming epistolary book, which was published last week, has arrived just in time for Mother's Day on May 10. 'Like all teenage girls I had this ridiculous fear of growing up and becoming just like you,' Kelly Osbourne wrote in her letter, republished on Yahoo Parenting. 'I was so ignorant and adamant about creating my "own" identity.' Scroll down for video Mini-me: In Lisa Erspamer's new book A Letter to My Mom, Kelly Osbourne (R) wrote a letter to her mother Sharon (L) saying that she's happy to have grown up to be just like her (...) | Author Lisa Erspamer invited celebrities and a number of other people to write heartfelt notes to their mothers for her new book a letter to my mom. Stars such as Melissa Rivers, will.i.am, and Christy Turlington participated in the moving project. | Kelly didn't always want to grow up to be like her famous mom. Lisa Erspamer's third collection of tributes, celebrities such as Melissa rivers, Shania Twain, will.i.am, Christy Turlington, and Kristin Chenoweth all composed messages of love and gratitude to the women who raised them. Kelly wrote a letter to her mom before Joan's death last year. She has arrived just in time for Mother's Day on May 10. |

Figure 10: More example summaries generated by our model, compared with summaries written by humans for the same articles.

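To illustrate how much our contributions improve text summarization, Figure 11 shows the output of our model with intra-attention and RL training removed.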

Article

Tony Blair has said he does not want to retire until he is 91 – as he unveiled plans to set up a ‘cadre’ of ex-leaders to advise governments around the world. The defiant 61-year-old former Prime Minister said he had ‘decades’ still in him and joked that he would ‘turn to drink’ if he ever stepped down from his multitude of global roles. He told Newsweek magazine that his latest ambition was to recruit former heads of government to go round the world to advise presidents and prime ministers on how to run their countries. In an interview with the magazine Newsweek Mr Blair said he did not want to retire until he was 91 years old Mr Blair said his latest ambition is to recruit former heads of government to advise presidents and prime ministers on how to run their countries Mr Blair said he himself had been ‘mentored’ by US president Bill Clinton when he took office in 1997. And he said he wanted to build up his organisations, such as his Faith Foundation, so they are ‘capable of changing global policy’. Last night, Tory MPs expressed horror at the prospect of Mr Blair remaining in public life for another 30 years. Andrew Bridgen said: ‘We all know weak Ed Miliband’s called on Tony to give his flailing campaign a boost, but the attention’s clearly gone to his head.’ (...)

Summary (human-written reference)

The former Prime Minister claimed he has 'decades' of work left in him. Joked he would 'turn to drink' if he ever stepped down from global roles. Wants to recruit former government heads to advise current leaders. He was 'mentored' by US president Bill Clinton when he started in 1997.

Summary (our model)

Blair said he did not want to retire until he was 91 years old. 61-year-old former prime minister said he would 'turn to drink' if he ever stepped down from his own. He said he wanted to build up his charity to advise presidents and prime ministers on how to run their countries. Mr Blair says he is to recruit former heads of government to go round the world to advise ministers. He says he wants to emulate ex-Israeli president Shimon Peres.

Summary (without intra-attention and RL training)

61-year-old former prime minister said he did not want to retire until he was 91 years old. He said he wanted to build up his organisations, such as his Faith Foundation. He said he wanted to emulate ex-Israeli president Shimon Peres. Mr Blair said he wanted to emulate ex-Israeli President Shimon Peres. He said he wanted to be seeing someone when he took office in 1997. Mr Blair said he wanted to be seeing someone when he took office in 1997. Mr Blair said he wanted to

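Figure 11: An example summary generated by our model, compared with one generated after removing our improvements. New words that do not appear in the original document are marked in green; repeated phrases in the summary are marked in red.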
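The two kinds of highlighting in Figure 11 can be approximated with a few lines of code. This is a rough sketch using naive lowercase whitespace tokenization and repeated trigrams; the post does not say how the highlighting was produced, and the example sentences are hypothetical.

```python
from collections import Counter

PUNCT = ".,;:!?'\"‘’“”"


def tokenize(text):
    """Naive lowercase whitespace tokenizer that strips surrounding punctuation."""
    return [t for t in (w.strip(PUNCT).lower() for w in text.split()) if t]


def novel_words(summary, article):
    """Summary tokens that never occur in the article (the "green" words)."""
    vocab = set(tokenize(article))
    return [w for w in tokenize(summary) if w not in vocab]


def repeated_ngrams(summary, n=3):
    """n-grams that occur more than once in the summary (the "red" phrases)."""
    toks = tokenize(summary)
    counts = Counter(tuple(toks[i:i + n]) for i in range(len(toks) - n + 1))
    return {gram: c for gram, c in counts.items() if c > 1}


article = "He wanted to win the race."
summary = "He said he wanted to win. He said he wanted to win."
print(novel_words(summary, article))  # ['said', 'said']
```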

Conclusion

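Our model significantly improves the state of the art in multi-sentence summarization, outperforming existing abstractive models and extractive baselines. We believe our contributions, the intra-decoder attention module and the combined training objective, could also improve other sequence generation tasks, especially those with long outputs.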

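Our work also touches on the limits of automatic evaluation metrics such as ROUGE, and the results show that better metrics are needed to evaluate and optimize summarization models. An ideal metric would agree with human judgment on aspects such as summary coherence and readability. When we use such metrics to improve summarization models, the quality of their summaries should improve further.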

Citation

If you would like to cite this blog post in a publication, please cite:

Romain Paulus, Caiming Xiong, and Richard Socher. 2017.

Acknowledgements

Special thanks to Melvin Gruesbeck for the images and statistics in this post.
