Learn Caffeine's W-TinyLFU in One Article: A Source Code Analysis

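This article is reprinted from the WeChat official account 「肌肉碼農」, written by 肌肉碼農. To reprint it, please contact the 肌肉碼農 official account.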

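Caffeine stores all of its data in a ConcurrentHashMap. So what approach and data structures does it use for expiration and eviction? Expire-after-write uses writeOrderDeque, which is simple enough to need no further explanation. The access-based side is much more complex and uses the W-TinyLFU structure and algorithm.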

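Plenty of articles online describe the W-TinyLFU structure, so this one concentrates on the source code. In short, it uses three deques: accessOrderEdenDeque, accessOrderProbationDeque, and accessOrderProtectedDeque. Deques are used because they make LRU ordering easy to support.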

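accessOrderEdenDeque belongs to the eden region and caches 1% of the data; the remaining 99% is cached in the main region.

accessOrderProbationDeque belongs to the main region and caches 20% of main's data. This is cold data that is about to be evicted.

accessOrderProtectedDeque belongs to the main region and caches 80% of main's data. This is hot data and forms the primary storage area of the whole cache.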
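To make the proportions concrete, here is a minimal sketch that splits a maximum capacity by the percentages quoted above. The class name `RegionSizes` and its fields are hypothetical and are not part of Caffeine; integer arithmetic is used only to keep the example exact.

```java
/** Hypothetical helper: splits a maximum capacity using the ratios quoted in the text. */
final class RegionSizes {
    final long eden;
    final long probation;
    final long mainProtected;

    RegionSizes(long maximum) {
        long main = maximum * 99 / 100;         // 99% of the cache is the main region
        this.eden = maximum - main;             // the remaining ~1% is the eden (window) region
        this.mainProtected = main * 80 / 100;   // 80% of main is reserved for hot entries
        this.probation = main - mainProtected;  // the remaining ~20% of main holds cold entries
    }

    public static void main(String[] args) {
        RegionSizes sizes = new RegionSizes(1_000);
        System.out.println("eden=" + sizes.eden
            + " probation=" + sizes.probation
            + " protected=" + sizes.mainProtected);
    }
}
```

For a maximum of 1,000 entries this works out to 10 in eden, 198 in probation, and 792 in protected.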

Let's first look at the entry point of eviction:

- void evictEntries() {

- if (!evicts()) {

- return;

- }

- // First evict from the eden region

- int candidates = evictFromEden();

- // Entries evicted from eden move into the main region, which is then evicted in turn

- evictFromMain(candidates);

- }

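accessOrderEdenDeque corresponds to the W (window) in W-TinyLFU. It holds references to the most recently written entries and is evicted in LRU order. Its main purpose is to cope with bursts of traffic. Entries evicted from it move into accessOrderProbationDeque. The code is as follows: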

- int evictFromEden() {

- int candidates = 0;

- Node<K, V> node = accessOrderEdenDeque().peek();

- while (edenWeightedSize() > edenMaximum()) {

- // The pending operations will adjust the size to reflect the correct weight

- if (node == null) {

- break;

- }

- Node<K, V> next = node.getNextInAccessOrder();

- if (node.getWeight() != 0) {

- node.makeMainProbation();

- // First remove it from the eden region

- accessOrderEdenDeque().remove(node);

- // Then add the removed entry to the main region's probation queue

- accessOrderProbationDeque().add(node);

- candidates++;

- lazySetEdenWeightedSize(edenWeightedSize() - node.getPolicyWeight());

- }

- node = next;

- }

- return candidates;

- }
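The overflow step can be sketched with plain `ArrayDeque`s. This is a simplified illustration, not Caffeine's code: it assumes every entry has weight 1, and the class `EdenOverflowSketch` with its fields is hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Simplified model of the eden-to-probation overflow; assumes every entry has weight 1. */
final class EdenOverflowSketch {
    final Deque<String> eden = new ArrayDeque<>();
    final Deque<String> probation = new ArrayDeque<>();
    final int edenMaximum;

    EdenOverflowSketch(int edenMaximum) {
        this.edenMaximum = edenMaximum;
    }

    /** New entries enter at the tail (most recently used end) of eden. */
    void write(String key) {
        eden.addLast(key);
    }

    /** Moves the least recently used eden entries to the tail of probation. */
    int evictFromEden() {
        int candidates = 0;
        while (eden.size() > edenMaximum) {
            String lru = eden.pollFirst(); // head of the deque is the LRU entry
            probation.addLast(lru);        // candidates queue up at the tail of probation
            candidates++;
        }
        return candidates;
    }
}
```

The returned count matters because the next step only treats that many entries at the tail of probation as candidates.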

Once the entries are in the probation queue, execution continues with the following code:

- void evictFromMain(int candidates) {

- int victimQueue = PROBATION;

- Node<K, V> victim = accessOrderProbationDeque().peekFirst();

- Node<K, V> candidate = accessOrderProbationDeque().peekLast();

- while (weightedSize() > maximum()) {

- // Stop trying to evict candidates and always prefer the victim

- if (candidates == 0) {

- candidate = null;

- }

- // Try evicting from the protected and eden queues

- if ((candidate == null) && (victim == null)) {

- if (victimQueue == PROBATION) {

- victim = accessOrderProtectedDeque().peekFirst();

- victimQueue = PROTECTED;

- continue;

- } else if (victimQueue == PROTECTED) {

- victim = accessOrderEdenDeque().peekFirst();

- victimQueue = EDEN;

- continue;

- }

- // The pending operations will adjust the size to reflect the correct weight

- break;

- }

- // Skip over entries with zero weight

- if ((victim != null) && (victim.getPolicyWeight() == 0)) {

- victim = victim.getNextInAccessOrder();

- continue;

- } else if ((candidate != null) && (candidate.getPolicyWeight() == 0)) {

- candidate = candidate.getPreviousInAccessOrder();

- candidates--;

- continue;

- }

- // Evict immediately if only one of the entries is present

- if (victim == null) {

- candidates--;

- Node<K, V> evict = candidate;

- candidate = candidate.getPreviousInAccessOrder();

- evictEntry(evict, RemovalCause.SIZE, 0L);

- continue;

- } else if (candidate == null) {

- Node<K, V> evict = victim;

- victim = victim.getNextInAccessOrder();

- evictEntry(evict, RemovalCause.SIZE, 0L);

- continue;

- }

- // Evict immediately if an entry was collected

- K victimKey = victim.getKey();

- K candidateKey = candidate.getKey();

- if (victimKey == null) {

- Node<K, V> evict = victim;

- victim = victim.getNextInAccessOrder();

- evictEntry(evict, RemovalCause.COLLECTED, 0L);

- continue;

- } else if (candidateKey == null) {

- candidates--;

- Node<K, V> evict = candidate;

- candidate = candidate.getPreviousInAccessOrder();

- evictEntry(evict, RemovalCause.COLLECTED, 0L);

- continue;

- }

- // Evict immediately if the candidate's weight exceeds the maximum

- if (candidate.getPolicyWeight() > maximum()) {

- candidates--;

- Node<K, V> evict = candidate;

- candidate = candidate.getPreviousInAccessOrder();

- evictEntry(evict, RemovalCause.SIZE, 0L);

- continue;

- }

- // Evict the entry with the lowest frequency

- candidates--;

- // The core of the algorithm: take the nodes at the head and tail of probation, compare their frequencies, and remove the less frequent one

- if (admit(candidateKey, victimKey)) {

- Node<K, V> evict = victim;

- victim = victim.getNextInAccessOrder();

- evictEntry(evict, RemovalCause.SIZE, 0L);

- candidate = candidate.getPreviousInAccessOrder();

- } else {

- Node<K, V> evict = candidate;

- candidate = candidate.getPreviousInAccessOrder();

- evictEntry(evict, RemovalCause.SIZE, 0L);

- }

- }

- }

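The logic above takes one node from each end of the probation queue and compares their frequencies; the one with the lower frequency is removed. The tail element is an entry that the previous step evicted from eden. Putting the two steps together: the "candidate" that LRU evicted from the eden queue competes on frequency against the "victim" that LRU selects from the probation queue, and the loser is actually removed from the cache. Here is the comparison logic, admit: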

- boolean admit(K candidateKey, K victimKey) {

- int victimFreq = frequencySketch().frequency(victimKey);

- int candidateFreq = frequencySketch().frequency(candidateKey);

- // If the candidate's frequency is higher, evict the victim

- if (candidateFreq > victimFreq) {

- return true;

- // Otherwise, if the candidate's frequency is at most 5, evict the candidate

- } else if (candidateFreq <= 5) {

- // The maximum frequency is 15 and halved to 7 after a reset to age the history. An attack

- // exploits that a hot candidate is rejected in favor of a hot victim. The threshold of a warm

- // candidate reduces the number of random acceptances to minimize the impact on the hit rate.

- return false;

- }

- // Otherwise decide randomly: admit the candidate with probability 1/128

- int random = ThreadLocalRandom.current().nextInt();

- return ((random & 127) == 0);

- }

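The frequencies of the candidate and the victim are read from frequencySketch. If the candidate's frequency is higher, the victim is evicted. Otherwise, if the candidate's frequency is at most 5, the candidate is evicted. If neither condition applies, the outcome is random: the candidate is admitted (and the victim evicted) with probability 1/128, and the candidate is evicted the rest of the time.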
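The three branches are easier to check when the inputs are explicit. The sketch below restates the decision with the two frequencies and the random number passed in as parameters; that signature is an assumption made for testability and differs from the real method, which reads frequencies from the sketch and draws from `ThreadLocalRandom`.

```java
/** Restatement of the admission decision with all inputs made explicit (hypothetical signature). */
final class AdmitSketch {
    /** Returns true if the candidate should stay and the victim should be evicted. */
    static boolean admit(int candidateFreq, int victimFreq, int random) {
        if (candidateFreq > victimFreq) {
            return true;   // the candidate is strictly more popular
        } else if (candidateFreq <= 5) {
            return false;  // a cold candidate never wins a tie or a loss
        }
        // A warm candidate that did not win outright is admitted 1 time in 128
        return (random & 127) == 0;
    }
}
```

For example, `admit(6, 3, 1)` admits the candidate, `admit(5, 5, 0)` rejects it, and `admit(6, 6, r)` depends only on the low seven bits of `r`.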

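Did you notice that protectedDeque seems to play almost no part in this whole process? So how does it serve as the main storage area that holds most of the data?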

- // Triggered from the onAccess method

- void reorderProbation(Node<K, V> node) {

- if (!accessOrderProbationDeque().contains(node)) {

- // Ignore stale accesses for an entry that is no longer present

- return;

- } else if (node.getPolicyWeight() > mainProtectedMaximum()) {

- return;

- }

- long mainProtectedWeightedSize = mainProtectedWeightedSize() + node.getPolicyWeight();

- // First remove it from probation

- accessOrderProbationDeque().remove(node);

- // Then add it to protected

- accessOrderProtectedDeque().add(node);

- node.makeMainProtected();

- long mainProtectedMaximum = mainProtectedMaximum();

- // Demote entries from protected while it exceeds its maximum

- while (mainProtectedWeightedSize > mainProtectedMaximum) {

- Node<K, V> demoted = accessOrderProtectedDeque().pollFirst();

- if (demoted == null) {

- break;

- }

- demoted.makeMainProbation();

- // Add the demoted entry to probation

- accessOrderProbationDeque().add(demoted);

- mainProtectedWeightedSize -= demoted.getPolicyWeight();

- }

- lazySetMainProtectedWeightedSize(mainProtectedWeightedSize);

- }

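When an entry is accessed while it sits in probation, it moves to protected. If that pushes protected over its maximum, entries are demoted from protected back into probation in LRU order.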
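The promotion and demotion can be modeled in a few lines. This is a simplified sketch that assumes unit weights; the class `SegmentedLruSketch` is hypothetical, and moving an already protected entry to the tail on access is ordinary LRU behavior that I am assuming here rather than quoting from the source above.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Simplified model of probation/protected movement on access; assumes unit weights. */
final class SegmentedLruSketch {
    final Deque<String> probation = new ArrayDeque<>();
    final Deque<String> mainProtected = new ArrayDeque<>();
    final int protectedMaximum;

    SegmentedLruSketch(int protectedMaximum) {
        this.protectedMaximum = protectedMaximum;
    }

    void onAccess(String key) {
        if (mainProtected.remove(key)) {
            mainProtected.addLast(key);  // already hot: just refresh its LRU position
            return;
        }
        if (!probation.remove(key)) {
            return;                      // not in the main region: nothing to reorder
        }
        mainProtected.addLast(key);      // promote the accessed entry
        while (mainProtected.size() > protectedMaximum) {
            // Demote the least recently used protected entries back to probation
            probation.addLast(mainProtected.pollFirst());
        }
    }
}
```

With a protected maximum of 2, accessing three probation entries in a row promotes all three and demotes the first one again.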

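With that, the whole data flow is clear. New entries always enter eden. LRU evicts them into probation, where they compete on access frequency with the entry that LRU selects from probation. The winner stays in probation and the loser is evicted outright. When an entry in probation is accessed, it moves to protected. When other entries are accessed later, it may be demoted by LRU from protected back into probation.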

TinyLFU

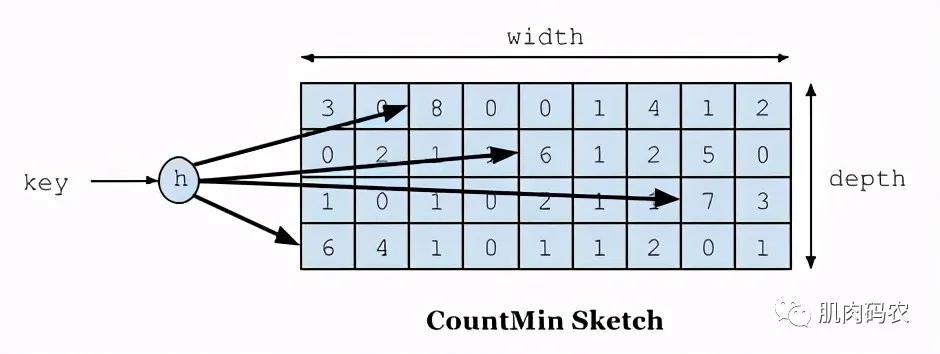

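A traditional LFU usually records each key's frequency in key-value form. The advantage is that the data structure is very simple and can be shared with the cache's own structure: adding one field to record the frequency is enough. The obvious drawback is that this frequency field takes up a lot of space. What if the frequencies were stored in a compressed form instead? That would certainly save space, but it raises another question: how do you get a given key's frequency back out of the compressed data?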

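TinyLFU's answer resembles a bitmap: hash the key to obtain a position, then use that position to look up the frequency. But a bitmap holds only 0 or 1, while a frequency can clearly grow much larger, so how is that handled? A bitmap also needs a very large amount of space reserved up front, so how is that solved?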

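TinyLFU allocates a long array sized according to the configured maximum number of entries. Each key's frequency is then stored in four 4-bit counters, located by four hashes into the array (a 4-bit counter cannot exceed 15). Reading a frequency takes the minimum of the four counters.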

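Caffeine treats a frequency of 15 as already very high, that is, hot data, so 4 bits per counter are enough. A long is 8 bytes, or 64 bits, so it holds 16 counters. The low two bits of the hash, shifted left by two, select a starting slot of 0, 4, 8, or 12, and the i-th of the four hashes uses slot start + i. Across all keys this reaches every one of the 16 slots in a long, while any single key touches four of them.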
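The slot arithmetic can be checked in isolation. The sketch below mirrors the expressions `(hash & 3) << 2` and `j << 2` from the source; the class and method names are hypothetical.

```java
import java.util.TreeSet;

/** Shows which 4-bit slots of a long are reachable with the start/offset arithmetic. */
final class CounterSlotSketch {
    /** Slot (0..15) used by the i-th hash function (i in 0..3) for a given hash. */
    static int slot(int hash, int i) {
        int start = (hash & 3) << 2; // 0, 4, 8 or 12
        return start + i;
    }

    /** Bit offset of a slot inside the long; each counter is 4 bits wide. */
    static int bitOffset(int slot) {
        return slot << 2;
    }

    /** Collects every slot reachable over all values of the low two hash bits. */
    static TreeSet<Integer> reachableSlots() {
        TreeSet<Integer> slots = new TreeSet<>();
        for (int hash = 0; hash < 4; hash++) {
            for (int i = 0; i < 4; i++) {
                slots.add(slot(hash, i));
            }
        }
        return slots;
    }
}
```

`reachableSlots()` contains all sixteen values from 0 to 15, and the highest slot starts at bit 60, so the sixteen counters exactly fill the 64 bits.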

- public void increment(@Nonnull E e) {

- if (isNotInitialized()) {

- return;

- }

- int hash = spread(e.hashCode());

- int start = (hash & 3) << 2;

- // Loop unrolling improves throughput by 5m ops/s

- int index0 = indexOf(hash, 0); // indexOf is also a hash function, with its result bounded by tableMask

- int index1 = indexOf(hash, 1);

- int index2 = indexOf(hash, 2);

- int index3 = indexOf(hash, 3);

- boolean added = incrementAt(index0, start);

- added |= incrementAt(index1, start + 1);

- added |= incrementAt(index2, start + 2);

- added |= incrementAt(index3, start + 3);

- // Once the number of recorded increments reaches sampleSize, reset the counters

- if (added && (++size == sampleSize)) {

- reset();

- }

- }

Add 1 to the 4-bit counter at the given position:

- boolean incrementAt(int i, int j) {

- int offset = j << 2;

- long mask = (0xfL << offset);

- // Once the counter has reached 15, it no longer increases

- if ((table[i] & mask) != mask) {

- table[i] += (1L << offset);

- return true;

- }

- return false;

- }

Reading a value also goes through the four hashes and then takes the minimum:

- public int frequency(@Nonnull E e) {

- if (isNotInitialized()) {

- return 0;

- }

- int hash = spread(e.hashCode());

- int start = (hash & 3) << 2;

- int frequency = Integer.MAX_VALUE;

- // Four hashes

- for (int i = 0; i < 4; i++) {

- int index = indexOf(hash, i);

- // Read the 4-bit counter as an int

- int count = (int) ((table[index] >>> ((start + i) << 2)) & 0xfL);

- // Keep the minimum

- frequency = Math.min(frequency, count);

- }

- return frequency;

- }
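To see increment and frequency working together, here is a small self-contained sketch in the same spirit. It is an illustration under stated assumptions, not Caffeine's class: the seed constants and the body of `indexOf` are my own stand-ins, and the `spread` step, the initialization check, and the periodic reset are left out.

```java
/** Minimal 4-bit count-min sketch; seeds and indexOf are illustrative assumptions. */
final class MiniFrequencySketch {
    // Hypothetical seeds: arbitrary odd 64-bit constants chosen for this example
    private static final long[] SEEDS = {
        0x9E3779B97F4A7C15L, 0xC2B2AE3D27D4EB4FL, 0x165667B19E3779F9L, 0xD6E8FEB86659FD93L};

    private final long[] table;
    private final int tableMask;

    MiniFrequencySketch(int tableSize) {
        if (Integer.bitCount(tableSize) != 1) {
            throw new IllegalArgumentException("tableSize must be a power of two");
        }
        this.table = new long[tableSize];
        this.tableMask = tableSize - 1;
    }

    /** Maps the hash to a table index for the i-th hash function. */
    private int indexOf(int hash, int i) {
        long h = SEEDS[i] * hash;
        h += h >>> 32;
        return ((int) h) & tableMask;
    }

    void increment(Object key) {
        int hash = key.hashCode();
        int start = (hash & 3) << 2;
        for (int i = 0; i < 4; i++) {
            int offset = (start + i) << 2;
            long mask = 0xfL << offset;
            int index = indexOf(hash, i);
            if ((table[index] & mask) != mask) { // saturate at 15
                table[index] += (1L << offset);
            }
        }
    }

    int frequency(Object key) {
        int hash = key.hashCode();
        int start = (hash & 3) << 2;
        int frequency = Integer.MAX_VALUE;
        for (int i = 0; i < 4; i++) {
            int count = (int) ((table[indexOf(hash, i)] >>> ((start + i) << 2)) & 0xfL);
            frequency = Math.min(frequency, count);
        }
        return frequency;
    }
}
```

Because collisions can only add to a counter, the minimum of the four is the estimate least inflated by other keys. With a single key in the sketch, the estimate equals the true count until it saturates at 15.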

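When the number of recorded increments reaches the sample size, every counter is halved. Some cold entries drop to 0 in the process, which reduces the effect of hash collisions: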

- void reset() {

- int count = 0;

- for (int i = 0; i < table.length; i++) {

- count += Long.bitCount(table[i] & ONE_MASK);

- table[i] = (table[i] >>> 1) & RESET_MASK;

- }

- size = (size >>> 1) - (count >>> 2);

- }
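The halving works on all sixteen counters of a long at once. The sketch below shows the bit trick on a single word. The mask values are not shown in the excerpt above, so treat them as my assumption of what the constants hold: `RESET_MASK` clears the bit that a right shift carries in from the neighboring counter, and `ONE_MASK` selects the lowest bit of each counter.

```java
/** Halving sixteen packed 4-bit counters in one step; mask values are assumed, see lead-in. */
final class ResetSketch {
    static final long RESET_MASK = 0x7777777777777777L; // keeps the low 3 bits of every counter
    static final long ONE_MASK = 0x1111111111111111L;   // lowest bit of every counter

    /** Halves every counter in the word, rounding down. */
    static long halve(long word) {
        return (word >>> 1) & RESET_MASK;
    }

    /** Number of odd counters in the word, i.e. those that lose a half when halved. */
    static int oddCounters(long word) {
        return Long.bitCount(word & ONE_MASK);
    }
}
```

For the word `0xF6L`, which holds the counters 15 and 6, `halve` returns `0x73L`, that is, 7 and 3. This matches the source comment that the maximum of 15 is halved to 7 after a reset. The odd-counter count is what `reset()` accumulates in `count` to correct `size` for the rounding.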