A Few Hands-On Python Scraping Exercises for Beginners

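Many beginners finish learning the Python basics and then don't know how to keep improving their coding. At that point, practicing web scraping on a few friendly websites can be a good next step. Advanced scraping draws on many topics, so using it as an entry point both consolidates your Python and may lead you to other areas later, such as distributed systems and multithreading. Why not give it a try?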

Below are a few very simple beginner scraping projects. Hopefully none of them will scare you off!

Douban

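As one of China's most popular websites, Douban is also quite friendly to scrapers: it has very few anti-scraping measures, which makes it an ideal place to practice. One caveat: it tends to reject requests that do not carry a browser-like User-Agent header.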

Scraping comments

We'll use the following page as an example:

https://movie.douban.com/subject/3878007/

The comments span many pages, so we need to handle pagination. Inspecting the page shows that the comment URLs look like this:

https://movie.douban.com/subject/3878007/comments?start=0&limit=20&sort=new_score&status=P&percent_type=l
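The page URL is just a base path plus query parameters, so it can also be assembled from a dict with the standard library's `urlencode` instead of string formatting. A minimal sketch, assuming the parameter names and values visible in the URL above; `comment_page_urls` is a hypothetical helper name:

```python
from urllib.parse import urlencode

BASE = 'https://movie.douban.com/subject/3878007/comments'


def comment_page_urls(percent_type, pages, page_size=20):
    """Yield one comments URL per page; start grows by page_size on each page."""
    for page in range(pages):
        params = {
            'start': page * page_size,
            'limit': page_size,
            'sort': 'new_score',
            'status': 'P',
            'percent_type': percent_type,  # h / m / l
        }
        yield BASE + '?' + urlencode(params)


for url in comment_page_urls('l', 2):
    print(url)
```

The first URL printed is identical to the one above; the second differs only in `start=20`.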

Each page advances `start` by 20, so the code can be written as follows:

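```python
import requests
from bs4 import BeautifulSoup

# Douban tends to reject the default python-requests User-Agent, so send a browser-like one
HEADERS = {'User-Agent': 'Mozilla/5.0'}


def get_praise():
    praise_list = []
    for i in range(0, 2000, 20):
        url = 'https://movie.douban.com/subject/3878007/comments?start=%s&limit=20&sort=new_score&status=P&percent_type=h' % i
        req = requests.get(url, headers=HEADERS).text
        content = BeautifulSoup(req, "html.parser")
        check_point = content.title.get_text(strip=True) if content.title else ''
        # Douban serves a page titled 没有访问权限 ("no access") once you page past what it allows
        if check_point != "没有访问权限":
            comment = content.find_all("span", attrs={"class": "short"})
            for k in comment:
                praise_list.append(k.get_text(strip=True))
        else:
            break
    return praise_list
```

`range` with a step of 20 produces the `start` values, and a page whose title is “没有访问权限” ("no access permission") marks the end of pagination.

Next, let's look at the comment ratings.

Douban divides comments into three rating levels. We fetch each level separately to make later analysis easier.

```python
def get_ordinary():
    ordinary_list = []
    for i in range(0, 2000, 20):
        url = 'https://movie.douban.com/subject/3878007/comments?start=%s&limit=20&sort=new_score&status=P&percent_type=m' % i
        req = requests.get(url, headers=HEADERS).text
        content = BeautifulSoup(req, "html.parser")
        check_point = content.title.get_text(strip=True) if content.title else ''
        if check_point != "没有访问权限":
            comment = content.find_all("span", attrs={"class": "short"})
            for k in comment:
                ordinary_list.append(k.get_text(strip=True))
        else:
            break
    return ordinary_list


def get_lowest():
    lowest_list = []
    for i in range(0, 2000, 20):
        url = 'https://movie.douban.com/subject/3878007/comments?start=%s&limit=20&sort=new_score&status=P&percent_type=l' % i
        req = requests.get(url, headers=HEADERS).text
        content = BeautifulSoup(req, "html.parser")
        check_point = content.title.get_text(strip=True) if content.title else ''
        if check_point != "没有访问权限":
            comment = content.find_all("span", attrs={"class": "short"})
            for k in comment:
                lowest_list.append(k.get_text(strip=True))
        else:
            break
    return lowest_list
```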

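As you can see, the three functions differ mainly in the request URL: its `percent_type` parameter (`h`, `m`, `l`) selects Douban's positive, neutral and negative comments respectively.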
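Since only `percent_type` changes, the three functions can be collapsed into one. A minimal sketch, not the author's code: `get_comments`, `parse_page` and the `fetch` parameter are hypothetical names, the User-Agent value is an assumption, and the download function is passed in so the paging logic can be tried on canned HTML:

```python
import requests
from bs4 import BeautifulSoup

HEADERS = {'User-Agent': 'Mozilla/5.0'}  # assumption: any browser-like UA string
COMMENTS_URL = ('https://movie.douban.com/subject/{subject}/comments'
                '?start={start}&limit=20&sort=new_score&status=P&percent_type={ptype}')
NO_ACCESS_TITLE = '没有访问权限'


def fetch_html(url):
    """Download one page (hypothetical default fetcher)."""
    return requests.get(url, headers=HEADERS, timeout=10).text


def parse_page(html):
    """Return the short comments on a page, or None if it is the no-access page."""
    soup = BeautifulSoup(html, 'html.parser')
    if soup.title and soup.title.get_text(strip=True) == NO_ACCESS_TITLE:
        return None
    return [s.get_text(strip=True) for s in soup.find_all('span', attrs={'class': 'short'})]


def get_comments(percent_type, subject='3878007', max_start=2000, fetch=fetch_html):
    """Collect comments for one level: 'h' (positive), 'm' (neutral) or 'l' (negative)."""
    comments = []
    for start in range(0, max_start, 20):
        url = COMMENTS_URL.format(subject=subject, start=start, ptype=percent_type)
        page = parse_page(fetch(url))
        if not page:  # None (no access) or an empty page: stop paging
            break
        comments.extend(page)
    return comments
```

With this in place, `get_comments('h')`, `get_comments('m')` and `get_comments('l')` play the roles of `get_praise`, `get_ordinary` and `get_lowest`. Stopping on an empty page as well as on the no-access title guards against looping through 100 blank pages if the title check ever stops matching.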

Finally, save the collected data to files.

```python
import pandas as pd

if __name__ == "__main__":
    print("Get Praise Comment")
    praise_data = get_praise()
    print("Get Ordinary Comment")
    ordinary_data = get_ordinary()
    print("Get Lowest Comment")
    lowest_data = get_lowest()

    # One CSV per rating level; the index column is kept, so the comments end up in column 1
    print("Save Praise Comment")
    praise_pd = pd.DataFrame(columns=['praise_comment'], data=praise_data)
    praise_pd.to_csv('praise.csv', encoding='utf-8')
    print("Save Ordinary Comment")
    ordinary_pd = pd.DataFrame(columns=['ordinary_comment'], data=ordinary_data)
    ordinary_pd.to_csv('ordinary.csv', encoding='utf-8')
    print("Save Lowest Comment")
    lowest_pd = pd.DataFrame(columns=['lowest_comment'], data=lowest_data)
    lowest_pd.to_csv('lowest.csv', encoding='utf-8')
    print("THE END!!!")
```

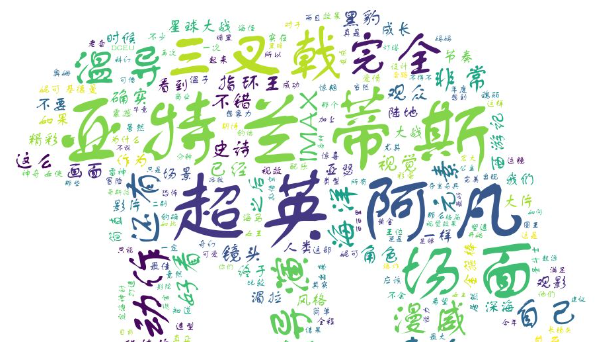

Making word clouds

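Here we use jieba for word segmentation and the wordcloud library to draw the clouds, again one per rating level. Filler words such as “一部” (roughly "a [film]"), “一個” ("a/one"), “故事” ("story") and some other common nouns are removed through a stopword list. None of this is difficult, so here is the code.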

```python
import jieba
import numpy as np
import pandas as pd
from PIL import Image
from wordcloud import WordCloud

# A font with Chinese glyphs is required; this path is for Windows, change it on other systems
font = r'C:\Windows\Fonts\FZSTK.TTF'

# stopwords.txt holds one word per line (e.g. 一部, 一個, 故事)
with open('stopwords.txt', encoding='utf-8') as f:
    STOPWORDS = {line.strip() for line in f if line.strip()}


def make_wordcloud(csv_file, mask_file, out_file):
    # Column 1 holds the comments (column 0 is the index written by to_csv)
    df = pd.read_csv(csv_file, usecols=[1])
    text = ' '.join(df.iloc[:, 0].dropna().astype(str))
    # Segment the Chinese text and join the words with spaces, which is what WordCloud expects
    words = ' '.join(jieba.cut(text, cut_all=False))
    img_array = np.array(Image.open(mask_file))  # the image's shape becomes the cloud's outline
    wc = WordCloud(width=2000, height=1800, background_color='white',
                   font_path=font, mask=img_array, stopwords=STOPWORDS)
    wc.generate(words)
    wc.to_file(out_file)


def wordcloud_praise():
    make_wordcloud('praise.csv', 'haiwang8.jpg', 'praise.png')


def wordcloud_ordinary():
    make_wordcloud('ordinary.csv', 'haiwang8.jpg', 'ordinary.png')


def wordcloud_lowest():
    make_wordcloud('lowest.csv', 'haiwang7.jpg', 'lowest.png')


if __name__ == "__main__":
    print("Save praise wordcloud")
    wordcloud_praise()
    print("Save ordinary wordcloud")
    wordcloud_ordinary()
    print("Save lowest wordcloud")
    wordcloud_lowest()
    print("THE END!!!")
```
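To check which words will dominate a cloud, and whether the stopword list needs more entries, it can help to count the segmented tokens yourself. A small sketch, assuming the tokens come from `jieba.cut` as in the word cloud code; `top_words` is a hypothetical helper, and dropping one-character tokens is an extra filter of our own choosing:

```python
from collections import Counter


def top_words(tokens, stopwords, n=10):
    """Return the n most frequent tokens, ignoring stopwords and 1-character tokens."""
    cleaned = (tok.strip() for tok in tokens)
    counts = Counter(tok for tok in cleaned if len(tok) > 1 and tok not in stopwords)
    return counts.most_common(n)


tokens = ['一部', '好看', '的', '故事', '好看', ' ', '画面']
print(top_words(tokens, stopwords={'一部', '故事'}))
```

This prints `[('好看', 2), ('画面', 1)]`. With real data, pass `list(jieba.cut(text))` and the `STOPWORDS` set; any filler word that shows up near the top is a candidate for `stopwords.txt`.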

Scraping posters

Scraping posters works in much the same way. Here is the code:

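```python
import requests

HEADERS = {'User-Agent': 'Mozilla/5.0'}


def deal_pic(url, name):
    # Download one poster and save it as <name>.jpg
    pic = requests.get(url, headers=HEADERS)
    with open(name + '.jpg', 'wb') as f:
        f.write(pic.content)


def get_poster():
    for i in range(0, 10000, 20):
        # Douban's tag names are simplified Chinese: 电影 = movie, 爱情 = romance
        params = {'sort': 'U', 'range': '0,10', 'tags': '电影', 'start': i, 'genres': '爱情'}
        req_dict = requests.get('https://movie.douban.com/j/new_search_subjects',
                                params=params, headers=HEADERS).json()
        if not req_dict.get('data'):  # no more results
            break
        for j in req_dict['data']:
            name = j['title']
            poster_url = j['cover']
            print(name, poster_url)
            deal_pic(poster_url, name)


if __name__ == "__main__":
    get_poster()
```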

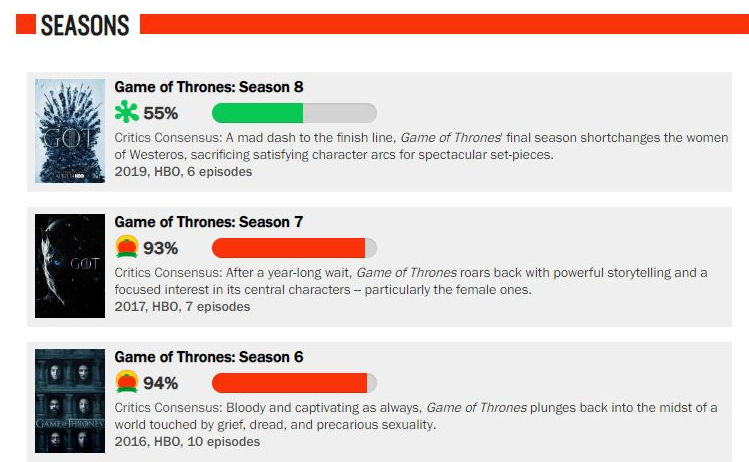
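`deal_pic` uses the movie title directly as the file name. A title containing characters such as `/` or `:` would either point into a non-existent directory or be rejected on Windows, so a small sanitizing step can help. A sketch; `safe_filename` is a hypothetical helper and the `_` replacement and 100-character limit are arbitrary choices:

```python
import re

# Characters that are illegal in Windows file names; '/' is also the Unix path separator
_ILLEGAL = re.compile(r'[\\/:*?"<>|]')


def safe_filename(name, max_len=100):
    """Replace illegal characters with '_', then trim whitespace and length."""
    cleaned = _ILLEGAL.sub('_', name).strip()[:max_len]
    return cleaned or 'untitled'


print(safe_filename('A/B: C?'))  # A_B_ C_
```

In `get_poster`, the call would then become `deal_pic(poster_url, safe_filename(name))`.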

Rotten Tomatoes

This is an English-language film and TV review site that is also well suited to beginners. The address is:

https://www.rottentomatoes.com/tv/game_of_thrones

We'll use Game of Thrones as the example.

```python
import requests
from bs4 import BeautifulSoup

baseurl = 'https://www.rottentomatoes.com'

# The tags and class names below match the page layout at the time of writing;
# the site may have changed its markup since, so check them in your browser first.


def get_total_season_content():
    url = 'https://www.rottentomatoes.com/tv/game_of_thrones'
    response = requests.get(url).text
    content = BeautifulSoup(response, "html.parser")
    season_list = []
    # One block per season: link, Tomatometer score and critics consensus
    div_list = content.find_all('div', attrs={'class': 'bottom_divider media seasonItem '})
    for i in div_list:
        suburl = i.find('a')['href']
        season = i.find('a').text
        rotten = i.find('span', attrs={'class': 'meter-value'}).text
        consensus = i.find('div', attrs={'class': 'consensus'}).text.strip()
        season_list.append([season, suburl, rotten, consensus])
    return season_list


def get_season_content(url):
    # e.g. url = 'https://www.rottentomatoes.com/tv/game_of_thrones/s08#audience_reviews'
    response = requests.get(url).text
    content = BeautifulSoup(response, "html.parser")
    episode_list = []
    div_list = content.find_all('div', attrs={'class': 'bottom_divider'})
    for i in div_list:
        suburl = i.find('a')['href']
        fresh = i.find('span', attrs={'class': 'tMeterScore'}).text.strip()
        episode_list.append([suburl, fresh])
    return episode_list[:5]


# Season 8 episode paths and scores, usable as input to get_episode_detail
mylist = [['/tv/game_of_thrones/s08/e01', '92%'],
          ['/tv/game_of_thrones/s08/e02', '88%'],
          ['/tv/game_of_thrones/s08/e03', '74%'],
          ['/tv/game_of_thrones/s08/e04', '58%'],
          ['/tv/game_of_thrones/s08/e05', '48%'],
          ['/tv/game_of_thrones/s08/e06', '49%']]


def get_episode_detail(episode):
    e_list = []
    for i in episode:
        url = baseurl + i[0]
        response = requests.get(url).text
        content = BeautifulSoup(response, "html.parser")
        consensus_p = content.find('p', attrs={'class': 'critic_consensus superPageFontColor'})
        # Collapse runs of spaces and newlines into single spaces
        critic_consensus = ' '.join(consensus_p.text.split())
        # Top-critic quotes are laid out in a left and a right column
        review_list_left = content.find_all('div', attrs={'class': 'quote_bubble top_critic pull-left cl '})
        review_list_right = content.find_all('div', attrs={'class': 'quote_bubble top_critic pull-right '})
        review_list = []
        for bubble in review_list_left + review_list_right:
            review_list.append(bubble.find('div', attrs={'class': 'media-body'}).find('p').text.strip())
        e_list.append([critic_consensus, review_list])
    return e_list


if __name__ == '__main__':
    total_season_content = get_total_season_content()
    print(total_season_content)
    print(get_episode_detail(mylist))
```
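The scores in `mylist` are strings such as `'92%'`; to plot or compare them they first need to become numbers. A small sketch using the season 8 values from `mylist`; `parse_scores` and `largest_drop` are hypothetical helpers:

```python
def parse_scores(episodes):
    """Convert [['/tv/.../s08/e01', '92%'], ...] to [('e01', 92), ...]."""
    return [(path.rstrip('/').split('/')[-1], int(score.strip().rstrip('%')))
            for path, score in episodes]


def largest_drop(scores):
    """Return (from_episode, to_episode, drop) for the biggest fall between
    consecutive episodes; needs at least two scores."""
    pairs = zip(scores, scores[1:])
    (a, sa), (b, sb) = max(pairs, key=lambda p: p[0][1] - p[1][1])
    return a, b, sa - sb


season8 = [['/tv/game_of_thrones/s08/e01', '92%'],
           ['/tv/game_of_thrones/s08/e02', '88%'],
           ['/tv/game_of_thrones/s08/e03', '74%'],
           ['/tv/game_of_thrones/s08/e04', '58%'],
           ['/tv/game_of_thrones/s08/e05', '48%'],
           ['/tv/game_of_thrones/s08/e06', '49%']]
scores = parse_scores(season8)
print(scores)
print(largest_drop(scores))  # ('e03', 'e04', 16)
```

The numeric `(episode, score)` pairs are also the shape most charting libraries expect for a simple line chart.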

Honor of Kings hero database

The site I chose is:

http://db.18183.com/

```python
import re
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

base_url = 'http://db.18183.com/'

HEADER = ('hero name,survivability,attack damage,skill effect,difficulty,max HP,max mana,'
          'physical attack,magic attack,physical defense,physical damage reduction,'
          'magic defense,magic damage reduction,movement speed,physical armor penetration,'
          'magic armor penetration,attack speed bonus,critical chance,critical effect,'
          'physical lifesteal,magic lifesteal,cooldown reduction,attack range,tenacity,'
          'HP regen,mana regen')


def get_hero_url():
    print('start to get hero urls')
    url_list = []
    res = requests.get(base_url + 'wzry').text
    content = BeautifulSoup(res, "html.parser")
    # The hero list is a <ul class="mod-iconlist"> of links to each hero's page
    ul = content.find('ul', attrs={'class': "mod-iconlist"})
    for a in ul.find_all('a'):
        url_list.append(a['href'])
    print('finish get hero urls')
    return url_list


def get_details(url):
    print('start to get details')
    detail_list = []
    for i in url:
        # urljoin handles both 'wzry/...' and '/wzry/...' style links
        res = requests.get(urljoin(base_url, i)).text
        content = BeautifulSoup(res, "html.parser")
        name = content.find('div', attrs={'class': 'name-box'}).h1.text
        # Four rating bars; each level is the part after '-' in the span's second class name
        attr_star = content.find('div', attrs={'class': 'attr-list'}).find_all('span')
        survivability, attack_damage, skill_effect, getting_started = (
            span['class'][1].split('-')[1] for span in attr_star[:4])
        # Each <p> in the data panel reads "label: value"; accept ASCII or full-width colons
        details = content.find('div', attrs={'class': 'otherinfo-datapanel'})
        attr_list = [re.split('[:：]', p.text, maxsplit=1)[1].strip() for p in details.find_all('p')]
        detail_list.append([name, survivability, attack_damage,
                            skill_effect, getting_started, attr_list])
    print('finish get details')
    return detail_list


def save_tocsv(details):
    print('start save to csv')
    with open('all_hero_init_attr_new.csv', 'w', encoding='gb18030') as f:
        f.write(HEADER + '\n')
        for i in details:
            # 5 rating fields + the first 21 data-panel attributes = 26 columns
            row = i[:5] + i[5][:21]
            if len(row) != 26:  # hero page missing some attributes: skip it
                continue
            f.write(','.join(row) + '\n')
    print('finish save to csv')


if __name__ == "__main__":
    hero_url = get_hero_url()
    details = get_details(hero_url)
    save_tocsv(details)
```
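Writing rows by joining values with commas breaks as soon as a value itself contains a comma. Python's standard `csv` module handles the quoting. A sketch of the same save step using `csv.writer`, where `save_details` and `flatten` are hypothetical names and rows with an unexpected number of columns are skipped and counted rather than silently swallowed:

```python
import csv
import io


def flatten(detail):
    """[name, survivability, damage, skill, difficulty, [attr, ...]] -> one flat row."""
    *ratings, attrs = detail
    return [*ratings, *attrs]


def save_details(details, fileobj, header):
    """Write the header and rows with csv.writer; return how many rows were skipped."""
    writer = csv.writer(fileobj)
    writer.writerow(header)
    skipped = 0
    for detail in details:
        row = flatten(detail)
        if len(row) != len(header):  # page was missing (or had extra) attributes
            skipped += 1
            continue
        writer.writerow(row)
    return skipped


buf = io.StringIO()
header = ['name', 'survivability', 'max HP']
n = save_details([['A', '3', ['3000']], ['B, Jr.', '2', ['2800']], ['C', '1', []]], buf, header)
print(n)               # 1
print(buf.getvalue())  # the "B, Jr." value is written in quotes
```

For the real file, open it with `newline=''` (as the `csv` documentation recommends) and the same `gb18030` encoding, e.g. `with open('all_hero_init_attr_new.csv', 'w', newline='', encoding='gb18030') as f: save_details(details, f, header)`.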

That's it for these three sites today. I'll share more good practice sites and hands-on code in later posts!