Should the Training of AI Models More Powerful Than GPT-4 Be Paused?

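The release of GPT-4 has set off another worldwide wave of enthusiasm for AI applications. At the same time, a number of prominent computer scientists and technology industry figures have voiced concern about the rapid development of artificial intelligence, which they say poses unpredictable potential risks to human society.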

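On March 29, Beijing time, Tesla CEO Elon Musk, Turing Award winner Yoshua Bengio, Apple co-founder Steve Wozniak, Yuval Noah Harari, author of Sapiens: A Brief History of Humankind, and others jointly signed an open letter calling on all AI labs worldwide to immediately pause the training of AI systems more powerful than GPT-4 for at least six months, so that humanity can manage the risks effectively. If commercial AI research organizations cannot pause their development quickly, the letter says, governments should step in with effective regulatory measures and impose a moratorium.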

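The open letter sets out in detail the reasons for pausing AI model training: AI technology has become powerful enough to compete with humans in certain respects and will bring profound change to human society. Everyone must therefore consider the potential risks of AI: fake news and propaganda flooding information channels, large numbers of jobs being automated away, and artificial intelligence perhaps one day becoming smarter and more powerful than humans, costing us control of human civilization. Powerful AI systems should continue to be developed and trained only once we are confident that their effects will be positive and their risks manageable.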

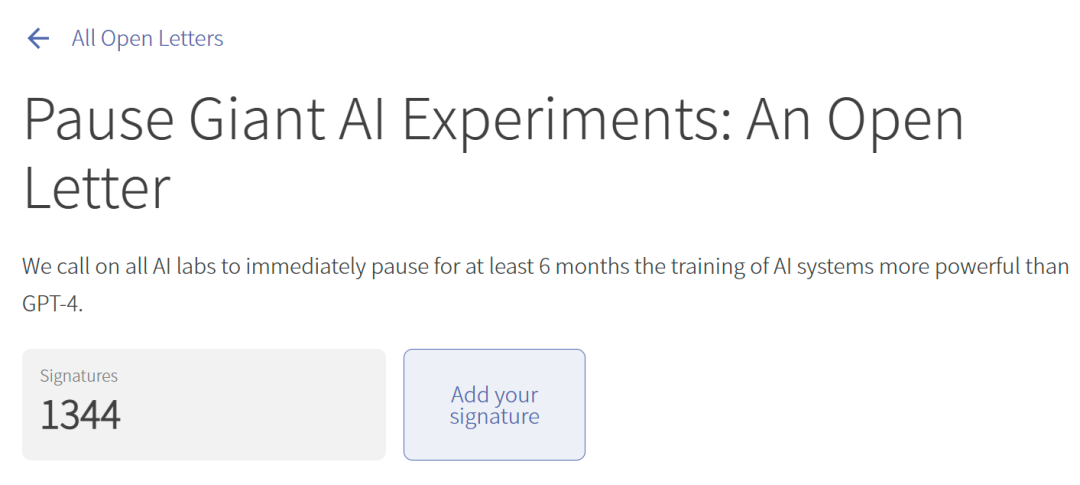

As of 11 a.m. today, the open letter had collected 1,344 signatures.

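Are the major technology companies really pushing AI forward too fast, to the point of threatening human survival? In fact, OpenAI co-founder Sam Altman has himself expressed concern about ChatGPT's explosive popularity: he has said he is a little "scared" of how AI could affect the labor market, elections, and the spread of disinformation, that AI needs to be regulated with the joint participation of government and society, and that user feedback and rule-making are very important for curbing AI's negative effects.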

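If even the makers of AI systems cannot fully understand, predict, and effectively control their risks, and the corresponding safety planning and management have not kept pace, then current AI research and development may indeed have fallen into an "out-of-control" race.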

Appendix: original text of the open letter

AI systems with human-competitive intelligence can pose profound risks to society and humanity, as shown by extensive research and acknowledged by top AI labs. As stated in the widely-endorsed Asilomar AI Principles, Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources. Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.

Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable. This confidence must be well justified and increase with the magnitude of a system's potential effects. OpenAI's recent statement regarding artificial general intelligence, states that "At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models." We agree. That point is now.

Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.

AI labs and independent experts should use this pause to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts. These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt. This does not mean a pause on AI development in general, merely a stepping back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities.

AI research and development should be refocused on making today's powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal. In parallel, AI developers must work with policymakers to dramatically accelerate development of robust AI governance systems. These should at a minimum include: new and capable regulatory authorities dedicated to AI; oversight and tracking of highly capable AI systems and large pools of computational capability; provenance and watermarking systems to help distinguish real from synthetic and to track model leaks; a robust auditing and certification ecosystem; liability for AI-caused harm; robust public funding for technical AI safety research; and well-resourced institutions for coping with the dramatic economic and political disruptions (especially to democracy) that AI will cause.

Humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an "AI summer" in which we reap the rewards, engineer these systems for the clear benefit of all, and give society a chance to adapt. Society has hit pause on other technologies with potentially catastrophic effects on society. We can do so here. Let's enjoy a long AI summer, not rush unprepared into a fall.