Retrieval-Augmented Fine-Tuning (RAFT): A New Method for Improving Domain-Specific RAG

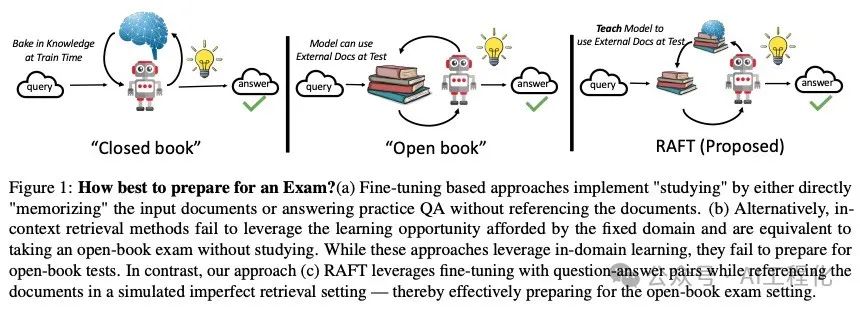

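Generally speaking, to apply a large language model to a specific industry, the model has to know that industry's knowledge. There are three common ways to inject such knowledge. The first is to feed the model domain documents during pre-training and extend its vocabulary with domain-specific terms. The other two, used far more often, are fine-tuning and RAG. Fine-tuning trains domain knowledge into the model through question-answer pairs, while RAG adds domain knowledge as context in the prompt so the model can draw on it when answering domain questions. A common analogy: fine-tuning is a closed-book exam, where the prompt carries no supporting context (zero-shot), and RAG is an open-book exam.

Although RAG beats fine-tuning on cost, content freshness, and flexibility, it suffers from two problems: the supplied context may not contain the answer at all, or it may contain noisy, distracting information. Either way, the model can fail to answer correctly. Possible solutions to the first problem were discussed in the earlier article on RAG 2.0 ("RAG 2.0 is here: can it be a boon for production deployment?").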
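To make the closed-book versus open-book contrast concrete, here is a minimal Python sketch of how the two kinds of prompt differ. The function name `build_prompt` and the template text are hypothetical; real RAG systems use their own templates, and the passages would come from a retriever rather than a hard-coded list.

```python
from typing import Optional, Sequence


def build_prompt(question: str, contexts: Optional[Sequence[str]] = None) -> str:
    """Build a closed-book prompt (no contexts) or an open-book RAG prompt.

    The template wording is an illustrative assumption, not a standard format.
    """
    if not contexts:
        # Closed-book / zero-shot: the model can only rely on knowledge in its weights.
        return f"Question: {question}\nAnswer:"
    # Open-book / RAG: retrieved passages are numbered and placed before the question.
    context_block = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(contexts, start=1))
    return f"Context:\n{context_block}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    q = "The Oberoi family is part of a hotel company that has a head office in what city?"
    print(build_prompt(q))  # closed book
    print(build_prompt(q, ["The Oberoi Group is a hotel company with its head office in Delhi."]))  # open book
```

Both failure modes described above live entirely in the `contexts` argument: it may lack the answer, or it may be padded with irrelevant passages.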

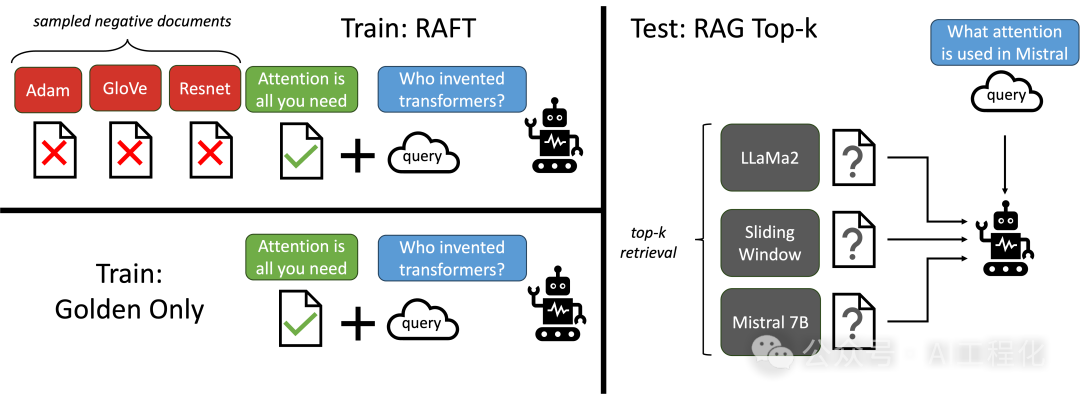

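For the second problem, researchers from UC Berkeley, Meta, and Microsoft recently applied the RAG idea to fine-tuning and proposed RAFT (Retrieval-Augmented Fine-Tuning) [1], a method that sits between fine-tuning and RAG. In conventional fine-tuning, training samples are plain question-to-answer (Q -> A) pairs. RAFT adds background context to each sample, and that context deliberately includes irrelevant, distracting documents. In other words, the model learns during fine-tuning to find the correct answer in the presence of distractors.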
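The sample-construction idea can be sketched in a few lines of Python. This is an illustrative sketch, not the official RAFT code: `make_raft_sample`, `RaftSample`, and the default values are hypothetical. The RAFT paper also describes keeping the relevant ("oracle") document in only a fraction of the samples and giving the rest distractors alone; `p_oracle` models that, but its default here is an assumption.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class RaftSample:
    question: str
    context: List[str]  # distractors, plus the oracle document(s) if has_oracle
    answer: str
    has_oracle: bool


def make_raft_sample(
    question: str,
    answer: str,
    oracle_docs: Sequence[str],
    distractor_pool: Sequence[str],
    num_distractors: int = 3,
    p_oracle: float = 0.8,
    rng: Optional[random.Random] = None,
) -> RaftSample:
    """Assemble one training sample whose context mixes relevant and irrelevant docs.

    num_distractors and p_oracle are illustrative defaults, not values from the paper.
    """
    if rng is None:
        rng = random.Random()
    # Never use an oracle document as a distractor.
    candidates = [d for d in distractor_pool if d not in oracle_docs]
    distractors = rng.sample(candidates, k=min(num_distractors, len(candidates)))
    # With probability p_oracle keep the oracle docs; otherwise the context is distractors only.
    has_oracle = rng.random() < p_oracle
    context = distractors + (list(oracle_docs) if has_oracle else [])
    # Shuffle so the model cannot learn that the answer sits at a fixed position.
    rng.shuffle(context)
    return RaftSample(question, context, answer, has_oracle)


if __name__ == "__main__":
    oracle = ["The Oberoi Group is a hotel company with its head office in Delhi."]
    pool = [
        "It is located in city center of Jakarta, near Mega Kuningan.",
        "It is operated by The Ritz-Carlton Hotel Company.",
        "The complex has two towers.",
    ]
    sample = make_raft_sample("Where is the head office?", "Delhi", oracle, pool,
                              num_distractors=2, rng=random.Random(0))
    print(sample.has_oracle, sample.context)
```

The target answer stays the same whether or not the oracle document is present, so samples without it push the model to rely on what it has learned rather than on whatever text happens to be in the prompt.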

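Each training sample contains a question, context, an instruction, a chain-of-thought (CoT) answer, and a final answer. In the answer, ##begin_quote## and ##end_quote## mark the beginning and end of passages copied verbatim from the context. This helps keep the model anchored to the provided context and reduces hallucination. Here is an example sample: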

Question: The Oberoi family is part of a hotel company that has a head office in what city?

context: [The Oberoi family is an Indian family that is famous for its involvement in hotels, namely through The Oberoi Group]...[It is located in city center of Jakarta, near Mega Kuningan, adjacent to the sister JW Marriott Hotel. It is operated by The Ritz-Carlton Hotel Company. The complex has two towers that comprises a hotel and the Airlangga Apartment respectively]...[The Oberoi Group is a hotel company with its head office in Delhi.]

Instruction: Given the question, context and answer above, provide a logical reasoning for that answer. Please use the format of: ##Reason: {reason} ##Answer: {answer}.

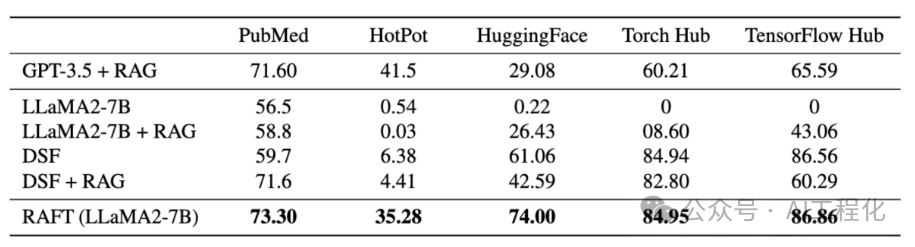

CoT Answer: ##Reason: The document ##begin_quote## The Oberoi family is an Indian family that is famous for its involvement in hotels, namely through The Oberoi Group. ##end_quote## establishes that the Oberoi family is involved in the Oberoi group, and the document ##begin_quote## The Oberoi Group is a hotel company with its head office in Delhi. ##end_quote## establishes the head office of The Oberoi Group. Therefore, the Oberoi family is part of a hotel company whose head office is in Delhi. ##Answer: Delhi

The idea is intuitive. The researchers evaluated RAFT on medical (PubMed), general-knowledge (HotPotQA), and API (Gorilla) datasets and report clear gains: on top of Llama2-7B + RAG it improves by 14.5, surpassing GPT-3.5 + RAG.
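Because quotes in the CoT answer are delimited explicitly, answers in the format shown in the sample can be checked mechanically, for example to filter out generated training data whose "quotes" do not actually occur in the context. The sketch below is an illustrative check, not part of the RAFT release; the helper names are hypothetical, and the normalization (collapse whitespace, drop a trailing period) is deliberately loose because the context in the sample above is truncated without its final period.

```python
import re
from typing import List, Tuple

# Non-greedy match so each quote is captured separately; DOTALL allows multi-line quotes.
QUOTE_RE = re.compile(r"##begin_quote##(.*?)##end_quote##", re.DOTALL)


def _normalize(text: str) -> str:
    # Collapse whitespace and drop trailing periods so minor formatting differences are ignored.
    return " ".join(text.split()).rstrip(".")


def parse_cot_answer(cot: str) -> Tuple[List[str], str]:
    """Return (quoted spans, final answer) from a '##Reason: ... ##Answer: ...' string."""
    quotes = [q.strip() for q in QUOTE_RE.findall(cot)]
    # The final answer is whatever follows the last '##Answer:' marker.
    _, sep, tail = cot.rpartition("##Answer:")
    return quotes, (tail.strip() if sep else "")


def ungrounded_quotes(cot: str, context: List[str]) -> List[str]:
    """Quotes that do not appear (after normalization) in any context document."""
    quotes, _ = parse_cot_answer(cot)
    docs = [_normalize(d) for d in context]
    return [q for q in quotes if not any(_normalize(q) in d for d in docs)]


if __name__ == "__main__":
    context = [
        "The Oberoi family is an Indian family that is famous for its involvement "
        "in hotels, namely through The Oberoi Group",
        "The Oberoi Group is a hotel company with its head office in Delhi.",
    ]
    cot = ("##Reason: The document ##begin_quote## The Oberoi Group is a hotel company "
           "with its head office in Delhi. ##end_quote## establishes the head office. "
           "##Answer: Delhi")
    print(parse_cot_answer(cot))
    print(ungrounded_quotes(cot, context))
```

An empty list from `ungrounded_quotes` means every quoted span can be traced back to a context document; a non-empty list flags a sample worth discarding or regenerating.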

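The authors also provide an official training guide [2] covering the full pipeline of dataset generation, fine-tuning, and evaluation, and LlamaIndex has implemented a dataset-generation pack [3] that plugs easily into LlamaIndex pipelines.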
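Dataset generation ultimately produces records for a fine-tuning job. As a rough illustration of what such a record can look like, the sketch below serializes samples into a chat-style JSONL file (one JSON object per line with a `messages` list), a layout many fine-tuning tools accept. The field names (`question`, `context`, `cot_answer`), the `[Document i]` labels, and the function names are assumptions for illustration; they are not the exact schema produced by the official scripts in [2] or the LlamaIndex pack in [3].

```python
import json
from typing import Iterable, Mapping


def to_chat_record(sample: Mapping) -> dict:
    """Turn one sample into a chat-style record: documents + question in, CoT answer out."""
    # Number the documents (oracle and distractors alike) in their shuffled order.
    docs = "\n".join(f"[Document {i}] {d}" for i, d in enumerate(sample["context"], start=1))
    return {
        "messages": [
            {"role": "user", "content": f"{docs}\n\nQuestion: {sample['question']}"},
            {"role": "assistant", "content": sample["cot_answer"]},
        ]
    }


def write_jsonl(samples: Iterable[Mapping], path: str) -> int:
    """Write one JSON object per line; returns the number of records written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for sample in samples:
            # ensure_ascii=False keeps non-English domain text readable in the file.
            f.write(json.dumps(to_chat_record(sample), ensure_ascii=False) + "\n")
            count += 1
    return count
```

Training on the full CoT answer as the assistant turn, rather than the bare final answer, is what teaches the model to quote from the context before answering.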

References:

[1] https://arxiv.org/abs/2403.10131

[2] https://github.com/ShishirPatil/gorilla/tree/main/raft

[3] https://github.com/run-llama/llama_index/tree/main/llama-index-packs/llama-index-packs-raft-dataset

This article is reprinted from ***. Author: