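Agent Techniques in Prompt Engineering: Building Intelligent, Autonomous AI Systems

Today we move into a more complex and dynamic area: agent techniques in prompt engineering. These techniques let us build AI systems that make decisions on their own, carry out complex sequences of tasks, and even interact with people and other systems. Let's explore how to design and implement such intelligent agents, and how they change the way we interact with AI.

1. Why Agent Techniques Matter in AI

Before getting into the technical details, let's first look at why agent techniques have become so important in modern AI systems:

- Task complexity: as AI applications grow more complex, a single static prompt is no longer enough. Agents can handle complex tasks that require multiple steps, decisions, and planning.

- Autonomy: agent techniques let AI systems operate more independently, reducing the need for human intervention.

- Adaptability: agents can adjust their behavior dynamically as the environment and the task change, which makes the system more flexible.

- Interaction: agents can carry out complex interactions with human users, other AI systems, or external tools.

- Continuous learning: by interacting with their environment, agents can keep learning and improving their performance.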

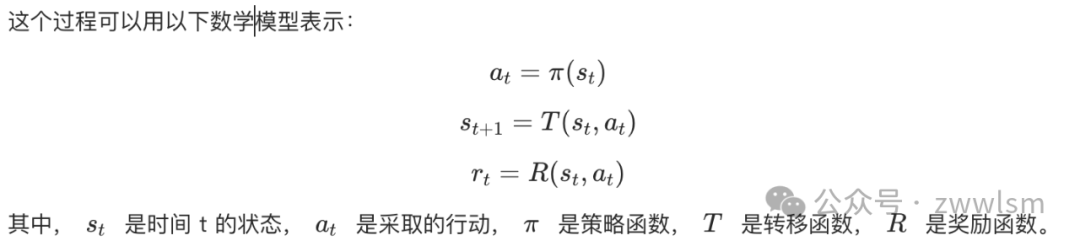

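2. Basic Principles of AI Agents

At the core of an AI agent is a decision loop, which usually consists of the following steps:

- Perception: gather information from the environment.

- Reasoning: reason about the gathered information and decide what to do.

- Action: carry out the chosen action.

- Learning: learn from the outcome of the action and update the knowledge base.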
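The four steps can be made concrete without any language model. The following is a minimal sketch, assuming a hypothetical toy environment (`ThermostatEnv`) in which the agent can only heat or cool a room by one degree per step; `LoopAgent` and `run_loop` are likewise illustrative names. Learning is reduced to recording each experience in a knowledge base, the simplest form of the update described in the last step.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatEnv:
    """Hypothetical toy environment: a room the agent can heat or cool."""
    temperature: float = 15.0

    def observe(self) -> float:
        return self.temperature

    def apply(self, action: str) -> None:
        if action == "heat":
            self.temperature += 1.0
        elif action == "cool":
            self.temperature -= 1.0


@dataclass
class LoopAgent:
    target: float
    knowledge: dict = field(default_factory=lambda: {"steps_taken": 0, "history": []})

    def perceive(self, env: ThermostatEnv) -> float:
        # Perception: read the current state of the environment
        return env.observe()

    def reason(self, observation: float) -> str:
        # Reasoning: compare the observation with the goal and pick an action
        if observation < self.target:
            return "heat"
        if observation > self.target:
            return "cool"
        return "idle"

    def act(self, env: ThermostatEnv, action: str) -> None:
        # Action: change the environment
        env.apply(action)

    def learn(self, observation: float, action: str, outcome: float) -> None:
        # Learning: record the experience in the knowledge base
        self.knowledge["steps_taken"] += 1
        self.knowledge["history"].append((observation, action, outcome))


def run_loop(agent: LoopAgent, env: ThermostatEnv, max_steps: int = 20) -> float:
    for _ in range(max_steps):
        observation = agent.perceive(env)
        action = agent.reason(observation)
        if action == "idle":  # goal reached, leave the loop
            break
        agent.act(env, action)
        agent.learn(observation, action, env.observe())
    return env.observe()
```

In an LLM-based agent, `reason` is where the prompt and model call would go; the surrounding loop keeps the same shape, and `max_steps` bounds it even if the agent never settles.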

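3. Agent Techniques in Prompt Engineering

Now let's look at how prompt engineering can be used to implement these agents.

3.1 Rule-Based Agents

The simplest agents are driven by predefined rules. They are less flexible than more sophisticated approaches, but they are still very useful in some scenarios. The code in this article uses the legacy Completions interface of the `openai` Python package (`openai.Completion.create`, available only in versions before 1.0) with the `text-davinci-002` model, which OpenAI has since retired; substitute a model that is still available when running the examples.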

```python
import openai  # legacy interface: requires openai<1.0

def rule_based_agent(task, context):
    # Fixed rules that are injected into every prompt
    rules = {
        "greeting": "If the task involves greeting, always start with 'Hello! How can I assist you today?'",
        "farewell": "If the task is complete, end with 'Is there anything else I can help you with?'",
        "clarification": "If the task is unclear, ask for more details before proceeding."
    }

    prompt = f"""
    Task: {task}
    Context: {context}

    Rules to follow:
    {rules['greeting']}
    {rules['farewell']}
    {rules['clarification']}

    Please complete the task following these rules.
    """

    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=150,
        temperature=0.7
    )
    return response.choices[0].text.strip()

# Usage example
task = "Greet the user and ask about their day"
context = "First interaction with a new user"
result = rule_based_agent(task, context)
print(result)
```

This example shows how simple rules can steer an AI agent's behavior.
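In `rule_based_agent` the rules are only instructions inside the prompt, so whether they are followed is up to the model. A classic rule-based agent evaluates its rules in code instead. The sketch below is an illustration rather than part of the original example: the keyword triggers and the `fallback` hook are assumptions, and any message that no rule matches is handed to the fallback, which in practice could be an LLM call such as `rule_based_agent`.

```python
import re
from typing import Callable, List, Tuple

# A rule is (name, condition, action); conditions inspect the raw user message.
Rule = Tuple[str, Callable[[str], bool], Callable[[str], str]]


def words(message: str) -> List[str]:
    return re.findall(r"[a-z']+", message.lower())


RULES: List[Rule] = [
    ("greeting",
     lambda msg: any(w in ("hi", "hello", "hey") for w in words(msg)),
     lambda msg: "Hello! How can I assist you today?"),
    ("farewell",
     lambda msg: "bye" in msg.lower(),
     lambda msg: "Is there anything else I can help you with?"),
    ("clarification",
     lambda msg: len(words(msg)) < 3,
     lambda msg: "Could you give me a few more details?"),
]


def deterministic_rule_agent(message: str, fallback: Callable[[str], str]) -> str:
    # Rules are checked in order, so list order expresses priority
    for _name, condition, action in RULES:
        if condition(message):
            return action(message)
    # No rule fired: hand the message to a more capable handler (e.g. an LLM call)
    return fallback(message)
```

Because the first matching rule wins, a short greeting such as "Hi" triggers the greeting rule rather than the clarification rule.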

3.2 Goal-Based Agents

Goal-based agents are more flexible: given a goal, they plan and carry out tasks on their own.

```python
import openai  # legacy interface: requires openai<1.0

def goal_based_agent(goal, context):
    prompt = f"""
    Goal: {goal}
    Context: {context}

    As an AI agent, your task is to achieve the given goal. Please follow these steps:
    1. Analyze the goal and context
    2. Break down the goal into subtasks
    3. For each subtask:
       a. Plan the necessary actions
       b. Execute the actions
       c. Evaluate the result
    4. If the goal is not yet achieved, repeat steps 2-3
    5. Once the goal is achieved, summarize the process and results

    Please start your analysis and planning:
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=300,
        temperature=0.7
    )
    return response.choices[0].text.strip()

# Usage example
goal = "Organize a virtual team-building event for a remote team of 10 people"
context = "The team is spread across different time zones and has diverse interests"
result = goal_based_agent(goal, context)
print(result)
```

This agent analyzes a complex goal, breaks it into subtasks, and works through them step by step toward the goal. All of this happens within a single completion, so the model describes the planning and execution rather than actually performing external actions.
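Because `goal_based_agent` asks for the whole plan-execute-evaluate cycle in one completion, the loop exists only in the model's text. One way to make it explicit is sketched below, assuming planning, execution, and evaluation are supplied as separate functions (each could wrap its own model call); `run_goal_agent` and its parameters are illustrative names.

```python
from typing import Callable, List


def run_goal_agent(
    goal: str,
    plan: Callable[[str, List[str]], List[str]],
    execute: Callable[[str], str],
    is_done: Callable[[str, List[str]], bool],
    max_rounds: int = 3,
) -> List[str]:
    """Plan, execute, evaluate, and re-plan until the goal is judged achieved."""
    completed: List[str] = []
    for _ in range(max_rounds):
        # Break the goal into subtasks, taking already completed work into account
        subtasks = plan(goal, completed)
        for subtask in subtasks:
            # Carry out each subtask and record its result
            completed.append(execute(subtask))
        # Evaluate: stop as soon as the goal counts as achieved
        if is_done(goal, completed):
            break
    return completed
```

`max_rounds` caps the number of re-planning rounds, so a planner that never declares success cannot loop forever.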

3.3 Tool-Using Agents

A more advanced type of agent can use external tools to complete its tasks, which greatly extends what an AI system is capable of.

```python
import openai  # legacy interface: requires openai<1.0
import wolframalpha

def tool_using_agent(task, available_tools):
    prompt = f"""
    Task: {task}
    Available tools: {', '.join(available_tools)}

    As an AI agent with access to external tools, your job is to complete the given task. Follow these steps:
    1. Analyze the task and determine which tools, if any, are needed
    2. For each required tool:
       a. Formulate the query for the tool
       b. Use the tool (simulated in this example)
       c. Interpret the results
    3. Combine the information from all tools to complete the task
    4. If the task is not fully completed, repeat steps 1-3
    5. Present the final result

    Begin your analysis:
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=300,
        temperature=0.7
    )
    return response.choices[0].text.strip()

def use_tool(tool_name, query):
    # tool_using_agent does not call this function (tool use is simulated in the
    # prompt); use_tool shows what the real tool calls could look like.
    if tool_name == "weather_api":
        # Simulated weather API call
        return f"Weather data for {query}: Sunny, 25°C"
    elif tool_name == "calculator":
        # Use WolframAlpha as a calculator (replace YOUR_APP_ID with a real AppID)
        client = wolframalpha.Client('YOUR_APP_ID')
        res = client.query(query)
        return next(res.results).text
    elif tool_name == "search_engine":
        # Simulated search engine query
        return f"Search results for '{query}': [Result 1], [Result 2], [Result 3]"
    else:
        return "Tool not available"

# Usage example
task = "Plan a picnic for tomorrow, including calculating the amount of food needed for 5 people"
available_tools = ["weather_api", "calculator", "search_engine"]
result = tool_using_agent(task, available_tools)
print(result)
```

This agent picks appropriate external tools according to what the task requires, which considerably strengthens its problem-solving ability.
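In the code above the model is only asked to imagine tool calls, and `use_tool` is never invoked. A common way to close that gap is to let the model request a tool in a fixed textual format, run the tool in code, and append the result to the transcript before calling the model again. The sketch below assumes a hypothetical `TOOL: <name> | <query>` line format and a `call_model` function that maps a prompt to text; neither comes from the original example.

```python
import re
from typing import Callable, Dict

# Hypothetical request format: one line such as "TOOL: weather_api | Paris"
TOOL_LINE = re.compile(r"^TOOL:\s*(\w+)\s*\|\s*(.+)$", re.MULTILINE)


def run_tool_loop(task: str,
                  call_model: Callable[[str], str],
                  tools: Dict[str, Callable[[str], str]],
                  max_steps: int = 5) -> str:
    """Alternate model calls and tool calls until the model stops requesting tools."""
    transcript = f"Task: {task}\n"
    for _ in range(max_steps):
        reply = call_model(transcript)
        transcript += reply + "\n"
        match = TOOL_LINE.search(reply)
        if match is None:
            # No tool request: treat the reply as the final answer
            return reply
        name, query = match.group(1), match.group(2).strip()
        tool = tools.get(name)
        observation = tool(query) if tool else "Tool not available"
        # Feed the tool result back so the next model call can use it
        transcript += f"OBSERVATION: {observation}\n"
    # Step budget exhausted: return the transcript for inspection
    return transcript
```

To reuse the article's tools, the registry could be built as `{name: (lambda q, n=name: use_tool(n, q)) for name in available_tools}`; the default argument `n=name` binds each tool name at creation time.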

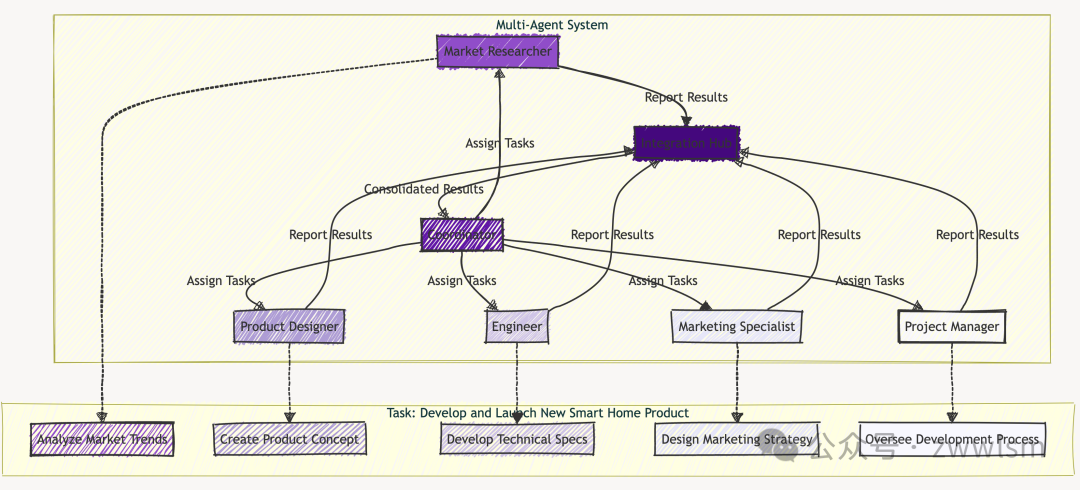

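3.4 Multi-Agent Systems

In some complex scenarios we may need several agents working together. Each agent can focus on a specific task or role, and together they accomplish a larger goal.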

```python
import openai  # legacy interface: requires openai<1.0

def multi_agent_system(task, agents):
    # Build the role descriptions outside the f-string for readability
    roles = "\n".join(f"{name}: {description}" for name, description in agents.items())
    prompt = f"""
    Task: {task}
    Agents: {', '.join(agents.keys())}

    You are the coordinator of a multi-agent system. Your job is to:
    1. Analyze the task and determine which agents are needed
    2. Assign subtasks to appropriate agents
    3. Collect and integrate results from all agents
    4. Ensure smooth communication and coordination between agents
    5. Resolve any conflicts or inconsistencies
    6. Present the final integrated result

    For each agent, consider their role and capabilities:
    {roles}

    Begin your coordination process:
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=400,
        temperature=0.7
    )
    return response.choices[0].text.strip()

# Usage example
task = "Develop and launch a new product in the smart home market"
agents = {
    "market_researcher": "Analyzes market trends and consumer needs",
    "product_designer": "Creates product concepts and designs",
    "engineer": "Develops technical specifications and prototypes",
    "marketing_specialist": "Creates marketing strategies and materials",
    "project_manager": "Oversees the entire product development process"
}
result = multi_agent_system(task, agents)
print(result)
```

This system shows how several specialized agents can be coordinated to complete a complex task. Here the specialists exist only as role descriptions inside one prompt, and a single model plays the coordinator on their behalf.
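If each role is instead backed by its own function (for example, its own prompt), the coordinator can run an explicit plan and let later agents see earlier results. A minimal sketch, assuming the coordinator's plan is already available as an ordered list of `(role, subtask)` pairs; `coordinate` and `Specialist` are illustrative names.

```python
from typing import Callable, Dict, List, Tuple

# A specialist receives its subtask plus all results produced so far
Specialist = Callable[[str, Dict[str, str]], str]


def coordinate(plan: List[Tuple[str, str]],
               specialists: Dict[str, Specialist]) -> Dict[str, str]:
    """Run (role, subtask) pairs in order and collect the results by subtask."""
    results: Dict[str, str] = {}
    for role, subtask in plan:
        if role not in specialists:
            raise KeyError(f"No agent registered for role {role!r}")
        # The shared results dict is how agents pass information to each other
        results[subtask] = specialists[role](subtask, results)
    return results
```

Passing the accumulated results to every specialist is the simplest form of inter-agent communication; conflict resolution and parallel execution would sit on top of this.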

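4. Advanced Techniques and Best Practices

In practice, the following techniques can help you design and implement better AI agents:

4.1 Memory and State Management

Agents need to remember past interactions and decisions in order to stay coherent and to learn.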

```python
import openai  # legacy interface: requires openai<1.0

class AgentMemory:
    def __init__(self):
        self.short_term = []  # most recent items, oldest first
        self.long_term = {}   # persistent key -> value facts

    def add_short_term(self, item):
        self.short_term.append(item)
        if len(self.short_term) > 5:  # keep only the 5 most recent items
            self.short_term.pop(0)

    def add_long_term(self, key, value):
        self.long_term[key] = value

    def get_context(self):
        return f"Short-term memory: {self.short_term}\nLong-term memory: {self.long_term}"

def stateful_agent(task, memory):
    context = memory.get_context()
    prompt = f"""
    Task: {task}
    Context: {context}

    As an AI agent with memory, use your short-term and long-term memory to inform your actions.
    After completing the task, update your memories as necessary.

    Your response:
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=200,
        temperature=0.7
    )
    result = response.choices[0].text.strip()

    # Update memory (simplified here; real applications may need more elaborate logic)
    memory.add_short_term(task)
    memory.add_long_term(task, result)
    return result

# Usage example
memory = AgentMemory()

task1 = "Introduce yourself to a new user"
result1 = stateful_agent(task1, memory)
print(result1)

task2 = "Recommend a product based on the user's previous interactions"
result2 = stateful_agent(task2, memory)
print(result2)
```

This example gives the agent a simple short-term and long-term memory so that it can maintain state across multiple interactions.
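`get_context` passes the entire long-term memory to the model, which stops scaling once many facts have accumulated. A common refinement is to retrieve only the entries relevant to the current task. Production systems usually rank by embedding similarity; as a dependency-free stand-in, this sketch ranks entries by word overlap with the query (the function names are illustrative).

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_relevant(long_term: Dict[str, str], query: str, k: int = 2) -> List[str]:
    """Return up to k long-term memory keys, ranked by word overlap with the query."""
    query_words = tokenize(query)
    scored = []
    for key, value in long_term.items():
        overlap = len(query_words & tokenize(f"{key} {value}"))
        if overlap > 0:
            scored.append((overlap, key))
    # Stable sort: ties keep their insertion order
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [key for _, key in scored[:k]]
```

With `AgentMemory`, the keys returned by `retrieve_relevant(memory.long_term, task)` could be used to build a smaller context than the full dump in `get_context`. Word overlap also counts filler words such as "in", so it is only a rough proxy for relevance.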

4.2 Metacognition and Self-Improvement

Advanced agents should be able to evaluate their own performance and keep learning and improving.

```python
import openai  # legacy interface: requires openai<1.0

def metacognitive_agent(task, performance_history):
    prompt = f"""
    Task: {task}
    Performance History: {performance_history}

    As an AI agent with metacognitive abilities, your job is to:
    1. Analyze the given task
    2. Reflect on your past performance in similar tasks
    3. Identify areas for improvement based on your performance history
    4. Develop a strategy to complete the current task, incorporating lessons learned
    5. Execute the task
    6. After completion, evaluate your performance and update your learning

    Begin your metacognitive process:
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=300,
        temperature=0.7
    )
    return response.choices[0].text.strip()

# Usage example
task = "Write a persuasive email to potential clients about our new software product"
performance_history = [
    "Previous email campaigns had a 15% open rate and 2% click-through rate",
    "Personalized subject lines increased open rates by 25%",
    "Including customer testimonials improved click-through rates by 40%"
]
result = metacognitive_agent(task, performance_history)
print(result)
```

This agent reflects on its past performance and applies the lessons learned to the new task.
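`metacognitive_agent` reads a fixed performance history but never writes to it. A closed loop, in the style of reflection-based agents, scores each attempt, turns the critique into a lesson, and retries with the lessons in view. A minimal sketch, assuming `attempt` and `critique` are supplied by the caller (for example as two different prompts) and that critiques use the same 1-10 scale as elsewhere in this article:

```python
from typing import Callable, List, Tuple


def reflect_and_retry(task: str,
                      attempt: Callable[[str, List[str]], str],
                      critique: Callable[[str, str], Tuple[int, str]],
                      target_score: int = 8,
                      max_attempts: int = 3) -> Tuple[str, List[str]]:
    """Attempt, self-evaluate, record a lesson, and retry until good enough."""
    lessons: List[str] = []
    best_output, best_score = "", -1
    for _ in range(max_attempts):
        output = attempt(task, lessons)
        score, lesson = critique(task, output)  # score on a 1-10 scale
        if score > best_score:
            best_output, best_score = output, score
        if score >= target_score:
            break
        lessons.append(lesson)  # later attempts see every lesson so far
    return best_output, lessons
```

Returning the lessons alongside the best output lets them be stored in long-term memory and reused as the performance history for the next task.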

4.3 Ethical Decision-Making

As AI agents become more autonomous, making sure their decisions are ethical becomes critical.

```python
import openai  # legacy interface: requires openai<1.0

def ethical_agent(task, ethical_guidelines):
    prompt = f"""
    Task: {task}

    Ethical Guidelines:
    {ethical_guidelines}

    As an AI agent with a strong ethical framework, your job is to:
    1. Analyze the given task
    2. Identify any potential ethical concerns or dilemmas
    3. Consider multiple approaches to the task, evaluating each against the ethical guidelines
    4. Choose the most ethical approach that still accomplishes the task
    5. If no ethical approach is possible, explain why and suggest alternatives
    6. Execute the chosen approach, ensuring all actions align with the ethical guidelines

    Begin your ethical analysis and task execution:
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=300,
        temperature=0.7
    )
    return response.choices[0].text.strip()

# Usage example
task = "Develop a marketing campaign for a new energy drink targeting teenagers"
ethical_guidelines = """
1. Do not exploit vulnerable populations
2. Be truthful and transparent about product effects
3. Promote responsible consumption
4. Consider long-term health impacts
5. Respect privacy and data protection
"""
result = ethical_agent(task, ethical_guidelines)
print(result)
```

This agent takes ethical factors into account while carrying out a task, keeping its actions in line with the predefined ethical guidelines.
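Guidelines placed in a prompt guide the model but do not enforce anything. A complementary safeguard is to check each proposed action against explicit constraints in code before it runs. In the sketch below, the `Guideline` checks are hypothetical keyword tests chosen for illustration; real checks would need to be far more robust, for example a dedicated classifier or human review.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Guideline:
    description: str
    violated_by: Callable[[str], bool]  # True if the proposed action breaks the guideline


def review_action(action: str, guidelines: List[Guideline]) -> List[str]:
    """Return the descriptions of every guideline the action would violate."""
    return [g.description for g in guidelines if g.violated_by(action)]


def execute_if_allowed(action: str,
                       guidelines: List[Guideline],
                       execute: Callable[[str], str]) -> str:
    violations = review_action(action, guidelines)
    if violations:
        # Refuse and report why instead of executing the action
        return "Blocked: " + "; ".join(violations)
    return execute(action)


# Hypothetical keyword-based checks for the energy-drink example
GUIDELINES = [
    Guideline("Do not exploit vulnerable populations",
              lambda a: "under 18" in a.lower()),
    Guideline("Be truthful and transparent about product effects",
              lambda a: "no side effects" in a.lower()),
]
```

The design keeps the veto outside the model: even if the model proposes a problematic action, `execute_if_allowed` never runs it.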

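5. Evaluation and Optimization

Evaluating an AI agent's performance is more complicated than evaluating a simple prompt, because we need to consider the agent's overall behavior across many interactions. The following subsections describe several approaches to evaluation and optimization.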
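Whatever per-task metric is used, scores from many interactions eventually have to be aggregated. As a minimal sketch (the choice of metrics is ours, not the article's), this helper reports the mean, the worst case, and a least-squares trend, which uses every score rather than only the first and the last:

```python
from statistics import mean
from typing import Dict, List


def summarize_scores(scores: List[float]) -> Dict[str, float]:
    """Reduce per-interaction scores to overall metrics."""
    if not scores:
        raise ValueError("at least one score is required")
    n = len(scores)
    x_bar = (n - 1) / 2  # mean of the interaction indices 0..n-1
    y_bar = mean(scores)
    denom = sum((x - x_bar) ** 2 for x in range(n))
    # Least-squares slope: average change in score per interaction
    slope = 0.0 if denom == 0 else sum(
        (x - x_bar) * (y - y_bar) for x, y in enumerate(scores)) / denom
    return {"mean": y_bar, "worst": min(scores), "trend": slope}
```

For scores of 5, 6, 7, 8 this reports a mean of 6.5, a worst case of 5, and a trend of 1.0 point per interaction.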

5.1 Task Completion Assessment

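```python
import re
import openai  # legacy interface: requires openai<1.0

def evaluate_task_completion(agent, tasks):
    # `agent` is any callable that takes a single task string and returns text
    total_score = 0
    for task in tasks:
        result = agent(task)
        score = rate_completion(task, result)  # LLM-based grader, defined below
        total_score += score
    return total_score / len(tasks)

def rate_completion(task, result):
    prompt = f"""
    Task: {task}
    Result: {result}

    On a scale of 1-10, rate how well the result completes the given task.
    Consider factors such as accuracy, completeness, and relevance.

    Rating (1-10):
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=10,
        temperature=0.3
    )
    text = response.choices[0].text.strip()
    # The model may answer "8", "8/10" or "8.", so take the first integer
    match = re.search(r"\d+", text)
    if match is None:
        raise ValueError(f"Could not parse a rating from {text!r}")
    return int(match.group())
```

5.2 Decision Quality Assessment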

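```python
import re
import openai  # legacy interface: requires openai<1.0

def evaluate_decision_quality(agent, scenarios):
    total_score = 0
    for scenario in scenarios:
        decision = agent(scenario)
        # rate_decision returns (rating, explanation); only the rating is summed
        score, _explanation = rate_decision(scenario, decision)
        total_score += score
    return total_score / len(scenarios)

def rate_decision(scenario, decision):
    prompt = f"""
    Scenario: {scenario}
    Decision: {decision}

    Evaluate the quality of this decision considering the following criteria:
    1. Appropriateness for the scenario
    2. Potential consequences
    3. Alignment with given objectives or ethical guidelines
    4. Creativity and innovation

    Provide a rating from 1-10 and a brief explanation, using exactly this format:
    Rating: <number from 1 to 10>
    Explanation: <one or two sentences>
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=100,
        temperature=0.5
    )
    text = response.choices[0].text.strip()
    # Parse by label instead of by line position, which is fragile
    rating_match = re.search(r"Rating:\s*(\d+)", text)
    if rating_match is None:
        raise ValueError(f"Could not parse a rating from {text!r}")
    explanation_match = re.search(r"Explanation:\s*(.*)", text, re.DOTALL)
    explanation = explanation_match.group(1).strip() if explanation_match else ""
    return int(rating_match.group(1)), explanation
```

5.3 Long-Term Learning and Adaptability Assessment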

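```python
def evaluate_adaptability(agent, task_sequence):
    performance_trend = []
    for task in task_sequence:
        result = agent(task)
        score = rate_completion(task, result)  # grader from section 5.1
        performance_trend.append(score)

    # Analyze the performance trend: average change in score per step
    if len(performance_trend) < 2:
        return 0.0, performance_trend
    improvement_rate = (performance_trend[-1] - performance_trend[0]) / (len(performance_trend) - 1)
    return improvement_rate, performance_trend

# Usage example
def some_agent(task):
    # Any single-argument agent works; here goal_based_agent from section 3.2 is wrapped
    return goal_based_agent(task, context="")

task_sequence = [
    "Summarize a news article",
    "Write a product description",
    "Respond to a customer complaint",
    "Create a marketing slogan",
    "Draft a press release"
]
improvement_rate, performance_trend = evaluate_adaptability(some_agent, task_sequence)
print(f"Improvement rate: {improvement_rate}")
print(f"Performance trend: {performance_trend}")
```

6. Case Study: An Intelligent Personal Assistant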

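Let's bring together the agent techniques covered so far in a practical case study. We will build an intelligent personal assistant that handles a variety of everyday tasks, learns the user's preferences, and makes ethical decisions.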

```python
import datetime
import openai  # legacy interface: requires openai<1.0

# AgentMemory is the class defined in section 4.1
class PersonalAssistant:
    def __init__(self, user_name):
        self.user_name = user_name
        self.memory = AgentMemory()
        self.ethical_guidelines = """
        1. Respect user privacy and data protection
        2. Provide accurate and helpful information
        3. Encourage healthy habits and well-being
        4. Avoid actions that could harm the user or others
        5. Be transparent about AI limitations
        """

    def process_request(self, request):
        context = self.memory.get_context()
        current_time = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        prompt = f"""
        User: {self.user_name}
        Current Time: {current_time}
        Request: {request}
        Context: {context}
        Ethical Guidelines: {self.ethical_guidelines}

        As an AI personal assistant, your task is to:
        1. Understand the user's request and current context
        2. Consider any relevant information from your memory
        3. Devise a plan to fulfill the request, breaking it into steps if necessary
        4. Ensure all actions align with the ethical guidelines
        5. Execute the plan, simulating any necessary actions or API calls
        6. Provide a helpful and friendly response to the user
        7. Update your memory with any important information from this interaction

        Your response:
        """
        response = openai.Completion.create(
            engine="text-davinci-002",
            prompt=prompt,
            max_tokens=300,
            temperature=0.7
        )
        result = response.choices[0].text.strip()
        self.update_memory(request, result)
        return result

    def update_memory(self, request, result):
        self.memory.add_short_term(f"Request: {request}")
        self.memory.add_short_term(f"Response: {result}")
        # More elaborate logic for extracting and storing long-term memories could go here

# Usage example
assistant = PersonalAssistant("Alice")
user_requests = [
    "What's on my schedule for today?",
    "Remind me to buy groceries this evening",
    "I'm feeling stressed, any suggestions for relaxation?",
    "Can you help me plan a surprise party for my friend next week?",
    "I need to book a flight to New York for next month"
]
for request in user_requests:
    print(f"User: {request}")
    response = assistant.process_request(request)
    print(f"Assistant: {response}\n")
```

This personal assistant shows how several advanced features can be combined, including:

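- State management: a memory system keeps track of past interactions.

- Context awareness: the current time and the user's history are taken into account.

- Task decomposition: complex requests are broken into manageable steps.

- Ethical decision-making: the prompt requires every action to follow the predefined ethical guidelines.

- Adaptability: through the memory system, the assistant can pick up the user's preferences and behavior patterns.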
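`update_memory` currently stores only short-term entries and leaves long-term extraction as a comment. A crude but runnable starting point is pattern matching on explicit preference statements; the patterns below are hypothetical, and a real assistant would more likely ask the model itself to extract preferences.

```python
import re
from typing import Dict, List

# Hypothetical patterns for explicit preference statements
PREFERENCE_PATTERNS = {
    "likes": re.compile(r"\bI (?:like|love|enjoy) ([^.,!?]+)", re.IGNORECASE),
    "prefers": re.compile(r"\bI prefer ([^.,!?]+)", re.IGNORECASE),
    "allergies": re.compile(r"\bI(?:'m| am) allergic to ([^.,!?]+)", re.IGNORECASE),
}


def extract_preferences(request: str) -> Dict[str, List[str]]:
    """Return preference statements found in a request, grouped by label."""
    found: Dict[str, List[str]] = {}
    for label, pattern in PREFERENCE_PATTERNS.items():
        # Each match captures the text up to the next punctuation mark
        matches = [m.strip() for m in pattern.findall(request)]
        if matches:
            found[label] = matches
    return found
```

Inside `update_memory`, each extracted entry could then be stored with `self.memory.add_long_term(label, values)`; note that `add_long_term` overwrites any earlier value stored under the same label.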

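7. Challenges and Solutions in Agent Technology

Although agent techniques bring great potential to AI systems, they also face some distinctive challenges:

7.1 Long-Term Consistency

Challenge: ensuring that the agent's behavior stays consistent over long-running interactions.

Solutions:

- Implement a robust memory system with both short-term and long-term memory

- Periodically review and consolidate past interactions

- Use metacognitive techniques to monitor and adjust behavior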
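The second solution, periodically reviewing and consolidating past interactions, can be sketched as folding the short-term buffer into a running long-term summary. The `summarize` function is an assumption: in practice it would be a model call that produces a concise summary, and `consolidate` is an illustrative name.

```python
from typing import Callable, Dict, List


def consolidate(short_term: List[str],
                long_term: Dict[str, str],
                summarize: Callable[[List[str]], str],
                key: str) -> None:
    """Fold the short-term buffer into one long-term summary entry, then clear it."""
    if not short_term:
        return
    previous = long_term.get(key)
    # Include the previous summary so information accumulates across rounds
    items = ([previous] if previous else []) + short_term
    long_term[key] = summarize(items)
    short_term.clear()  # mutate in place so callers holding the list see it emptied
```

With the `AgentMemory` class from section 4.1, this could be called as `consolidate(memory.short_term, memory.long_term, summarize, "session_summary")` every few turns, before the five-item short-term buffer starts discarding entries.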

```python
import openai  # legacy interface: requires openai<1.0

def consistency_check(agent, past_interactions, new_interaction):
    # `agent` is not used: the consistency judgment is delegated to the model
    prompt = f"""
    Past Interactions:
    {past_interactions}

    New Interaction:
    {new_interaction}

    Analyze the consistency of the agent's behavior across these interactions. Consider:
    1. Adherence to established facts and preferences
    2. Consistency in personality and tone
    3. Logical coherence of decisions and advice

    Provide a consistency score (1-10) and explain any inconsistencies:
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=200,
        temperature=0.5
    )
    return response.choices[0].text.strip()
```

7.2 Error Accumulation

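Challenge: an agent may accumulate errors over a series of decisions, so the final result can deviate seriously from what was intended.

Solutions:

- Implement regular self-evaluation and correction mechanisms

- Bring in human feedback at critical decision points

- Use techniques such as Monte Carlo tree search to simulate the long-term effects of decisions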
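A short calculation shows why errors compound. If each step in a chain is correct with probability p and errors are independent (a simplifying assumption), a chain of n steps is fully correct with probability p^n; for p = 0.95 and n = 10 that is only about 0.60. The helpers below (names are illustrative) compute this, together with the longest run of steps that keeps end-to-end reliability above a chosen threshold, which suggests how often a self-check or human review point is needed.

```python
def chain_success_probability(p_step: float, steps: int) -> float:
    """Probability that all steps succeed, assuming independent per-step errors."""
    return p_step ** steps


def max_steps_between_checkpoints(p_step: float, min_success: float) -> int:
    """Longest chain whose end-to-end success probability stays >= min_success."""
    if not (0 < p_step < 1 and 0 < min_success <= 1):
        raise ValueError("expected 0 < p_step < 1 and 0 < min_success <= 1")
    steps = 0
    # p_step ** k shrinks toward 0, so this loop always terminates
    while p_step ** (steps + 1) >= min_success:
        steps += 1
    return steps
```

With 95% per-step accuracy and an 80% end-to-end target, the chain can run at most 4 steps (0.95^4 ≈ 0.81, 0.95^5 ≈ 0.77) before a checkpoint.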

```python
import openai  # legacy interface: requires openai<1.0

def error_correction(agent, task_sequence):
    results = []
    for task in task_sequence:
        result = agent(task)  # `agent` is any single-argument callable
        corrected_result = self_correct(agent, task, result)
        results.append(corrected_result)
    return results

def self_correct(agent, task, initial_result):
    # `agent` is not used: the review is a separate model call
    prompt = f"""
    Task: {task}
    Initial Result: {initial_result}

    As an AI agent, review your initial result and consider:
    1. Are there any logical errors or inconsistencies?
    2. Have all aspects of the task been addressed?
    3. Could the result be improved or optimized?

    If necessary, provide a corrected or improved result. If the initial result is satisfactory, state why.

    Your analysis and corrected result (if needed):
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=200,
        temperature=0.5
    )
    return response.choices[0].text.strip()
```

7.3 Explainability

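Challenge: as an agent's decision-making process grows more complex, its decisions become harder and harder to explain.

Solutions:

- Implement a detailed decision-logging system

- Use explainable-AI techniques such as LIME or SHAP

- Build interactive explanation interfaces that let users ask why a specific decision was made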
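The first solution, a detailed decision log, can start as one JSON object per decision appended to a file. The fields below are our own choice, modeled on the questions in the `explain_decision` prompt (key factors, alternatives, rationale); they are not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Iterable, List, TextIO


@dataclass
class DecisionRecord:
    task: str
    chosen: str
    alternatives: List[str]
    key_factors: List[str]
    rationale: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


def log_decision(record: DecisionRecord, sink: TextIO) -> None:
    """Append one decision as a JSON line so it can be audited or replayed later."""
    sink.write(json.dumps(asdict(record), ensure_ascii=False) + "\n")


def load_decisions(lines: Iterable[str]) -> List[DecisionRecord]:
    """Parse a JSON-lines decision log back into records."""
    return [DecisionRecord(**json.loads(line)) for line in lines if line.strip()]
```

A logged record can later be passed to `explain_decision` as its `decision` and `context`, so that explanations are grounded in what was actually recorded at decision time rather than reconstructed afterwards.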

```python
import openai  # legacy interface: requires openai<1.0

def explain_decision(agent, decision, context):
    # `agent` is not used: the explanation is generated by the model from the decision and context
    prompt = f"""
    Decision: {decision}
    Context: {context}

    As an AI agent, explain your decision-making process in detail:
    1. What were the key factors considered?
    2. What alternatives were evaluated?
    3. Why was this decision chosen over others?
    4. What potential risks or downsides were identified?
    5. How does this decision align with overall goals and ethical guidelines?

    Provide a clear and detailed explanation:
    """
    response = openai.Completion.create(
        engine="text-davinci-002",
        prompt=prompt,
        max_tokens=300,
        temperature=0.7
    )
    return response.choices[0].text.strip()
```

8. Future Trends

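As agent technology continues to develop, we can expect the following trends:

- Multi-agent collaboration: complex tasks will be completed jointly by multiple specialized agents, each responsible for a particular subtask or domain.

- Continuously learning agents: agents will learn from every interaction, steadily improving their knowledge bases and decision-making.

- Context-aware agents: agents will better understand and adapt to different environments and social situations.

- Autonomous goal-setting: advanced agents will be able to set and adjust their own goals rather than merely executing predefined tasks.

- Cross-modal agents: agents will reason and interact seamlessly across text, images, speech, and other modalities.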

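9. Conclusion

Agent techniques in prompt engineering open up a new world of possibilities. By building AI systems that can make decisions, learn, and adapt on their own, we are changing the nature of human-computer interaction. These techniques can not only handle more complex tasks but also create more natural and intelligent user experiences.

However, as agents become more complex and autonomous, we also face important challenges such as ethics, controllability, and transparency. As developers and researchers, we have a responsibility to design and deploy these systems carefully, so that they benefit people rather than cause harm.

This article is reprinted from 芝士AI吃魚; author: 芝士AI吃魚.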