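Exploring Kubernetes and AI Together: Scheduling PyTorch Training Jobs on k8s in Practice

Overview

Kubernetes' core strength is that it provides a scalable, flexible, and highly configurable platform that greatly simplifies deploying, scaling, and managing applications. Its use for general-purpose compute is already fairly mature: cloud-native applications, databases, and other services can be deployed on Kubernetes with little effort and gain high availability and elasticity.

Things get more complicated with heterogeneous compute resources. Accelerators such as GPUs, FPGAs, and NPUs offer large performance advantages, especially for particular kinds of compute-intensive work, but integrating and managing them is not as simple as for general-purpose compute. Because the drivers and management tools supplied by hardware vendors differ considerably, Kubernetes still has limitations in scheduling and orchestrating these resources uniformly. This hurts resource utilization and puts extra management burden on developers.

Below we show how to build a K8s GPU cluster on a personal laptop, and how to use kueue, kubeflow, and karmada to submit PyTorch training jobs to k8s clusters that have GPU nodes.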

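GPU support in k8s

- Kubernetes supports GPUs through device plugins. The GPU vendor's device driver must be installed on the node, and Pods then use the GPU by requesting it as a resource.

- Common tools for building development clusters, such as Kind, Minikube, and K3d, differ in their GPU support. At the time of writing, Kind does not support GPUs, while Minikube and K3d support NVIDIA GPUs on Linux.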
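To make the device-plugin mechanism a little more concrete: once the NVIDIA device plugin is running, the node advertises its GPUs as the extended resource `nvidia.com/gpu` in its capacity. The following is a minimal sketch, not an official tool, that reads this value from node JSON such as `kubectl get node <name> -o json` would produce. The `sample_node` document is a hypothetical, trimmed-down example and `gpu_capacity` is a helper name chosen here for illustration.

```python
import json

# Extended resource name advertised by the NVIDIA device plugin.
GPU_RESOURCE = "nvidia.com/gpu"


def gpu_capacity(node: dict, resource: str = GPU_RESOURCE) -> int:
    """Return the number of GPUs a node advertises, or 0 if it has none."""
    capacity = node.get("status", {}).get("capacity", {})
    return int(capacity.get(resource, "0"))


# Hypothetical, trimmed-down output of `kubectl get node minikube -o json`.
sample_node = json.loads("""
{
  "metadata": {"name": "minikube"},
  "status": {"capacity": {"cpu": "12", "memory": "16Gi", "nvidia.com/gpu": "1"}}
}
""")

if __name__ == "__main__":
    print(sample_node["metadata"]["name"], gpu_capacity(sample_node))
```

In practice the JSON could be piped in from `kubectl` and passed through `json.load`; the lookup itself stays the same.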

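Building a GPU-enabled K8s cluster on an RTX 3060

GPU K8s

Prerequisites

- Go v1.20+

- kubectl v1.19+

- Minikube v1.32.0+ (the `--gpus` flag used below is not available in older releases)

- Docker v24.0.6+

- NVIDIA Driver, latest version

- NVIDIA Container Toolkit, latest version

Notes:

- An Ubuntu system with an RTX 3060 or better GPU is required. It must not be a virtual machine unless the hypervisor (for example PVE or ESXi) supports GPU passthrough. Windows WSL is not supported, because WSL runs a customized Linux kernel that lacks many kernel modules, which causes problems when the NVIDIA driver is invoked.

- You need to be able to reach the code and image repositories hosted by GitHub, Google, and Docker.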
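Before going further it can help to confirm that the command-line tools from the prerequisites are actually installed. The sketch below only checks that each binary is on `PATH`; it does not verify versions. The tool list and the helper name `missing_tools` are assumptions made for this illustration.

```python
import shutil

# Command-line tools named in the prerequisites above (nvidia-smi ships with the NVIDIA driver).
REQUIRED_TOOLS = ["go", "kubectl", "minikube", "docker", "nvidia-smi"]


def missing_tools(tools, which=shutil.which):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Missing from PATH:", ", ".join(missing))
    else:
        print("All prerequisite tools were found on PATH.")
```

The `which` parameter exists only so the lookup can be swapped out in tests; normal use relies on `shutil.which`.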

GPU Docker

After completing the steps above, confirm that Docker can schedule GPUs. This can be verified as follows.

- Create the following docker compose file

    services:
      test:
        image: nvidia/cuda:12.3.1-base-ubuntu20.04
        command: nvidia-smi
        deploy:
          resources:
            reservations:
              devices:
                - driver: nvidia
                  count: 1
                  capabilities: [gpu]

- Start the CUDA task with Docker

    docker compose up
    Creating network "gpu_default" with the default driver
    Creating gpu_test_1 ... done
    Attaching to gpu_test_1
    test_1 | +-----------------------------------------------------------------------------+
    test_1 | | NVIDIA-SMI 450.80.02 Driver Version: 450.80.02 CUDA Version: 11.1 |
    test_1 | |-------------------------------+----------------------+----------------------+
    test_1 | | GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |
    test_1 | | Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |
    test_1 | | | | MIG M. |
    test_1 | |===============================+======================+======================|
    test_1 | | 0 Tesla T4 On | 00000000:00:1E.0 Off | 0 |
    test_1 | | N/A 23C P8 9W / 70W | 0MiB / 15109MiB | 0% Default |
    test_1 | | | | N/A |
    test_1 | +-------------------------------+----------------------+----------------------+
    test_1 |
    test_1 | +-----------------------------------------------------------------------------+
    test_1 | | Processes: |
    test_1 | | GPU GI CI PID Type Process name GPU Memory |
    test_1 | | ID ID Usage |
    test_1 | |=============================================================================|
    test_1 | | No running processes found |
    test_1 | +-----------------------------------------------------------------------------+
    gpu_test_1 exited with code 0

GPU Minikube

Configure Minikube and start the kubernetes cluster

    minikube start --driver docker --container-runtime docker --gpus all

Verifying the cluster's GPU capability

- Confirm that the node reports GPU information

    kubectl describe node minikube
    ...
    Capacity:
      nvidia.com/gpu: 1
    ...

- Test running CUDA in the cluster

    $ cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: gpu-pod
    spec:
      restartPolicy: Never
      containers:
        - name: cuda-container
          image: nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda10.2
          resources:
            limits:
              nvidia.com/gpu: 1 # requesting 1 GPU
      tolerations:
        - key: nvidia.com/gpu
          operator: Exists
          effect: NoSchedule
    EOF

    $ kubectl logs gpu-pod

    [Vector addition of 50000 elements]
    Copy input data from the host memory to the CUDA device
    CUDA kernel launch with 196 blocks of 256 threads
    Copy output data from the CUDA device to the host memory
    Test PASSED
    Done

Submitting PyTorch training jobs with kueue

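Introduction to kueue

kueue is an open-source project from a Kubernetes special interest group (SIG). It is a Kubernetes-based job queueing system that aims to simplify and optimize job management in Kubernetes. Its main features are:

- Job management: priority-based job queues with different queueing strategies, such as StrictFIFO and BestEffortFIFO.

- Resource management: fair sharing and preemption of resources, plus resource management policies across tenants.

- Dynamic resource reclaim: a mechanism that releases quota dynamically as jobs complete.

- Resource flexibility: borrowing and preemption of resources within a ClusterQueue and a Cohort.

- Built-in integrations: out-of-the-box support for common job types such as BatchJob, Kubeflow training jobs, RayJob, RayCluster, and JobSet.

- System monitoring: built-in Prometheus metrics for observing system state.

- Admission checks: a mechanism that influences whether a workload can be admitted.

- Advanced autoscaling support: integration with cluster-autoscaler's provisioningRequest, managed through admission checks.

- Sequential admission: a simple all-or-nothing scheduling implementation.

- Partial admission: lets a job run with reduced parallelism, depending on available quota.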

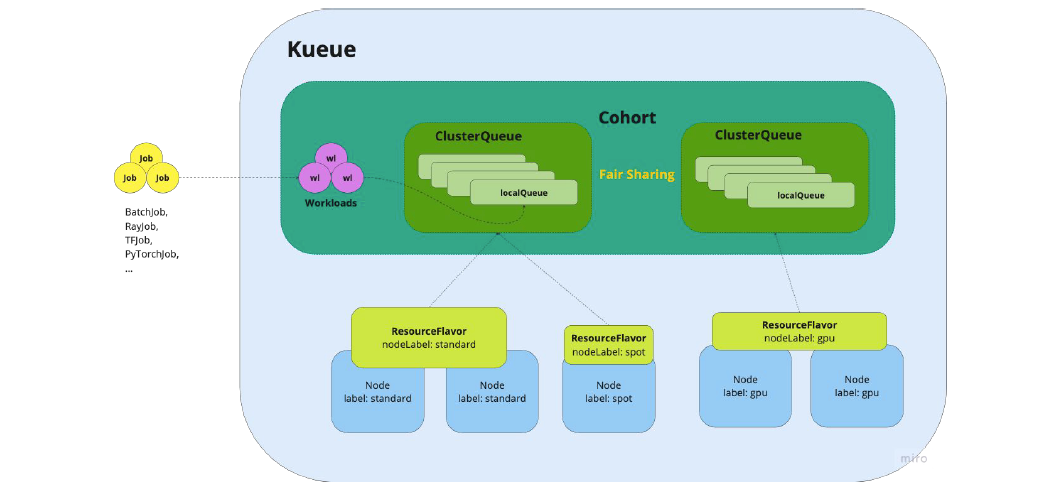

kueue的架構(gòu)圖如下:

圖片

圖片

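kueue extends its Workload abstraction to support BatchJob, Kubeflow training jobs, RayJob, RayCluster, JobSet, and other job types. LocalQueues share the resources of a ClusterQueue, and jobs are ultimately submitted to a LocalQueue for scheduling and execution. Different ClusterQueues can share resources with each other through a Cohort. The chain Cohort -> ClusterQueue -> LocalQueue -> Node thus provides resource sharing at several levels, which supports scheduling AI, ML, and Ray-related jobs in a k8s cluster. kueue distinguishes administrators from regular users. Administrators manage resources such as ResourceFlavor, ClusterQueue, and LocalQueue, as well as the quota of each resource pool. Regular users submit batch jobs or the various kinds of Ray jobs.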
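To illustrate the difference between the StrictFIFO and BestEffortFIFO queueing strategies mentioned in the feature list, here is a toy sketch of quota-based admission. It is not kueue's implementation: it ignores priorities, Cohort borrowing, and preemption, and the `Workload` class, the `admit` function, and the job names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    gpus: int  # GPUs requested by the workload


def admit(pending, quota, strategy="BestEffortFIFO"):
    """Admit workloads in arrival order against a fixed GPU quota.

    StrictFIFO: the first workload that does not fit blocks everything behind it.
    BestEffortFIFO: a workload that does not fit is skipped; later ones may still fit.
    """
    if strategy not in ("StrictFIFO", "BestEffortFIFO"):
        raise ValueError(f"unknown strategy: {strategy}")
    admitted, free = [], quota
    for wl in pending:
        if wl.gpus <= free:
            admitted.append(wl.name)
            free -= wl.gpus
        elif strategy == "StrictFIFO":
            break  # head-of-line blocking
    return admitted


# Three pending jobs in arrival order; the second one asks for more than the quota allows.
queue = [Workload("job-a", 1), Workload("job-b", 4), Workload("job-c", 1)]

if __name__ == "__main__":
    for strategy in ("StrictFIFO", "BestEffortFIFO"):
        print(strategy, admit(queue, quota=2, strategy=strategy))
```

With a quota of 2 GPUs, the strict variant stops at `job-b`, while the best-effort variant skips it and still admits `job-c`.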

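Running a PyTorch training job

Installing kueue

k8s 1.22+ is required. Install with the following command:

    kubectl apply --server-side -f https://github.com/kubernetes-sigs/kueue/releases/download/v0.6.0/manifests.yaml

Configuring the cluster quota

    git clone https://github.com/kubernetes-sigs/kueue.git && cd kueue
    kubectl apply -f examples/admin/single-clusterqueue-setup.yaml

Strictly speaking, PyTorch training jobs can be submitted without kueue, because PyTorchJob is a CRD provided by the kubeflow training-operator. The benefit of installing kueue is that it supports more kinds of jobs.

Besides kubeflow jobs it also supports kuberay jobs, and it has a built-in administrator role that makes it easier to configure the cluster and to set limits on and manage cluster resources. It supports priority queues and job preemption, which makes it better suited to scheduling and managing AI and ML jobs. The cluster quota installed above sets limits on jobs, so that jobs requesting too many resources are rejected quickly, before they start running.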
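A job is associated with a kueue LocalQueue through the `kueue.x-k8s.io/queue-name` label on its metadata. The sketch below shows one way to add that label to a manifest represented as a Python dict before applying it. The queue name `user-queue` is an assumption based on the kueue example setup, so check which LocalQueue actually exists in the job's namespace. `with_queue` is a hypothetical helper.

```python
import copy
import json

# Label kueue uses to associate a job with a LocalQueue.
QUEUE_LABEL = "kueue.x-k8s.io/queue-name"


def with_queue(manifest: dict, queue_name: str) -> dict:
    """Return a copy of the manifest labelled for the given LocalQueue."""
    labelled = copy.deepcopy(manifest)
    labels = labelled.setdefault("metadata", {}).setdefault("labels", {})
    labels[QUEUE_LABEL] = queue_name
    return labelled


# Minimal stand-in for the PyTorchJob manifest used in this article (spec omitted).
job = {
    "apiVersion": "kubeflow.org/v1",
    "kind": "PyTorchJob",
    "metadata": {"name": "pytorch-simple", "namespace": "kubeflow"},
}

if __name__ == "__main__":
    # "user-queue" is an assumed LocalQueue name, not something this article creates.
    print(json.dumps(with_queue(job, "user-queue"), indent=2))
```

Because `kubectl apply -f` also accepts JSON, the printed document could be piped straight to it once the full spec is filled in.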

Installing kubeflow's training-operator

    kubectl apply -k "github.com/kubeflow/training-operator/manifests/overlays/standalone"

Running the FashionMNIST training job

FashionMNIST is a commonly used dataset for image classification. It is similar to the classic MNIST dataset but contains more complex clothing categories.

- FashionMNIST contains clothing images in 10 categories. Each category has 6,000 training images and 1,000 test images, for a total of 60,000 training images and 10,000 test images.

- Each image is a 28x28 pixel grayscale image showing a type of clothing, such as a T-shirt, trousers, a shirt, or a dress.
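The numbers above can be checked with a little arithmetic, which also explains the `4*4*50` input size of the first fully connected layer in the training script further down. This is a small worked sketch: the batch size of 32 and the 5x5 convolutions with 2x2 pooling are taken from the job manifest and the `Net` class in this article, while the helper names are made up for illustration.

```python
import math

NUM_CLASSES = 10
TRAIN_PER_CLASS = 6_000
TEST_PER_CLASS = 1_000
IMAGE_SIDE = 28


def batches_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of mini-batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


def conv_pool_side(side: int, kernel: int = 5, pool: int = 2) -> int:
    """Side length after an unpadded, stride-1 convolution followed by max pooling."""
    return (side - kernel + 1) // pool


train_images = NUM_CLASSES * TRAIN_PER_CLASS        # 60,000
test_images = NUM_CLASSES * TEST_PER_CLASS          # 10,000
side = conv_pool_side(conv_pool_side(IMAGE_SIDE))   # 28 -> 12 -> 4
flat_features = side * side * 50                    # 50 channels after the second convolution

if __name__ == "__main__":
    print(train_images, test_images, batches_per_epoch(train_images, 32), flat_features)
```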

We now submit a PyTorchJob on kueue. To keep the logs and results produced during training, we use the openebs hostpath storage class to persist the training data on the node, since we cannot log in to the training nodes to inspect them after the job finishes. Create the following resource file:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pytorch-results-pvc
      namespace: kubeflow  # must be the same namespace as the PyTorchJob below
    spec:
      storageClassName: openebs-hostpath
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
    ---
    apiVersion: "kubeflow.org/v1"
    kind: PyTorchJob
    metadata:
      name: pytorch-simple
      namespace: kubeflow
    spec:
      pytorchReplicaSpecs:
        Master:
          replicas: 1
          restartPolicy: OnFailure
          template:
            spec:
              containers:
                - name: pytorch
                  image: pytorch-mnist:2.2.1-cuda12.1-cudnn8-runtime
                  imagePullPolicy: IfNotPresent
                  command:
                    - "python3"
                    - "/opt/pytorch-mnist/mnist.py"
                    - "--epochs=10"
                    - "--batch-size"
                    - "32"
                    - "--test-batch-size"
                    - "64"
                    - "--lr"
                    - "0.01"
                    - "--momentum"
                    - "0.9"
                    - "--log-interval"
                    - "10"
                    - "--save-model"
                    - "--log-path"
                    - "/results/master.log"
                  volumeMounts:
                    - name: result-volume
                      mountPath: /results
              volumes:
                - name: result-volume
                  persistentVolumeClaim:
                    claimName: pytorch-results-pvc
        Worker:
          replicas: 1
          restartPolicy: OnFailure
          template:
            spec:
              containers:
                - name: pytorch
                  image: pytorch-mnist:2.2.1-cuda12.1-cudnn8-runtime
                  imagePullPolicy: IfNotPresent
                  command:
                    - "python3"
                    - "/opt/pytorch-mnist/mnist.py"
                    - "--epochs=10"
                    - "--batch-size"
                    - "32"
                    - "--test-batch-size"
                    - "64"
                    - "--lr"
                    - "0.01"
                    - "--momentum"
                    - "0.9"
                    - "--log-interval"
                    - "10"
                    - "--save-model"
                    - "--log-path"
                    - "/results/worker.log"
                  volumeMounts:
                    - name: result-volume
                      mountPath: /results
              volumes:
                - name: result-volume
                  persistentVolumeClaim:
                    claimName: pytorch-results-pvc

The pytorch-mnist:2.2.1-cuda12.1-cudnn8-runtime image used here packages code that runs a CNN training task on PyTorch. The code is as follows:

    from __future__ import print_function

    import argparse
    import logging
    import os

    from torchvision import datasets, transforms
    import torch
    import torch.distributed as dist
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim

    # Read from the environment; a value greater than 1 enables distributed training.
    WORLD_SIZE = int(os.environ.get("WORLD_SIZE", 1))


    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = nn.Conv2d(1, 20, 5, 1)
            self.conv2 = nn.Conv2d(20, 50, 5, 1)
            self.fc1 = nn.Linear(4*4*50, 500)
            self.fc2 = nn.Linear(500, 10)

        def forward(self, x):
            x = F.relu(self.conv1(x))
            x = F.max_pool2d(x, 2, 2)
            x = F.relu(self.conv2(x))
            x = F.max_pool2d(x, 2, 2)
            x = x.view(-1, 4*4*50)
            x = F.relu(self.fc1(x))
            x = self.fc2(x)
            return F.log_softmax(x, dim=1)


    def train(args, model, device, train_loader, optimizer, epoch):
        model.train()
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()
            output = model(data)
            loss = F.nll_loss(output, target)
            loss.backward()
            optimizer.step()
            if batch_idx % args.log_interval == 0:
                msg = "Train Epoch: {} [{}/{} ({:.0f}%)]\tloss={:.4f}".format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item())
                logging.info(msg)
                niter = epoch * len(train_loader) + batch_idx


    def test(args, model, device, test_loader, epoch):
        model.eval()
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_loader:
                data, target = data.to(device), target.to(device)
                output = model(data)
                test_loss += F.nll_loss(output, target, reduction="sum").item()  # sum up batch loss
                pred = output.max(1, keepdim=True)[1]  # get the index of the max log-probability
                correct += pred.eq(target.view_as(pred)).sum().item()

        test_loss /= len(test_loader.dataset)
        logging.info("{{metricName: accuracy, metricValue: {:.4f}}};{{metricName: loss, metricValue: {:.4f}}}\n".format(
            float(correct) / len(test_loader.dataset), test_loss))


    def should_distribute():
        return dist.is_available() and WORLD_SIZE > 1


    def is_distributed():
        return dist.is_available() and dist.is_initialized()


    def main():
        # Training settings
        parser = argparse.ArgumentParser(description="PyTorch MNIST Example")
        parser.add_argument("--batch-size", type=int, default=64, metavar="N",
                            help="input batch size for training (default: 64)")
        parser.add_argument("--test-batch-size", type=int, default=1000, metavar="N",
                            help="input batch size for testing (default: 1000)")
        parser.add_argument("--epochs", type=int, default=10, metavar="N",
                            help="number of epochs to train (default: 10)")
        parser.add_argument("--lr", type=float, default=0.01, metavar="LR",
                            help="learning rate (default: 0.01)")
        parser.add_argument("--momentum", type=float, default=0.5, metavar="M",
                            help="SGD momentum (default: 0.5)")
        parser.add_argument("--no-cuda", action="store_true", default=False,
                            help="disables CUDA training")
        parser.add_argument("--seed", type=int, default=1, metavar="S",
                            help="random seed (default: 1)")
        parser.add_argument("--log-interval", type=int, default=10, metavar="N",
                            help="how many batches to wait before logging training status")
        parser.add_argument("--log-path", type=str, default="",
                            help="Path to save logs. Print to StdOut if log-path is not set")
        parser.add_argument("--save-model", action="store_true", default=False,
                            help="For Saving the current Model")
        if dist.is_available():
            parser.add_argument("--backend", type=str, help="Distributed backend",
                                choices=[dist.Backend.GLOO, dist.Backend.NCCL, dist.Backend.MPI],
                                default=dist.Backend.GLOO)
        args = parser.parse_args()

        # Use this format (%Y-%m-%dT%H:%M:%SZ) to record timestamp of the metrics.
        # If log_path is empty print log to StdOut, otherwise print log to the file.
        if args.log_path == "":
            logging.basicConfig(
                format="%(asctime)s %(levelname)-8s %(message)s",
                datefmt="%Y-%m-%dT%H:%M:%SZ",
                level=logging.DEBUG)
        else:
            logging.basicConfig(
                format="%(asctime)s %(levelname)-8s %(message)s",
                datefmt="%Y-%m-%dT%H:%M:%SZ",
                level=logging.DEBUG,
                filename=args.log_path)

        use_cuda = not args.no_cuda and torch.cuda.is_available()
        if use_cuda:
            print("Using CUDA")

        torch.manual_seed(args.seed)
        device = torch.device("cuda" if use_cuda else "cpu")

        if should_distribute():
            print("Using distributed PyTorch with {} backend".format(args.backend))
            dist.init_process_group(backend=args.backend)

        kwargs = {"num_workers": 1, "pin_memory": True} if use_cuda else {}
        train_loader = torch.utils.data.DataLoader(
            datasets.FashionMNIST("./data",
                                  train=True,
                                  download=True,
                                  transform=transforms.Compose([
                                      transforms.ToTensor()
                                  ])),
            batch_size=args.batch_size, shuffle=True, **kwargs)
        test_loader = torch.utils.data.DataLoader(
            datasets.FashionMNIST("./data",
                                  train=False,
                                  transform=transforms.Compose([
                                      transforms.ToTensor()
                                  ])),
            batch_size=args.test_batch_size, shuffle=False, **kwargs)

        model = Net().to(device)

        if is_distributed():
            # DistributedDataParallelCPU no longer exists in current PyTorch releases;
            # DistributedDataParallel handles both CPU and GPU models.
            model = nn.parallel.DistributedDataParallel(model)

        optimizer = optim.SGD(model.parameters(), lr=args.lr, momentum=args.momentum)

        for epoch in range(1, args.epochs + 1):
            train(args, model, device, train_loader, optimizer, epoch)
            test(args, model, device, test_loader, epoch)

        if args.save_model:
            torch.save(model.state_dict(), "mnist_cnn.pt")


    if __name__ == "__main__":
        main()

Submit the training job to the k8s cluster

    kubectl apply -f sample-pytorchjob.yaml

After the job is submitted successfully, two training pods appear, one for the master and one for the worker:

    $ kubectl get po
    NAME                      READY   STATUS    RESTARTS   AGE
    pytorch-simple-master-0   1/1     Running   0          5m5s
    pytorch-simple-worker-0   1/1     Running   0          5m5s

Checking the GPU on the host at this point, the laptop's cooling fans become clearly audible. Running nvidia-smi shows two Python processes executing. Once they finish, the model file mnist_cnn.pt is generated.

    $ nvidia-smi
    Mon Mar 4 10:18:39 2024
    +---------------------------------------------------------------------------------------+
    | NVIDIA-SMI 535.161.07 Driver Version: 535.161.07 CUDA Version: 12.2 |
    |-----------------------------------------+----------------------+----------------------+
    | GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |
    | Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |
    | | | MIG M. |
    |=========================================+======================+======================|
    | 0 NVIDIA GeForce RTX 3060 ... Off | 00000000:01:00.0 Off | N/A |
    | N/A 39C P0 24W / 80W | 753MiB / 6144MiB | 1% Default |
    | | | N/A |
    +-----------------------------------------+----------------------+----------------------+
    +---------------------------------------------------------------------------------------+
    | Processes: |
    | GPU GI CI PID Type Process name GPU Memory |
    | ID ID Usage |
    |=======================================================================================|
    | 0 N/A N/A 1674 G /usr/lib/xorg/Xorg 219MiB |
    | 0 N/A N/A 1961 G /usr/bin/gnome-shell 47MiB |
    | 0 N/A N/A 3151 G gnome-control-center 2MiB |
    | 0 N/A N/A 4177 G ...irefox/3836/usr/lib/firefox/firefox 149MiB |
    | 0 N/A N/A 14476 C python3 148MiB |
    | 0 N/A N/A 14998 C python3 170MiB |
    +---------------------------------------------------------------------------------------+

When submitting and running jobs, make sure the CUDA version and the PyTorch version match. The Dockerfile in the official demo looks like this:

    FROM pytorch/pytorch:1.0-cuda10.0-cudnn7-runtime

    ADD examples/v1beta1/pytorch-mnist /opt/pytorch-mnist
    WORKDIR /opt/pytorch-mnist

    # Add folder for the logs.
    RUN mkdir /katib

    RUN chgrp -R 0 /opt/pytorch-mnist \
      && chmod -R g+rwX /opt/pytorch-mnist \
      && chgrp -R 0 /katib \
      && chmod -R g+rwX /katib

    ENTRYPOINT ["python3", "/opt/pytorch-mnist/mnist.py"]

This image trains with PyTorch 1.0 and CUDA 10, whereas our environment actually uses CUDA 12. Building directly on this base image therefore does not work: the job stays in the Running state and never finishes. In addition, to avoid downloading the dataset again on every run, we download it in advance and package it into the image. The modified Dockerfile is as follows:

    FROM pytorch/pytorch:2.2.1-cuda12.1-cudnn8-runtime

    ADD . /opt/pytorch-mnist
    WORKDIR /opt/pytorch-mnist

    # Add folder for the logs.
    RUN mkdir /katib

    RUN chgrp -R 0 /opt/pytorch-mnist \
      && chmod -R g+rwX /opt/pytorch-mnist \
      && chgrp -R 0 /katib \
      && chmod -R g+rwX /katib

    ENTRYPOINT ["python3", "/opt/pytorch-mnist/mnist.py"]

Using the mnist_cnn.pt model file produced at the end of training for prediction and testing gives the following result: {metricName: accuracy, metricValue: 0.9039};{metricName: loss, metricValue: 0.2756}. In other words, the model's accuracy is 90.39% and its loss is 0.2756, which shows that the trained model performs well on the FashionMNIST dataset. The epoch parameter matters during training. It is the number of passes over the training data: too few passes give poor results, while too many can lead to overfitting. When testing, we can adjust this parameter to control how long training runs.
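The metric line quoted above follows the format that the `test` function of the training script writes to the log. The following is a minimal sketch of turning such a line into numbers, for example to compare runs with different epoch counts. The regular expression and the `parse_metrics` helper are written for this illustration and only cover the format shown here.

```python
import re

# Matches fragments such as "{metricName: accuracy, metricValue: 0.9039}".
METRIC_RE = re.compile(r"\{metricName: (\w+), metricValue: ([-+]?\d*\.?\d+)\}")


def parse_metrics(line: str) -> dict:
    """Extract metric names and float values from one log line."""
    return {name: float(value) for name, value in METRIC_RE.findall(line)}


line = "{metricName: accuracy, metricValue: 0.9039};{metricName: loss, metricValue: 0.2756}"
metrics = parse_metrics(line)

if __name__ == "__main__":
    print("accuracy: {:.2%}, loss: {:.4f}".format(metrics["accuracy"], metrics["loss"]))
```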

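kueue uses webhooks to apply admission control and resource limits to GPU workloads such as AI and ML jobs, and it introduces the notion of tenant isolation, which opens up richer scenarios for GPU support on k8s. If the laptop's GPU is powerful enough, open-source large models such as chatglm can be deployed into the k8s cluster to build a personal, offline large-model service.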

Submitting PyTorch training jobs across clusters with karmada

Creating multiple k8s clusters

For multi-cluster management we can use karmada, with member2 as the control-plane host cluster and member3 and member4 as member clusters. After the NVIDIA GPU setup for minikube is complete, create the three clusters with the following commands.

    docker network create --driver=bridge --subnet=xxx.xxx.xxx.0/24 --ip-range=xxx.xxx.xxx.0/24 minikube-net

    minikube start --driver docker --cpus max --memory max --container-runtime docker --gpus all --network minikube-net --subnet='xxx.xxx.xxx.xxx' --mount-string="/run/udev:/run/udev" --mount -p member2 --static-ip='xxx.xxx.xxx.xxx'

    minikube start --driver docker --cpus max --memory max --container-runtime docker --gpus all --network minikube-net --subnet='xxx.xxx.xxx.xxx' --mount-string="/run/udev:/run/udev" --mount -p member3 --static-ip='xxx.xxx.xxx.xxx'

    minikube start --driver docker --cpus max --memory max --container-runtime docker --gpus all --network minikube-net --subnet='xxx.xxx.xxx.xxx' --mount-string="/run/udev:/run/udev" --mount -p member4 --static-ip='xxx.xxx.xxx.xxx'

    $ minikube profile list
    |---------|-----------|---------|-----------------|------|---------|---------|-------|--------|
    | Profile | VM Driver | Runtime | IP              | Port | Version | Status  | Nodes | Active |
    |---------|-----------|---------|-----------------|------|---------|---------|-------|--------|
    | member2 | docker    | docker  | xxx.xxx.xxx.xxx | 8443 | v1.28.3 | Running | 1     |        |
    | member3 | docker    | docker  | xxx.xxx.xxx.xxx | 8443 | v1.28.3 | Running | 1     |        |
    | member4 | docker    | docker  | xxx.xxx.xxx.xxx | 8443 | v1.28.3 | Running | 1     |        |
    |---------|-----------|---------|-----------------|------|---------|---------|-------|--------|

Install the Training Operator and karmada in each of the three clusters. The Training Operator must also be installed on the karmada control plane, because only then can PyTorchJob resources be submitted there. Spreading a single PyTorch job across clusters makes service discovery and the communication between master and worker difficult, so here we only demonstrate submitting each PyTorch job to a single cluster and use the karmada control plane to schedule multiple PyTorch jobs to different clusters for training.

Create the training job on the karmada control plane:

    apiVersion: "kubeflow.org/v1"
    kind: PyTorchJob
    metadata:
      name: pytorch-simple
      namespace: kubeflow
    spec:
      pytorchReplicaSpecs:
        Master:
          replicas: 1
          restartPolicy: OnFailure
          template:
            spec:
              containers:
                - name: pytorch
                  image: pytorch-mnist:2.2.1-cuda12.1-cudnn8-runtime
                  imagePullPolicy: IfNotPresent
                  command:
                    - "python3"
                    - "/opt/pytorch-mnist/mnist.py"
                    - "--epochs=30"
                    - "--batch-size"
                    - "32"
                    - "--test-batch-size"
                    - "64"
                    - "--lr"
                    - "0.01"
                    - "--momentum"
                    - "0.9"
                    - "--log-interval"
                    - "10"
                    - "--save-model"
                    - "--log-path"
                    - "/opt/pytorch-mnist/master.log"
        Worker:
          replicas: 1
          restartPolicy: OnFailure
          template:
            spec:
              containers:
                - name: pytorch
                  image: pytorch-mnist:2.2.1-cuda12.1-cudnn8-runtime
                  imagePullPolicy: IfNotPresent
                  command:
                    - "python3"
                    - "/opt/pytorch-mnist/mnist.py"
                    - "--epochs=30"
                    - "--batch-size"
                    - "32"
                    - "--test-batch-size"
                    - "64"
                    - "--lr"
                    - "0.01"
                    - "--momentum"
                    - "0.9"
                    - "--log-interval"
                    - "10"
                    - "--save-model"
                    - "--log-path"
                    - "/opt/pytorch-mnist/worker.log"

Create the propagation policy on the karmada control plane:

    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: pytorchjob-propagation
      namespace: kubeflow
    spec:
      resourceSelectors:
        - apiVersion: kubeflow.org/v1
          kind: PyTorchJob
          name: pytorch-simple
          namespace: kubeflow
      placement:
        clusterAffinity:
          clusterNames:
            - member3
            - member4
        replicaScheduling:
          replicaDivisionPreference: Weighted
          replicaSchedulingType: Divided
          weightPreference:
            staticWeightList:
              - targetCluster:
                  clusterNames:
                    - member3
                weight: 1
              - targetCluster:
                  clusterNames:
                    - member4
                weight: 1

We can then see that the training job has been delivered to the member3 and member4 member clusters and executed there:

    $ kubectl karmada --kubeconfig ~/karmada-apiserver.config get po -n kubeflow
    NAME                                 CLUSTER   READY   STATUS      RESTARTS   AGE
    pytorch-simple-master-0              member3   0/1     Completed   0          7m51s
    pytorch-simple-worker-0              member3   0/1     Completed   0          7m51s
    training-operator-64c768746c-gvf9n   member3   1/1     Running     0          165m
    pytorch-simple-master-0              member4   0/1     Completed   0          7m51s
    pytorch-simple-worker-0              member4   0/1     Completed   0          7m51s
    training-operator-64c768746c-nrkdv   member4   1/1     Running     0          168m

Summary

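A local k8s GPU environment makes it convenient to develop and test AI workloads and puts an otherwise idle laptop GPU to good use. Frameworks such as kueue, karmada, kuberay, and ray make it possible to schedule GPUs and other heterogeneous compute in a cloud-native way. So far, each training job in this article is submitted to and runs inside a single k8s cluster. Real AI, ML, or large-model training is considerably more complex, and both the network layout and the technical architecture need careful design. Training and inference on k8s clusters with thousands or tens of thousands of GPUs requires solving problems that include, but are not limited to, the following:

- Network performance: traditional data center networks typically run at 10Gbps, which falls well short for large-model training and inference, so an RDMA (Remote Direct Memory Access) network is needed.

- GPU scheduling and configuration: how to schedule and manage GPUs in multi-cloud, multi-cluster scenarios.

- Monitoring and debugging: how to monitor and debug training jobs effectively, and how to handle failures and restore service.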
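To give a rough sense of why a 10Gbps link becomes a bottleneck, the sketch below estimates the bandwidth-only time of one ring all-reduce of the gradients, using the standard result that each worker transfers 2(N-1)/N times the payload. Every number here is an assumption chosen for illustration (a 7-billion-parameter model, 2-byte gradients, 8 workers, and a 200Gbps link as a stand-in for an RDMA-class network). Latency, protocol overhead, and overlap with computation are ignored.

```python
def ring_allreduce_seconds(payload_bytes: float, workers: int, link_gbps: float) -> float:
    """Bandwidth-only lower bound for one ring all-reduce.

    Each worker sends and receives 2 * (N - 1) / N of the payload.
    """
    if workers < 2:
        return 0.0
    bytes_per_second = link_gbps * 1e9 / 8
    traffic = 2 * (workers - 1) / workers * payload_bytes
    return traffic / bytes_per_second


# Assumed workload: 7e9 parameters with 2-byte (fp16) gradients.
grad_bytes = 7e9 * 2

if __name__ == "__main__":
    for gbps in (10, 200):  # 200 Gbps is an assumed figure, not a measurement
        seconds = ring_allreduce_seconds(grad_bytes, workers=8, link_gbps=gbps)
        print("{:>3} Gbps: {:.2f} s per all-reduce".format(gbps, seconds))
```

Under these assumptions a single gradient synchronization takes on the order of tens of seconds at 10Gbps, which would dominate each training step.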

References

1. [Go](https://go.dev/)

2. [Docker](https://docker.com)

3. [minikube](https://minikube.sigs.k8s.io/docs/tutorials/nvidia/)

4. [nvidia](https://docs.nvidia.com/datacenter/tesla/tesla-installation-notes/index.html)

5. [kubernetes](https://kubernetes.io/docs/tasks/manage-gpus/scheduling-gpus/)

6. [kueue](https://github.com/kubernetes-sigs/kueue)

7. [kubeflow](https://github.com/kubeflow/training-operator)

8. [ray](https://github.com/ray-project/ray)

9. [kuberay](https://github.com/ray-project/kuberay)

10. [karmada](https://github.com/karmada-io/karmada)

11. [kind](https://kind.sigs.k8s.io/)

12. [k3s](https://github.com/k3s-io/k3s)

13. [k3d](https://github.com/k3d-io/k3d)