ML engineer fine-tunes seven models at once and beats OpenAI's GPT-4

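Fine-tuning means taking a large language model (LLM) that has already been trained and training it further on a specific dataset. This "standing on the shoulders of giants" approach can deliver better results with a relatively small dataset and a lower training cost, and it avoids reinventing the wheel.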

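In the era of large models, prompt engineering, fine-tuning, and retrieval-augmented generation (RAG) are all important skills. For most individual users, prompt engineering is enough. If you want to integrate a large model into your own service, however, fine-tuning is the way to go, and because it demands more skill, it has become the arena where ML engineers test their mettle.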

Today, one ML engineer's fine-tuned models made it to the top of Hacker News (HN) with the post "My finetuned models beat OpenAI's GPT-4"!

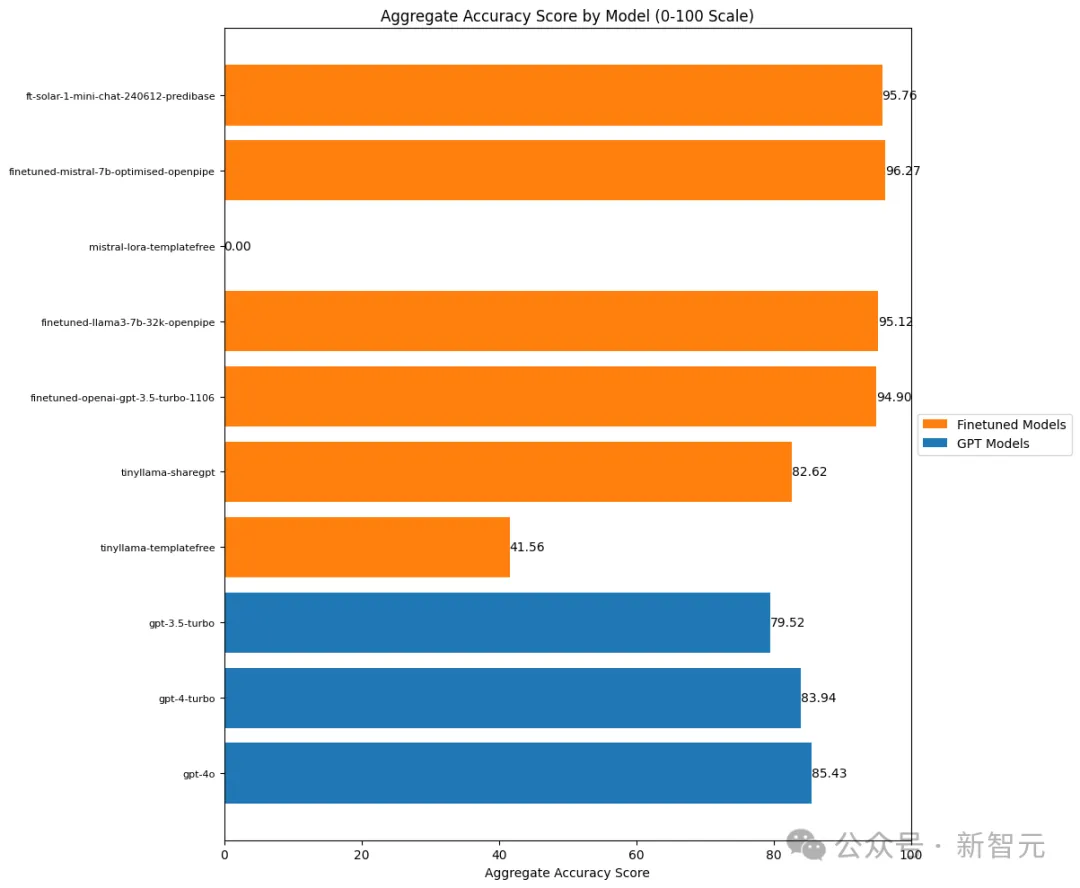

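In his blog post, engineer Alex Strick van Linschoten reports that, at least on his test data, fine-tuned versions of Mistral, Llama3, and Solar LLM were all more accurate than OpenAI's models.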

Original post: https://mlops.systems/posts/2024-07-01-full-finetuned-model-evaluation.html#start-date-accuracy

The author jokes that the post could just as well have been titled: "Finetuned models beat OpenAI, but evals were painful."

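He admits that there was a lot of code and that it ran slowly. This was also the first time he found himself weighing different fine-tuning choices against each other. Without some kind of system to manage this, the complexity of maintaining everything quickly starts to grow.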

Let's look at how he did it. (See the original post for the full code.)

Loading the dataset

All the data is stored in a public repository on the Hugging Face Hub.

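For these evaluations, the author uses the test split of the dataset. The models have never seen this data, so it gives a better picture of how they perform on new inputs.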

```python
test_dataset
```

```
Dataset({
    features: ['name', 'eventrefnumber', 'text', 'StartDate', 'eventtype', 'province', 'citydistrict', 'village', 'targetgroup', 'commander', 'position', 'minkilled', 'mincaptured', 'capturedcharacterisation', 'killedcharacterisation', 'killq', 'captureq', 'killcaptureraid', 'airstrike', 'noshotsfired', 'dataprocessed', 'flagged', 'glossarymeta', 'minleaderskilled', 'minfacilitatorskilled', 'minleaderscaptured', 'minfacilitatorscaptured', 'leaderq'],
    num_rows: 724
})
```

First, an extra column is added to the DataFrame, and then a prediction is made for every row in the dataset. A copy of each prediction is stored in that column so that this compute-intensive step never has to be repeated.

Before that, though, the data needs to be assembled into Pydantic objects so that data validation is taken care of.
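The post relies on Pydantic for this step, and the exact model definition lives in the original notebook. As a rough, dependency-free illustration of what such a model does, here is a minimal sketch that uses a standard-library dataclass instead of Pydantic. The field names are taken from the printed objects below. The types, the non-negative-count check, and the to_json helper (a stand-in for Pydantic's model_dump_json) are assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date
from typing import AbstractSet, Dict, Set

# Hypothetical: the integer fields we choose to validate
COUNT_FIELDS = ("min_killed", "min_captured", "min_leaders_killed", "min_leaders_captured")


@dataclass
class IsafEvent:
    name: str
    text: str
    start_date: date
    event_type: Set[str]
    province: Set[str]
    target_group: Set[str]
    min_killed: int
    min_captured: int
    killq: bool
    captureq: bool
    killcaptureraid: bool
    airstrike: bool
    noshotsfired: bool
    min_leaders_killed: int
    min_leaders_captured: int
    predictions: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Accept any iterable (e.g. a JSON list) for the multi-valued fields
        self.event_type = set(self.event_type)
        self.province = set(self.province)
        self.target_group = set(self.target_group)
        # Counts must be non-negative integers (bool is rejected explicitly)
        for name in COUNT_FIELDS:
            value = getattr(self, name)
            if isinstance(value, bool) or not isinstance(value, int) or value < 0:
                raise ValueError(f"{name} must be a non-negative int, got {value!r}")

    def to_json(self, exclude: AbstractSet[str] = frozenset()) -> str:
        """Serialise to compact JSON, roughly like model_dump_json(exclude=...)."""
        def encode(value):
            if isinstance(value, set):
                return sorted(value)  # JSON has no set type; sort for stable output
            if isinstance(value, date):
                return value.isoformat()
            return value

        data = {k: encode(v) for k, v in asdict(self).items() if k not in exclude}
        return json.dumps(data, separators=(",", ":"))
```

Sorting the set-valued fields keeps the JSON output deterministic, which makes predictions easier to compare with the ground truth.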

```
[
    IsafEvent(
        name='5',
        text='2013-01-S-025\n\nKABUL, Afghanistan (Jan. 25, 2013)\nDuring a security operation in Andar district, Ghazni province, yesterday, an Afghan and coalition force killed the Taliban leader, Alaudin. Alaudin oversaw a group of insurgents responsible for conducting remote-controlled improvised explosive device and small-arms fire attacks against Afghan and coalition forces. Prior to his death, Alaudin was planning attacks against Afghan National Police in Ghazni province.',
        start_date=datetime.date(2013, 1, 24),
        event_type={'insurgentskilled'},
        province={'ghazni'},
        target_group={'taliban'},
        min_killed=1,
        min_captured=0,
        killq=True,
        captureq=False,
        killcaptureraid=False,
        airstrike=False,
        noshotsfired=False,
        min_leaders_killed=1,
        min_leaders_captured=0,
        predictions={}
    ),
    IsafEvent(
        name='2',
        text='2011-11-S-034\nISAF Joint Command - Afghanistan\nFor Immediate Release\n\nKABUL, Afghanistan (Nov. 20, 2011)\nA coalition security force detained numerous suspected insurgents during an operation in Marjeh district, Helmand province, yesterday. The force conducted the operation after receiving information that a group of insurgents were at a compound in the area. After calling for the men inside to come out peacefully, the insurgents emerged and were detained without incident.',
        start_date=datetime.date(2011, 11, 19),
        event_type={'detention'},
        province={'helmand'},
        target_group={''},
        min_killed=0,
        min_captured=4,
        killq=False,
        captureq=True,
        killcaptureraid=True,
        airstrike=False,
        noshotsfired=False,
        min_leaders_killed=0,
        min_leaders_captured=0,
        predictions={}
    )
]
```

So when making predictions, we want the model to return a JSON string like this:

```python
json_str = events[0].model_dump_json(exclude={"text", "predictions"})
print(json_str)
```

```
{"name":"5","start_date":"2013-01-24","event_type":["insurgentskilled"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":false,"airstrike":false,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}
```

The full evaluation starts with the GPT models, which need a more elaborate prompt to produce the desired results.

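Because the GPT models were never trained or fine-tuned to respond to the specific prompt used by the fine-tuned models, that prompt can't simply be reused.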

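This raises an interesting question: how much effort should go into engineering the GPT prompt to match the fine-tuned models' accuracy? Put differently, is there really a fair way to compare models that take different prompts?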

Trying OpenAI's GPT-4 and GPT-4 Turbo shows how long the prompt has to be to give the GPT models a chance of competing with the fine-tuned models.

Ideally the author would have added more examples to the context, but he also didn't want to drive up token usage.

```python
from openai import OpenAI
from rich import print
import json
import os


def query_openai(article_text: str, model: str) -> str:
    query = (
        f"The following is a press release issued by ISAF (formerly operating in Afghanistan):\n{article_text}\n\n"
        "## Extraction request\n"
        "Please extract the following information from the press release:\n"
        "- The name of the event (summarising the event / text as a headline)\n"
        "- The start date of the event\n"
        "- The event type(s)\n"
        "- The province(s) in which the event occurred\n"
        "- The target group(s) of the event\n"
        "- The minimum number of people killed during the event\n"
        "- The minimum number of people captured during the event\n"
        "- Whether someone was killed or not during the event\n"
        "- Whether someone was captured or not during the event\n"
        "- Whether the event was a so-called 'kill-capture raid'\n"
        "- Whether an airstrike was used during the event\n"
        "- Whether no shots were fired during the event\n"
        "- The minimum number of leaders killed during the event\n"
        "- The minimum number of leaders captured during the event\n\n"
        "## Annotation notes:\n"
        "- A 'faciliator' is not a leader.\n"
        "- If a press release states that 'insurgents' were detained without further "
        "details, assign a minimum number of two detained. Interpret 'a couple' as "
        "two. Interpret 'several' as at least three, even though it may sometimes "
        "refer to seven or eight. Classify the terms 'a few', 'some', 'a group', 'a "
        "small group', and 'multiple' as denoting at least three, even if they "
        "sometimes refer to larger numbers. Choose the smaller number if no other "
        "information is available in the press release to come up with a minimally "
        "acceptable figure. Interpret 'numerous' and 'a handful' as at least four, "
        "and 'a large number' as at least five.\n\n"
        "## Example:\n"
        "Article text: 'ISAF Joint Command Evening Operational Update Feb. 19, 2011\nISAF Joint Command - "
        "Afghanistan\u20282011-02-S-143\u2028For Immediate Release \u2028\u2028KABUL, Afghanistan (Feb. 19)\u2028\u2028ISAF "
        "service members at a compound in Sangin district, Helmand province observed numerous insurgents north and south of "
        "their position talking on radios today. After gaining positive identification of the insurgent positions, the "
        "coalition troops engaged, killing several insurgents. Later, the ISAF troops observed more insurgents positioning "
        "in the area with weapons. After positive identification, coalition forces continued firing on the various insurgent "
        "positions, resulting in several more insurgents being killed.'\n\n"
        'Output: `{"name":"Several insurgents killed in '
        'Helmand","start_date":"2011-02-18","event_type":["insurgentskilled"],"province":["helmand"],"target_group":[""],"mi'
        'n_killed":6,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":false,"airstrike":false,"noshotsfired"'
        ':false,"min_leaders_killed":0,"min_leaders_captured":0}`'
    )

    # set up the prediction harness
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

    response = client.chat.completions.create(
        model=model,
        response_format={"type": "json_object"},
        messages=[
            {
                "role": "system",
                "content": "You are an expert at identifying events in a press release. You are precise "
                "and always make sure you are correct, drawing inference from the text of the "
                "press release.\n\n You always return a JSON string with the following schema: "
                "## JSON Schema details\n"
                "Here is some of the schema for the JSON output string you "
                "should make use of: event_types = ['airstrike', 'detention', "
                "'captureandkill', 'insurgentskilled', 'exchangeoffire', 'civiliancasualty'], "
                "provinces = ['badakhshan', 'badghis', 'baghlan', 'balkh', 'bamyan', "
                "'day_kundi', 'farah', 'faryab', 'ghazni', 'ghor', 'helmand', 'herat', "
                "'jowzjan', 'kabul', 'kandahar', 'kapisa', 'khost', 'kunar', 'kunduz', "
                "'laghman', 'logar', 'nangarhar', 'nimroz', 'nuristan', 'paktya', 'paktika', "
                "'panjshir', 'parwan', 'samangan', 'sar_e_pul', 'takhar', 'uruzgan', "
                "'wardak', 'zabul'], target_groups = ['taliban', 'haqqani', 'criminals', "
                "'aq', 'hig', 'let', 'imu', 'judq', 'iju', 'hik', 'ttp', 'other']\n\n",
            },
            {"role": "user", "content": query},
        ],
        temperature=1,
    )
    return response.choices[0].message.content
```

A simple example confirms that the function works:

```python
json_str = query_openai(events[0].text, "gpt-4o")
print(json.loads(json_str))
```

```
{
    'name': 'Taliban leader Alaudin killed in Ghazni',
    'start_date': '2013-01-24',
    'event_type': ['insurgentskilled'],
    'province': ['ghazni'],
    'target_group': ['taliban'],
    'min_killed': 1,
    'min_captured': 0,
    'killq': True,
    'captureq': False,
    'killcaptureraid': True,
    'airstrike': False,
    'noshotsfired': False,
    'min_leaders_killed': 1,
    'min_leaders_captured': 0
}
```

As expected, the model works and returns a JSON string.

Next comes a routine that iterates over all the test data, gets a prediction for each event, and stores the predictions in the Pydantic objects.

Batch predictions should run asynchronously: there are a lot of events, and running them one at a time would take far too long.

The author also added some retry logic to the function to cope with rate limits on the GPT-3.5-turbo model.
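The author's batching code isn't reproduced in the post. A minimal standard-library sketch of the same pattern (concurrent requests, a cap on concurrency, exponential-backoff retries, and skipping events that already have a stored prediction) might look like the following. RateLimitError, with_retries, fill_predictions, and the dict-shaped events are all hypothetical; with a real SDK you would catch that SDK's own rate-limit exception and call its async client instead.

```python
import asyncio
import random


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's rate-limit exception."""


async def with_retries(make_call, max_attempts=5, base_delay=1.0):
    """Await make_call(), retrying on RateLimitError with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return await make_call()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the error to the caller
            # Wait base_delay * 2**attempt, plus random jitter of up to the same amount
            await asyncio.sleep(base_delay * 2**attempt * (1 + random.random()))


async def fill_predictions(events, model, predict, max_concurrency=8, **retry_kwargs):
    """Store predict(event["text"]) under event["predictions"][model] for every event."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def one(event):
        if model in event["predictions"]:
            return  # already predicted (e.g. before a crash): skip the expensive call
        async with semaphore:  # at most max_concurrency requests in flight
            result = await with_retries(lambda: predict(event["text"]), **retry_kwargs)
        event["predictions"][model] = result

    await asyncio.gather(*(one(event) for event in events))
```

Skipping events that already hold a prediction for the model means a crashed run can simply be restarted without paying for the same calls twice.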

As we can now see, each event now carries three predictions.

```python
print(events[0])
```

```
IsafEvent(
    name='5',
    text='2013-01-S-025\n\nKABUL, Afghanistan (Jan. 25, 2013)\nDuring a security operation in Andar district, Ghazni province, yesterday, an Afghan and coalition force killed the Taliban leader, Alaudin. Alaudin oversaw a group of insurgents responsible for conducting remote-controlled improvised explosive device and small-arms fire attacks against Afghan and coalition forces. Prior to his death, Alaudin was planning attacks against Afghan National Police in Ghazni province.',
    start_date=datetime.date(2013, 1, 24),
    event_type={'insurgentskilled'},
    province={'ghazni'},
    target_group={'taliban'},
    min_killed=1,
    min_captured=0,
    killq=True,
    captureq=False,
    killcaptureraid=False,
    airstrike=False,
    noshotsfired=False,
    min_leaders_killed=1,
    min_leaders_captured=0,
    predictions={
        'gpt-4o': '{\n "name": "Taliban leader Alaudin killed in Ghazni",\n "start_date": "2013-01-24",\n "event_type": ["insurgentskilled", "captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": true,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}',
        'gpt-4-turbo': '{\n "name": "Taliban leader Alaudin killed in Ghazni",\n "start_date": "2013-01-24",\n "event_type": ["captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": true,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}',
        'gpt-3.5-turbo': '{\n "name": "Taliban leader Alaudin killed in Ghazni province",\n "start_date": "2013-01-24",\n "event_type": ["captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": false,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}'
    }
)
```

All the predictions now live only in memory, so it's time to commit them to a dataset and push it to the Hugging Face Hub, in case the notebook crashes, the local machine shuts down, or something else goes wrong.

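The author wrote a function for this, since the same step has to be repeated for the other models. It is a bit verbose, but deliberately so: that way every step is easy to follow.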

A more concise, more abstract convert_to_dataset function might look like this:

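```python
from datetime import date
from typing import List, get_origin

from datasets import Dataset
from pydantic import BaseModel


def convert_to_dataset(data: List[BaseModel]) -> Dataset:
    dataset_dict = {}
    # Pydantic v2 API (the rest of the post uses model_dump_json):
    # field metadata lives on the model class in model_fields
    for field_name, field_info in type(data[0]).model_fields.items():
        field_type = field_info.annotation
        values = [getattr(item, field_name) for item in data]
        if field_type in (str, int, float, bool, date):
            # Primitive values can be stored as-is
            dataset_dict[field_name] = values
        elif field_type is set or get_origin(field_type) is set:
            # Arrow has no set type, so store sets as lists
            dataset_dict[field_name] = [list(v) for v in values]
        elif isinstance(field_type, type) and issubclass(field_type, BaseModel):
            # Nested models become plain dicts
            dataset_dict[field_name] = [v.model_dump() for v in values]
        else:
            dataset_dict[field_name] = values
    return Dataset.from_dict(dataset_dict)
```

For now, though, the data goes straight to the Hub.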

```python
convert_and_push_dataset(events, "isafpressreleases_with_preds", split_name="test")
```

Adding predictions from the fine-tuned models

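With the baseline OpenAI models in place, it's time to add the models fine-tuned earlier, both local models and models hosted by one-click fine-tuning providers.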

Reloading the predictions dataset

First load the dataset, then add predictions from some local models:

```python
from datasets import load_dataset

preds_test_data = load_dataset("strickvl/isafpressreleases_with_preds")[
    "test"
].to_list()
```

Predictions from the fine-tuned TinyLlama models

Inspecting the dataset now shows that the new models' predictions have been saved:

```python
from rich import print

print(preds_test_data[0])
```

```
{
    'name': '5',
    'text': '2013-01-S-025\n\nKABUL, Afghanistan (Jan. 25, 2013)\nDuring a security operation in Andar district, Ghazni province, yesterday, an Afghan and coalition force killed the Taliban leader, Alaudin. Alaudin oversaw a group of insurgents responsible for conducting remote-controlled improvised explosive device and small-arms fire attacks against Afghan and coalition forces. Prior to his death, Alaudin was planning attacks against Afghan National Police in Ghazni province.',
    'predictions': {
        'gpt-3.5-turbo': '{\n "name": "Taliban leader Alaudin killed in Ghazni province",\n "start_date": "2013-01-24",\n "event_type": ["captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": false,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}',
        'gpt-4-turbo': '{\n "name": "Taliban leader Alaudin killed in Ghazni",\n "start_date": "2013-01-24",\n "event_type": ["captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": true,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}',
        'gpt-4o': '{\n "name": "Taliban leader Alaudin killed in Ghazni",\n "start_date": "2013-01-24",\n "event_type": ["insurgentskilled", "captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": true,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}',
        'tinyllama-templatefree': '\n{"name":"Taliban leader killed in Ghazni","start_date":"2013-01-24","event_type":["insurgentskilled"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":false,"airstrike":false,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}',
        'tinyllama-sharegpt': '{"name":"2","start_date":"2013-01-24","event_type":["airstrike"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":false,"airstrike":true,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}'
    },
    'start_date': datetime.date(2013, 1, 24),
    'province': ['ghazni'],
    'target_group': ['taliban'],
    'event_type': ['insurgentskilled'],
    'min_killed': 1,
    'min_captured': 0,
    'killq': True,
    'captureq': False,
    'killcaptureraid': False,
    'airstrike': False,
    'noshotsfired': False,
    'min_leaders_killed': 1,
    'min_leaders_captured': 0
}
```

Predictions from the fine-tuned Mistral model

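As the author mentioned earlier, the fine-tuned Mistral model couldn't run locally, so he ran inference on Modal, where he could use a powerful A100 GPU to make the predictions.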

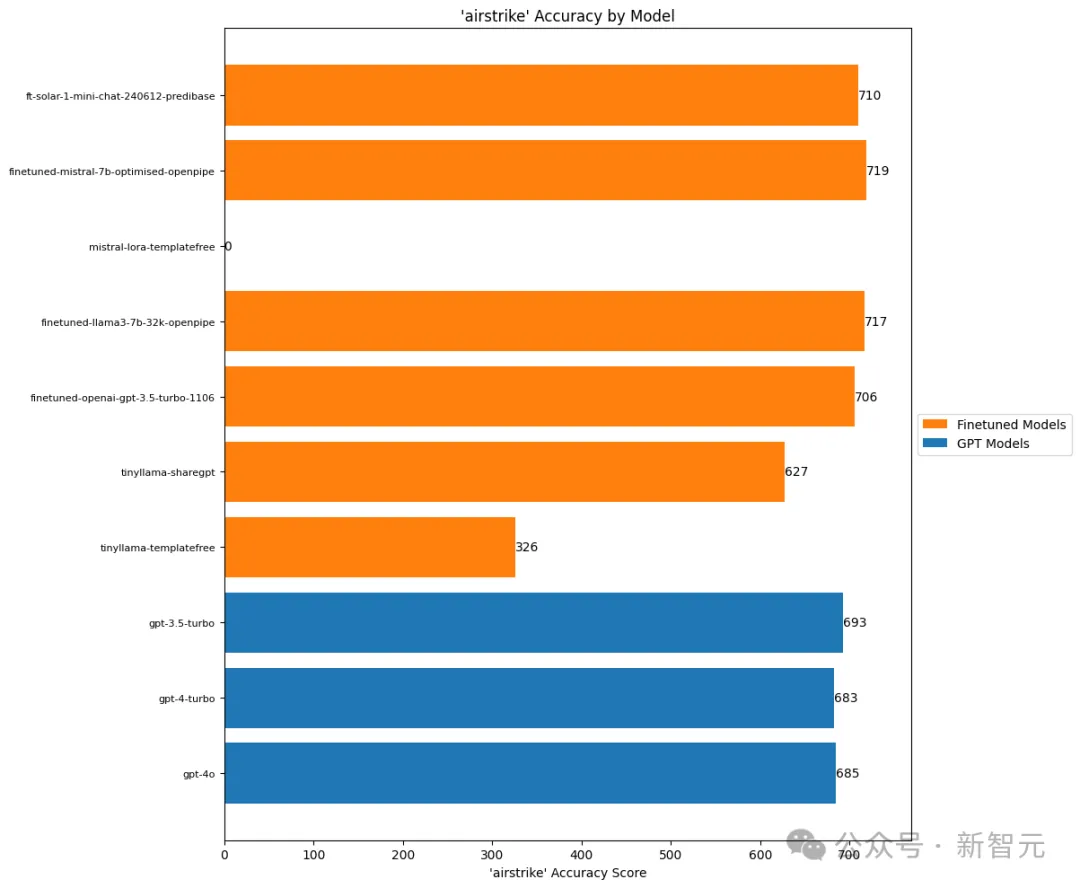

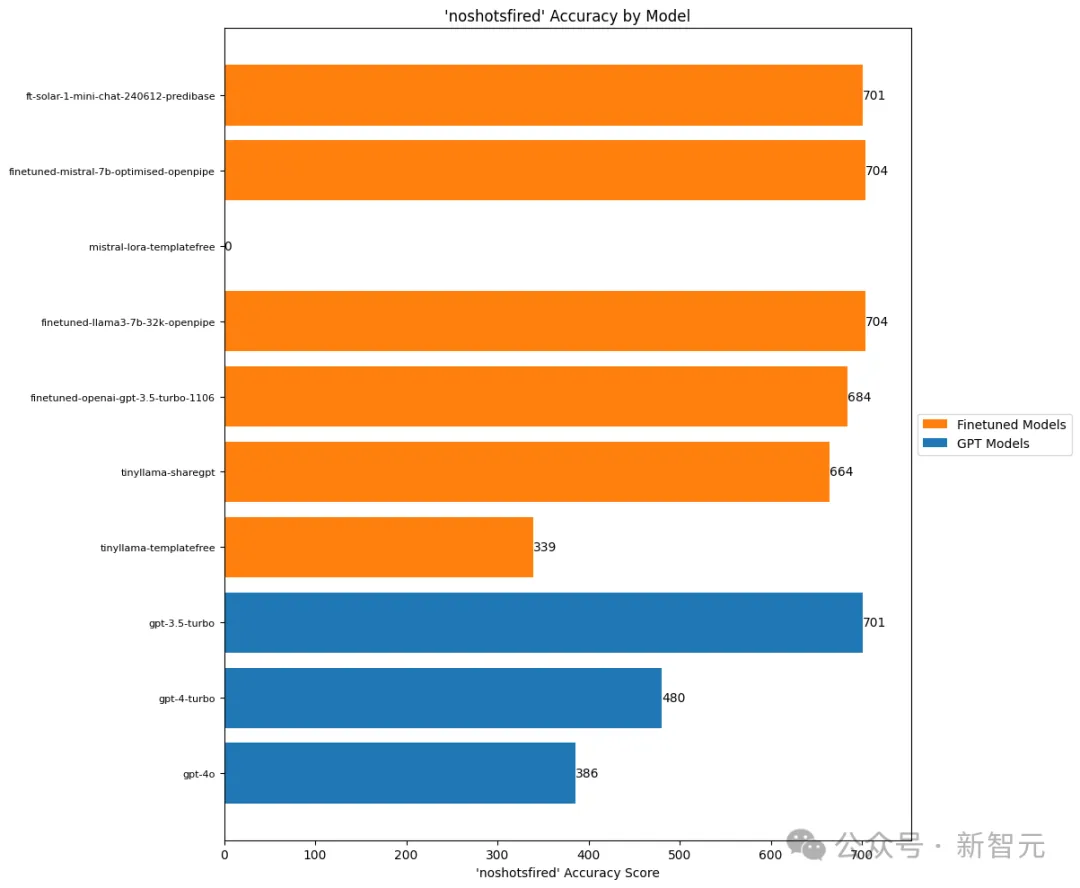

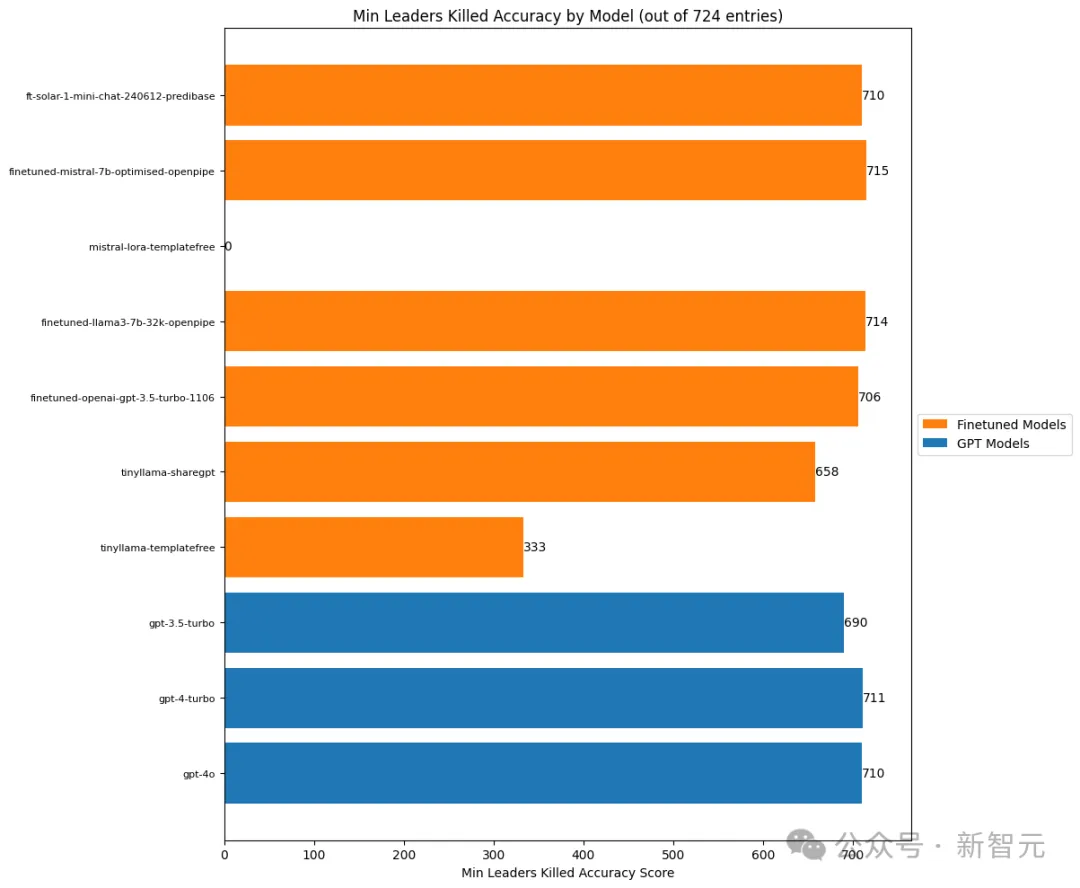

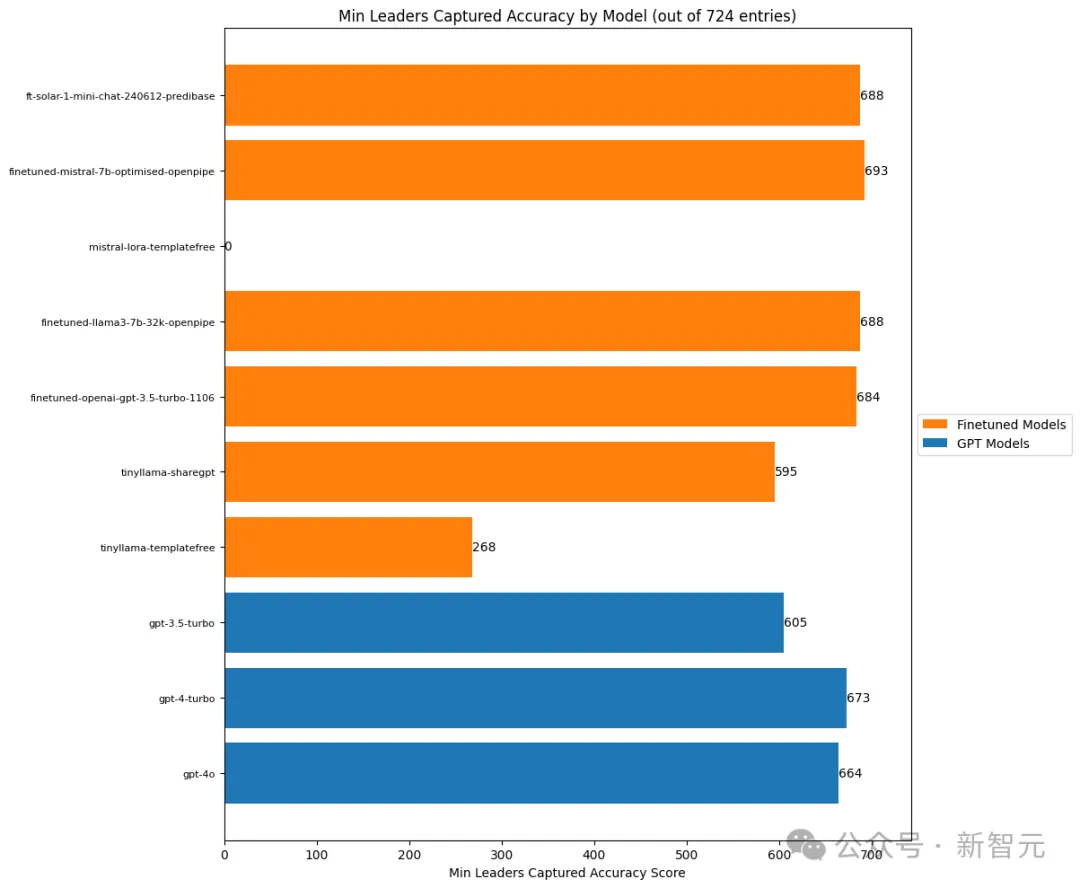

As the charts show, this model performed poorly and failed almost every evaluation: the model scoring zero throughout is mistral-lora-templatefree.

Predictions from the fine-tuned OpenAI model

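The gpt-3.5-turbo-1106 model was fine-tuned with OpenAI's one-click fine-tuning service, and predictions for this fine-tuned model were generated by iterating over the dataset with the OpenAI SDK.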

Fine-tuned Mistral models (via OpenPipe)

The author used OpenPipe to fine-tune Mistral 7B and Mixtral 8x7B, so as to have a reasonable baseline against which to compare the other models.

Fine-tuned Solar LLM (via Predibase)

About a week earlier, Predibase had announced a new top-performing model for fine-tuning, Upstage's Solar LLM, so the author decided to try it.

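This model's selling point is that it was trained to be very good at the kinds of tasks people commonly fine-tune models for, such as structured data extraction. As the charts show, it performs quite well!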

Predictions from the fine-tuned Llama3 model (via OpenPipe)

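The Llama3 model the author fine-tuned locally didn't perform well, but the outputs from the OpenPipe version looked fine, so he used those predictions for the final evaluation.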

```python
from rich import print

print(preds_test_data[0])
```

```
{
    'name': '5',
    'text': '2013-01-S-025\n\nKABUL, Afghanistan (Jan. 25, 2013)\nDuring a security operation in Andar district, Ghazni province, yesterday, an Afghan and coalition force killed the Taliban leader, Alaudin. Alaudin oversaw a group of insurgents responsible for conducting remote-controlled improvised explosive device and small-arms fire attacks against Afghan and coalition forces. Prior to his death, Alaudin was planning attacks against Afghan National Police in Ghazni province.',
    'predictions': {
        'finetuned-llama3-7b-32k-openpipe': '{"name":"1","start_date":"2013-01-24","event_type":["insurgentskilled"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":true,"airstrike":false,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}',
        'finetuned-mistral-7b-optimised-openpipe': '{"name":"1","start_date":"2013-01-24","event_type":["insurgentskilled"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":true,"airstrike":false,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}',
        'finetuned-openai-gpt-3.5-turbo-1106': '{"name":"4","start_date":"2013-01-24","event_type":["insurgentskilled"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":true,"airstrike":false,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}',
        'gpt-3.5-turbo': '{\n "name": "Taliban leader Alaudin killed in Ghazni province",\n "start_date": "2013-01-24",\n "event_type": ["captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": false,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}',
        'gpt-4-turbo': '{\n "name": "Taliban leader Alaudin killed in Ghazni",\n "start_date": "2013-01-24",\n "event_type": ["captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": true,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}',
        'gpt-4o': '{\n "name": "Taliban leader Alaudin killed in Ghazni",\n "start_date": "2013-01-24",\n "event_type": ["insurgentskilled", "captureandkill"],\n "province": ["ghazni"],\n "target_group": ["taliban"],\n "min_killed": 1,\n "min_captured": 0,\n "killq": true,\n "captureq": false,\n "killcaptureraid": true,\n "airstrike": false,\n "noshotsfired": false,\n "min_leaders_killed": 1,\n "min_leaders_captured": 0\n}',
        'mistral-lora-templatefree': '1',
        'tinyllama-sharegpt': '{"name":"2","start_date":"2013-01-24","event_type":["airstrike"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":false,"airstrike":true,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}',
        'tinyllama-templatefree': '\n{"name":"Taliban leader killed in Ghazni","start_date":"2013-01-24","event_type":["insurgentskilled"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":false,"airstrike":false,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}',
        'ft-solar-1-mini-chat-240612-predibase': '\n\n{"name":"2","start_date":"2013-01-24","event_type":["insurgentskilled"],"province":["ghazni"],"target_group":["taliban"],"min_killed":1,"min_captured":0,"killq":true,"captureq":false,"killcaptureraid":true,"airstrike":false,"noshotsfired":false,"min_leaders_killed":1,"min_leaders_captured":0}'
    },
    'start_date': datetime.date(2013, 1, 24),
    'province': ['ghazni'],
    'target_group': ['taliban'],
    'event_type': ['insurgentskilled'],
    'min_killed': 1,
    'min_captured': 0,
    'killq': True,
    'captureq': False,
    'killcaptureraid': False,
    'airstrike': False,
    'noshotsfired': False,
    'min_leaders_killed': 1,
    'min_leaders_captured': 0
}
```

With predictions from seven fine-tuned models and three OpenAI models (for comparison) in hand, the evaluation can begin.

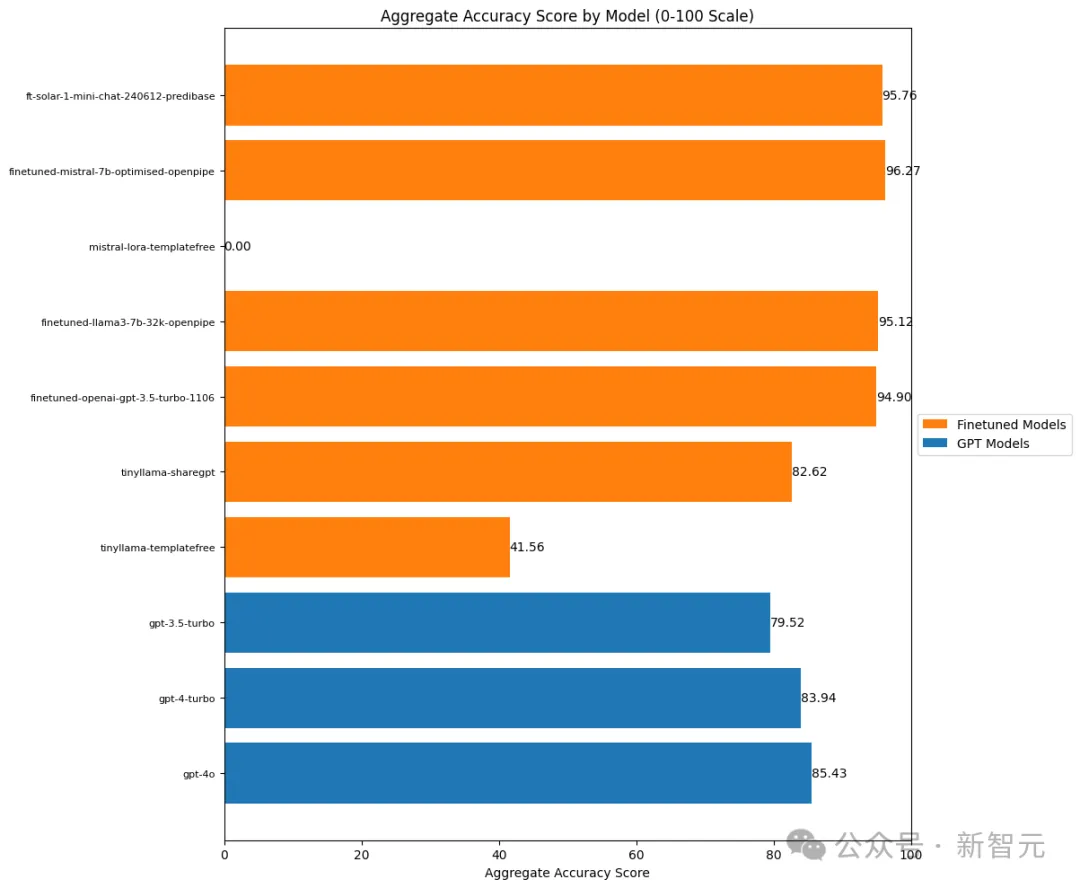

First, though, a quick sanity check: how many of these predictions are valid JSON?

JSON validity test

```python
from datasets import load_dataset

dataset_with_preds = load_dataset("strickvl/isafpressreleases_test_predictions")[
    "train"
].to_list()
```

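Comparing how often the templatefree and sharegpt variants of the TinyLlama fine-tune produce valid JSON already reveals a clear difference between them, which is very instructive.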

The OpenAI models produce valid JSON every time, and so do the fine-tuned Mistral and Llama3 models.
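The post doesn't show the validity check itself. A minimal sketch, assuming rows shaped like dataset_with_preds (each row holding a predictions dict that maps model name to raw output string), could count how many outputs parse as a JSON object. The function names are hypothetical. Note that json.loads alone is not enough: an output such as mistral-lora-templatefree's '1' is perfectly valid JSON (a number), but it is not the object we asked for.

```python
import json
from collections import Counter


def is_valid_json_object(raw) -> bool:
    """True if raw parses as a JSON object (dict), not merely as any JSON value."""
    if not raw:  # None or empty string counts as missing, not valid
        return False
    try:
        # json.loads tolerates surrounding whitespace such as a leading "\n\n"
        return isinstance(json.loads(raw), dict)
    except json.JSONDecodeError:
        return False


def count_valid_json(rows) -> Counter:
    """Per-model count of predictions that are valid JSON objects."""
    counts = Counter()
    for row in rows:
        for model, raw in row["predictions"].items():
            counts[model] += is_valid_json_object(raw)  # adds 1 for True, 0 for False
    return counts
```

Because every model key is touched, models with no valid outputs still appear in the result with a count of 0.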

While writing the evaluation code, the author noticed that some entries were blank or had no prediction at all, so he looked into that next.

```python
# find out how many of the predictions are None values or empty strings
missing_values = {
    "gpt-4o": 0,
    "gpt-4-turbo": 0,
    "gpt-3.5-turbo": 0,
    "tinyllama-templatefree": 0,
    "tinyllama-sharegpt": 0,
    "finetuned-openai-gpt-3.5-turbo-1106": 0,
    "finetuned-llama3-7b-32k-openpipe": 0,
    "mistral-lora-templatefree": 0,
    "finetuned-mistral-7b-optimised-openpipe": 0,
    "ft-solar-1-mini-chat-240612-predibase": 0,
}

for row in dataset_with_preds:
    for model in row["predictions"]:
        if row["predictions"][model] is None or row["predictions"][model] == "":
            missing_values[model] += 1

print(missing_values)
```

```
{
    'gpt-4o': 0,
    'gpt-4-turbo': 0,
    'gpt-3.5-turbo': 0,
    'tinyllama-templatefree': 0,
    'tinyllama-sharegpt': 38,
    'finetuned-openai-gpt-3.5-turbo-1106': 0,
    'finetuned-llama3-7b-32k-openpipe': 0,
    'mistral-lora-templatefree': 0,
    'finetuned-mistral-7b-optimised-openpipe': 0,
    'ft-solar-1-mini-chat-240612-predibase': 0
}
```

Were it not for these 38 missing values, the tinyllama-sharegpt model would have produced all 724 predictions, all of them valid JSON.

Now for the part we actually care about: accuracy. The author computes a score for every attribute that matters and then compares the models.

These attributes are:

- start_date

- province

- target_group

- event_type

- min_killed

- min_captured

- killq

- captureq

- killcaptureraid

- airstrike

- noshotsfired

- min_leaders_killed

- min_leaders_captured
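Beyond the target-group rule described later, the post doesn't spell out how each attribute is scored. One plausible exact-match scheme is sketched below, assuming rows shaped like preds_test_data (ground-truth fields plus a predictions dict of raw JSON strings). The function names are hypothetical.

```python
import json
from collections import Counter
from datetime import date


def field_correct(pred: dict, truth: dict, field: str) -> bool:
    """Exact-match check of one predicted attribute against the ground truth."""
    expected = truth[field]
    actual = pred.get(field)
    if isinstance(expected, date):
        expected = expected.isoformat()  # predictions carry dates as "YYYY-MM-DD"
    if isinstance(expected, (list, set)):
        # Order-insensitive comparison for multi-valued fields such as province
        return isinstance(actual, list) and set(actual) == set(expected)
    # Require matching types so that JSON true is not accepted where 1 is expected
    return type(actual) is type(expected) and actual == expected


def field_accuracy_counts(rows, field: str) -> Counter:
    """Per-model number of rows whose prediction gets this attribute right."""
    counts = Counter()
    for row in rows:
        for model, raw in row["predictions"].items():
            try:
                pred = json.loads(raw) if raw else None
            except json.JSONDecodeError:
                pred = None  # unparseable output scores zero
            counts[model] += isinstance(pred, dict) and field_correct(pred, row, field)
    return counts
```

The strict type check matters in Python because True == 1, so a model answering "min_killed": true would otherwise be scored as correct.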

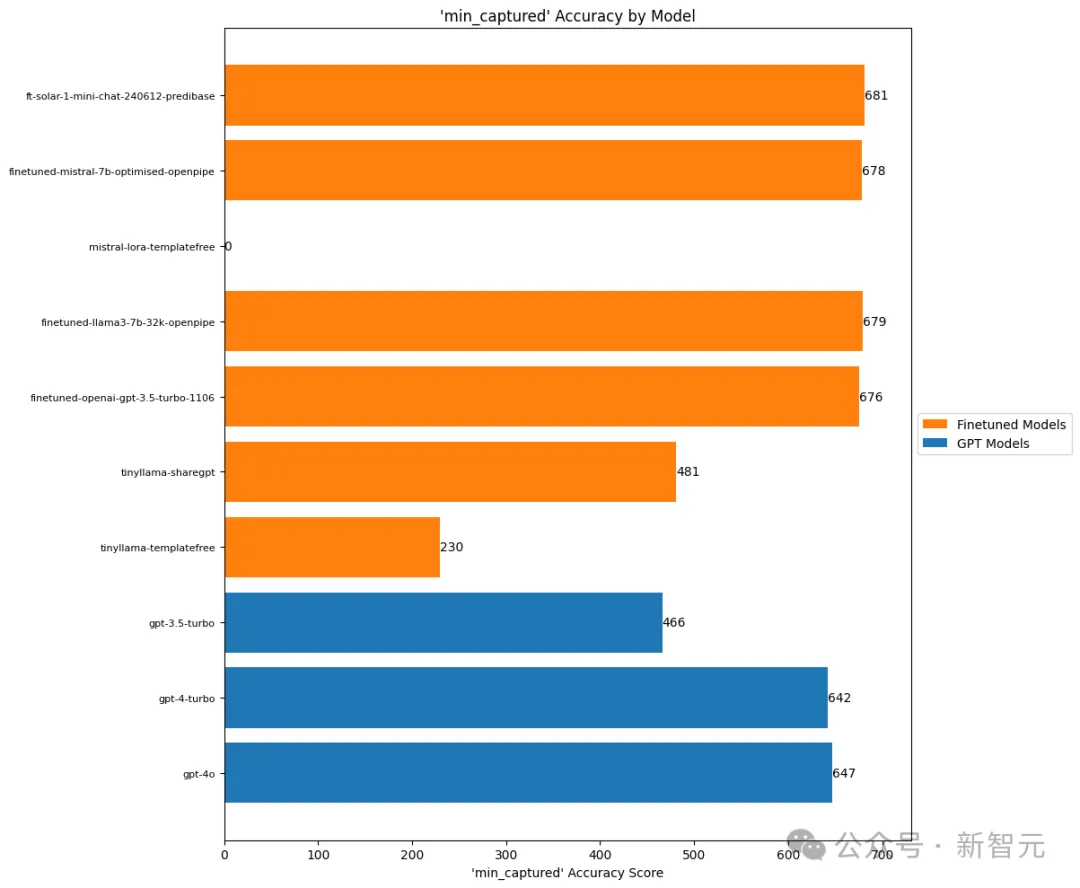

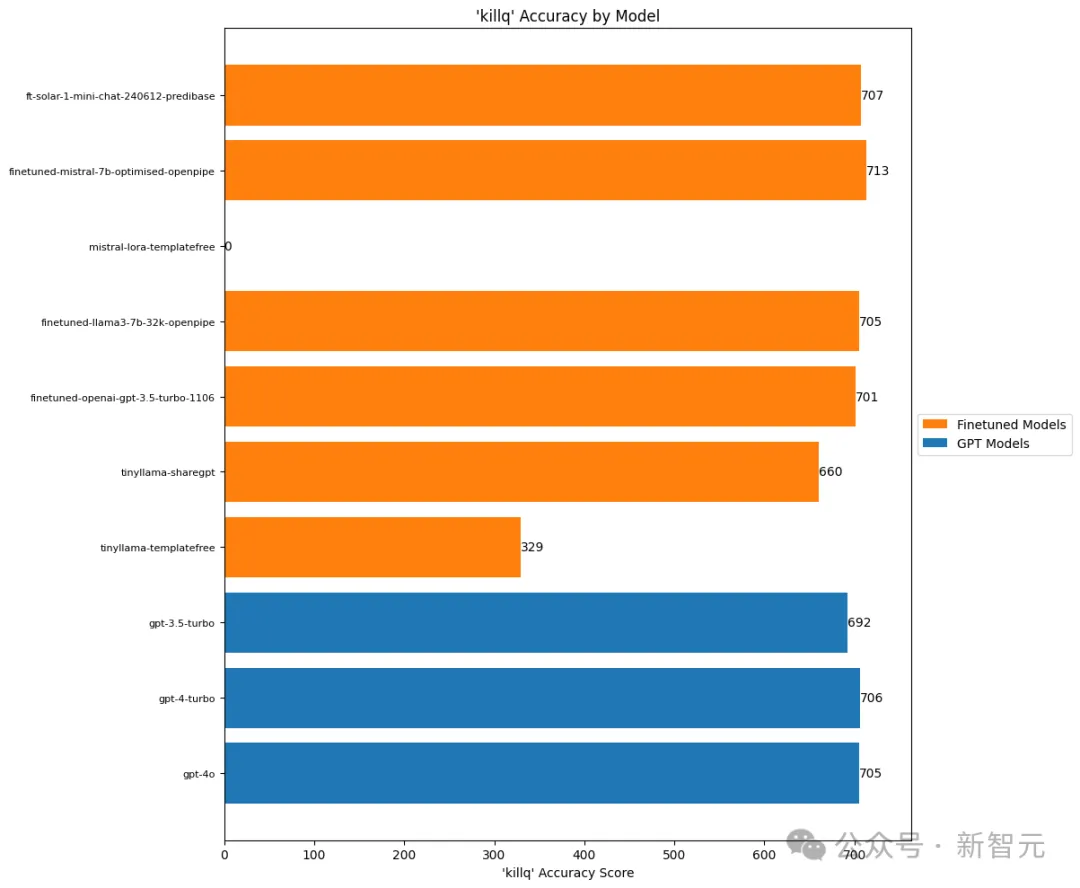

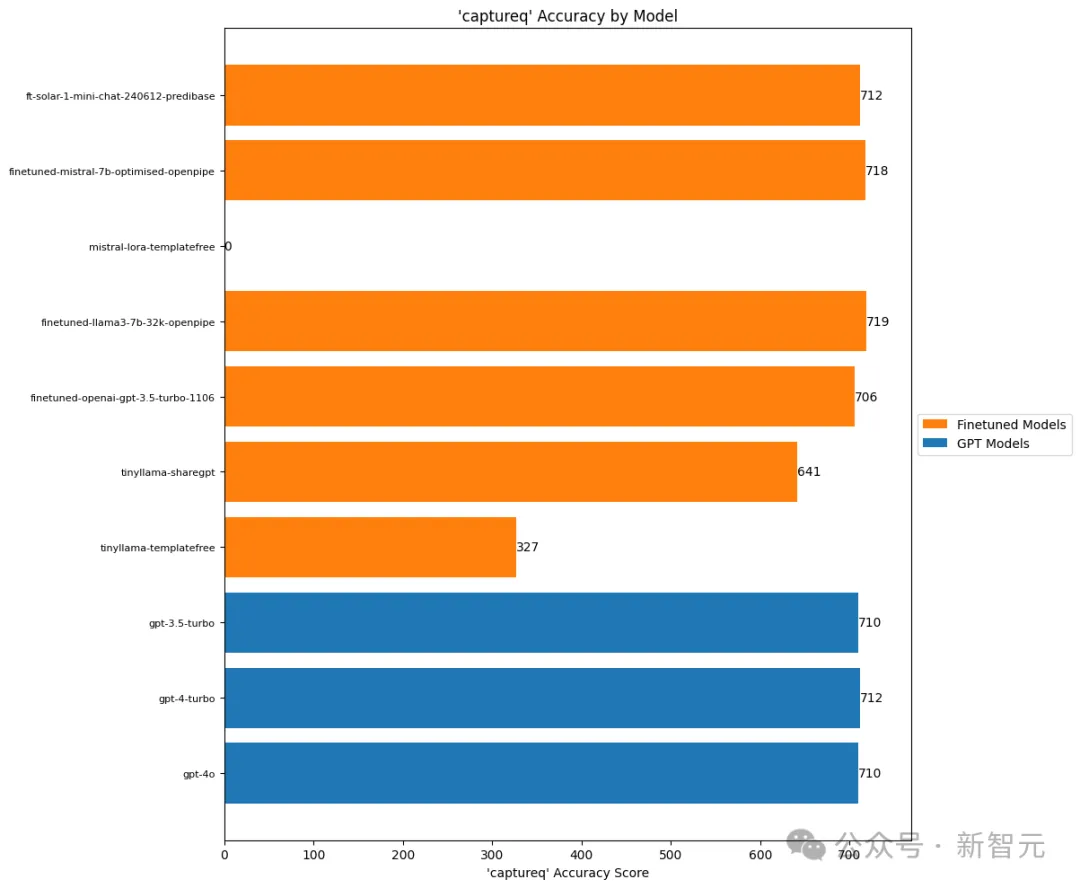

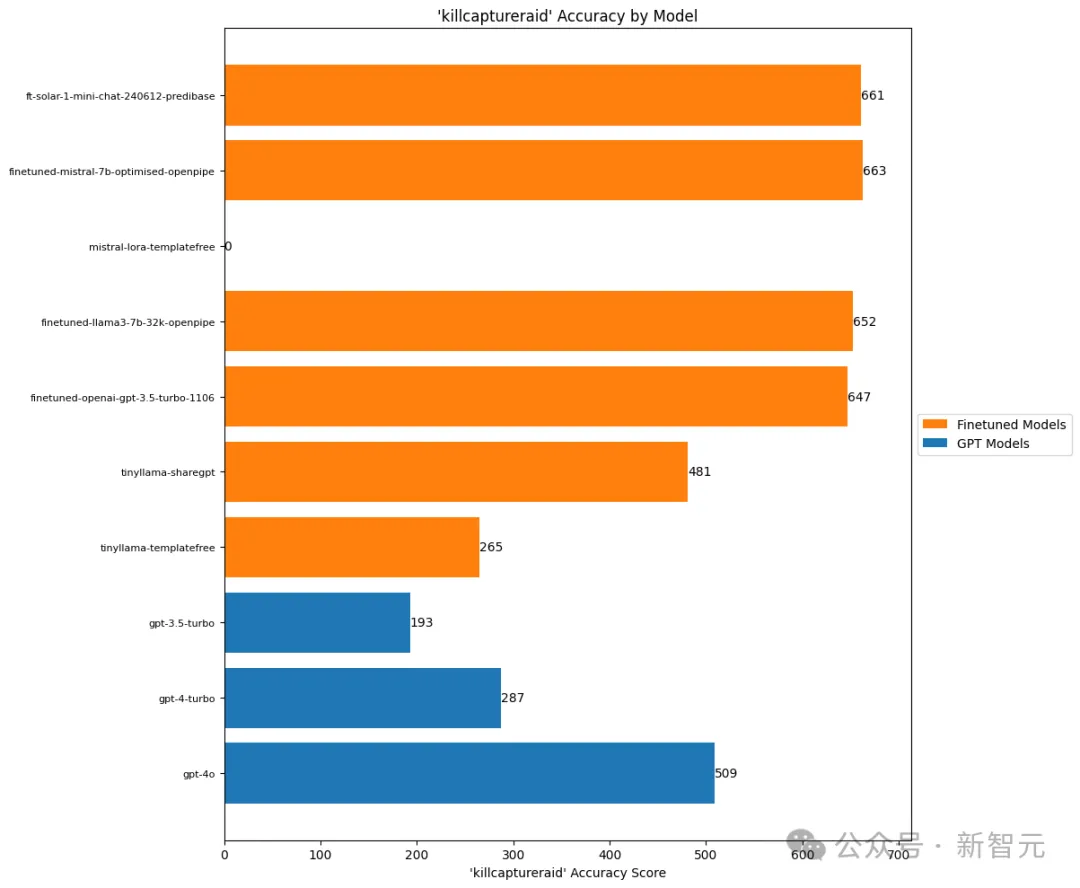

Important: in all of the charts that follow, the total number of tasks is 724, so every number is out of 724.

Test results

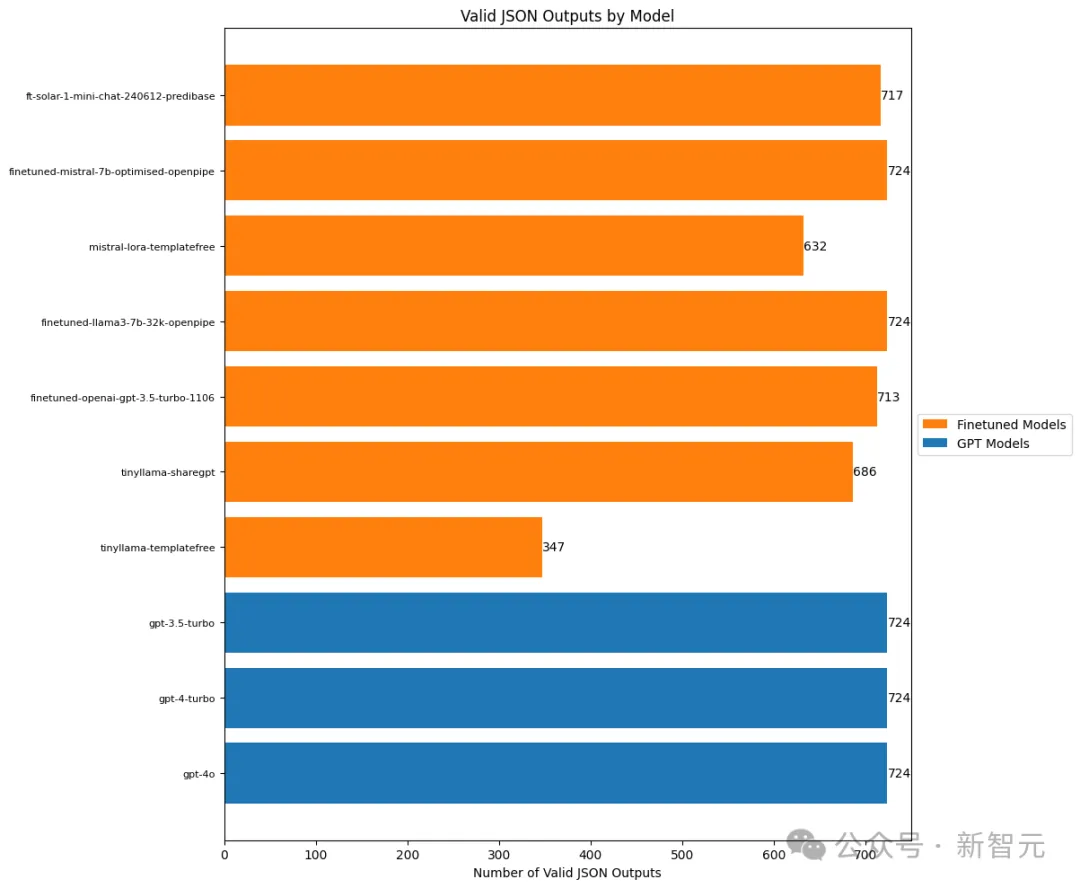

Start date accuracy

```
{
    'gpt-4o': 527,
    'gpt-4-turbo': 522,
    'gpt-3.5-turbo': 492,
    'tinyllama-templatefree': 231,
    'tinyllama-sharegpt': 479,
    'finetuned-openai-gpt-3.5-turbo-1106': 646,
    'finetuned-llama3-7b-32k-openpipe': 585,
    'mistral-lora-templatefree': 0,
    'finetuned-mistral-7b-optimised-openpipe': 636,
    'ft-solar-1-mini-chat-240612-predibase': 649
}
```

Solar (649) and the fine-tuned GPT-3.5 model (646) are the best at predicting the date on which an event took place.

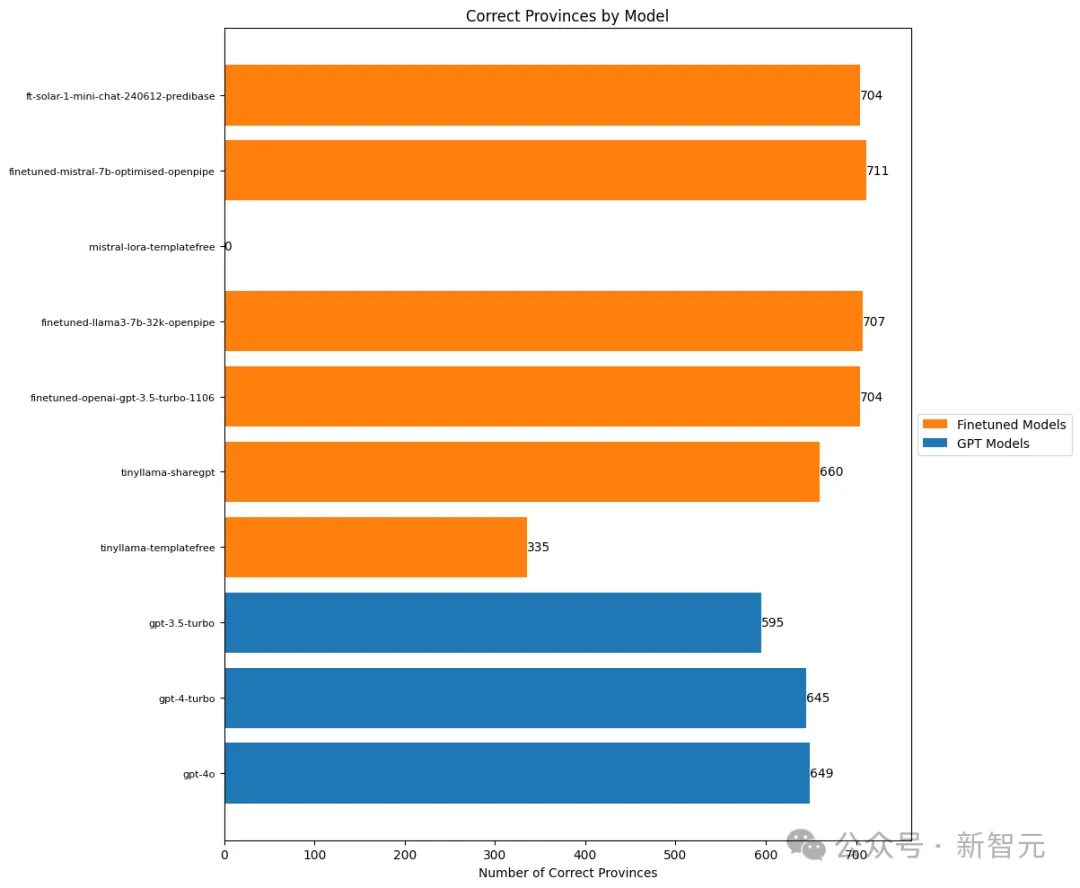

Province accuracy

```
{
    'gpt-4o': 649,
    'gpt-4-turbo': 645,
    'gpt-3.5-turbo': 595,
    'tinyllama-templatefree': 335,
    'tinyllama-sharegpt': 660,
    'finetuned-openai-gpt-3.5-turbo-1106': 704,
    'finetuned-llama3-7b-32k-openpipe': 707,
    'mistral-lora-templatefree': 0,
    'finetuned-mistral-7b-optimised-openpipe': 711,
    'ft-solar-1-mini-chat-240612-predibase': 704
}
```

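Here the fine-tuned GPT-3.5, Llama3, Mistral, and Solar models actually outperform the OpenAI models, making only a handful of errors (between 13 and 20 out of 724).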

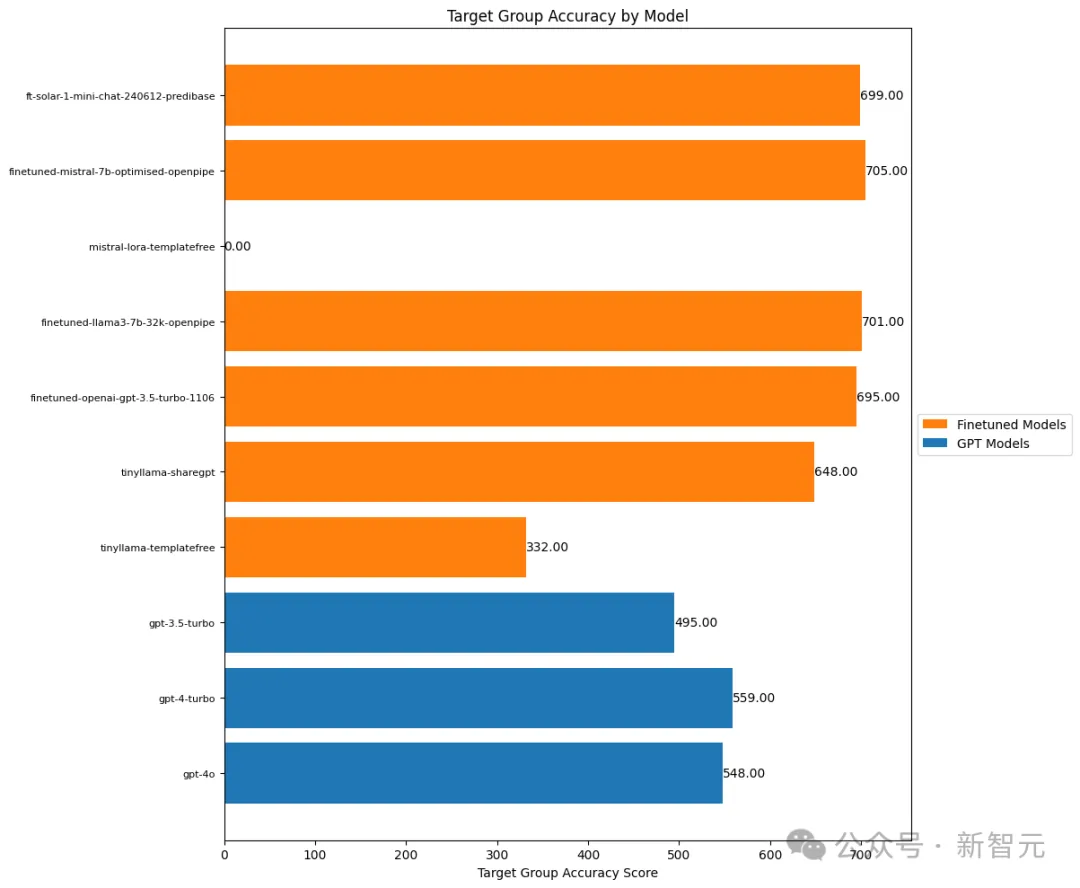

Target group accuracy

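A press release may mention several target groups, so the author gives a score out of 1 based on how many of the groups the model predicted are correct.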
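The exact formula isn't given. One reasonable choice, not necessarily the author's, is Jaccard similarity: the number of groups that appear in both the prediction and the ground truth, divided by the number that appear in either, so both missed and extra groups cost points. Treating the empty string as "no group" is an assumption based on records such as target_group={''}, and group_score is a hypothetical name.

```python
def group_score(predicted, expected) -> float:
    """Jaccard similarity between predicted and ground-truth target groups (0.0 to 1.0)."""
    # Assumption: the empty string means "no group named", so drop it
    pred = {g for g in predicted if g}
    truth = {g for g in expected if g}
    if not pred and not truth:
        return 1.0  # both agree that no group is named
    return len(pred & truth) / len(pred | truth)
```

Summing these per-row scores over the 724 test rows gives a fractional count that can be charted alongside the other attributes.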

The fine-tuned models are clearly better than OpenAI's at identifying target groups.

The author suspects, however, that performance might drop if new groups not present in the training data were introduced.

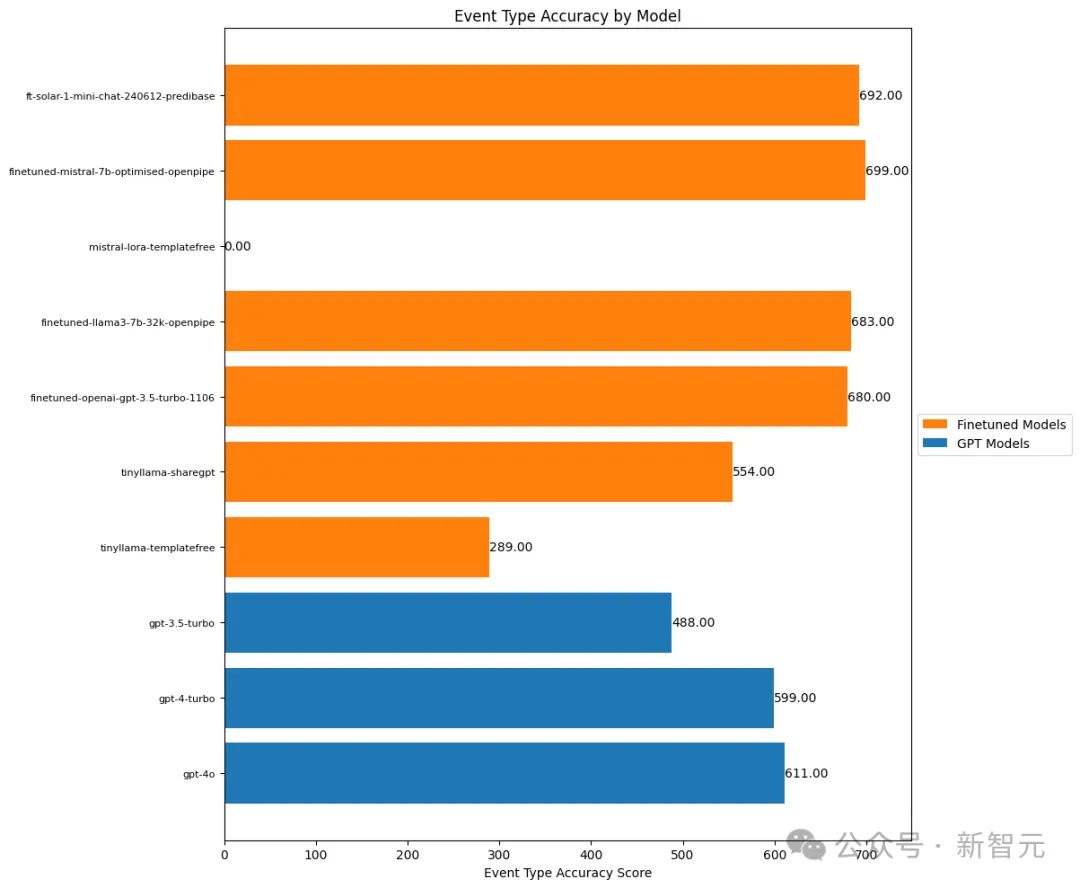

Event type accuracy

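Event type is actually one of the hardest attributes, because some categories overlap semantically, and sometimes even human annotators struggle to tell them apart.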

Once again, the fine-tuned models do quite well here.

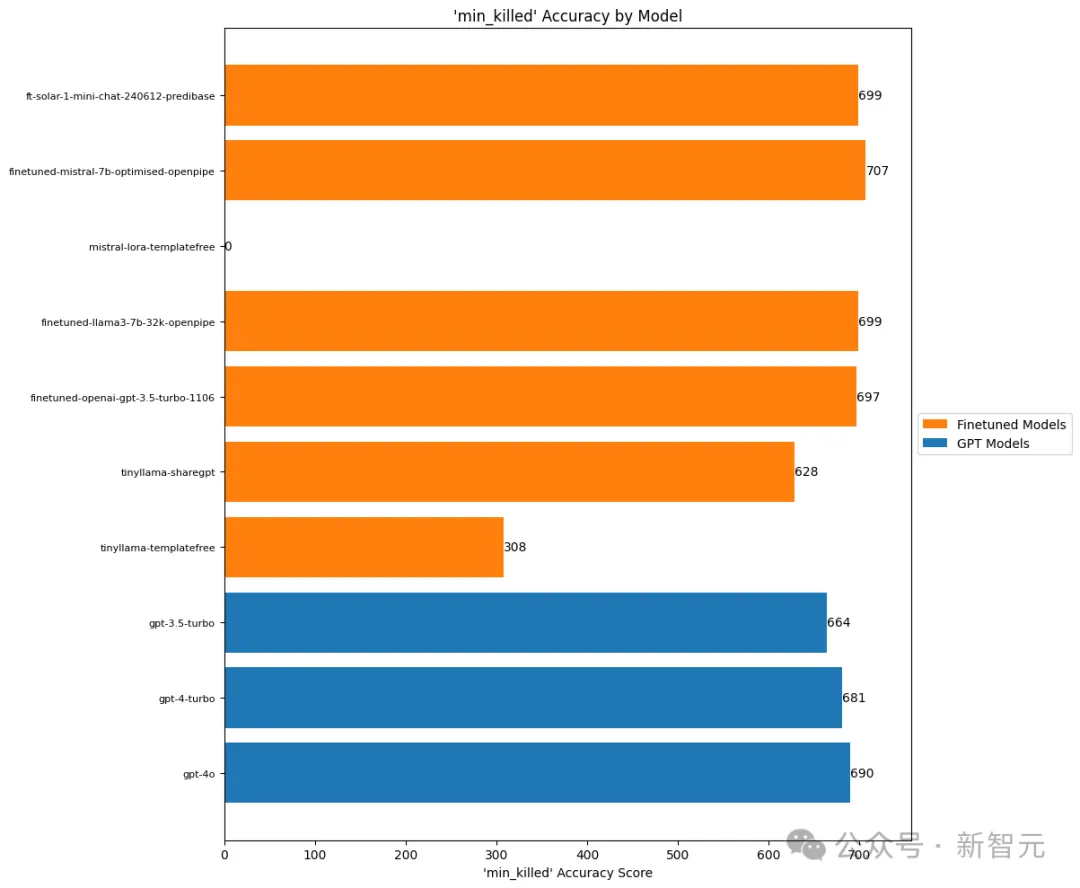

min_killed accuracy

On these number-estimation tasks, the gap between the fine-tuned and OpenAI models suddenly narrows.

Mistral is still the best, but not by much! And the OpenAI models do impressively well here.

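The author suspects this is because the prompt contains a whole paragraph explaining the criteria used to annotate the examples: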

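Annotation notes: A 'facilitator' is not a leader. If a press release states that 'insurgents' were detained without further details, assign a minimum number of two detained. Interpret 'a couple' as two. Interpret 'several' as at least three, even though it may sometimes refer to seven or eight. Classify the terms 'a few', 'some', 'a group', 'a small group', and 'multiple' as denoting at least three, even if they sometimes refer to larger numbers. Choose the smaller number if no other information is available in the press release to come up with a minimally acceptable figure. Interpret 'numerous' and 'a handful' as at least four, and 'a large number' as at least five.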
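These rules amount to a lookup table from vague quantifiers to minimum counts. The hypothetical sketch below encodes them explicitly; the table is transcribed from the notes, while the function and constant names are made up. It also explains the worked example in the prompt, where two mentions of "several" insurgents killed add up to min_killed = 3 + 3 = 6.

```python
from typing import Optional

# Minimum counts transcribed from the annotation notes
QUANTIFIER_MINIMUMS = {
    "a couple": 2,
    "several": 3,
    "a few": 3,
    "some": 3,
    "a group": 3,
    "a small group": 3,
    "multiple": 3,
    "numerous": 4,
    "a handful": 4,
    "a large number": 5,
}

# "insurgents" detained with no further detail count as at least two
BARE_PLURAL_MINIMUM = 2


def minimum_count(quantifier: Optional[str]) -> int:
    """Minimal acceptable count for a vague quantifier (None = bare plural)."""
    if quantifier is None:
        return BARE_PLURAL_MINIMUM
    # Raises KeyError for quantifiers the notes do not cover
    return QUANTIFIER_MINIMUMS[quantifier.strip().lower()]
```

For example, minimum_count("several") + minimum_count("several") gives 6, matching the min_killed value in the prompt's example output.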

min_captured accuracy

killq accuracy

The author expected accuracy on these boolean attributes to be very high, and almost every model delivers.

Even so, the fine-tuned Mistral still beats GPT-4o's score.

captureq accuracy

killcaptureraid accuracy

"Kill-capture raid" is a specific term that was applied in a particular way during annotation.

The OpenAI models have no way of knowing how the author made these calls, which explains their poor performance here.

airstrike accuracy

noshotsfired accuracy

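The noshotsfired attribute records whether the press release mentions that no shots were fired during a given raid or incident. (For a while, the press releases were particularly fond of mentioning this.)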

The author isn't sure why the OpenAI models behave contrary to expectations here.

One semi-anthropomorphic explanation is that GPT-4-class models may be "overthinking" this label, but more investigation is needed to really understand it.

min_leaders_killed accuracy

We often hear that LLMs are bad with numbers, or fall back on default values, and so on. Yet on this task every model scored highly.

This is presumably something people have been working hard to improve, and it shows. Still, the fine-tuned models perform best.

min_leaders_captured accuracy

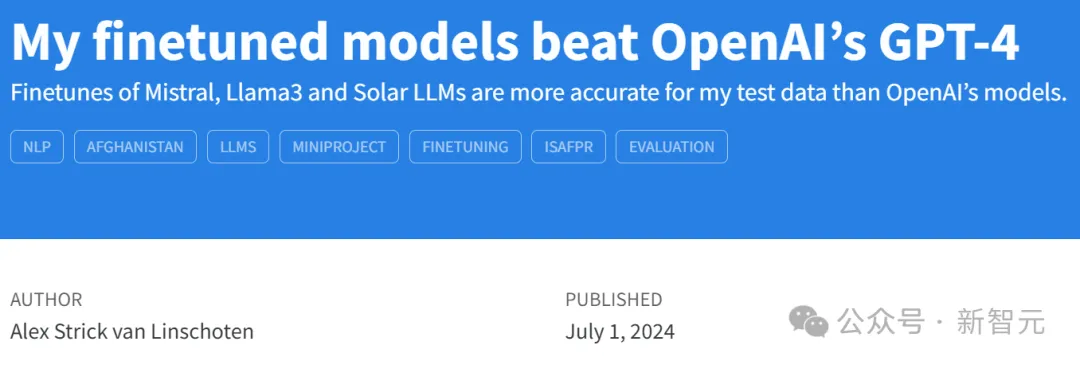

Final scores

Adding up the per-attribute scores and taking the average gives each model's final accuracy score.
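A minimal sketch of that averaging, assuming per-attribute counts out of 724 like the start date and province dictionaries above (final_scores is a hypothetical name). The usage feeds in only those two attributes, the only ones whose full counts are printed in this article, so the resulting numbers are an illustration, not the post's final scores.

```python
def final_scores(per_field_counts: dict, total: int) -> dict:
    """Average each model's per-attribute accuracy (count / total) across attributes.

    per_field_counts maps attribute -> {model: number correct}; counts may be
    fractional for partially scored attributes such as target_group.
    """
    fields = list(per_field_counts)
    models = per_field_counts[fields[0]].keys()
    return {
        model: sum(per_field_counts[f][model] for f in fields) / (len(fields) * total)
        for model in models
    }


# Illustration with the two attributes printed above (not the final results)
per_field = {
    "start_date": {"gpt-4o": 527, "finetuned-mistral-7b-optimised-openpipe": 636},
    "province": {"gpt-4o": 649, "finetuned-mistral-7b-optimised-openpipe": 711},
}
scores = final_scores(per_field, total=724)
# gpt-4o: (527 + 649) / 1448, about 0.812; fine-tuned Mistral: (636 + 711) / 1448, about 0.930
```

Averaging fractions rather than raw counts keeps the final score on a 0 to 1 scale regardless of how many attributes are included.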

Even the author was surprised: the fine-tuned models beat OpenAI's GPT models. Even TinyLlama did better than GPT-3.5 Turbo!

The best performer was Mistral-7B (fine-tuned on OpenPipe), followed closely by Solar LLM and Llama3-7B.

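Judging by the scores alone, anyone who wants to fine-tune a model for structured data extraction could start with Mistral-7B, Solar 7B, or Llama3-7B. Their accuracy is probably about the same, but they may involve different trade-offs in serving, efficiency, and latency.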

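The author believes that adding a few more examples to the prompt (along with more explanations and rules) would make the OpenAI models perform better, but owning your fine-tuned models has other benefits too: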

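- Data privacy (your information isn't sent to OpenAI)

- Smaller models very likely mean better performance (though he still needs to test and prove this)

- More control overall

- Cost improvements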

On cost, it's hard to make comparisons or claims at this point, especially given the economies of scale the big cloud providers can rely on.

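But in a real-world use case, where the model would be used for repeated inference over a long period, the cost argument is more likely to hold.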

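This is especially true because the only way to make the OpenAI inference calls better is to stuff the prompt with many examples and extra explanations, which significantly increases the cost of every query.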
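The arithmetic behind that argument is simple: every few-shot example is paid for again on every query, so its cost grows linearly with query volume. The sketch below makes this explicit; the function names are hypothetical and any prices you pass in are placeholders, not actual OpenAI rates.

```python
def cost_per_query(prompt_tokens: int, output_tokens: int,
                   usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Dollar cost of one request, given prices per million tokens."""
    return (prompt_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


def extra_cost_of_examples(n_examples: int, tokens_per_example: int,
                           n_queries: int, usd_per_m_input: float) -> float:
    """Added input cost of putting n_examples few-shot examples into every prompt."""
    return n_examples * tokens_per_example * n_queries * usd_per_m_input / 1_000_000
```

A fine-tuned model has the annotation rules baked into its weights, so its prompt carries no such per-query overhead; whether that outweighs hosting costs depends on volume.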

That said, fine-tuning does come with some real trade-offs.

Fine-tuning works, but...

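First, the author was delighted to find that the oft-repeated claim "fine-tune your model and get better performance than GPT-4" is actually true! Not only that, it took relatively little tweaking and adaptation.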

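Keep in mind that all of the models above are first-attempt fine-tunes on the existing data, using essentially all default settings; in other words, they worked out of the box.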

For next steps, the author says he will focus on the best-performing models: Solar, Llama3, and Mistral 7B.

Evaluation is painful

The author has some models running locally and others deployed in different environments and on different services.

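Having models scattered across different places is a real hassle. Ideally, you'd want a single standard interface for inference across all your models, especially when they serve the same use case or project. Conveniently, the author's fine-tuned GPT-3.5 is deployed and served automatically by OpenAI, and the same goes for Llama3, Solar, and Mistral on their respective platforms, but he would like one place where he can see them all.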
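One lightweight way to get that single interface is a registry that maps each model name to a function wrapping whichever service hosts it (OpenAI, OpenPipe, Predibase, Modal, or a local model), so evaluation code only ever calls predict(model, text). The sketch below uses hypothetical names and a dummy backend; real backends would wrap each provider's own client.

```python
from typing import Callable, Dict

# A predictor takes press-release text and returns the raw model output string
Predictor = Callable[[str], str]

_PREDICTORS: Dict[str, Predictor] = {}


def register(name: str) -> Callable[[Predictor], Predictor]:
    """Decorator that registers a backend-specific predictor under a model name."""
    def decorator(fn: Predictor) -> Predictor:
        if name in _PREDICTORS:
            raise ValueError(f"model already registered: {name}")
        _PREDICTORS[name] = fn
        return fn
    return decorator


def predict(model: str, article_text: str) -> str:
    """Single entry point for inference, whichever service hosts the model."""
    try:
        backend = _PREDICTORS[model]
    except KeyError:
        raise ValueError(f"unknown model: {model}") from None
    # Normalise trivial differences between backends, e.g. leading newlines
    return backend(article_text).strip()


@register("dummy-local")  # hypothetical backend, for illustration only
def _dummy_local(article_text: str) -> str:
    return '\n\n{"name": "placeholder"}'
```

Stripping whitespace in one place also removes the leading newlines that some backends (such as the Solar and TinyLlama outputs above) add to otherwise identical JSON.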

When you have several models running, you keep fine-tuning and updating them, and the data keeps changing too, you need a way to manage all of it.

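This is one of the main challenges of fine-tuning LLMs: you have to manage all of these pieces so that they work reliably and reproducibly. Even in the early stages of a project, you need a way to keep everything organized and error-free.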

But you can tell whether you're making progress

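Painful as the evals were to implement, they gave the author an important tool: a task-specific way to tell whether any change to the training data or the models is actually moving things forward. Without it, he would essentially be working blind.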

What's next

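The author originally wanted to train several models, each a super-specialist in its own area, for example one model that is very good at estimating how many people were captured in a given event.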

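Having seen how the models perform, however, he is no longer sure whether that should be the next step, or whether that approach would really improve accuracy significantly.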

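Next up are some evals that aren't about accuracy, for example how the models perform on out-of-domain data (that is, completely made-up data about something entirely different).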

Another item is digging into the details of model serving: the author wants to take his top three models and explore how LLM serving is actually done.

But before moving on, he'll wait for the excitement to wear off a little: "For now, I'm just happy that my fine-tuned models beat GPT-4!"