A Roundup of Interpretability Methods for Deep Neural Networks, with TensorFlow Code

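Understanding neural networks: deep learning has long been regarded as hard to interpret. Research on understanding neural networks has nevertheless continued, and this article introduces several interpretability methods, each with a link to code that runs in Jupyter.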

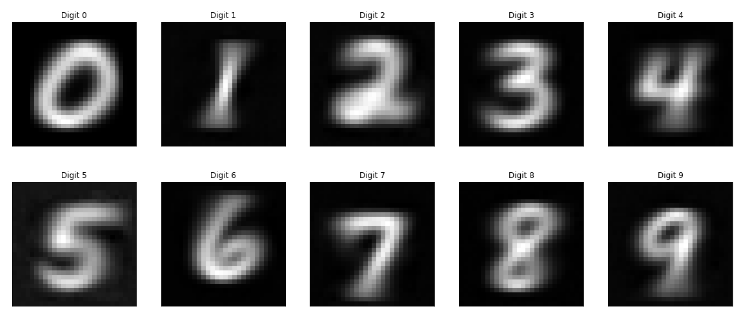

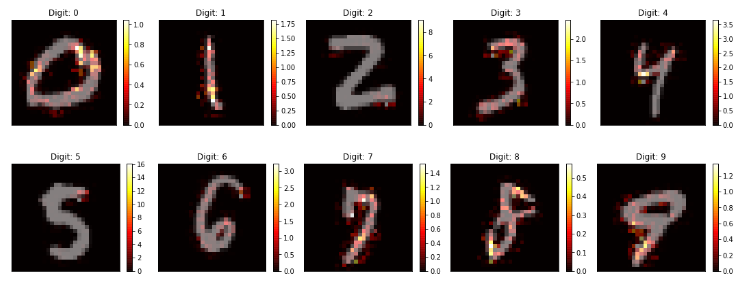

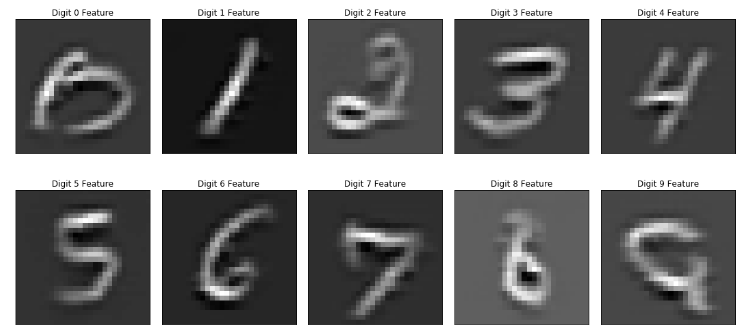

Activation Maximization

There are two ways to interpret a deep neural network through activation maximization:

1.1 Activation Maximization (AM)

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/1.1%20Activation%20Maximization.ipynb
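As a rough illustration of the idea behind activation maximization, the sketch below runs gradient ascent on the input to maximise one class score minus an L2 penalty. It is not the notebook's TensorFlow code: the linear scoring model, `WEIGHTS`, `lam`, `lr`, and `steps` are all assumptions, chosen so that the gradient can be written by hand in plain Python.

```python
# Hypothetical linear "network": score_c(x) = WEIGHTS[c] . x (an assumption for illustration).
WEIGHTS = [
    [1.0, -2.0, 0.5],   # class 0
    [0.0, 3.0, -1.0],   # class 1
]


def class_score(x, target):
    """Score of class `target` for input x."""
    return sum(w * xi for w, xi in zip(WEIGHTS[target], x))


def activation_maximization(target, lam=0.5, lr=0.1, steps=200):
    """Gradient ascent on x for the objective score_target(x) - lam * ||x||^2."""
    w = WEIGHTS[target]
    x = [0.0] * len(w)  # start from an all-zero input
    for _ in range(steps):
        # Gradient of the objective with respect to x is w - 2 * lam * x.
        x = [xi + lr * (wi - 2.0 * lam * xi) for xi, wi in zip(x, w)]
    return x


prototype = activation_maximization(target=1)
print(prototype)
```

For this toy objective the ascent settles at `w / (2 * lam)`. With a real network the gradient would come from automatic differentiation rather than a closed form.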

1.2 Performing AM in Code Space

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/1.3%20Performing%20AM%20in%20Code%20Space.ipynb

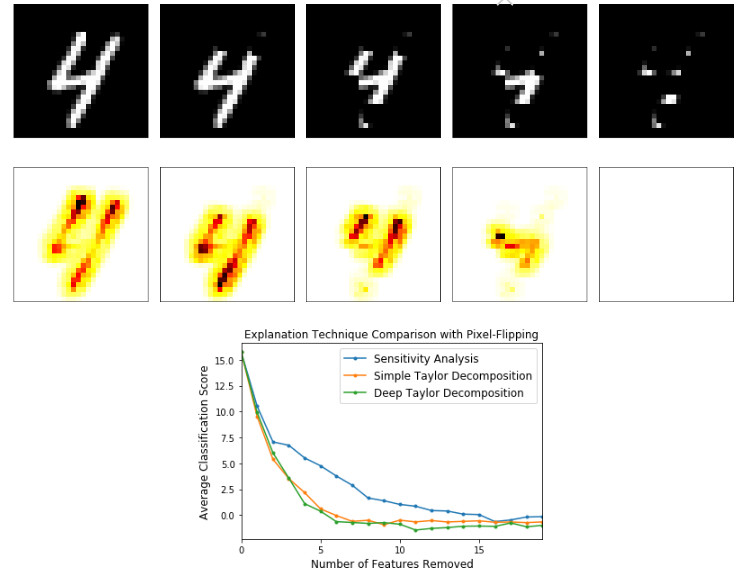

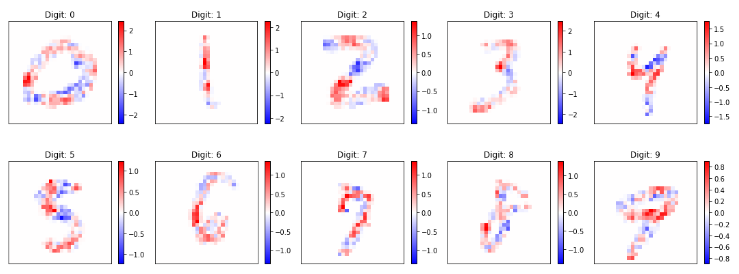

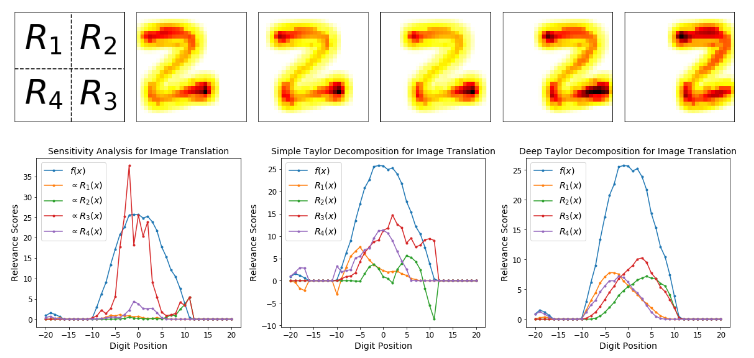

Layer-wise Relevance Propagation

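Layer-wise relevance propagation covers five interpretability methods: Sensitivity Analysis, Simple Taylor Decomposition, Layer-wise Relevance Propagation, Deep Taylor Decomposition, and DeepLIFT. The approach first introduces the notion of a relevance score through sensitivity analysis, then explores basic relevance decomposition with a simple Taylor decomposition, and from there builds up the various layer-wise relevance propagation methods. Details follow: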

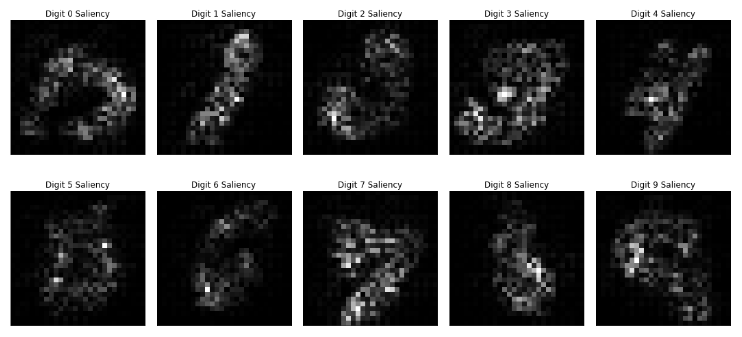

2.1 Sensitivity Analysis

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/2.1%20Sensitivity%20Analysis.ipynb
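To make the notion of a relevance score concrete, here is a minimal sketch of sensitivity analysis, which scores each input feature by the squared partial derivative of the output. The toy `model`, its coefficients, and the finite-difference gradient are assumptions for illustration; the notebook itself uses TensorFlow.

```python
import math


def model(x):
    """Toy scalar model (assumed): tanh of a weighted sum; the third feature has zero weight."""
    return math.tanh(0.5 * x[0] - 1.5 * x[1] + 0.0 * x[2])


def numerical_gradient(f, x, eps=1e-5):
    """Central-difference estimate of df/dx_i for every input feature."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2.0 * eps))
    return grad


def sensitivity(f, x):
    """Sensitivity-analysis relevance: R_i = (df/dx_i)^2."""
    return [g * g for g in numerical_gradient(f, x)]


scores = sensitivity(model, [1.0, 0.2, 3.0])
print(scores)
```

Note that the scores describe how strongly the output would change if a feature changed, not how much each feature contributed to the current output value.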

2.2 Simple Taylor Decomposition

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/2.2%20Simple%20Taylor%20Decomposition.ipynb
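A simple Taylor decomposition expands the output to first order around a root point and assigns each feature the corresponding term. The sketch below assumes a bias-free ReLU network, for which a root point taken arbitrarily close to the origin gives `R_i = x_i * df/dx_i` and the relevances sum to the output. The weights `W1` and `V` and the input are hypothetical values, not taken from the notebook.

```python
# Hypothetical bias-free two-layer ReLU network (weights are illustrative assumptions).
W1 = [[1.0, -1.0, 0.5],
      [0.5, 2.0, -1.0]]
V = [2.0, 1.0]


def forward(x):
    """Return the hidden pre-activations z and the scalar output f(x)."""
    z = [sum(w * xi for w, xi in zip(row, x)) for row in W1]
    return z, sum(v * max(0.0, zj) for v, zj in zip(V, z))


def gradient(x):
    """Analytic gradient of f; a ReLU unit passes gradient only where z > 0."""
    z, _ = forward(x)
    return [sum(V[j] * W1[j][i] for j in range(len(W1)) if z[j] > 0.0)
            for i in range(len(x))]


def simple_taylor(x):
    """First-order relevance R_i = x_i * df/dx_i (root point assumed near the origin)."""
    return [xi * gi for xi, gi in zip(x, gradient(x))]


x = [1.0, 2.0, 1.0]
_, output = forward(x)
relevance = simple_taylor(x)
print(relevance, output)
```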

2.3 Layer-wise Relevance Propagation

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/2.3%20Layer-wise%20Relevance%20Propagation%20%281%29.ipynb

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/2.3%20Layer-wise%20Relevance%20Propagation%20%282%29.ipynb
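The layer-by-layer redistribution can be sketched with the epsilon rule, one of several LRP propagation rules. This is a minimal plain-Python sketch under stated assumptions: a bias-free two-layer ReLU network with hypothetical weights `W1` and `W2`, and a helper named `lrp_epsilon` that is introduced here for illustration only.

```python
def matvec(W, a):
    """Dense layer without bias: z_j = sum_i W[j][i] * a[i]."""
    return [sum(w * ai for w, ai in zip(row, a)) for row in W]


def lrp_epsilon(W, a, relevance_out, eps=1e-9):
    """Redistribute relevance from the outputs of z = W a back onto the inputs a."""
    z = matvec(W, a)
    # The stabiliser eps takes the sign of z so the denominator never hits zero.
    s = [r / (zj + (eps if zj >= 0.0 else -eps)) for r, zj in zip(relevance_out, z)]
    return [ai * sum(W[j][i] * s[j] for j in range(len(W)))
            for i, ai in enumerate(a)]


# Hypothetical weights and input (assumptions for illustration).
W1 = [[1.0, -1.0, 0.5],
      [0.5, 2.0, -1.0]]
W2 = [[2.0, 1.0]]
x = [1.0, 0.5, 2.0]

# Forward pass, keeping the activations of every layer.
hidden = [max(0.0, z) for z in matvec(W1, x)]
output = matvec(W2, hidden)

# Backward pass: start from the output value and propagate layer by layer.
relevance_hidden = lrp_epsilon(W2, hidden, output)
relevance_input = lrp_epsilon(W1, x, relevance_hidden)
print(relevance_input)
```

With a small `eps` and no biases, the total relevance is approximately conserved from layer to layer, so the input relevances sum to roughly the output value.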

2.4 Deep Taylor Decomposition

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/2.4%20Deep%20Taylor%20Decomposition%20%281%29.ipynb

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/2.4%20Deep%20Taylor%20Decomposition%20%282%29.ipynb

2.5 DeepLIFT

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/2.5%20DeepLIFT.ipynb

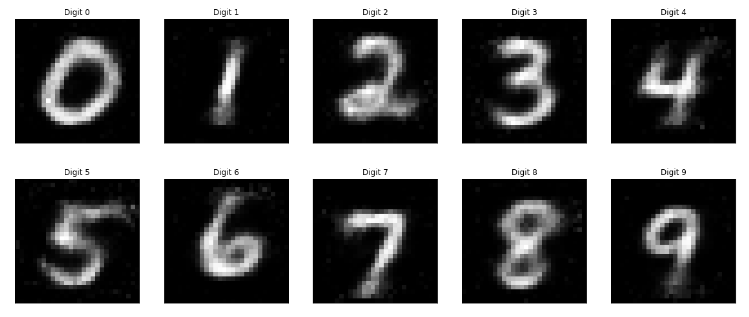

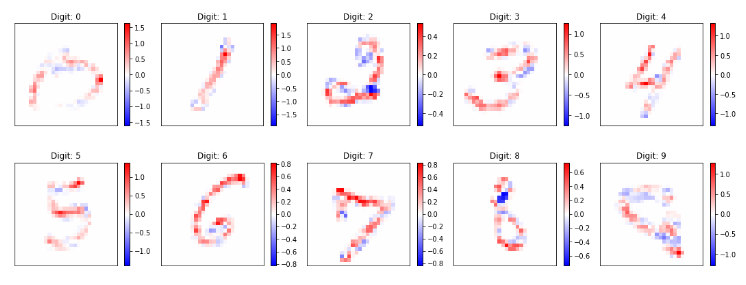

Gradient Based Methods

The gradient-based methods are deconvolution, backpropagation, guided backpropagation, integrated gradients, and SmoothGrad. See the following link:

https://github.com/1202kbs/Understanding-NN/blob/master/models/grad.py

Details are as follows:

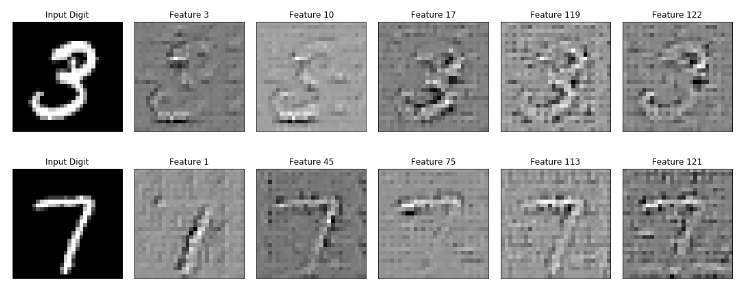

3.1 Deconvolution

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/3.1%20Deconvolution.ipynb

3.2 Backpropagation

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/3.2%20Backpropagation.ipynb

3.3 Guided Backpropagation

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/3.3%20Guided%20Backpropagation.ipynb

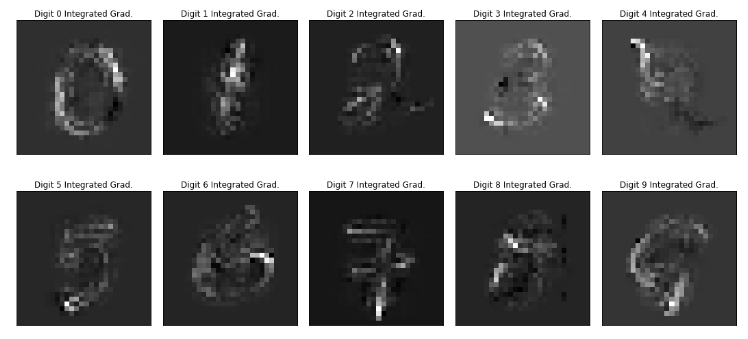

3.4 Integrated Gradients

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/3.4%20Integrated%20Gradients.ipynb
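Integrated gradients averages the gradient along a straight path from a baseline to the input and scales it by the input difference. The sketch below approximates that path integral with a midpoint Riemann sum. The sigmoid model, the weights `W`, the all-zero baseline, and the step count are assumptions made for illustration, not the notebook's setup.

```python
import math

W = [2.0, -1.0, 0.5]  # hypothetical weights of a one-unit sigmoid model


def f(x):
    """Model output: sigmoid of the weighted sum."""
    return 1.0 / (1.0 + math.exp(-sum(w * xi for w, xi in zip(W, x))))


def grad_f(x):
    """Analytic gradient of the sigmoid model with respect to x."""
    p = f(x)
    return [p * (1.0 - p) * w for w in W]


def integrated_gradients(x, baseline, steps=200):
    """Midpoint Riemann-sum approximation of the path integral of the gradient."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        total = [t + g for t, g in zip(total, grad_f(point))]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline)
print(attributions)
```

A useful sanity check is completeness: the attributions should sum to approximately `f(x) - f(baseline)`, with the gap shrinking as `steps` grows.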

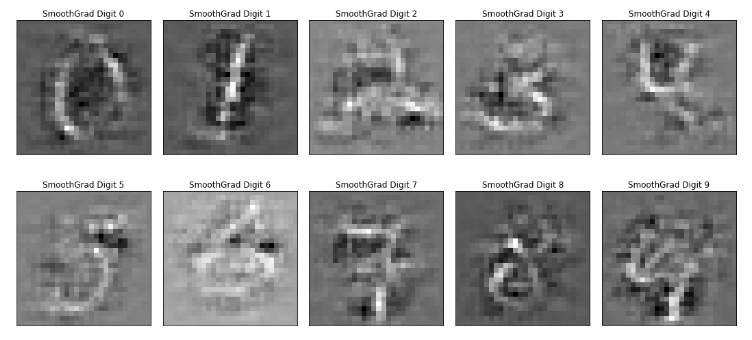

3.5 SmoothGrad

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/3.5%20SmoothGrad.ipynb
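SmoothGrad averages the gradient over several noisy copies of the input, which smooths out abrupt gradient changes. In this minimal sketch the single ReLU unit, the weights `W`, the noise level `sigma`, the sample count, and the fixed seed are all assumptions for illustration.

```python
import random

W = [2.0, -1.0, 0.5]  # hypothetical weights of a single ReLU unit


def grad_f(x):
    """Gradient of f(x) = max(0, W . x): equals W when the unit is active, else zero."""
    active = sum(w * xi for w, xi in zip(W, x)) > 0.0
    return [w if active else 0.0 for w in W]


def smoothgrad(x, sigma=0.5, n_samples=200, seed=0):
    """Average the gradient over Gaussian-perturbed copies of x."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    total = [0.0] * len(x)
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        total = [t + g for t, g in zip(total, grad_f(noisy))]
    return [t / n_samples for t in total]


x = [0.1, 0.1, 0.0]  # W . x is slightly positive, so x sits close to the ReLU kink
raw = grad_f(x)
smooth = smoothgrad(x)
print(raw, smooth)
```

Near the kink the raw gradient jumps between `W` and zero, while the averaged gradient is a scaled-down copy of `W` whose scale reflects how often the noisy inputs land on the active side.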

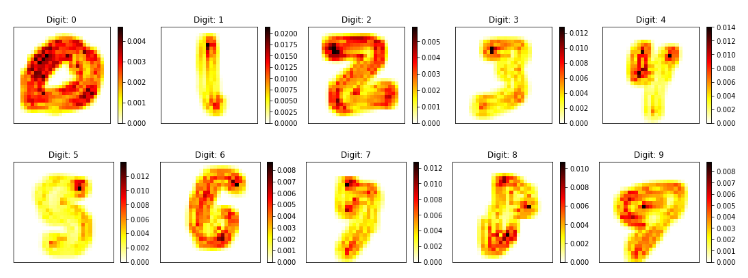

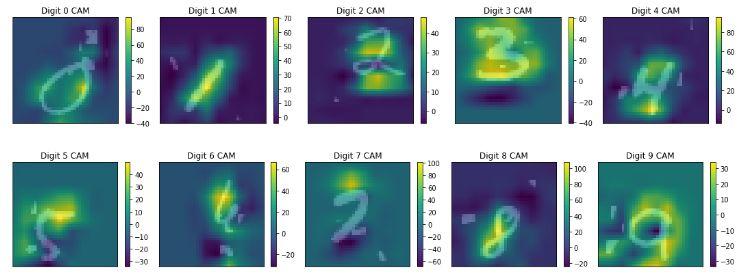

Class Activation Map

There are three class activation mapping methods: Class Activation Map, Grad-CAM, and Grad-CAM++. For the code on MNIST, see:

https://github.com/deepmind/mnist-cluttered

Details of each method are as follows:

4.1 Class Activation Map

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/4.1%20CAM.ipynb
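A class activation map is a weighted sum of the final convolutional feature maps, using the weights that connect the globally averaged features to the class score. The sketch below assumes a head made of global average pooling followed by a bias-free dense layer. The feature maps and `class_weights` are hypothetical numbers, not outputs of the notebook's model.

```python
def global_average_pool(feature_maps):
    """Average each feature map over its spatial positions."""
    return [sum(sum(row) for row in m) / (len(m) * len(m[0])) for m in feature_maps]


def class_activation_map(feature_maps, class_weights):
    """CAM(i, j) = sum_k class_weights[k] * feature_maps[k][i][j]."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [[sum(w * m[i][j] for w, m in zip(class_weights, feature_maps))
             for j in range(width)]
            for i in range(height)]


# Two hypothetical 2x2 feature maps and the dense weights of one class.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
class_weights = [3.0, -1.0]

cam = class_activation_map(feature_maps, class_weights)
score = sum(w * a for w, a in zip(class_weights, global_average_pool(feature_maps)))
print(cam, score)
```

Under these assumptions the class score equals the spatial mean of the map, which is why the map can be read as a per-location breakdown of the score.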

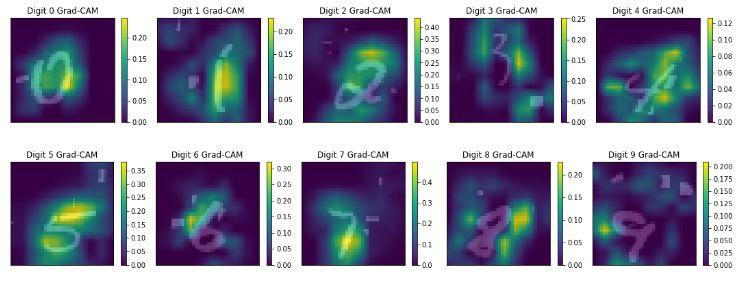

4.2 Grad-CAM

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/4.2%20Grad-CAM.ipynb

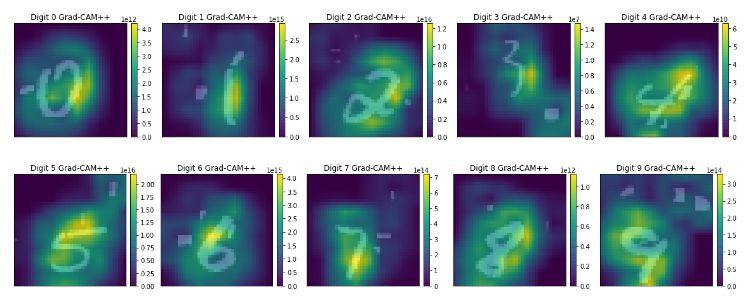

4.3 Grad-CAM++

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/4.3%20Grad-CAM-PP.ipynb

Quantifying Explanation Quality

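Although each explanation technique rests on its own intuition or mathematical principle, it is also important to identify, at a more abstract level, the characteristics of a good explanation and to be able to test for them quantitatively. Two methods for evaluating explanation quality are recommended here: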

5.1 Explanation Continuity

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/5.1%20Explanation%20Continuity.ipynb

5.2 Explanation Selectivity

The relevant code is here:

http://nbviewer.jupyter.org/github/1202kbs/Understanding-NN/blob/master/5.2%20Explanation%20Selectivity.ipynb
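One common way to test selectivity is to remove features in order of decreasing relevance and watch how quickly the model output falls. The sketch below assumes that "removing" a feature means setting it to zero and uses a hypothetical linear model. The function name `selectivity_curve` and both relevance vectors are illustrative, not taken from the notebook.

```python
W = [3.0, 1.0, 2.0]  # hypothetical weights of a linear model


def f(x):
    """Toy model output: a weighted sum of the features."""
    return sum(w * xi for w, xi in zip(W, x))


def selectivity_curve(model, x, relevance):
    """Zero out features from most to least relevant, recording the output each time."""
    order = sorted(range(len(x)), key=lambda i: relevance[i], reverse=True)
    current = list(x)
    curve = [model(current)]
    for i in order:
        current[i] = 0.0
        curve.append(model(current))
    return curve


x = [1.0, 1.0, 1.0]
faithful = selectivity_curve(f, x, relevance=[3.0, 1.0, 2.0])    # matches w_i * x_i
misleading = selectivity_curve(f, x, relevance=[1.0, 3.0, 2.0])  # deliberately wrong ranking
print(faithful, misleading)
```

A more selective explanation produces a curve that drops faster, so a smaller area under the curve indicates a better relevance ranking for this model.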