Let's Talk About Improving ChatGPT's Ability to Handle Ambiguous Questions

Prompt engineering techniques can help large language models handle complex coreferences, such as pronouns, in retrieval-augmented generation systems.

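Translated from "Improving ChatGPT's Ability to Understand Ambiguous Prompts". The author, Cheney Zhang, is an outstanding algorithm engineer at Zilliz. He has deep enthusiasm for and expertise in cutting-edge AI technologies such as LLMs and retrieval-augmented generation (RAG), and actively contributes to many innovative AI projects, including Towhee and Akcio.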

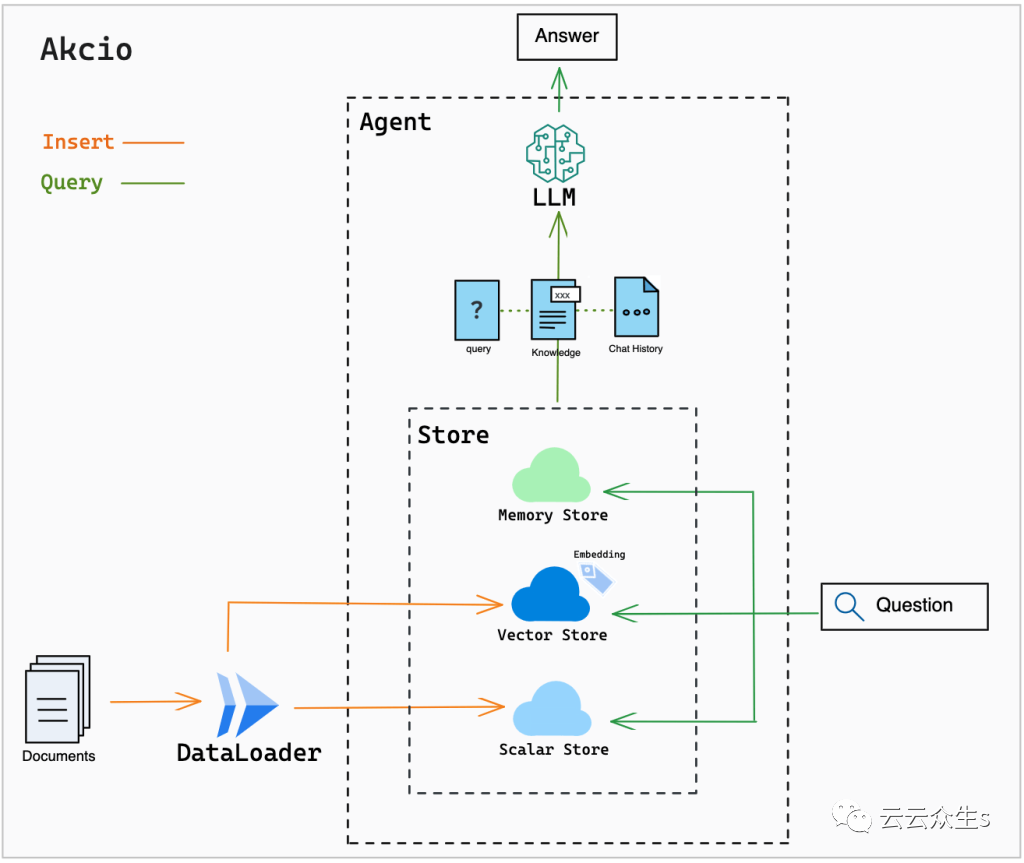

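In the ever-expanding field of AI, large language models (LLMs) such as ChatGPT are driving innovative research and applications at an unprecedented pace. One important development is the emergence of retrieval-augmented generation (RAG). This technique combines the power of LLMs with a vector database that serves as long-term memory, improving the accuracy of generated responses. A typical embodiment of the RAG approach is the open-source project Akcio, which provides a robust question-answering system.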

Akcio's architecture

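In Akcio's architecture, a data loader integrates domain-specific knowledge into a vector store such as Milvus or Zilliz (fully managed Milvus). The vector store retrieves the top-K results most relevant to the user's query and passes them to the LLM, giving it context about the user's question. The LLM then refines its response based on this external knowledge.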
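The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Akcio's or Milvus's API: a toy bag-of-words "embedding" and an in-memory list stand in for a real embedding model and vector store, and the names `embed`, `cosine`, `top_k` and `build_rag_prompt`, as well as the prompt wording, are assumptions made for this sketch.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding' (hypothetical stand-in for a real embedding model): lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, passages: list[str], k: int = 3) -> list[str]:
    """Return the k passages most similar to the query (the vector store's role above)."""
    q = embed(query)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:k]


def build_rag_prompt(query: str, passages: list[str], k: int = 3) -> str:
    """Combine the top-k passages with the user's query into a single LLM prompt."""
    context = "\n".join(f"{i}. {p}" for i, p in enumerate(top_k(query, passages, k), start=1))
    return f"Answer the question using the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Usage: a tiny hypothetical knowledge base
docs = [
    "In 2023, the LLM use cases can be divided into two categories: generation AI and decision-making.",
    "The generation AI scenario mainly includes dialogue interaction, code development, intelligent agents, etc.",
    "Milvus is an open-source vector database.",
]
print(build_rag_prompt("What are the LLM use cases in 2023?", docs, k=2))
```

In a real deployment the similarity search would be delegated to the vector database and the embeddings would come from a trained model; the sketch only shows how the top-K passages end up as context in the LLM prompt.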

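For example, suppose a user asks "What are the use cases of large language models in 2023?" about an article titled "2023 Large Language Model Progress Insight Report" that has been imported into Akcio. The system retrieves the three most relevant passages from the report: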

1. In 2023, the LLM use cases can be divided into two categories: generation AI and decision-making. Decision-making scenarios are expected to have higher business value.

2. The generation AI scenario mainly includes dialogue interaction, code development, intelligent agents, etc.

3. NLP applications include text classification, machine translation, sentiment analysis, automatic summarization, etc.

Akcio combines these passages with the original query and forwards them to the LLM, which generates a detailed and accurate response:

The application scenarios of the large model industry can be divided into generation and decision-making scenarios.

Challenges of coreference resolution in RAG

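However, despite this progress, implementing RAG systems still poses challenges, especially in multi-turn conversations that involve coreference resolution. Consider this sequence of questions: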

Q1: What are the use cases of the large language model in 2023?

A1: The use cases of large language models can be divided into generation AI and decision-making.

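Q2: What are their differences, and can you provide examples?

The pronoun "their" in Q2 refers to "generation AI and decision-making". However, the LLM may produce results unrelated to this question, breaking the coherence of the conversation: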

1. BERT and GPT are important models in the field of NLP, but they have significant differences in their design and application scenarios.

2. The difference between large models and small models lies in their scale and complexity. Large models usually have more parameters and more complex structures, requiring more computational resources and time for training and inference. Small models, on the other hand, are relatively simple with fewer parameters and faster training and inference speeds.

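3. However, there is no additional information to distinguish between these two products because they appear very similar.

Traditional methods, such as tokenization, lemmatization, and keyword replacement using recurrent neural networks, are often insufficient for resolving complex references. Researchers have therefore turned to LLMs such as ChatGPT for coreference resolution. This approach involves instructing ChatGPT either to replace pronouns based on the provided context or to keep the original question unchanged. Although promising, it occasionally produces an answer directly instead of following the prompt's instructions, which shows that a more refined strategy is needed.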

Test examples

We experimented with directly instructing ChatGPT to replace pronouns, using the following prompt format:

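```python
def build_prompt(history: str, question: str) -> str:
    # Direct instruction: ask ChatGPT to rewrite the question, filling in missing references
    return f'''Please generate a new question adhering to the following guidelines:
1. Complete any missing pronouns or conditions in the question based on the context.
2. Retain the original question if it's already complete.
{history}
Question: {question}'''
```

Example 1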

Query:

```python
history = '''What is NLP?
NLP stands for Natural Language Processing. It is a field of computer science and artificial intelligence that focuses on the interaction between computers and humans using natural language.'''
question = 'What problem does it solve?'
```

ChatGPT's response:

What problem does Natural Language Processing (NLP) solve?

In this case, ChatGPT did well, replacing "it" with "Natural Language Processing (NLP)".

Example 2

Query:

```python
history = '''When was GPT4 released?
GPT4 was released in 2023'''
question = 'What progress has been made in computer vision this year?'
```

ChatGPT's response:

What progress has been made in computer vision this year?

In this case, ChatGPT failed to replace "this year" with "2023", leaving the coreference resolution incomplete.

Example 3

Query:

```python
history = '''What is GPT3?
GPT-3 (Generative Pre-trained Transformer 3) is a state-of-the-art language processing model developed by OpenAI.
When was GPT4 released?
GPT4 was released in 2023'''
question = 'What is the difference between them? What are the advantages of the latter?'
```

ChatGPT's response:

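Sorry, the information provided about GPT-4's release date is not accurate as it has not been officially announced by OpenAI. Therefore, it is not possible to answer the question about the difference between GPT-3 and GPT-4 or the advantages of the latter.

Unfortunately, ChatGPT deviated from the instructions: instead of rewriting the question, it tried to answer it and even disputed the release date given in the history, possibly because of its complex decision-making process. Despite our efforts to strengthen the prompt, ChatGPT sometimes leans toward answering directly, which complicates the coreference resolution task.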

Few-shot prompting with chain of thought: an improved approach

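Prompt engineering plays a crucial role in using LLMs effectively. We decided to test combining few-shot prompting with the chain-of-thought (CoT) approach as a promising strategy. Few-shot prompting shows the LLM several reference examples and guides it to imitate them in its responses. CoT improves an LLM's performance on complex reasoning tasks by encouraging it to reason step by step in its answer.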

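By integrating these techniques, we developed a prompt format that guides ChatGPT through coreference resolution. The revised prompt includes examples with an empty conversation history, a basic pronoun substitution, a case where the pronoun cannot be replaced because the history has no referent, and a case where one pronoun refers to multiple entities, giving ChatGPT clearer instructions and reference examples. Instances where ChatGPT returns NEED COREFERENCE RESOLUTION: Yes are crucial, because they indicate that ChatGPT must replace pronouns or ambiguous references to obtain a coherent question.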
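One way to keep a few-shot prompt like this maintainable is to generate it from a list of worked examples rather than hand-editing one long string. The sketch below is an illustration under our own assumptions, not code from the article or from Akcio: `FewShotExample`, `format_history` and `build_coref_prompt` are hypothetical names, and the builder only reproduces the HISTORY / NOW QUESTION / NEED COREFERENCE RESOLUTION => THOUGHT => OUTPUT QUESTION layout described here, leaving the final block open so the model continues the pattern.

```python
from dataclasses import dataclass


@dataclass
class FewShotExample:
    """One worked example (hypothetical structure) in the few-shot CoT prompt."""
    history: list[tuple[str, str]]  # (question, answer) turns; [] means no history
    question: str
    need: str     # "Yes" or "No"
    thought: str  # the chain-of-thought step shown to the model
    output: str   # the rewritten (or unchanged) question


SEPARATOR = "-------------------"


def format_history(turns: list[tuple[str, str]]) -> str:
    """Render (question, answer) turns as 'Q: ...' / 'A: ...' lines inside square brackets."""
    return "[" + "\n".join(f"Q: {q}\nA: {a}" for q, a in turns) + "]"


def build_coref_prompt(examples: list[FewShotExample], turns: list[tuple[str, str]], question: str) -> str:
    """Worked examples first, then the real query left open after the final label."""
    blocks = [
        f"HISTORY:\n{format_history(ex.history)}\n"
        f"NOW QUESTION: {ex.question}\n"
        f"NEED COREFERENCE RESOLUTION: {ex.need} => THOUGHT: {ex.thought} "
        f"=> OUTPUT QUESTION: {ex.output}"
        for ex in examples
    ]
    # Ending on the label invites the model to continue with "Yes => THOUGHT: ..." or "No => ..."
    blocks.append(f"HISTORY:\n{format_history(turns)}\nNOW QUESTION: {question}\nNEED COREFERENCE RESOLUTION: ")
    return f"\n{SEPARATOR}\n".join(blocks)


# Usage with two of the worked examples from the prompt
examples = [
    FewShotExample([], "Hello, how are you?", "No",
                   "Consequently, the output question mirrors the current query.", "Hello, how are you?"),
    FewShotExample([("Is Milvus a vector database?", "Yes, Milvus is a vector database.")],
                   "How to use it?", "Yes",
                   "I must substitute 'it' with 'Milvus' in the current question.", "How to use Milvus?"),
]
prompt = build_coref_prompt(examples, [("When was GPT-4 released?", "GPT-4 was released in 2023")],
                            "What progress has been made in computer vision this year?")
print(prompt)
```

Keeping the examples as data makes it easy to add a new failure case (for instance, a time expression like "this year") without touching the surrounding prompt text.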

Here is the optimized prompt format:

```python
# Few-shot + chain-of-thought template; {history} and {question} are filled in with str.format()
REWRITE_TEMP = '''
HISTORY:
[]
NOW QUESTION: Hello, how are you?
NEED COREFERENCE RESOLUTION: No => THOUGHT: Consequently, the output question mirrors the current query. => OUTPUT QUESTION: Hello, how are you?
-------------------
HISTORY:
[Q: Is Milvus a vector database?
A: Yes, Milvus is a vector database.]
NOW QUESTION: How to use it?
NEED COREFERENCE RESOLUTION: Yes => THOUGHT: I must substitute 'it' with 'Milvus' in the current question. => OUTPUT QUESTION: How to use Milvus?
-------------------
HISTORY:
[]
NOW QUESTION: What are its features?
NEED COREFERENCE RESOLUTION: Yes => THOUGHT: Although 'it' requires substitution, there's no suitable reference in the history. Thus, the output question remains unchanged. => OUTPUT QUESTION: What are its features?
-------------------
HISTORY:
[Q: What is PyTorch?
A: PyTorch is an open-source machine learning library for Python. It provides a flexible and efficient framework for building and training deep neural networks.
Q: What is Tensorflow?
A: TensorFlow is an open-source machine learning framework. It provides a comprehensive set of tools, libraries, and resources for building and deploying machine learning models.]
NOW QUESTION: What is the difference between them?
NEED COREFERENCE RESOLUTION: Yes => THOUGHT: 'Them' should be replaced with 'PyTorch and Tensorflow' in the current question. => OUTPUT QUESTION: What is the difference between PyTorch and Tensorflow?
-------------------
HISTORY:
[{history}]
NOW QUESTION: {question}
NEED COREFERENCE RESOLUTION: '''

# Usage: prompt = REWRITE_TEMP.format(history=history, question=question)
```

Tested examples and optimized responses

Here are some results from our experiments with the optimized prompt:

Example 1

Query:

```python
history = '''When was GPT-4 released?
GPT-4 was released in 2023'''
question = 'What progress has been made in computer vision this year?'
```

ChatGPT's optimized response:

Yes => THOUGHT: I need to replace "this year" with "2023" in the now question. => OUTPUT QUESTION: What progress has been made in computer vision in 2023?

Example 2

Query:

```python
history = '''What is GPT-3?
GPT-3 (Generative Pre-trained Transformer 3) is a state-of-the-art language processing model developed by OpenAI.
When was GPT-4 released?
GPT-4 was released in 2023'''
question = 'What is the difference between the two? What are the advantages of the latter?'
```

ChatGPT's optimized response:

Yes => THOUGHT: I need to replace 'the two' with 'GPT-3 and GPT-4' and 'the latter' with 'GPT-4' in the now question. => OUTPUT QUESTION: What is the difference between GPT-3 and GPT-4? What are the advantages of GPT-4?

In these tests, the optimized prompt format markedly improved ChatGPT's handling of complex coreference resolution. Questions involving multiple entities, which previously caused problems, were resolved correctly: ChatGPT replaced the pronouns and ambiguous references and produced accurate, context-aware questions.
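Because the model continues the prompt after "NEED COREFERENCE RESOLUTION:", its completion still has to be parsed before the rewritten question can be sent to the retriever. The article does not show this step, so the following is a hedged sketch under our own assumptions: `parse_rewrite` and `rewrite_question` are hypothetical helpers, and `llm` is any callable that takes a prompt string and returns the completion text, so no particular SDK is assumed. If the completion does not follow the expected format, for example because the model answered the question directly as in the earlier failed test, the sketch falls back to the original question.

```python
import re
from typing import Callable

# Capture the rest of the line after the label; stop at a newline in case the model keeps generating
OUTPUT_RE = re.compile(r"OUTPUT QUESTION:[ \t]*(.*)")


def parse_rewrite(completion: str, original_question: str) -> str:
    """Return the rewritten question, or the original one if the completion is off-format."""
    match = OUTPUT_RE.search(completion)
    if match is None:
        return original_question  # e.g. the model answered the question instead of rewriting it
    return match.group(1).strip() or original_question


def rewrite_question(prompt: str, question: str, llm: Callable[[str], str]) -> str:
    """Send an already-filled rewrite prompt to any LLM callable and parse the standalone question."""
    return parse_rewrite(llm(prompt), question)


# Usage with a stub in place of a real model call (hypothetical; returns the Example 1 completion)
def fake_llm(prompt: str) -> str:
    return ("Yes => THOUGHT: I need to replace \"this year\" with \"2023\" in the now question. "
            "=> OUTPUT QUESTION: What progress has been made in computer vision in 2023?")


question = "What progress has been made in computer vision this year?"
print(rewrite_question("<filled few-shot prompt>", question, fake_llm))
```

Falling back to the unmodified question is a conservative choice: retrieval may be less precise, but the conversation never receives an answer where a question was expected.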

Conclusion

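Prompt engineering plays a key role in solving coreference resolution in RAG systems built on LLMs. By combining techniques such as few-shot prompting and CoT, we substantially improved the RAG system's ability to handle complex references, enabling LLMs such as ChatGPT to accurately replace pronouns and ambiguous references and to produce coherent responses.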