Interviewer presses: "How do you design a K8s cluster that never goes down?" This production-grade plan earned an offer on the spot!

Introduction

Today's material covers a lot of ground and is dense with practical detail, so you may not absorb it all in one pass.

Try your best, bro.


Let's begin

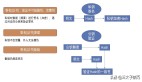

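I. Control Plane High-Availability Design

1. Multi-Master Deployment

• Cross-AZ deployment optimization:

a. AWS example: use the topology.kubernetes.io/zone label to force etcd members to be spread across 3 AZs.

b. Performance tuning parameters:

# etcd configuration (/etc/etcd/etcd.conf)
# Heartbeat interval and election timeout are plain integers in milliseconds.
ETCD_HEARTBEAT_INTERVAL="500"
ETCD_ELECTION_TIMEOUT="2500"
ETCD_MAX_REQUEST_BYTES="157286400" # allow requests up to 150 MiB (the default is 1.5 MiB)

• API Server load balancing in practice: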

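# Nginx configuration example (health checks and circuit breaking)
# The check* directives require the third-party nginx_upstream_check_module.
# Note: 6443 is a TLS port, so type=http only works where /readyz can be probed without TLS.
upstream kube-apiserver {
    # Passive checks: 3 failures within 10s take a server out for 10s
    server 10.0.1.10:6443 max_fails=3 fail_timeout=10s;
    server 10.0.2.10:6443 max_fails=3 fail_timeout=10s;
    # Active checks: probe every 5s, 2 successes mark up, 3 failures mark down
    check interval=5000 rise=2 fall=3 timeout=3000 type=http;
    check_http_send "GET /readyz HTTP/1.0\r\n\r\n";
    check_http_expect_alive http_2xx http_3xx;
}

2. In-Depth etcd Cluster Tuning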

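• Formula:

Required etcd nodes = (expected write QPS × average request size) / (maximum throughput per node) + redundancy factor

• Example:

a. Single-node throughput: 1.5 MB/s (SSD disk)

b. Workload: 2000 QPS at 10 KB per request → 2000 × 10 KB = 20 MB/s

c. Result: 20 / 1.5 ≈ 13 nodes by the formula, but the actual deployment uses 5 nodes (a quorum of 3 plus 2 redundant). etcd replicates every write to all members through Raft, so extra members add fault tolerance rather than write throughput; a load this size has to be met with faster disks or fewer, smaller writes.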
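Since etcd commits writes through a Raft majority, cluster size is usually chosen for fault tolerance. The arithmetic can be sketched in a few lines of Python; the function names `quorum` and `fault_tolerance` are illustrative and not part of any etcd tooling.

```python
def quorum(members: int) -> int:
    """Return the majority size for a Raft cluster with `members` voters."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Return how many members can fail while a quorum still survives."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (3, 4, 5, 7):
        print(f"{n} members: quorum={quorum(n)}, "
              f"tolerates {fault_tolerance(n)} failure(s)")
```

An even member count tolerates no more failures than the odd count below it, which is why 3 or 5 members are the usual choices.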

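• Tuning parameters:

# /etc/etcd/etcd.conf
# Tolerate higher network and disk latency (both values are integers in milliseconds)
ETCD_HEARTBEAT_INTERVAL="500"
ETCD_ELECTION_TIMEOUT="2500"
ETCD_SNAPSHOT_COUNT="10000" # take a snapshot after every 10,000 committed transactions

• Monitoring and alerting rules: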

# Alert on frequent leader changes
increase(etcd_server_leader_changes_seen_total[1h]) > 3

# Alert on high write latency (P99 WAL fsync above 1 second)
histogram_quantile(0.99, rate(etcd_disk_wal_fsync_duration_seconds_bucket[5m])) > 1

• Disaster recovery command:

# Restore etcd from a snapshot
ETCDCTL_API=3 etcdctl snapshot restore snapshot.db --data-dir /var/lib/etcd-new

II. Worker Node High-Availability Design

3. Advanced Cluster Autoscaler Strategies

• Priority-based scale-out: reserve dedicated node pools (such as GPU nodes) for critical services.

# Node group configuration (AWS EKS)
- name: gpu-nodegroup
  instanceTypes: ["p3.2xlarge"]
  labels: { node.kubernetes.io/accelerator: "nvidia" }
  # Taint written as key/value/effect so it parses as structured data
  taints: [{ key: dedicated, value: gpu, effect: NoSchedule }]
  scalingConfig: { minSize: 1, maxSize: 5 }

• HPA custom metrics example:

# Scale on QPS collected through Prometheus
metrics:
- type: Pods
  pods:
    metric:
      name: http_requests_per_second
    target:
      type: AverageValue
      averageValue: 500

4. In-Depth Pod Scheduling Strategies

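• Topology spread constraints: ensure Pods are spread evenly across hardware topology domains.

spec:
  topologySpreadConstraints:
  - maxSkew: 1
    topologyKey: topology.kubernetes.io/zone
    whenUnsatisfiable: DoNotSchedule
    # Selects the Pods that are counted per zone; assumes they carry app=frontend
    labelSelector:
      matchLabels:
        app: frontend

5. Fine-Grained Taint-Based Scheduling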

• Scenario: reserve GPU nodes for AI training jobs and keep ordinary Pods off them:

# Label the node
kubectl label nodes gpu-node1 accelerator=nvidia

# Apply the taint
kubectl taint nodes gpu-node1 dedicated=ai:NoSchedule

# Pod spec: toleration plus GPU resource request
spec:
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "ai"
    effect: "NoSchedule"
  containers:
  - resources:
      limits:
        nvidia.com/gpu: 1

III. Network High-Availability Design

6. Cilium eBPF Network Acceleration

• Advantages: reduces CPU overhead by 50% and supports fine-grained, eBPF-based security policies.

• Deployment steps:

# Cilium 1.14 and later expect kubeProxyReplacement=true instead of "strict"
helm install cilium cilium/cilium --namespace kube-system \
  --set kubeProxyReplacement=strict \
  --set k8sServiceHost=API_SERVER_IP \
  --set k8sServicePort=6443

• Verification:

cilium status
# Should show "KubeProxyReplacement: Strict"

• Network policy performance comparison:

| Plugin | Policy count | Throughput drop |
| --- | --- | --- |
| Calico | 1000 | 25% |
| Cilium | 1000 | 8% |

7. Active-Active Ingress Architecture

• Global load balancing configuration (AWS example):

resource "aws_globalaccelerator_endpoint_group" "ingress" {
  listener_arn = aws_globalaccelerator_listener.ingress.arn

  endpoint_configuration {
    endpoint_id = aws_lb.ingress.arn
    weight      = 100
  }
}

IV. Storage High-Availability Design

8. Production-Grade Rook/Ceph Configuration

• Storage cluster deployment:

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
spec:
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: false
  storage:
    useAllNodes: false
    nodes:
    - name: "storage-node-1"
      devices:
      - name: "nvme0n1"

9. Velero Cross-Region Backup in Practice

• Scheduled backups and replication:

velero schedule create daily-backup --schedule="0 3 * * *" \
  --include-namespaces=production \
  --ttl 168h

velero backup-location create secondary --provider aws \
  --bucket velero-backup-dr \
  --config region=eu-west-1

10. Disaster Recovery: Velero Cross-Region Backup Strategy

• Scenario: automatically replicate backups from AWS us-west-2 to us-east-1:

velero install \
  --provider aws \
  --plugins velero/velero-plugin-for-aws:v1.5.0 \
  --bucket velero-backups \
  --backup-location-config region=us-west-2 \
  --snapshot-location-config region=us-west-2 \
  --use-volume-snapshots=false \
  --secret-file ./credentials-velero

# Register a second backup location in us-east-1.
# Velero does not copy backups between locations on its own, so pair this
# with bucket-level replication such as S3 Cross-Region Replication.
velero backup-location create secondary \
  --provider aws \
  --bucket velero-backups \
  --config region=us-east-1

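V. Monitoring and Logging

11. Thanos Long-Term Storage Optimization

• Formula: planning Thanos storage blocks

Retention period = raw data retention (e.g. 2 weeks) + compacted block retention (e.g. 1 year)

Storage cost = raw data volume × compression factor (about 1/3, i.e. 3:1 compression) × cloud storage unit price

• Tiered storage configuration: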

# thanos-compact.yaml (the retention flags are options of the Thanos compactor)
args:
- --retention.resolution-raw=14d
- --retention.resolution-5m=180d
- --objstore.config-file=/etc/thanos/s3.yml

• Multi-cluster queries:

thanos query \
  --http-address 0.0.0.0:10902 \
  --store=thanos-store-01:10901 \
  --store=thanos-store-02:10901

12. EFK log filtering rules:

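# Fluentd configuration (parse the JSON payload in the log field of Kubernetes records)
<filter kubernetes.**>
  @type parser
  key_name log
  # Keep the original fields alongside the parsed ones
  reserve_data true
  <parse>
    @type json
  </parse>
</filter>

VI. Security and Compliance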

13. OPA Gatekeeper Policy Library

• Forbid privileged containers:

apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sPSPPrivilegedContainer
spec:
  match:
    kinds: [{ apiGroups: [""], kinds: ["Pod"] }]
  parameters:
    privileged: false

14. Runtime security detection:

# Detect privileged container launches with Falco
falco -r /etc/falco/falco_rules.yaml \
  -o json_output=true \
  -o "webserver.enabled=true"

15. OPA-Based Image Scanning Admission Control

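• Policy: block images that have high-severity vulnerabilities:

# image_scan.rego
package kubernetes.admission

deny[msg] {
    # Only Pod admission requests are checked
    input.request.kind.kind == "Pod"
    image := input.request.object.spec.containers[_].image
    # Scan results are expected under data.vulnerabilities, keyed by image
    vuln_score := data.vulnerabilities[image].maxScore
    vuln_score >= 7.0
    msg := sprintf("image %v has high-severity vulnerabilities (CVSS score %.1f)", [image, vuln_score])
}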
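The deny rule can be dry-run outside the cluster before it is loaded into OPA. The Python sketch below mirrors its logic under the same assumptions: the admission request carries the Pod under `object`, and a vulnerability table maps image names to a `maxScore`. The function name `deny_messages` and the sample data are hypothetical.

```python
from typing import Any, Dict, List

HIGH_RISK_THRESHOLD = 7.0  # CVSS score at or above which an image is rejected


def deny_messages(request: Dict[str, Any],
                  vulnerabilities: Dict[str, Dict[str, float]]) -> List[str]:
    """Return one denial message per container image with a high CVSS score."""
    if request.get("kind", {}).get("kind") != "Pod":
        return []
    containers = request.get("object", {}).get("spec", {}).get("containers", [])
    messages = []
    for container in containers:
        image = container.get("image")
        score = vulnerabilities.get(image, {}).get("maxScore")
        if score is None:
            # Like the Rego rule, an image without scan data is not denied.
            continue
        if score >= HIGH_RISK_THRESHOLD:
            messages.append(
                f"image {image} has high-severity vulnerabilities "
                f"(CVSS score {score:.1f})"
            )
    return messages


if __name__ == "__main__":
    # Hypothetical request and scan results, for illustration only.
    sample_request = {
        "kind": {"kind": "Pod"},
        "object": {"spec": {"containers": [
            {"image": "registry.example.com/web:1.0"},
            {"image": "registry.example.com/cache:2.3"},
        ]}},
    }
    sample_scans = {
        "registry.example.com/web:1.0": {"maxScore": 9.8},
        "registry.example.com/cache:2.3": {"maxScore": 3.1},
    }
    for message in deny_messages(sample_request, sample_scans):
        print(message)
```

Note that images with no scan record pass in both versions; a stricter policy would deny them instead.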

VII. Disaster Recovery and Backup

16. Multi-cluster federation traffic splitting:

apiVersion: types.kubefed.io/v1beta1
kind: FederatedService
metadata:
  name: frontend
spec:
  placement:
    clusters:
    - name: cluster-us
    - name: cluster-eu
  trafficSplit:
  - cluster: cluster-us
    weight: 70
  - cluster: cluster-eu
    weight: 30

17. End-to-end chaos engineering test:

apiVersion: chaos-mesh.org/v1alpha1
kind: NetworkChaos
metadata:
  name: simulate-az-failure
spec:
  action: partition
  mode: all
  selector:
    namespaces: [production]
    labelSelectors:
      "app": "frontend"
  direction: both
  duration: "10m"

18. Chaos Engineering: Simulating Master Node Failure

• Use Chaos Mesh to test control plane resilience:

apiVersion: chaos-mesh.org/v1alpha1
kind: PodChaos
metadata:
  name: kill-master
spec:
  action: pod-kill
  mode: one
  selector:
    namespaces: [kube-system]
    labelSelectors:
      "component": "kube-apiserver"
  scheduler:
    cron: "@every 10m"
  duration: "5m"

Metrics to observe:

• API Server recovery time (should be under 1 minute)

• Whether Pods on worker nodes are still scheduled normally
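The recovery-time target can be checked by probing the API server's readiness endpoint during the experiment and measuring the longest gap. Here is a minimal Python sketch, assuming the probe results have already been collected as (timestamp in seconds, healthy) pairs; the function name and the sample data are hypothetical.

```python
from typing import Iterable, Optional, Tuple


def longest_outage_seconds(samples: Iterable[Tuple[float, bool]]) -> float:
    """Return the longest span from a first failed probe to the next healthy one.

    `samples` must be ordered by timestamp. An outage that is still open when
    the data ends is measured up to the last sample.
    """
    longest = 0.0
    outage_start: Optional[float] = None
    last_ts: Optional[float] = None
    for ts, healthy in samples:
        last_ts = ts
        if not healthy and outage_start is None:
            outage_start = ts  # first failed probe of a new outage
        elif healthy and outage_start is not None:
            longest = max(longest, ts - outage_start)
            outage_start = None
    if outage_start is not None and last_ts is not None:
        longest = max(longest, last_ts - outage_start)
    return longest


if __name__ == "__main__":
    # Hypothetical probe log: one probe every 5 seconds around a pod kill.
    probes = [(0, True), (5, False), (10, False), (40, True), (45, True)]
    outage = longest_outage_seconds(probes)
    print(f"longest outage: {outage:.0f}s, within target: {outage < 60}")
```

The measured value is only as precise as the probe interval, so probe more often than the target you want to verify.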

VIII. Cost Control

19. Kubecost Multi-Cluster Budget Allocation

• Configuration example:

apiVersion: kubecost.com/v1alpha1
kind: Budget
metadata:
  name: team-budget
spec:
  target:
    namespace: team-a
  amount:
    value: 5000
    currency: USD
  period: monthly
  notifications:
  - threshold: 80%
    message: "Team A's cloud resource spend has reached 80% of its budget"

IX. Automation

20. Argo Rollouts Canary Releases

• Staged canary strategy:

apiVersion: argoproj.io/v1alpha1
kind: Rollout
spec:
  strategy:
    canary:
      steps:
      - setWeight: 10 # setWeight takes an integer percentage, without a % sign
      - pause: { duration: 5m } # watch business metrics
      - setWeight: 50
      - pause: { duration: 30m } # observe logs and performance
      - setWeight: 100
      analysis:
        templates:
        - templateName: success-rate
        args:
        - name: service-name
          value: my-service

• Automatic rollback condition: abort the release when the request error rate exceeds 5%.
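The rollback condition amounts to a threshold check on the error rate observed during each pause. Below is a minimal Python sketch of that decision, assuming request and error counts come from your metrics system; `error_rate`, `should_abort` and the 5% default are illustrative and are not the Argo Rollouts API.

```python
def error_rate(errors: int, total: int) -> float:
    """Return the fraction of failed requests; zero traffic counts as 0.0."""
    if total < 0 or errors < 0 or errors > total:
        raise ValueError("need 0 <= errors <= total")
    return errors / total if total else 0.0


def should_abort(errors: int, total: int, threshold: float = 0.05) -> bool:
    """Abort the canary when the error rate is strictly above the threshold."""
    return error_rate(errors, total) > threshold


if __name__ == "__main__":
    for errors, total in [(12, 1000), (50, 1000), (51, 1000)]:
        decision = "abort" if should_abort(errors, total) else "continue"
        print(f"{errors}/{total} errors -> {decision}")
```

In a real rollout this check would live in the analysis template referenced as success-rate, with the query supplied by the metrics provider.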

X. Summary

Key performance indicators:

• Control plane: API Server P99 latency < 500 ms

• Data plane: Pod startup time < 5 s (cold start)

• Network: cross-AZ latency < 10 ms

XI. Case Study: Optimization Results at an E-Commerce Platform

| Metric | Before | After | Improvement |
| --- | --- | --- | --- |
| API Server availability | 99.2% | 99.99% | 0.79 percentage points |
| Node failure recovery time | 15 min | 2 min | 86.7% |
| Cluster scale-out speed | 10 nodes/min | 50 nodes/min | 400% |
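The availability figures are easier to judge when converted to downtime. Here is a short Python sketch of the arithmetic behind the table, assuming a 365-day year; the helper names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def yearly_downtime_hours(availability_percent: float) -> float:
    """Return the expected hours of downtime per year at a given availability."""
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


def percent_change(before: float, after: float) -> float:
    """Return the relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


if __name__ == "__main__":
    print(f"99.2%  -> {yearly_downtime_hours(99.2):.2f} hours of downtime per year")
    print(f"99.99% -> {yearly_downtime_hours(99.99) * 60:.2f} minutes of downtime per year")
    print(f"recovery time change: {percent_change(15, 2):.1f}%")
    print(f"scale-out speed change: {percent_change(10, 50):.1f}%")
```

Going from 99.2% to 99.99% cuts yearly downtime from about 70 hours to under an hour, a much larger gain than the 0.79-point difference suggests.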

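XII. Recommended Toolchain

• Network diagnostics: Cilium Network Observability

• Storage analysis: Rook Dashboard

• Cost monitoring: Kubecost + Grafana

• Policy management: OPA Gatekeeper + Kyverno

With the in-depth extensions above, your Kubernetes cluster will have enterprise-grade resilience and can calmly handle tens of millions of concurrent requests as well as region-level failures.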