Implementing Backpropagation in Neural Networks

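Building a neural network involves several steps, and two of the most important are implementing forward propagation and backward propagation. Both terms sound heavy and tend to intimidate beginners. In practice, once each technique is broken down into its individual steps, it becomes quite understandable. In this article we focus on backpropagation and build an intuition for each of its steps.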

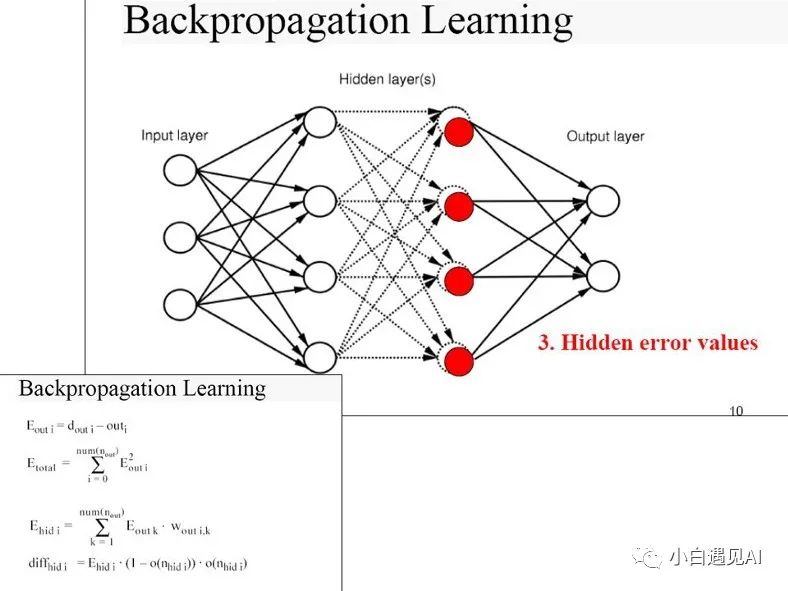

What is backpropagation?

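Backpropagation is simply a technique used when implementing neural networks. It lets us compute the gradients of the cost with respect to the parameters, so that we can perform gradient descent and minimize the cost function. It does this by applying the chain rule layer by layer, starting at the output and working back toward the input. Many scholars describe backpropagation as the most mathematically dense part of a neural network. Don't worry, though: in this article we will fully demystify every part of it.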
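To make the idea concrete, here is a minimal sketch that is not from the original article. It uses a single sigmoid neuron with a binary cross-entropy loss on one example. The values of `w`, `b`, `x`, `y` and the learning rate of 0.1 are arbitrary illustrative assumptions.

The backward pass applies the chain rule to get dL/dw and dL/db. A central finite difference then checks the analytic gradient. Finally, one gradient-descent step lowers the loss.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, b, x, y):
    # Binary cross-entropy of one sigmoid neuron on a single example
    a = sigmoid(w * x + b)
    return -(y * np.log(a) + (1 - y) * np.log(1 - a))

def backward(w, b, x, y):
    # Chain rule: dL/dz = a - y, dz/dw = x, dz/db = 1
    a = sigmoid(w * x + b)
    dLdz = a - y
    return dLdz * x, dLdz  # (dL/dw, dL/db)

# Arbitrary illustrative values (assumptions, not from the article)
w, b, x, y = 0.5, -0.2, 1.5, 1.0
dw, db = backward(w, b, x, y)

# Check dL/dw against a central finite difference
eps = 1e-6
num_dw = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
print("analytic:", dw, "numerical:", num_dw)

# One gradient-descent step with an assumed learning rate of 0.1
lr = 0.1
w_new, b_new = w - lr * dw, b - lr * db
print("loss before:", loss(w, b, x, y), "after:", loss(w_new, b_new, x, y))
```

The same pattern scales to the two-layer network below. The only differences are that the chain rule is applied through two layers and the operations are vectorized over many examples.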

Implementing backpropagation

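Consider a simple two-layer neural network with one hidden layer and one output layer. We can carry out backpropagation as follows.

Initialize the weights and biases to be used in the network: the weights are initialized randomly and the biases are set to zero. The gradients of these parameters will be obtained from backpropagation and used to update them during gradient descent.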

```python
# Import the NumPy library
import numpy as np

# Set the seed for reproducibility
np.random.seed(100)

# First initialize the weights and biases and store them in a dictionary called W_B
def initialize(num_f, num_h, num_out):
    '''
    Description: This function randomly initializes the weights and biases of each layer of the neural network

    Input Arguments:
    num_f - number of training features
    num_h - the number of nodes in the hidden layer
    num_out - the number of nodes in the output layer

    Output:
    W_B - A dictionary of the initialized parameters.
    '''
    # Randomly initialize the weights, set the biases to zero, and store everything in a dictionary
    W_B = {
        'W1': np.random.randn(num_h, num_f),
        'b1': np.zeros((num_h, 1)),
        'W2': np.random.randn(num_out, num_h),
        'b2': np.zeros((num_out, 1))
    }

    return W_B
```

Perform forward propagation: this involves computing the linear and activation outputs of the hidden layer and the output layer.
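In matrix notation, forward propagation computes the following. This assumes, as the backward pass implies by reading the number of examples from `X.shape[1]`, that X has shape (num_f, m) with one training example per column.

$$
\begin{aligned}
Z^{[1]} &= W^{[1]} X + b^{[1]}, & A &= \mathrm{ReLU}\left(Z^{[1]}\right) = \max\left(Z^{[1]}, 0\right), \\
Z^{[2]} &= W^{[2]} A + b^{[2]}, & \hat{Y} &= \sigma\left(Z^{[2]}\right) = \frac{1}{1 + e^{-Z^{[2]}}}.
\end{aligned}
$$

Here $W^{[1]}$ has shape (num_h, num_f) and $W^{[2]}$ has shape (num_out, num_h), matching `initialize`. The bias column vectors $b^{[1]}$ and $b^{[2]}$ are broadcast across the m columns.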

For the hidden layer, we will use the ReLU activation function, as shown below:

```python
# Next, create a function for each activation function
def relu(Z):
    '''
    Description: This function applies the ReLU activation function to a given number or matrix.

    Input Arguments:
    Z - matrix or number

    Output:
    relu_Z - matrix or number with ReLU applied to it
    '''
    relu_Z = np.maximum(Z, 0)

    return relu_Z
```

For the output layer, we will use the sigmoid activation function, as shown below:

```python
def sigmoid(Z):
    '''
    Description: This function applies the sigmoid activation function to a given number or matrix.

    Input Arguments:
    Z - matrix or number

    Output:
    sigmoid_Z - matrix or number with sigmoid applied to it
    '''
    sigmoid_Z = 1 / (1 + np.exp(-Z))

    return sigmoid_Z
```

Now perform forward propagation:

```python
# Now perform forward propagation
def forward_propagation(X, W_B):
    '''
    Description: This function performs forward propagation in vectorized form

    Input Arguments:
    X - input training examples, shape (num_f, no_examples), one example per column
    W_B - initialized weights and biases

    Output:
    forward_results - A dictionary containing the linear and activation outputs
    '''
    # Linear output of the hidden layer: W1 has shape (num_h, num_f), so it multiplies X from the left
    Z1 = np.dot(W_B['W1'], X) + W_B['b1']

    # Activation output of the hidden layer
    A = relu(Z1)

    # Linear output of the output layer
    Z2 = np.dot(W_B['W2'], A) + W_B['b2']

    # Activation output of the output layer
    Y_pred = sigmoid(Z2)

    # Save everything in a dictionary
    forward_results = {"Z1": Z1,
                       "A": A,
                       "Z2": Z2,
                       "Y_pred": Y_pred}

    return forward_results
```

Perform backward propagation: compute the gradients of the cost with respect to the quantities involved in gradient descent. In this case these are dLdZ2, dLdW2, dLdb2, dLdZ1, dLdW1 and dLdb1. The parameter gradients (dLdW1, dLdb1, dLdW2, dLdb2) are then combined with a learning rate to perform gradient descent. We will implement a vectorized version of backpropagation over many training examples (no_examples).
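For reference, these gradients follow from the chain rule. The derivation assumes X stores one example per column and m = no_examples. The notation is as follows:

* $\odot$ denotes element-wise multiplication.
* $\mathbb{1}[Z^{[1]} > 0]$ is the ReLU derivative: 1 where $Z^{[1]}$ is positive and 0 elsewhere.
* $\alpha$ is a learning rate, a symbol introduced here for illustration.

The vectorized backward pass and the gradient-descent update can then be sketched as:

$$
\begin{aligned}
dZ^{[2]} &= \hat{Y} - Y, \\
dW^{[2]} &= \tfrac{1}{m}\, dZ^{[2]} A^{\top}, \qquad db^{[2]} = \tfrac{1}{m} \textstyle\sum_{i=1}^{m} dZ^{[2]}_{:,i}, \\
dZ^{[1]} &= \left(W^{[2]\top} dZ^{[2]}\right) \odot \mathbb{1}\left[Z^{[1]} > 0\right], \\
dW^{[1]} &= \tfrac{1}{m}\, dZ^{[1]} X^{\top}, \qquad db^{[1]} = \tfrac{1}{m} \textstyle\sum_{i=1}^{m} dZ^{[1]}_{:,i}, \\
W^{[l]} &\leftarrow W^{[l]} - \alpha\, dW^{[l]}, \qquad b^{[l]} \leftarrow b^{[l]} - \alpha\, db^{[l]}, \quad l \in \{1, 2\}.
\end{aligned}
$$

The simple form $dZ^{[2]} = \hat{Y} - Y$ comes from combining the sigmoid derivative $\sigma'(z) = \sigma(z)\left(1 - \sigma(z)\right)$ with the binary cross-entropy loss. In the code, $dZ^{[2]}$, $dW^{[2]}$, $db^{[2]}$, $dZ^{[1]}$, $dW^{[1]}$ and $db^{[1]}$ correspond to dLdZ2, dLdW2, dLdb2, dLdZ1, dLdW1 and dLdb1.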

Here is the step-by-step guide:

- Obtain the results of the forward pass:

```python
forward_results = forward_propagation(X, W_B)
Z1 = forward_results['Z1']
A = forward_results['A']
Z2 = forward_results['Z2']
Y_pred = forward_results['Y_pred']
```

- Obtain the number of training examples:

```python
no_examples = X.shape[1]
```

- Compute the loss (binary cross-entropy, averaged over the examples):

```python
L = (1/no_examples) * np.sum(-Y_true * np.log(Y_pred) - (1 - Y_true) * np.log(1 - Y_pred))
```

- Compute the gradient of each parameter:

```python
dLdZ2 = Y_pred - Y_true
dLdW2 = (1/no_examples) * np.dot(dLdZ2, A.T)
dLdb2 = (1/no_examples) * np.sum(dLdZ2, axis=1, keepdims=True)
# The hidden layer uses ReLU, whose derivative is 1 where Z1 > 0 and 0 elsewhere
dLdZ1 = np.multiply(np.dot(W_B['W2'].T, dLdZ2), (Z1 > 0))
dLdW1 = (1/no_examples) * np.dot(dLdZ1, X.T)
dLdb1 = (1/no_examples) * np.sum(dLdZ1, axis=1, keepdims=True)
```

- Store the gradients needed for gradient descent in a dictionary:

```python
gradients = {"dLdW1": dLdW1,
             "dLdb1": dLdb1,
             "dLdW2": dLdW2,
             "dLdb2": dLdb2}
```

- Return the stored gradients and the loss:

```python
return gradients, L
```

Here is the complete backward propagation function:

```python
def backward_propagation(X, W_B, Y_true):
    '''
    Description: This function performs backward propagation in vectorized form

    Input Arguments:
    X - input training examples, shape (num_f, no_examples)
    W_B - initialized weights and biases
    Y_true - the true target values of the training examples, shape (num_out, no_examples)

    Output:
    gradients - the calculated gradients of each parameter
    L - the value of the loss function
    '''
    # Obtain the results of forward propagation
    forward_results = forward_propagation(X, W_B)
    Z1 = forward_results['Z1']
    A = forward_results['A']
    Z2 = forward_results['Z2']
    Y_pred = forward_results['Y_pred']

    # Obtain the number of training examples
    no_examples = X.shape[1]

    # Calculate the binary cross-entropy loss
    L = (1/no_examples) * np.sum(-Y_true * np.log(Y_pred) - (1 - Y_true) * np.log(1 - Y_pred))

    # Calculate the gradients of each parameter needed for gradient descent
    dLdZ2 = Y_pred - Y_true
    dLdW2 = (1/no_examples) * np.dot(dLdZ2, A.T)
    dLdb2 = (1/no_examples) * np.sum(dLdZ2, axis=1, keepdims=True)
    # The hidden layer uses ReLU, whose derivative is 1 where Z1 > 0 and 0 elsewhere
    dLdZ1 = np.multiply(np.dot(W_B['W2'].T, dLdZ2), (Z1 > 0))
    dLdW1 = (1/no_examples) * np.dot(dLdZ1, X.T)
    dLdb1 = (1/no_examples) * np.sum(dLdZ1, axis=1, keepdims=True)

    # Store the gradients for gradient descent in a dictionary
    gradients = {"dLdW1": dLdW1,
                 "dLdb1": dLdb1,
                 "dLdW2": dLdW2,
                 "dLdb2": dLdb2}

    return gradients, L
```

Many people assume backpropagation is difficult, but as this article shows, it does not have to be. To master backpropagation as a whole, you need to master each of its steps. It is also necessary to know the underlying mathematics, such as linear algebra and calculus, to understand how the gradient of each function is computed. With these tools, backpropagation should be a piece of cake! In practice, backpropagation is usually handled by whichever deep learning framework you use. Still, it is worth understanding what the technique does internally, because that knowledge can help explain why a neural network is not training well.
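One practical way to apply this understanding when a network trains poorly is gradient checking. The idea is to compare the analytic gradients from backpropagation with central finite differences of the loss. The following self-contained sketch re-implements the two-layer network compactly. The function names `loss_and_grads` and `numerical_grad`, the layer sizes and the random data are all hypothetical and chosen only for illustration. If the backward pass is correct, the largest discrepancy it reports should be tiny.

```python
import numpy as np

def relu(Z):
    return np.maximum(Z, 0)

def sigmoid(Z):
    return 1 / (1 + np.exp(-Z))

def loss_and_grads(X, P, Y_true):
    # Forward pass (X has one example per column)
    m = X.shape[1]
    Z1 = P["W1"] @ X + P["b1"]
    A = relu(Z1)
    Y_pred = sigmoid(P["W2"] @ A + P["b2"])
    L = np.sum(-Y_true * np.log(Y_pred) - (1 - Y_true) * np.log(1 - Y_pred)) / m
    # Backward pass (ReLU derivative is 1 where Z1 > 0, else 0)
    dZ2 = Y_pred - Y_true
    dZ1 = (P["W2"].T @ dZ2) * (Z1 > 0)
    grads = {"W1": dZ1 @ X.T / m, "b1": dZ1.sum(axis=1, keepdims=True) / m,
             "W2": dZ2 @ A.T / m, "b2": dZ2.sum(axis=1, keepdims=True) / m}
    return L, grads

def numerical_grad(X, P, Y_true, name, eps=1e-6):
    # Central finite difference for every entry of parameter `name`
    G = np.zeros_like(P[name])
    for idx in np.ndindex(P[name].shape):
        original = P[name][idx]
        P[name][idx] = original + eps
        L_plus, _ = loss_and_grads(X, P, Y_true)
        P[name][idx] = original - eps
        L_minus, _ = loss_and_grads(X, P, Y_true)
        P[name][idx] = original  # restore the parameter
        G[idx] = (L_plus - L_minus) / (2 * eps)
    return G

# Hypothetical sizes and random data, for illustration only
rng = np.random.default_rng(0)
num_f, num_h, num_out, m = 3, 4, 1, 5
P = {"W1": rng.standard_normal((num_h, num_f)), "b1": np.zeros((num_h, 1)),
     "W2": rng.standard_normal((num_out, num_h)), "b2": np.zeros((num_out, 1))}
X = rng.standard_normal((num_f, m))
Y_true = (rng.random((num_out, m)) > 0.5).astype(float)

L, grads = loss_and_grads(X, P, Y_true)
max_err = max(np.max(np.abs(grads[k] - numerical_grad(X, P, Y_true, k))) for k in P)
print("loss:", L, "max |analytic - numerical|:", max_err)
```

A large discrepancy usually points to a bug in the backward pass. One example is using the derivative of a different activation function than the one applied in the forward pass.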

This article is reprinted from 小白遇見AI; author: 小煩.