How to Use TensorFlow's High-Level APIs: Estimator, Experiment and Dataset

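Peter Roelants, research lead at the background-check company Onfido, recently published an article on Medium titled "Higher-Level APIs in TensorFlow". Using a worked example, it explains in detail how to train a model with TensorFlow's high-level APIs (Estimator, Experiment and Dataset). It is worth noting that Experiment and Dataset can each be used independently. These high-level APIs are included in the recently released TensorFlow 1.3.

Many popular libraries are built on top of TensorFlow, such as Keras, TFLearn and Sonnet. They make it easy to train models without touching the low-level functions. The Keras API is now moving towards being implemented directly in TensorFlow, and TensorFlow itself offers a growing number of high-level constructs, some of which are included in the recently released TensorFlow 1.3.

In this article we work through an example to learn how to use some of these high-level constructs, including Estimator, Experiment and Dataset. Basic knowledge of TensorFlow is assumed.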

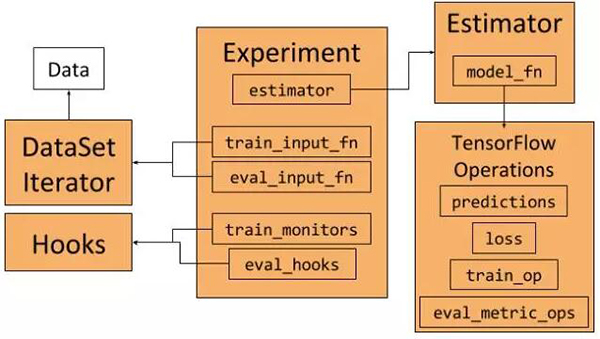

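The Experiment, Estimator and Dataset framework and how the components interact (each component is explained below)

We use MNIST as the dataset in this article. It is easy to use and can be accessed through TensorFlow. You can find the complete example code in this gist. One benefit of using these frameworks is that we do not need to deal with graphs and sessions directly.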

Estimator

The Estimator class represents a model, together with how that model is trained and evaluated. We can build an estimator like this:

    def get_estimator(run_config, params):
        return tf.estimator.Estimator(
            model_fn=model_fn,  # First-class function
            params=params,  # HParams
            config=run_config  # RunConfig
        )

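To build an Estimator we need to pass in a model function, a collection of parameters and some configuration.

- The parameters should be a collection of the model's hyperparameters. This can be a dictionary, but in this example we represent it as an HParams object, which is used like a namedtuple.

- The configuration specifies how training and evaluation are run and where results are stored. It is represented by a RunConfig object, which communicates everything the Estimator needs to know about the environment in which the model will run.

- The model function is a Python function that builds the model for a given input (more on this below).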
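The two non-function arguments can be pictured with plain namedtuples. This is only a minimal sketch and not TensorFlow code: `ToyHParams` and `ToyRunConfig` are hypothetical stand-ins for HParams and RunConfig, and the values are the ones used in this article's example.

```python
from collections import namedtuple

# Hypothetical stand-ins (assumptions for illustration, not TensorFlow classes).
ToyHParams = namedtuple(
    "ToyHParams",
    ["learning_rate", "n_classes", "train_steps", "min_eval_frequency"])
ToyRunConfig = namedtuple("ToyRunConfig", ["model_dir", "save_checkpoints_steps"])

# Hyperparameters are read by attribute name, as with a namedtuple.
params = ToyHParams(learning_rate=0.002, n_classes=10,
                    train_steps=5000, min_eval_frequency=100)

# Updating a config yields a modified copy; the original is left untouched.
base_config = ToyRunConfig(model_dir=None, save_checkpoints_steps=None)
run_config = base_config._replace(model_dir="./mnist_training")
```

The copy-on-update style mirrors how the article's code reassigns `run_config = run_config.replace(...)` rather than mutating the config in place.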

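Model function

The model function is a Python function that is passed to the Estimator as a first-class function. As we will see later, TensorFlow uses first-class functions in other places too. The benefit of representing the model as a function is that the model can be rebuilt again and again by calling the function. The model can be re-created during training with different inputs, for example to run a validation test during training.

The model function takes the input features and the corresponding labels as tensors. It also takes a mode that signals whether the model is being trained, evaluated or used for inference. The last argument of the model function is a collection of hyperparameters, the same ones that were passed to the Estimator. The model function must return an EstimatorSpec object, which defines the complete model.

EstimatorSpec takes the prediction, loss, training and evaluation operations, so it defines the full model graph used for training, evaluation and inference. Because EstimatorSpec takes regular TensorFlow operations, we can use a framework such as TF-Slim to define our model.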
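The shape of such a model function can be illustrated without TensorFlow. The sketch below is an analogy under stated assumptions: `ToySpec`, `toy_model_fn` and the mode constants are hypothetical names, and the "model" is just a scaled copy of the features. It shows the same function being called once per mode and returning a single spec object each time.

```python
from collections import namedtuple

# Hypothetical stand-in for EstimatorSpec; the fields mirror those named above.
ToySpec = namedtuple(
    "ToySpec", ["mode", "predictions", "loss", "train_op", "eval_metric_ops"])

TRAIN, EVAL, INFER = "train", "eval", "infer"


def toy_model_fn(features, labels, mode, params):
    """Build a toy 'model' (scaled features) for the requested mode."""
    predictions = [params["scale"] * x for x in features]
    loss = None
    train_op = None
    eval_metric_ops = {}
    if mode != INFER:
        # Labels are only available, and only needed, outside inference.
        loss = sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)
        train_op = "minimise loss"  # placeholder for a real training operation
        eval_metric_ops = {"mean_squared_error": loss}
    return ToySpec(mode, predictions, loss, train_op, eval_metric_ops)


params = {"scale": 2.0}
# Because the model is a function, it can be rebuilt for each mode and input.
train_spec = toy_model_fn([1.0, 2.0], [2.0, 5.0], TRAIN, params)
infer_spec = toy_model_fn([3.0], None, INFER, params)
```

In inference mode only the predictions are populated, which matches the way the article's model function leaves the loss and training operation unset when no labels are available.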

Experiment

The Experiment class defines how a model is trained and integrates with the Estimator. We can create an experiment like this:

    experiment = tf.contrib.learn.Experiment(
        estimator=estimator,  # Estimator
        train_input_fn=train_input_fn,  # First-class function
        eval_input_fn=eval_input_fn,  # First-class function
        train_steps=params.train_steps,  # Minibatch steps
        min_eval_frequency=params.min_eval_frequency,  # Eval frequency
        train_monitors=[train_input_hook],  # Hooks for training
        eval_hooks=[eval_input_hook],  # Hooks for evaluation
        eval_steps=None  # Use evaluation feeder until it is empty
    )

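An Experiment takes as input:

- An Estimator (for example the one defined above).

- Training and evaluation data as first-class functions. This is the same concept as the model function described earlier: by passing functions rather than operations, the input graph can be re-created when needed. We will come back to this later.

- Training and evaluation hooks. Hooks can be used to monitor or save specific things, or to set up operations in the graph or session. In this example we use a hook to help initialise the data loader.

- Various parameters that describe how long to train and when to evaluate.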
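How these inputs fit together can be sketched as a plain Python loop. This is a simplification under stated assumptions, not the behaviour of the real Experiment class: `toy_experiment_loop` is a hypothetical function, hooks are modelled as zero-argument callables, and evaluation is triggered purely by step count (the article's code sets the checkpoint interval to the same value as the evaluation frequency, which suggests the real mechanism is tied to checkpoints).

```python
def toy_experiment_loop(train_step, evaluate, train_steps, min_eval_frequency,
                        train_hooks=(), eval_hooks=()):
    """Train for train_steps steps, evaluating every min_eval_frequency steps."""
    for hook in train_hooks:
        hook()  # e.g. initialise the training data loader
    eval_results = []
    for step in range(1, train_steps + 1):
        train_step(step)
        if step % min_eval_frequency == 0:
            for hook in eval_hooks:
                hook()  # e.g. re-initialise the evaluation data loader
            eval_results.append((step, evaluate()))
    return eval_results


events = []
results = toy_experiment_loop(
    train_step=lambda step: events.append("train"),
    evaluate=lambda: events.count("train"),  # toy metric: steps seen so far
    train_steps=10,
    min_eval_frequency=4,
    train_hooks=[lambda: events.append("init train data")],
    eval_hooks=[lambda: events.append("init eval data")],
)
```

With ten training steps and an evaluation frequency of four, the loop evaluates after steps 4 and 8, and each evaluation first runs the evaluation hooks.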

Once we have defined the experiment, we can run it with learn_runner.run to train and evaluate the model:

    learn_runner.run(
        experiment_fn=experiment_fn,  # First-class function
        run_config=run_config,  # RunConfig
        schedule="train_and_evaluate",  # What to run
        hparams=params  # HParams
    )

Like the model function and the data functions, the learn runner takes the function that creates the experiment as a parameter.
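The idea of handing the runner a function that creates the experiment, plus a schedule name that says what to run, can be sketched as follows. The names `ToyExperiment` and `toy_run` are hypothetical, and dispatching on the schedule string with `getattr` is an assumption made for illustration; it is not taken from the learn_runner source.

```python
class ToyExperiment:
    """Hypothetical experiment exposing one method per schedule name."""

    def __init__(self, run_config, hparams):
        self.run_config = run_config
        self.hparams = hparams

    def train_and_evaluate(self):
        return "trained {} steps, saved to {}".format(
            self.hparams["train_steps"], self.run_config["model_dir"])


def toy_run(experiment_fn, run_config, schedule, hparams):
    """Create the experiment via experiment_fn, then call the scheduled method."""
    experiment = experiment_fn(run_config, hparams)
    task = getattr(experiment, schedule, None)
    if not callable(task):
        raise ValueError("Unknown schedule: {}".format(schedule))
    return task()


outcome = toy_run(
    experiment_fn=ToyExperiment,  # any callable(run_config, hparams) works
    run_config={"model_dir": "./mnist_training"},
    schedule="train_and_evaluate",
    hparams={"train_steps": 5000},
)
```

Passing the factory rather than a finished experiment lets the runner decide when, and with which configuration, the experiment is actually built.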

Dataset

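We use the Dataset class and its corresponding Iterator to represent our training and evaluation data and to create data feeders that iterate over the data during training. In this example we use the MNIST data that is available in TensorFlow and build a Dataset wrapper around it. For example, we represent the training input data as: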

    # Define the training inputs
    def get_train_inputs(batch_size, mnist_data):
        """Return the input function to get the training data.

        Args:
            batch_size (int): Batch size of training iterator that is returned
                              by the input function.
            mnist_data (Object): Object holding the loaded mnist data.

        Returns:
            (Input function, IteratorInitializerHook):
                - Function that returns (features, labels) when called.
                - Hook to initialise input iterator.
        """
        iterator_initializer_hook = IteratorInitializerHook()

        def train_inputs():
            """Returns training set as Operations.

            Returns:
                (features, labels) Operations that iterate over the dataset
                on every evaluation
            """
            with tf.name_scope('Training_data'):
                # Get Mnist data
                images = mnist_data.train.images.reshape([-1, 28, 28, 1])
                labels = mnist_data.train.labels
                # Define placeholders
                images_placeholder = tf.placeholder(
                    images.dtype, images.shape)
                labels_placeholder = tf.placeholder(
                    labels.dtype, labels.shape)
                # Build dataset iterator
                dataset = tf.contrib.data.Dataset.from_tensor_slices(
                    (images_placeholder, labels_placeholder))
                dataset = dataset.repeat(None)  # Infinite iterations
                dataset = dataset.shuffle(buffer_size=10000)
                dataset = dataset.batch(batch_size)
                iterator = dataset.make_initializable_iterator()
                next_example, next_label = iterator.get_next()
                # Set runhook to initialize iterator
                iterator_initializer_hook.iterator_initializer_func = \
                    lambda sess: sess.run(
                        iterator.initializer,
                        feed_dict={images_placeholder: images,
                                   labels_placeholder: labels})
                # Return batched (features, labels)
                return next_example, next_label

        # Return function and hook
        return train_inputs, iterator_initializer_hook

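Calling get_train_inputs returns a first-class function that creates the data-loading operations in the TensorFlow graph, together with a hook that initialises the iterator.

The MNIST data used in this example is initially represented as NumPy arrays. We create placeholder tensors to receive the data, and we use placeholders to avoid copying the data. Next, we create a sliced dataset with the help of from_tensor_slices. We make sure the dataset repeats indefinitely (the experiment takes care of the number of epochs), that the data is shuffled, and that it is split into batches of the required size.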
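The repeat, shuffle and batch steps can be mimicked with plain Python generators to make the data flow concrete. This is a sketch under stated assumptions, not the Dataset implementation: the three functions are hypothetical helpers, and the buffer-based shuffle is one common approach whose details may differ from what TensorFlow does internally.

```python
import itertools
import random


def repeat(items):
    """Yield the items over and over, like an infinite repeat."""
    return itertools.cycle(items)


def shuffle(stream, buffer_size, rng):
    """Shuffle a stream using a fixed-size buffer of pending elements."""
    buffer = []
    for item in stream:
        buffer.append(item)
        if len(buffer) > buffer_size:
            # Emit a random buffered element to make room for the next one.
            yield buffer.pop(rng.randrange(len(buffer)))
    rng.shuffle(buffer)
    for item in buffer:  # drain what is left once the stream ends
        yield item


def batch(stream, batch_size):
    """Group consecutive elements into lists of at most batch_size."""
    iterator = iter(stream)
    while True:
        chunk = list(itertools.islice(iterator, batch_size))
        if not chunk:
            return
        yield chunk


examples = list(range(10))
pipeline = batch(shuffle(repeat(examples), buffer_size=4, rng=random.Random(0)), 3)
first_batches = list(itertools.islice(pipeline, 5))  # take five batches of three
```

Because the repeated stream never ends, the consumer decides how many batches to draw, which is the role the experiment's step count plays in the article.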

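To iterate over the data we need to create an iterator from the dataset. Because we are using placeholders, we need to initialise them with the NumPy data inside the relevant session. We can do this by creating an initialisable iterator. When the graph is built, we create a custom IteratorInitializerHook object to initialise the iterator: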

    class IteratorInitializerHook(tf.train.SessionRunHook):
        """Hook to initialise data iterator after Session is created."""

        def __init__(self):
            super(IteratorInitializerHook, self).__init__()
            self.iterator_initializer_func = None

        def after_create_session(self, session, coord):
            """Initialise the iterator after the session has been created."""
            self.iterator_initializer_func(session)

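IteratorInitializerHook inherits from SessionRunHook. Once the relevant session has been created, the hook's after_create_session method is called and initialises the placeholders with the correct data. The hook is returned by the get_train_inputs function and passed to the Experiment object when it is created.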
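The timing matters here: the hook object is created first, its callback is filled in while the input pipeline is built, and the callback only runs once a session exists. The sketch below shows that ordering in plain Python. All names (`ToySessionHook`, `ToyInitializerHook`, `create_session`) are hypothetical, and the "session" is just a dictionary.

```python
class ToySessionHook:
    """Hypothetical hook base class with a no-op callback."""

    def after_create_session(self, session):
        pass


class ToyInitializerHook(ToySessionHook):
    """Runs a function, assigned later, once the session exists."""

    def __init__(self):
        self.initializer_func = None

    def after_create_session(self, session):
        self.initializer_func(session)


def create_session(hooks):
    """Create a toy 'session' (a dict) and notify every hook."""
    session = {"initialized": []}
    for hook in hooks:
        hook.after_create_session(session)
    return session


hook = ToyInitializerHook()
# The input function fills in the callback while it builds the data pipeline.
hook.initializer_func = lambda sess: sess["initialized"].append("train_iterator")
session = create_session([hook])
```

Deferring the initialisation to a callback is what allows the input function to be called before any session is available.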

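The data-loading operations returned by the train_inputs function are TensorFlow operations that return a new batch every time they are evaluated.

Running the code

Now that everything is defined, we can run the code with the following command: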

    python mnist_estimator.py --model_dir ./mnist_training --data_dir ./mnist_data

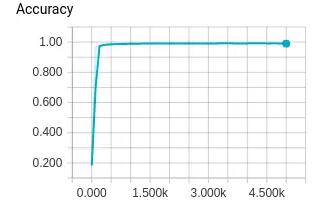

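If you do not pass any arguments, the script uses the default flags at the top of the file to decide where to save the data and the model. During training, information such as the global step, loss and accuracy is printed to the terminal. In addition, the Experiment and Estimator frameworks log certain statistics that TensorBoard can display. If we run: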

    tensorboard --logdir='./mnist_training'

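we can see all the training statistics, such as training loss, evaluation accuracy, time per step and the model graph.

Visualisation of evaluation accuracy in TensorBoard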
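Returning to the command-line flags: the fallback to default directories when no arguments are passed can be sketched with the standard library's argparse. This is an analogy rather than the article's mechanism (the script itself defines its flags through tf.app.flags); `parse_flags` is a hypothetical helper, and the default values are the ones shown in the article's listing.

```python
import argparse


def parse_flags(argv):
    """Parse the two directory flags, falling back to the article's defaults."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--model_dir", default="./mnist_training",
                        help="Output directory for model and training stats.")
    parser.add_argument("--data_dir", default="./mnist_data",
                        help="Directory to download the data to.")
    return parser.parse_args(argv)


defaults = parse_flags([])  # no arguments: both defaults apply
custom = parse_flags(["--model_dir", "/tmp/run1"])  # override one flag only
```

Overriding a single flag leaves the other at its default, so a second training run can write to a new model directory while reusing the downloaded data.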

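Examples of the Estimator, Experiment and Dataset frameworks in TensorFlow are still scarce, which is why this article exists. Hopefully it gives an introduction to how these frameworks work, which abstractions they adopt and how to use them. If you are interested in them, further related documentation is listed below.

Notes on Estimator, Experiment and Dataset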

- Paper "TensorFlow Estimators: Managing Simplicity vs. Flexibility in High-Level Machine Learning Frameworks": https://terrytangyuan.github.io/data/papers/tf-estimators-kdd-paper.pdf

- Using the Dataset API for TensorFlow Input Pipelines: https://www.tensorflow.org/versions/r1.3/programmers_guide/datasets

- tf.estimator.Estimator: https://www.tensorflow.org/api_docs/python/tf/estimator/Estimator

- tf.contrib.learn.RunConfig: https://www.tensorflow.org/api_docs/python/tf/contrib/learn/RunConfig

- tf.estimator.DNNClassifier: https://www.tensorflow.org/api_docs/python/tf/estimator/DNNClassifier

- tf.estimator.DNNRegressor: https://www.tensorflow.org/api_docs/python/tf/estimator/DNNRegressor

- Creating Estimators in tf.estimator: https://www.tensorflow.org/extend/estimators

- tf.contrib.learn.Head: https://www.tensorflow.org/api_docs/python/tf/contrib/learn/Head

- The Slim framework used in this article: https://github.com/tensorflow/models/tree/master/slim

Complete example

    """Script to illustrate usage of tf.estimator.Estimator in TF v1.3"""
    import tensorflow as tf

    from tensorflow.examples.tutorials.mnist import input_data as mnist_data
    from tensorflow.contrib import slim
    from tensorflow.contrib.learn import ModeKeys
    from tensorflow.contrib.learn import learn_runner

    # Show debugging output
    tf.logging.set_verbosity(tf.logging.DEBUG)

    # Set default flags for the output directories
    FLAGS = tf.app.flags.FLAGS
    tf.app.flags.DEFINE_string(
        flag_name='model_dir', default_value='./mnist_training',
        docstring='Output directory for model and training stats.')
    tf.app.flags.DEFINE_string(
        flag_name='data_dir', default_value='./mnist_data',
        docstring='Directory to download the data to.')

    # Define and run experiment ###############################
    def run_experiment(argv=None):
        """Run the training experiment."""
        # Define model parameters
        params = tf.contrib.training.HParams(
            learning_rate=0.002,
            n_classes=10,
            train_steps=5000,
            min_eval_frequency=100
        )

        # Set the run_config and the directory to save the model and stats
        run_config = tf.contrib.learn.RunConfig()
        run_config = run_config.replace(model_dir=FLAGS.model_dir)

        learn_runner.run(
            experiment_fn=experiment_fn,  # First-class function
            run_config=run_config,  # RunConfig
            schedule="train_and_evaluate",  # What to run
            hparams=params  # HParams
        )

    def experiment_fn(run_config, params):
        """Create an experiment to train and evaluate the model.

        Args:
            run_config (RunConfig): Configuration for Estimator run.
            params (HParams): Hyperparameters

        Returns:
            (Experiment) Experiment for training the mnist model.
        """
        # You can change a subset of the run_config properties
        run_config = run_config.replace(
            save_checkpoints_steps=params.min_eval_frequency)
        # Define the mnist classifier
        estimator = get_estimator(run_config, params)
        # Setup data loaders
        mnist = mnist_data.read_data_sets(FLAGS.data_dir, one_hot=False)
        train_input_fn, train_input_hook = get_train_inputs(
            batch_size=128, mnist_data=mnist)
        eval_input_fn, eval_input_hook = get_test_inputs(
            batch_size=128, mnist_data=mnist)
        # Define the experiment
        experiment = tf.contrib.learn.Experiment(
            estimator=estimator,  # Estimator
            train_input_fn=train_input_fn,  # First-class function
            eval_input_fn=eval_input_fn,  # First-class function
            train_steps=params.train_steps,  # Minibatch steps
            min_eval_frequency=params.min_eval_frequency,  # Eval frequency
            train_monitors=[train_input_hook],  # Hooks for training
            eval_hooks=[eval_input_hook],  # Hooks for evaluation
            eval_steps=None  # Use evaluation feeder until it is empty
        )
        return experiment

    # Define model ############################################
    def get_estimator(run_config, params):
        """Return the model as a Tensorflow Estimator object.

        Args:
            run_config (RunConfig): Configuration for Estimator run.
            params (HParams): hyperparameters.
        """
        return tf.estimator.Estimator(
            model_fn=model_fn,  # First-class function
            params=params,  # HParams
            config=run_config  # RunConfig
        )

    def model_fn(features, labels, mode, params):
        """Model function used in the estimator.

        Args:
            features (Tensor): Input features to the model.
            labels (Tensor): Labels tensor for training and evaluation.
            mode (ModeKeys): Specifies if training, evaluation or prediction.
            params (HParams): hyperparameters.

        Returns:
            (EstimatorSpec): Model to be run by Estimator.
        """
        is_training = mode == ModeKeys.TRAIN
        # Define model's architecture
        logits = architecture(features, is_training=is_training)
        predictions = tf.argmax(logits, axis=-1)
        # Loss, training and eval operations are not needed during inference.
        loss = None
        train_op = None
        eval_metric_ops = {}
        if mode != ModeKeys.INFER:
            loss = tf.losses.sparse_softmax_cross_entropy(
                labels=tf.cast(labels, tf.int32),
                logits=logits)
            train_op = get_train_op_fn(loss, params)
            eval_metric_ops = get_eval_metric_ops(labels, predictions)
        return tf.estimator.EstimatorSpec(
            mode=mode,
            predictions=predictions,
            loss=loss,
            train_op=train_op,
            eval_metric_ops=eval_metric_ops
        )

    def get_train_op_fn(loss, params):
        """Get the training Op.

        Args:
            loss (Tensor): Scalar Tensor that represents the loss function.
            params (HParams): Hyperparameters (needs to have `learning_rate`)

        Returns:
            Training Op
        """
        return tf.contrib.layers.optimize_loss(
            loss=loss,
            global_step=tf.contrib.framework.get_global_step(),
            optimizer=tf.train.AdamOptimizer,
            learning_rate=params.learning_rate
        )

    def get_eval_metric_ops(labels, predictions):
        """Return a dict of the evaluation Ops.

        Args:
            labels (Tensor): Labels tensor for training and evaluation.
            predictions (Tensor): Predictions Tensor.

        Returns:
            Dict of metric results keyed by name.
        """
        return {
            'Accuracy': tf.metrics.accuracy(
                labels=labels,
                predictions=predictions,
                name='accuracy')
        }

    def architecture(inputs, is_training, scope='MnistConvNet'):
        """Return the output operation following the network architecture.

        Args:
            inputs (Tensor): Input Tensor
            is_training (bool): True iff in training mode
            scope (str): Name of the scope of the architecture

        Returns:
            Logits output Op for the network.
        """
        with tf.variable_scope(scope):
            with slim.arg_scope(
                    [slim.conv2d, slim.fully_connected],
                    weights_initializer=tf.contrib.layers.xavier_initializer()):
                net = slim.conv2d(inputs, 20, [5, 5], padding='VALID',
                                  scope='conv1')
                net = slim.max_pool2d(net, 2, stride=2, scope='pool2')
                net = slim.conv2d(net, 40, [5, 5], padding='VALID',
                                  scope='conv3')
                net = slim.max_pool2d(net, 2, stride=2, scope='pool4')
                net = tf.reshape(net, [-1, 4 * 4 * 40])
                net = slim.fully_connected(net, 256, scope='fn5')
                net = slim.dropout(net, is_training=is_training,
                                   scope='dropout5')
                net = slim.fully_connected(net, 256, scope='fn6')
                net = slim.dropout(net, is_training=is_training,
                                   scope='dropout6')
                net = slim.fully_connected(net, 10, scope='output',
                                           activation_fn=None)
            return net

    # Define data loaders #####################################
    class IteratorInitializerHook(tf.train.SessionRunHook):
        """Hook to initialise data iterator after Session is created."""

        def __init__(self):
            super(IteratorInitializerHook, self).__init__()
            self.iterator_initializer_func = None

        def after_create_session(self, session, coord):
            """Initialise the iterator after the session has been created."""
            self.iterator_initializer_func(session)

    # Define the training inputs
    def get_train_inputs(batch_size, mnist_data):
        """Return the input function to get the training data.

        Args:
            batch_size (int): Batch size of training iterator that is returned
                              by the input function.
            mnist_data (Object): Object holding the loaded mnist data.

        Returns:
            (Input function, IteratorInitializerHook):
                - Function that returns (features, labels) when called.
                - Hook to initialise input iterator.
        """
        iterator_initializer_hook = IteratorInitializerHook()

        def train_inputs():
            """Returns training set as Operations.

            Returns:
                (features, labels) Operations that iterate over the dataset
                on every evaluation
            """
            with tf.name_scope('Training_data'):
                # Get Mnist data
                images = mnist_data.train.images.reshape([-1, 28, 28, 1])
                labels = mnist_data.train.labels
                # Define placeholders
                images_placeholder = tf.placeholder(
                    images.dtype, images.shape)
                labels_placeholder = tf.placeholder(
                    labels.dtype, labels.shape)
                # Build dataset iterator
                dataset = tf.contrib.data.Dataset.from_tensor_slices(
                    (images_placeholder, labels_placeholder))
                dataset = dataset.repeat(None)  # Infinite iterations
                dataset = dataset.shuffle(buffer_size=10000)
                dataset = dataset.batch(batch_size)
                iterator = dataset.make_initializable_iterator()
                next_example, next_label = iterator.get_next()
                # Set runhook to initialize iterator
                iterator_initializer_hook.iterator_initializer_func = \
                    lambda sess: sess.run(
                        iterator.initializer,
                        feed_dict={images_placeholder: images,
                                   labels_placeholder: labels})
                # Return batched (features, labels)
                return next_example, next_label

        # Return function and hook
        return train_inputs, iterator_initializer_hook

    def get_test_inputs(batch_size, mnist_data):
        """Return the input function to get the test data.

        Args:
            batch_size (int): Batch size of test iterator that is returned
                              by the input function.
            mnist_data (Object): Object holding the loaded mnist data.

        Returns:
            (Input function, IteratorInitializerHook):
                - Function that returns (features, labels) when called.
                - Hook to initialise input iterator.
        """
        iterator_initializer_hook = IteratorInitializerHook()

        def test_inputs():
            """Returns test set as Operations.

            Returns:
                (features, labels) Operations that iterate over the dataset
                on every evaluation
            """
            with tf.name_scope('Test_data'):
                # Get Mnist data
                images = mnist_data.test.images.reshape([-1, 28, 28, 1])
                labels = mnist_data.test.labels
                # Define placeholders
                images_placeholder = tf.placeholder(
                    images.dtype, images.shape)
                labels_placeholder = tf.placeholder(
                    labels.dtype, labels.shape)
                # Build dataset iterator
                dataset = tf.contrib.data.Dataset.from_tensor_slices(
                    (images_placeholder, labels_placeholder))
                dataset = dataset.batch(batch_size)
                iterator = dataset.make_initializable_iterator()
                next_example, next_label = iterator.get_next()
                # Set runhook to initialize iterator
                iterator_initializer_hook.iterator_initializer_func = \
                    lambda sess: sess.run(
                        iterator.initializer,
                        feed_dict={images_placeholder: images,
                                   labels_placeholder: labels})
                return next_example, next_label

        # Return function and hook
        return test_inputs, iterator_initializer_hook

    # Run ##############################################
    if __name__ == "__main__":
        tf.app.run(
            main=run_experiment
        )
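The reshape to 4 * 4 * 40 in the architecture function follows from the layer sizes, and the arithmetic can be checked in a few lines. The sketch assumes stride-1 convolutions and unpadded ('VALID') pooling, which is how the listing appears to use these layers; the two helper functions are hypothetical and use the standard output-size formulas.

```python
def valid_conv_size(size, kernel):
    """Output width of a stride-1 convolution with 'VALID' padding."""
    return size - kernel + 1


def pool_size(size, window, stride):
    """Output width of an unpadded ('VALID') pooling layer."""
    return (size - window) // stride + 1


size = 28                        # MNIST images are 28 x 28
size = valid_conv_size(size, 5)  # conv1: 28 -> 24
size = pool_size(size, 2, 2)     # pool2: 24 -> 12
size = valid_conv_size(size, 5)  # conv3: 12 -> 8
size = pool_size(size, 2, 2)     # pool4: 8 -> 4
flattened = size * size * 40     # conv3 produces 40 channels
```

Each image therefore reaches the first fully connected layer as a vector of 640 values.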

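Running inference on the trained model

After training the model, we can run estimator.predict to predict the class of given images. The following code sample shows how.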

    """Script to illustrate inference of a trained tf.estimator.Estimator.

    NOTE: This is dependent on mnist_estimator.py which defines the model.
    mnist_estimator.py can be found at:
    https://gist.github.com/peterroelants/9956ec93a07ca4e9ba5bc415b014bcca
    """
    import numpy as np
    import skimage.io
    import tensorflow as tf

    from mnist_estimator import get_estimator

    # Set default flags for the output directories
    FLAGS = tf.app.flags.FLAGS
    tf.app.flags.DEFINE_string(
        flag_name='saved_model_dir', default_value='./mnist_training',
        docstring='Output directory for model and training stats.')

    # MNIST sample images
    IMAGE_URLS = [
        'https://i.imgur.com/SdYYBDt.png',  # 0
        'https://i.imgur.com/Wy7mad6.png',  # 1
        'https://i.imgur.com/nhBZndj.png',  # 2
        'https://i.imgur.com/V6XeoWZ.png',  # 3
        'https://i.imgur.com/EdxBM1B.png',  # 4
        'https://i.imgur.com/zWSDIuV.png',  # 5
        'https://i.imgur.com/Y28rZho.png',  # 6
        'https://i.imgur.com/6qsCz2W.png',  # 7
        'https://i.imgur.com/BVorzCP.png',  # 8
        'https://i.imgur.com/vt5Edjb.png',  # 9
    ]

    def infer(argv=None):
        """Run the inference and print the results to stdout."""
        params = tf.contrib.training.HParams()  # Empty hyperparameters
        # Set the run_config where to load the model from
        run_config = tf.contrib.learn.RunConfig()
        run_config = run_config.replace(model_dir=FLAGS.saved_model_dir)
        # Initialize the estimator and run the prediction
        estimator = get_estimator(run_config, params)
        result = estimator.predict(input_fn=test_inputs)
        for r in result:
            print(r)

    def test_inputs():
        """Returns test set as Operations.

        Returns:
            (features, ) Operations that iterate over the test set.
        """
        with tf.name_scope('Test_data'):
            images = tf.constant(load_images(), dtype=np.float32)
            dataset = tf.contrib.data.Dataset.from_tensor_slices((images,))
            # Return as iteration in batches of 1
            return dataset.batch(1).make_one_shot_iterator().get_next()

    def load_images():
        """Load MNIST sample images from the web and return them in an array.

        Returns:
            Numpy array of size (10, 28, 28, 1) with MNIST sample images.
        """
        images = np.zeros((10, 28, 28, 1))
        for idx, url in enumerate(IMAGE_URLS):
            images[idx, :, :, 0] = skimage.io.imread(url)
        return images

    # Run ##############################################
    if __name__ == "__main__":
        tf.app.run(main=infer)
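The inference script loops over the prediction results one at a time, and each prediction is the index of the largest logit. Both ideas can be sketched in plain Python. The names `argmax`, `toy_predict` and `toy_model` are hypothetical, and the toy "model" simply returns its input as the class scores.

```python
def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def toy_predict(model, inputs):
    """Lazily yield one predicted class per input."""
    for features in inputs:
        yield argmax(model(features))


def toy_model(features):
    # Hypothetical "logits": one score per class, here simply the features.
    return features


results = toy_predict(toy_model, [[0.1, 0.7, 0.2], [0.9, 0.05, 0.05]])
predicted = [r for r in results]  # iterating drives the computation
```

Nothing is computed until the results are iterated, which is why the script consumes them in a for loop.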

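Original article: https://medium.com/onfido-tech/higher-level-apis-in-tensorflow-67bfb602e6c0

[This article is an original translation by Machine Heart (機器之心), a 51CTO column partner. WeChat official account: "Machine Heart" (id: almosthuman2014)]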