1. Prometheus Storage Problems and Solution

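Prometheus's local storage is designed for short-term data with modest requirements, so before relying on it you should confirm how long the data must be retained and what availability is required. To keep data for longer, we use Prometheus's external (remote) storage mechanism, in which Prometheus replicates its own data to an external store.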
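The external-storage mechanism is configured through the `remote_write` and `remote_read` sections of `prometheus.yml`. The helper below is a minimal sketch that prints such a stanza. It assumes InfluxDB 1.x's native Prometheus endpoints (`/api/v1/prom/write` and `/api/v1/prom/read`, available since InfluxDB 1.4) and a database named `prometheus`; the function name, host, port and database are illustrative assumptions, not part of the original setup. Note that the Nginx configuration in section 7.4 only proxies `/query` and `/write`, and whether the relay layer can mirror these endpoints depends on the relay version, so check its README before pointing the URLs at the balancer.

```bash
#!/usr/bin/env bash
# Sketch: print a prometheus.yml remote storage stanza.
# Assumes InfluxDB 1.x (>= 1.4) native Prometheus endpoints; render_remote_storage
# is a hypothetical helper and host/port/database are placeholders.
render_remote_storage() {
    local host=$1
    local port=${2:-8086}
    local db=${3:-prometheus}
    cat <<EOF
remote_write:
  - url: "http://${host}:${port}/api/v1/prom/write?db=${db}"
remote_read:
  - url: "http://${host}:${port}/api/v1/prom/read?db=${db}"
EOF
}

# Usage: append the output to prometheus.yml, then reload Prometheus.
# render_remote_storage 172.20.9.29 >> prometheus.yml
```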

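There are several ways to make Prometheus storage highly available; we chose an HA solution built on InfluxDB. InfluxDB is reliable, capable storage software with a rich feature set, and it integrates well with Grafana for visual monitoring.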

| Software | Version |
| --- | --- |
| Prometheus | 2.3.0 |
| Grafana | 6.0.0 |

2. InfluxDB Installation Overview

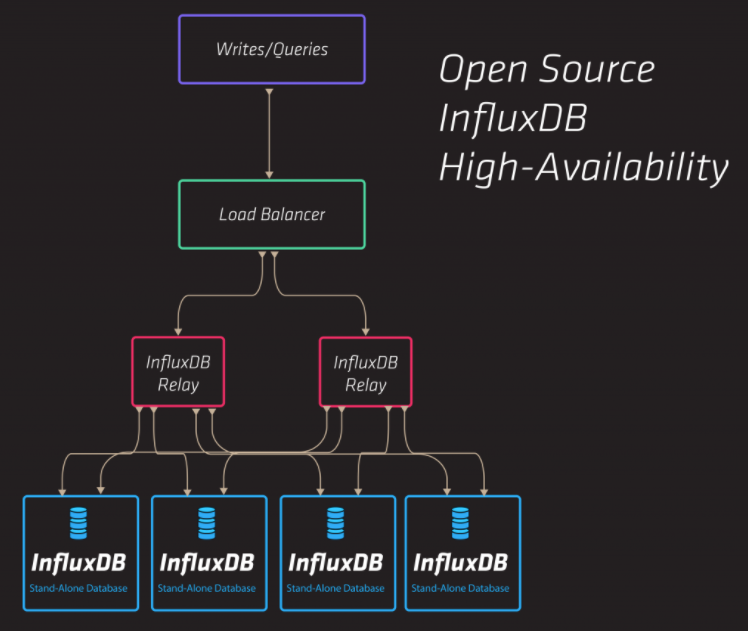

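For our deployment we followed the official Influx-Relay documentation (https://github.com/influxdata/influxdb-relay/blob/master/README.md). The installation requires three nodes:

- The first and second nodes are InfluxDB instances, each running an Influx-Relay daemon

- The third node is a load balancer running Nginx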

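InfluxDB's recommended Influx-Relay setup uses 5 nodes (four InfluxDB instances plus a load balancer node), but three nodes were sufficient for our workload.

All nodes run Ubuntu Xenial. Software versions are listed in the table below: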

| Software | Version |
| --- | --- |
| Ubuntu | Ubuntu 16.04.1 LTS |
| Kernel | 4.4.0-47-generic |
| InfluxDB | 2.1 |
| Influx-Relay | adaa2ea7bf97af592884fcfa57df1a2a77adb571 |
| Nginx | nginx/1.16.0 |

To deploy InfluxDB HA we used the deployment script described in section 7.1.

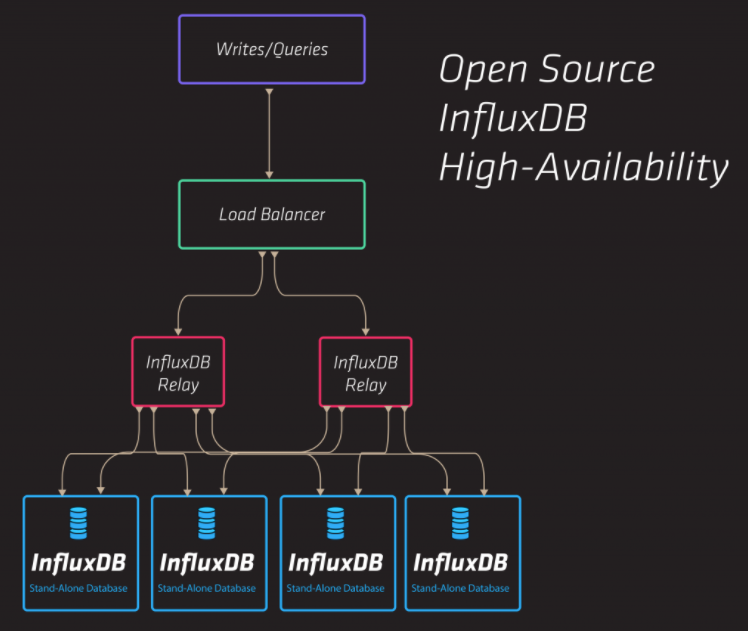

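3. InfluxDB HA Implementation

Built-in HA (clustering) was removed from open-source InfluxDB (as of version 1.xx) and is now offered only in the enterprise edition. A fork of the official relay is still actively maintained; this section focuses on that fork, influxdb-relay (https://github.com/vente-privee/influxdb-relay).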

1) Influx-Relay

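Influx-Relay is written in Go. In short, it proxies write requests to multiple destinations (InfluxDB instances). An Influx-Relay runs on each InfluxDB node, so a write sent to any relay is mirrored to every InfluxDB node. Influx-Relay is lightweight and robust and consumes few system resources. See section 7.3 for the Influx-Relay configuration.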
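The fan-out idea can be sketched in a few lines of bash. This is an illustration of the mirroring behaviour, not influxdb-relay's source: `post_point` and `fan_out_write` are hypothetical helpers, and treating a write as accepted once at least one backend returns `204 No Content` is a simplifying assumption modelled on the relay README's description.

```bash
#!/usr/bin/env bash
# Sketch (not influxdb-relay's code): POST one line-protocol payload to every
# backend /write URL and accept the write if at least one backend returns 204.

# Print the HTTP status code returned by one backend ("000" if unreachable).
post_point() {
    local url=$1 payload=$2
    curl -s -o /dev/null -w '%{http_code}' -XPOST "$url" --data-binary "$payload"
}

# Usage: fan_out_write PAYLOAD URL [URL...]
fan_out_write() {
    local payload=$1
    shift
    local accepted=0 url code
    for url in "$@"; do
        code=$(post_point "$url" "$payload") || true
        echo "${url} -> ${code}"
        if [ "$code" = "204" ]; then
            accepted=$((accepted + 1))
        fi
    done
    [ "$accepted" -ge 1 ]
}

# Example:
# fan_out_write 'cpu,host=node1 value=0.5' \
#     'http://172.20.9.29:8086/write?db=prometheus' \
#     'http://172.20.9.19:8086/write?db=prometheus'
```

Because every relay writes to all InfluxDB nodes, it does not matter which relay the balancer picks for a given write.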

2) Nginx

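The Nginx daemon runs on a separate node and acts as a load balancer (upstream proxy mode). It proxies "/query" requests directly to the InfluxDB instances and "/write" requests to the Influx-Relay daemons, using round-robin balancing for both. Incoming reads and writes are therefore balanced across the whole InfluxDB cluster. See section 7.4 for the Nginx configuration.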
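To make the routing rules concrete, the sketch below simulates them in bash. It is not Nginx code: `route` and `pick_server` are hypothetical helpers that mirror the `location`/`limit_except` rules of section 7.4 and approximate the default round-robin choice among equally weighted servers (real Nginx keeps round-robin state per worker process unless a shared `zone` is configured).

```bash
#!/usr/bin/env bash
# Simulation of the 7.4 routing rules:
#   /query -> "influxdb" upstream, GET/HEAD only (limit_except GET)
#   /write -> "relay" upstream, POST only (limit_except POST)
#   other paths fall through to Nginx's default handling (typically 404)

# Usage: route METHOD PATH -> upstream name or HTTP status
route() {
    local method=$1 path=$2
    case "$path" in
        /query*)
            if [ "$method" = GET ] || [ "$method" = HEAD ]; then echo influxdb; else echo 403; fi ;;
        /write*)
            if [ "$method" = POST ]; then echo relay; else echo 403; fi ;;
        *)
            echo 404 ;;
    esac
}

# Usage: pick_server N SERVER... -> server the N-th request (0-based) lands on
pick_server() {
    local n=$1
    shift
    local servers=("$@")
    echo "${servers[$(( n % ${#servers[@]} ))]}"
}
```

For example, `route POST /write` prints `relay`, and `pick_server 3 influx1_ip:8086 influx2_ip:8086` prints `influx2_ip:8086`.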

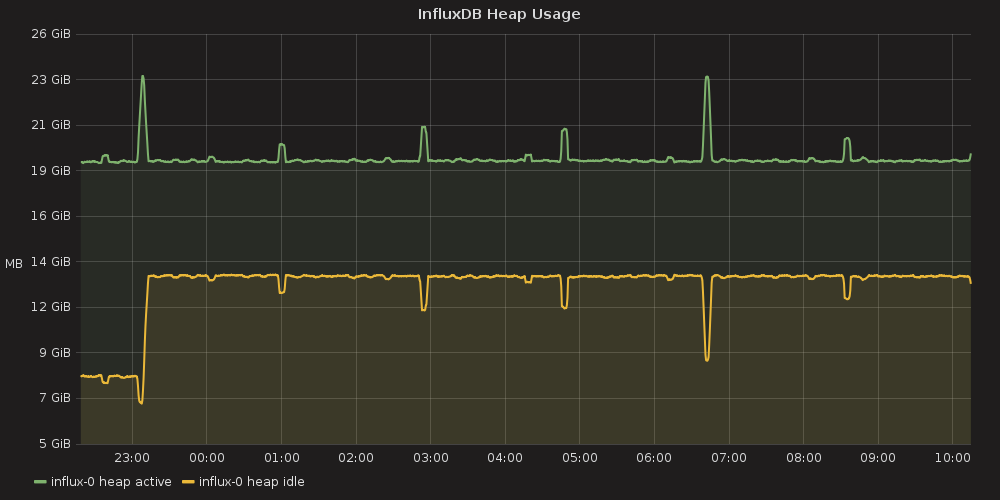

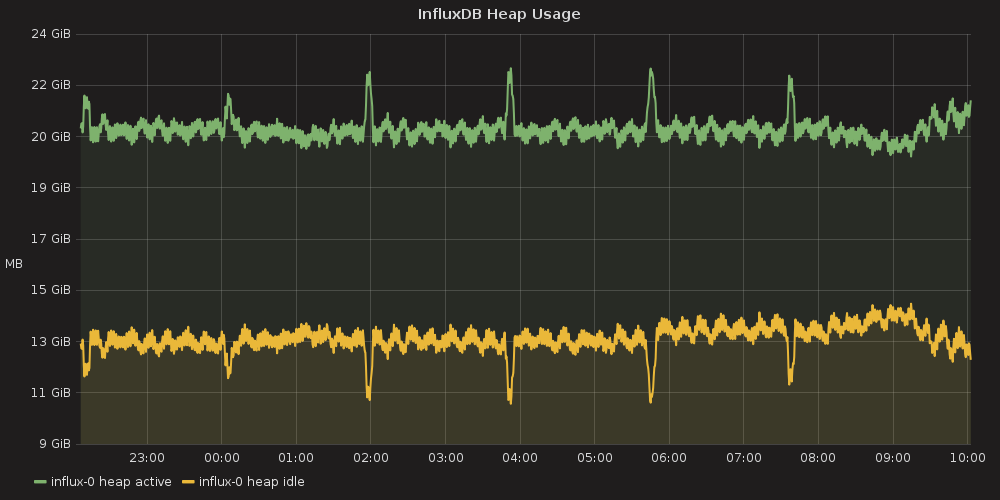

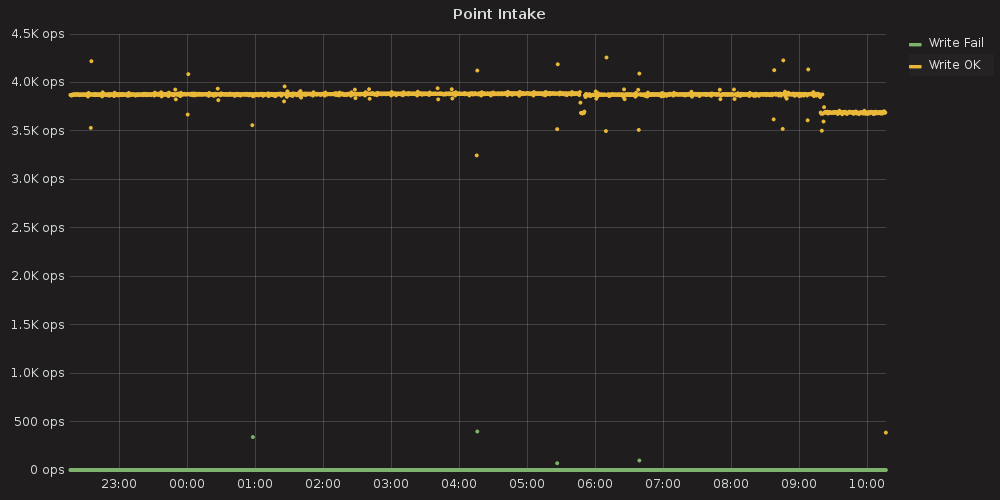

4. InfluxDB Monitoring

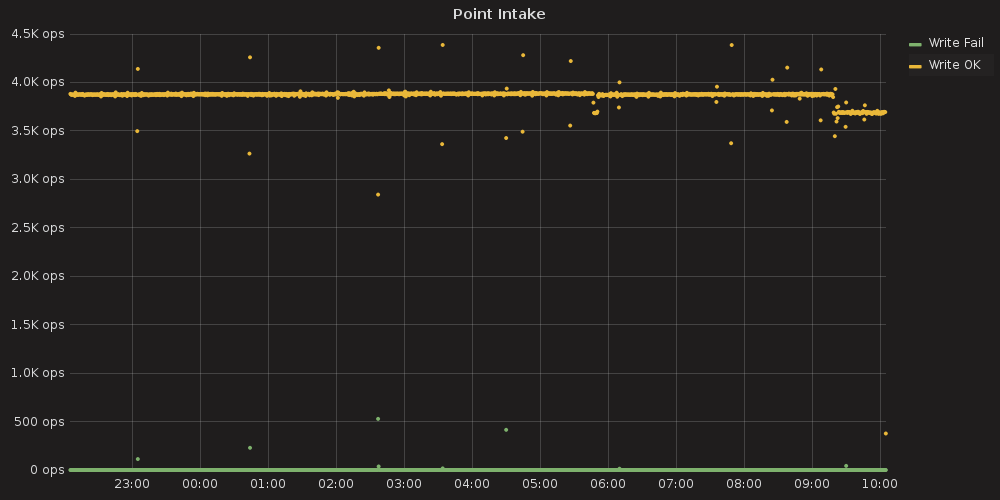

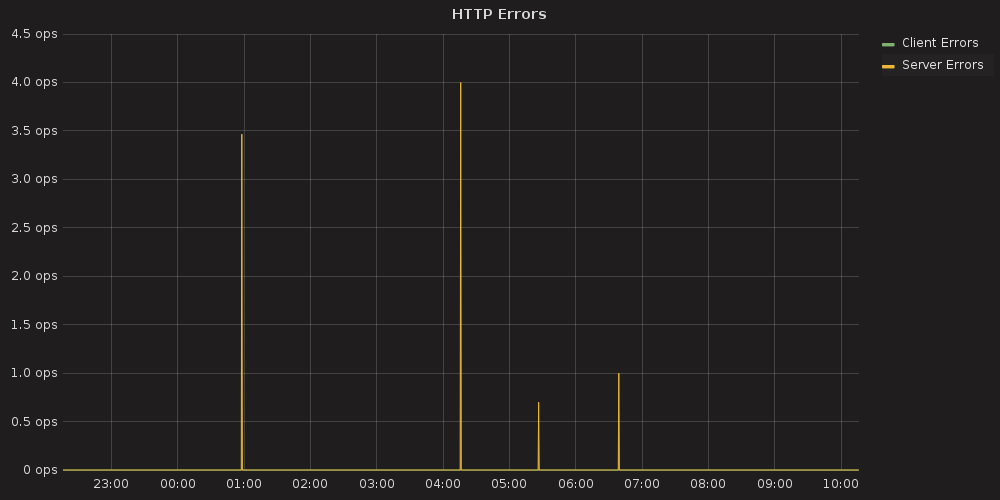

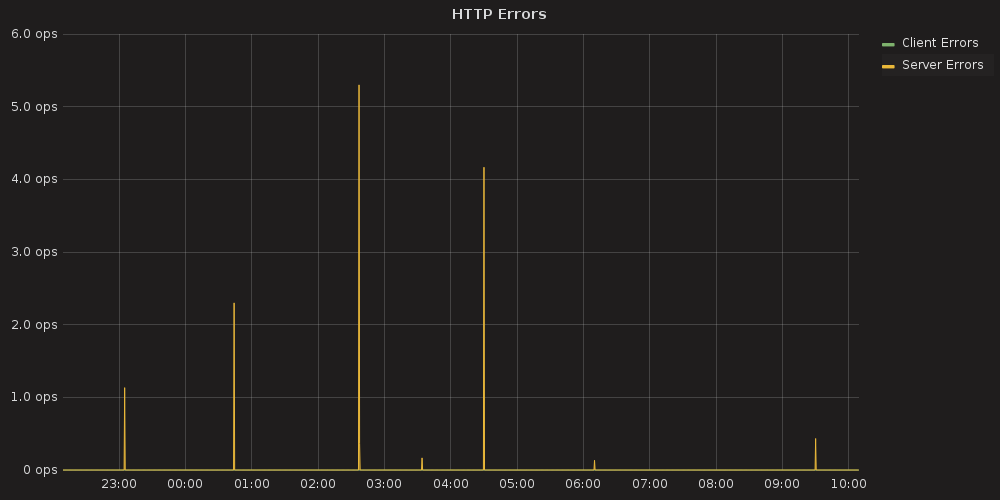

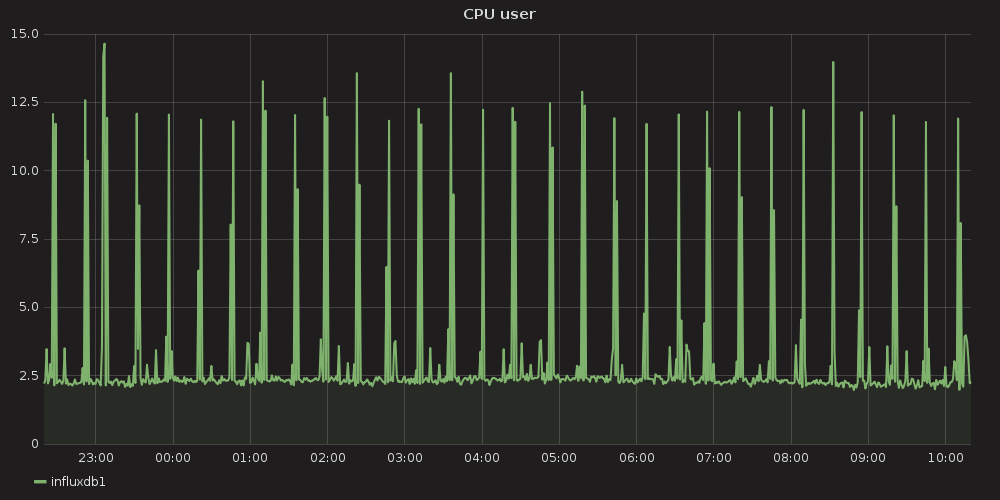

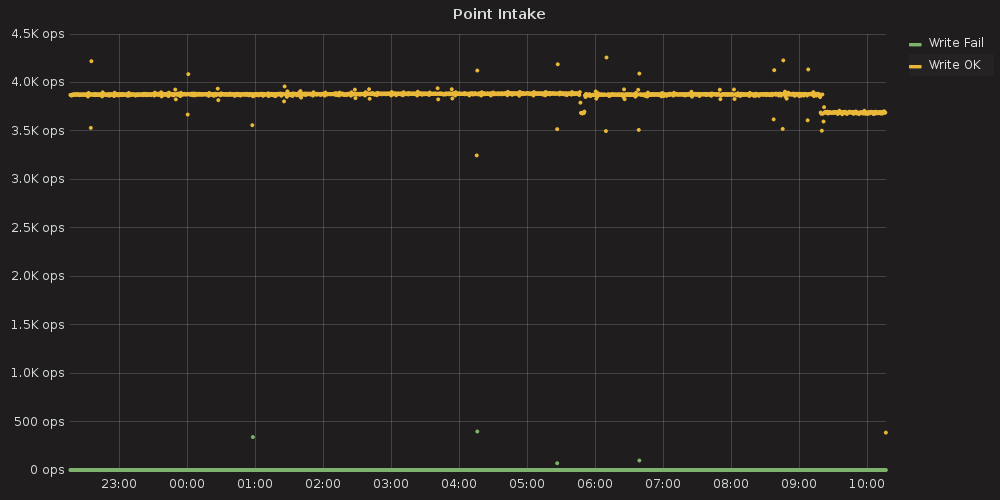

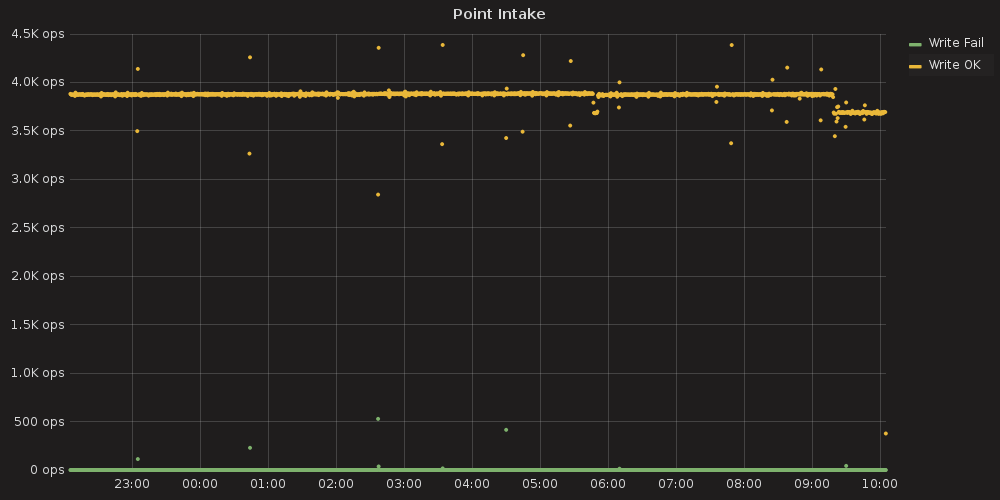

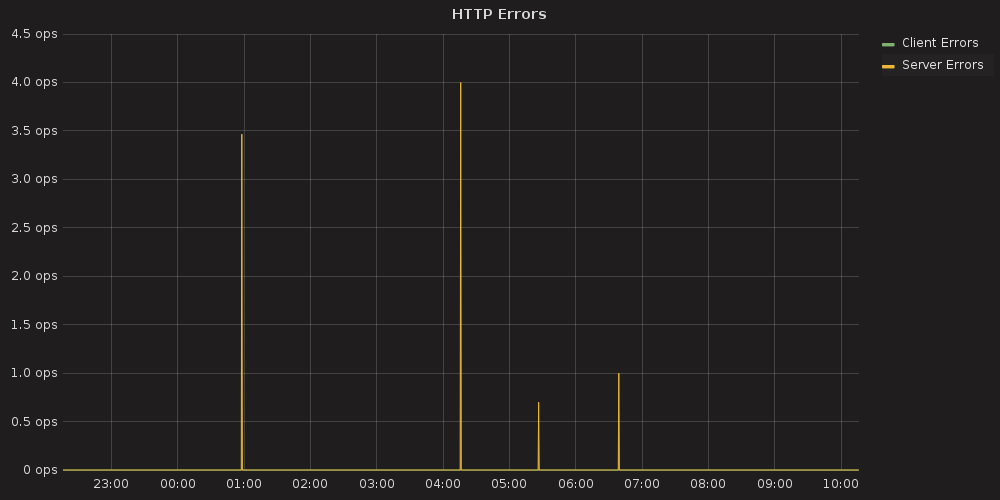

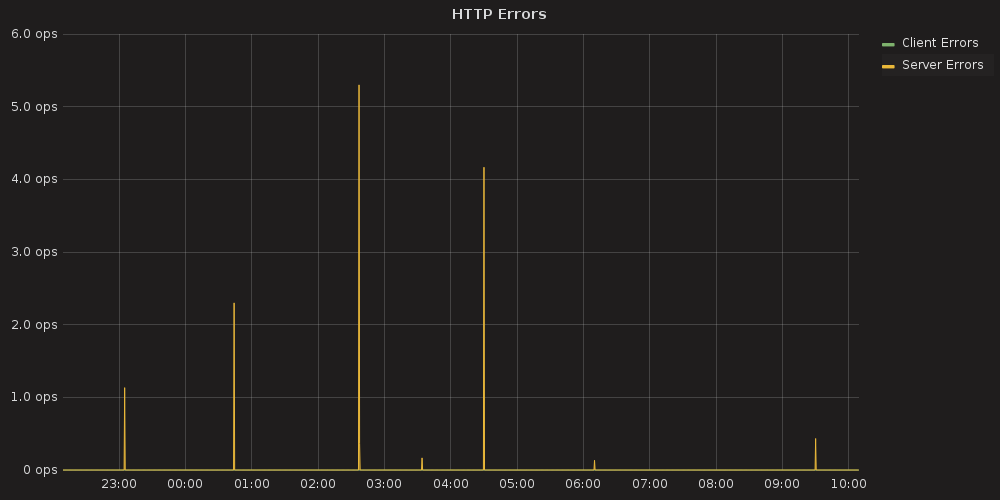

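The InfluxDB HA installation was tested with a Prometheus instance monitoring a 200-node service, which generated a heavy stream of data to its external storage. To evaluate InfluxDB performance, counters from the "_internal" database were collected and visualized with Grafana. We found that the 3-node InfluxDB HA setup easily handled the load of Prometheus monitoring 200 nodes, with no degradation in overall performance. The Grafana dashboard used for InfluxDB monitoring is described in section 7.5.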
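The same "_internal" counters can also be read without Grafana. Since each node writes its self-monitoring data locally (it does not pass through the relay) and the balancer round-robins `/query`, the sketch below queries one node directly on port 8086. The helper name is hypothetical, and the measurement and field names (`"monitor"."write"`, `pointReq`) are those of InfluxDB 1.x's self-monitoring; confirm them with `SHOW MEASUREMENTS` and `SHOW FIELD KEYS` on your version.

```bash
#!/usr/bin/env bash
# Sketch: per-10s write rate (points requested) from one node's _internal database.
# internal_write_rate is a hypothetical helper; it prints the raw JSON response.

# Usage: internal_write_rate NODE_IP
internal_write_rate() {
    local node=$1
    curl -sG "http://${node}:8086/query" \
        --data-urlencode 'db=_internal' \
        --data-urlencode 'q=SELECT non_negative_derivative(max("pointReq"), 10s) FROM "monitor"."write" WHERE time > now() - 5m GROUP BY time(10s)'
}

# internal_write_rate 172.20.9.29
```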

5. InfluxDB HA Performance Data

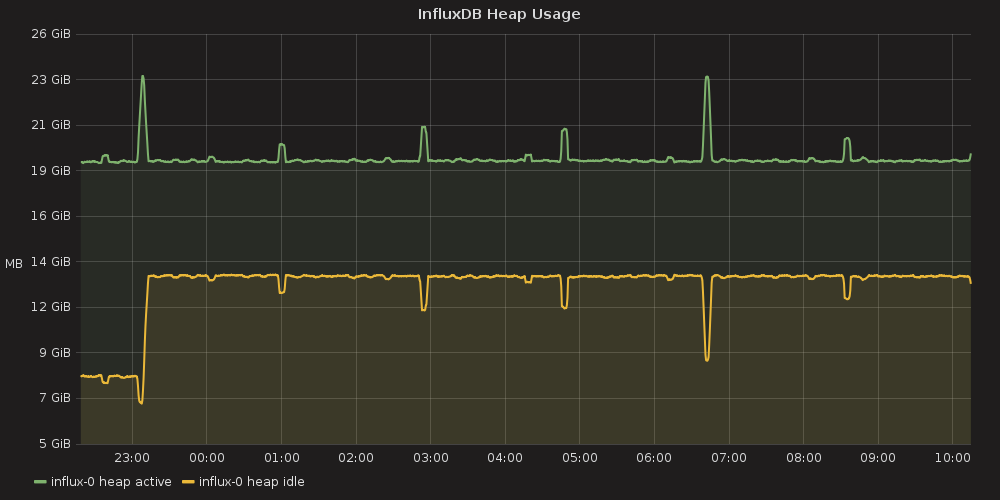

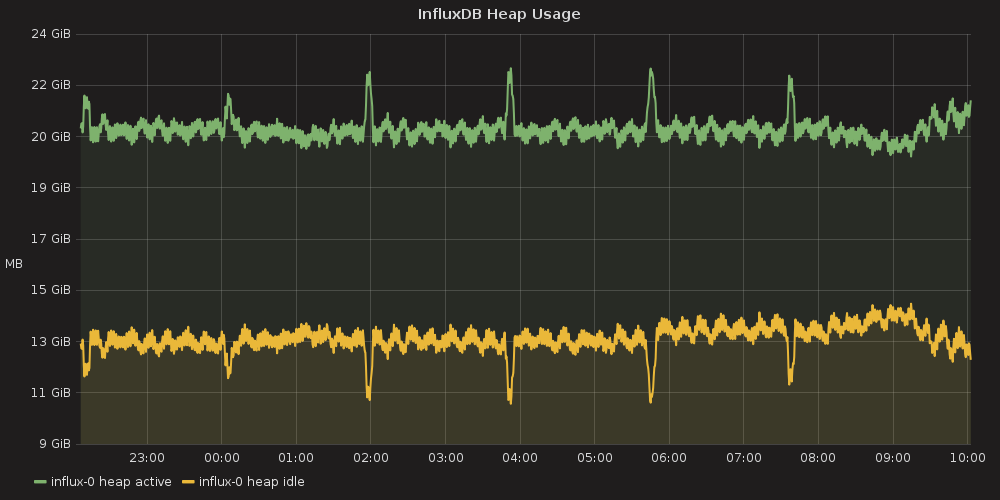

1) InfluxDB Database Performance

These charts were built in Grafana from the metrics that InfluxDB natively stores in its '_internal' database. For the visualization we used the Grafana InfluxDB Dashboard (https://docs.openstack.org/developer/performance-docs/methodologies/monitoring/influxha.html#grafana-influxdb-dashboard).
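Because each node's '_internal' database is local and `/query` requests through the balancer alternate between nodes, a single Grafana datasource pointing at the balancer would mix the two nodes' self-monitoring data. A minimal sketch, assuming Grafana's HTTP API (`POST /api/datasources`) and placeholder admin credentials, registers one datasource per node instead; `add_internal_datasource` and `GRAFANA_URL` are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: one Grafana InfluxDB datasource per node, each reading that node's _internal DB.
# GRAFANA_URL and the admin:admin credentials are placeholders (assumptions).
GRAFANA_URL=${GRAFANA_URL:-http://admin:admin@127.0.0.1:3000}

# Usage: add_internal_datasource NAME NODE_IP
add_internal_datasource() {
    local name=$1 node=$2
    curl -s -XPOST "${GRAFANA_URL}/api/datasources" \
        -H 'Content-Type: application/json' \
        -d "{\"name\":\"${name}\",\"type\":\"influxdb\",\"access\":\"proxy\",\"url\":\"http://${node}:8086\",\"database\":\"_internal\"}"
}

# add_internal_datasource influx1-internal 172.20.9.29
# add_internal_datasource influx2-internal 172.20.9.19
```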

Database performance of InfluxDB node1 and InfluxDB node2:

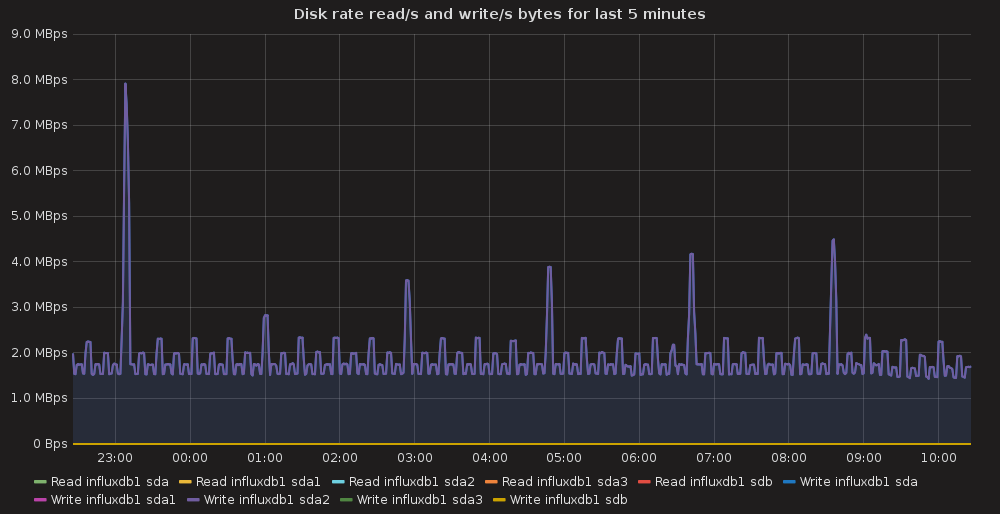

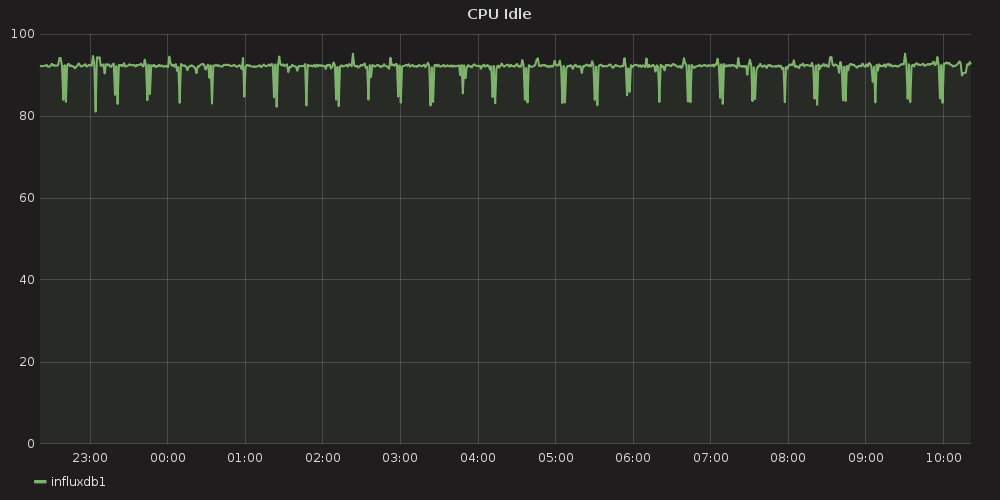

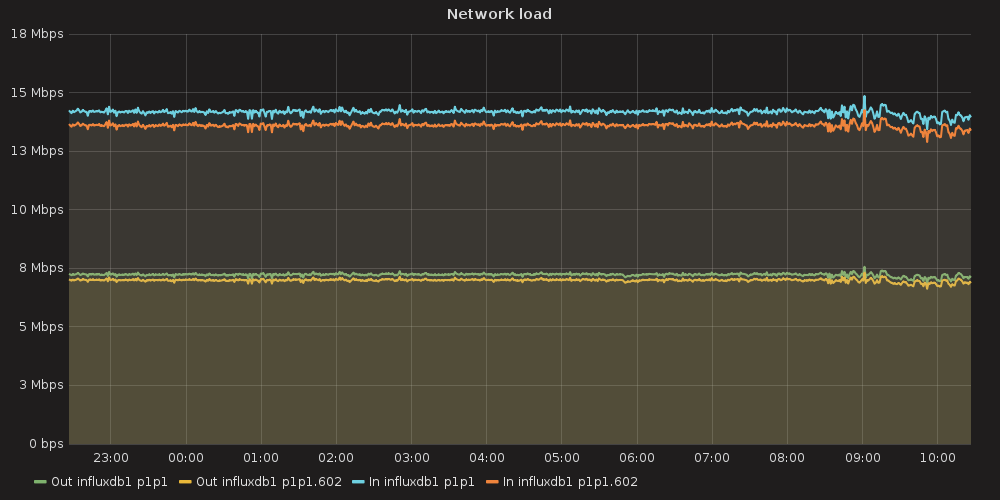

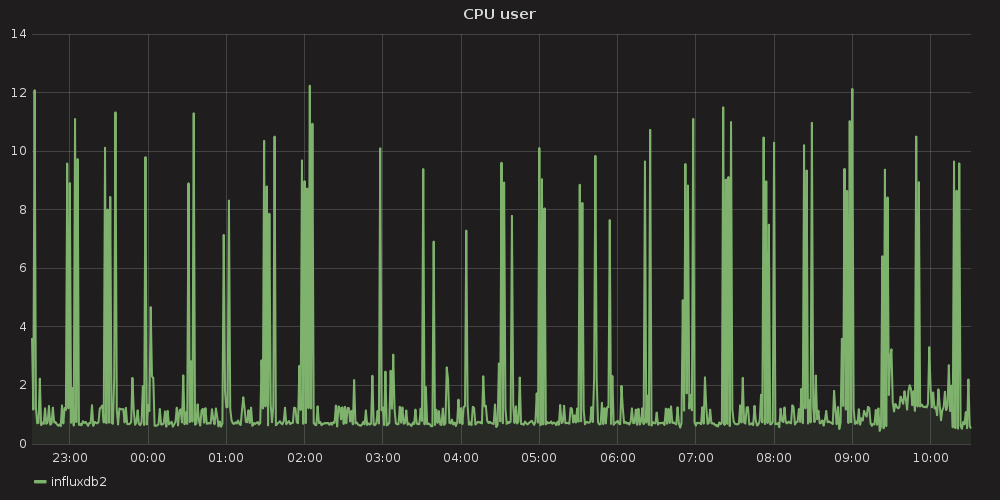

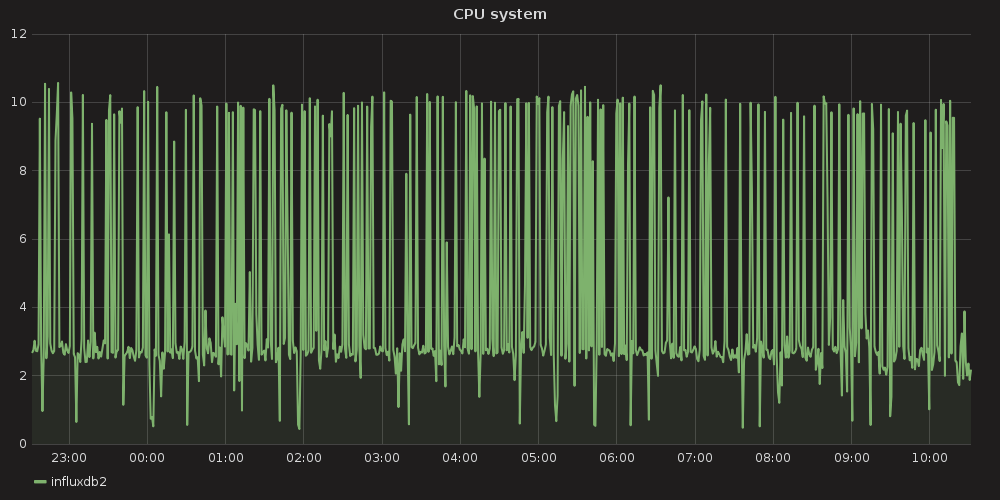

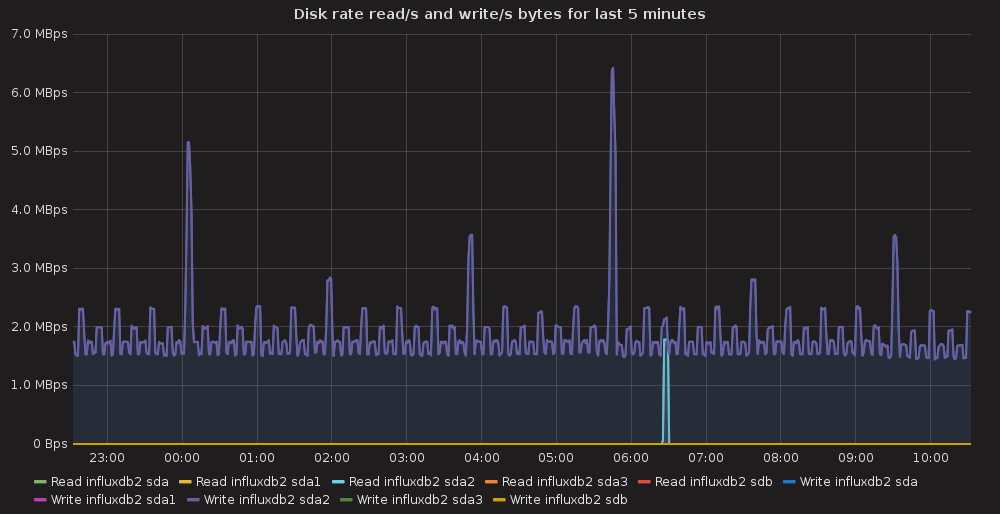

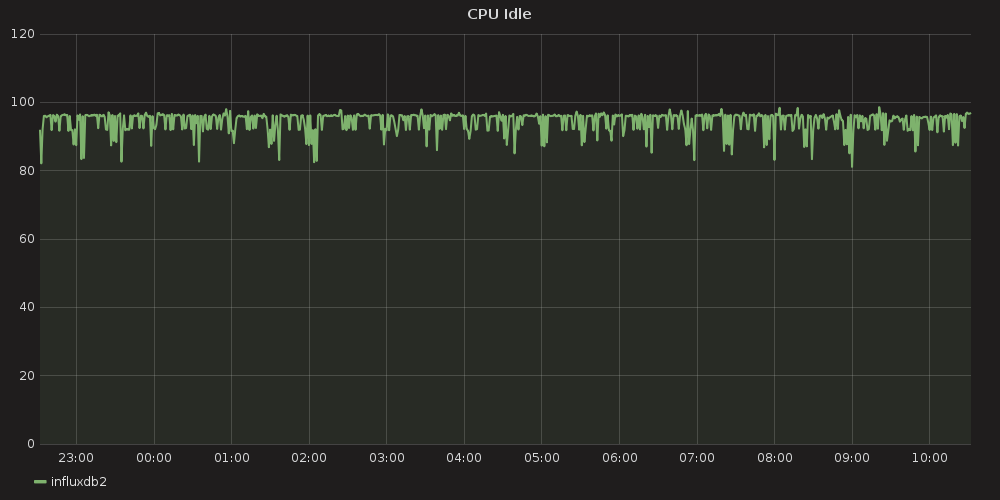

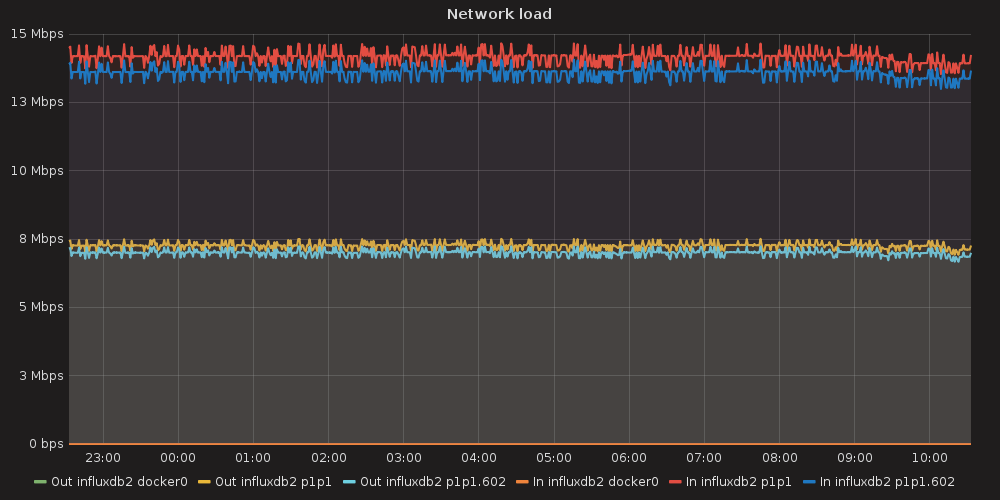

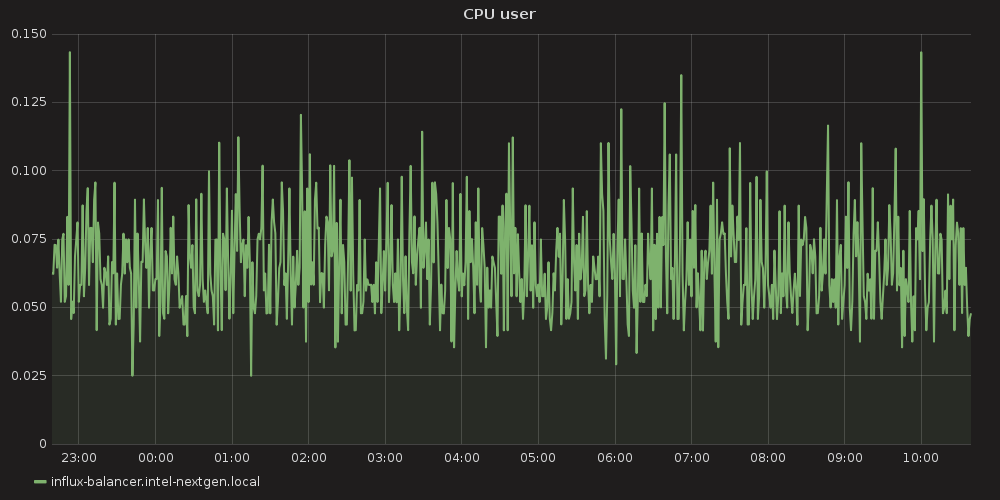

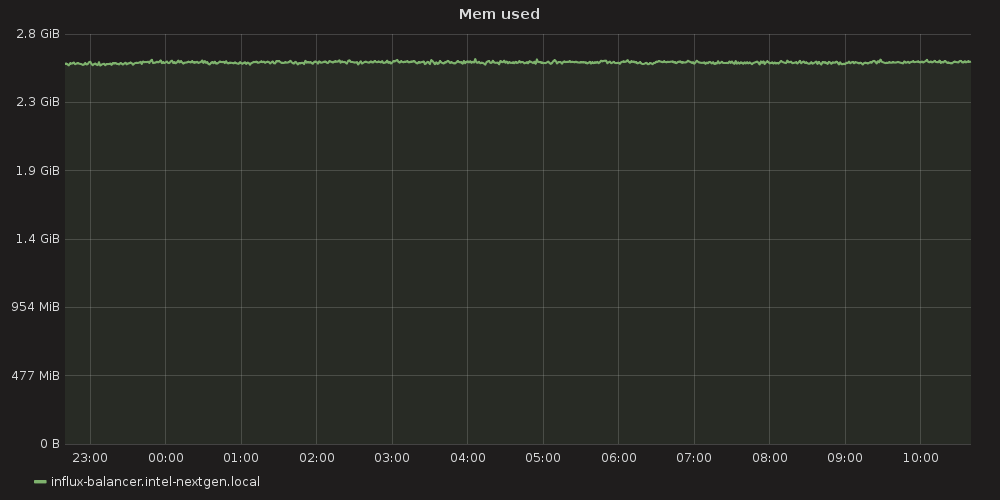

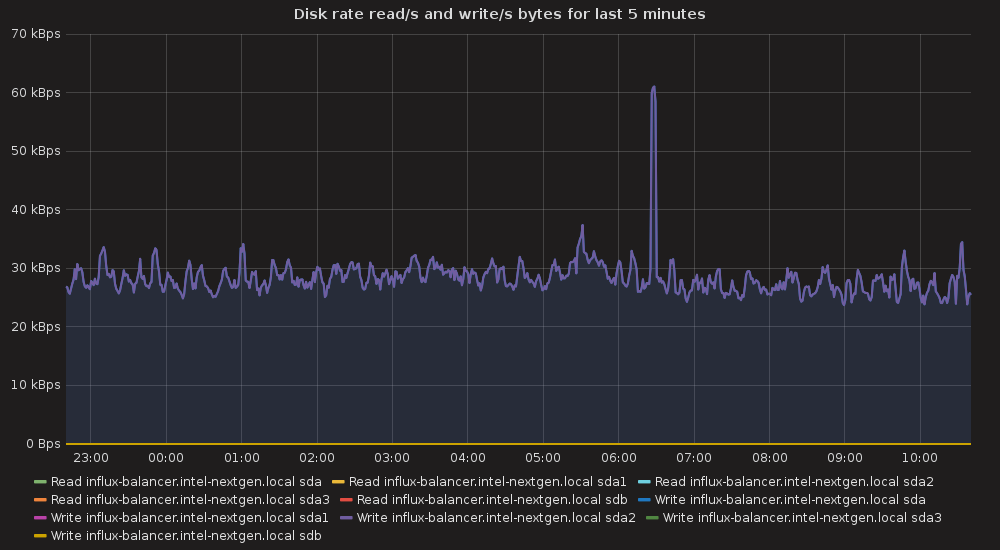

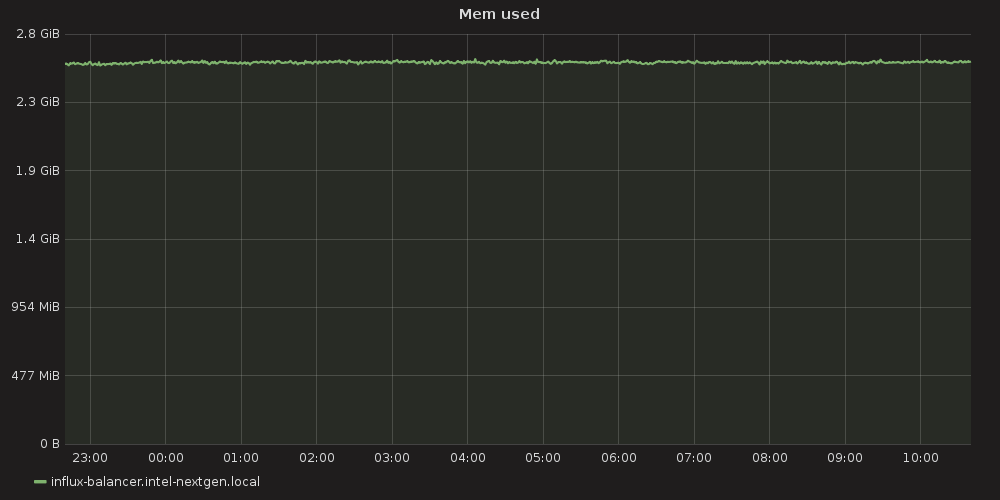

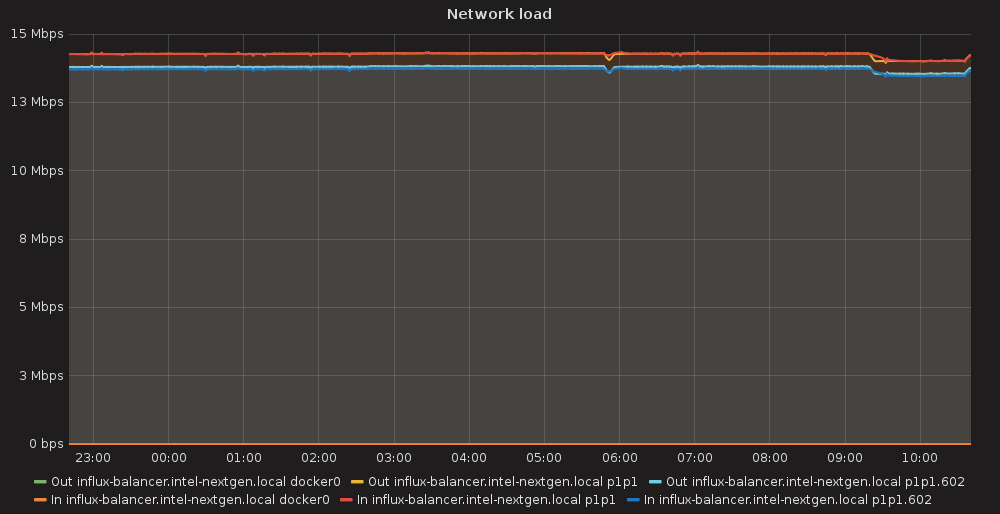

2) Operating System Performance

OS metrics were collected by a Telegraf agent installed on every cluster node, with only the required plugins enabled. See the Telegraf system configuration file (https://docs.openstack.org/developer/performance-docs/methodologies/monitoring/index.html#telegraf-sys-conf) in the Containerized Openstack Monitoring documentation (https://docs.openstack.org/developer/performance-docs/methodologies/monitoring/index.html).
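As an illustration only (this is not the OpenStack telegraf-sys-conf file), the sketch below renders a minimal Telegraf configuration that collects basic OS metrics and sends them through the HA balancer, so the relay mirrors them to both InfluxDB nodes. The plugin list, the `telegraf` database name and the helper name are assumptions. `skip_database_creation` (available in recent Telegraf releases) is set because the balancer only allows `GET` on `/query`, so the database has to be created on each node directly.

```bash
#!/usr/bin/env bash
# Sketch: minimal telegraf.conf writing through the balancer on port 7076.
# render_telegraf_conf is hypothetical; plugin list and database name are assumptions.

# Usage: render_telegraf_conf BALANCER_IP > /etc/telegraf/telegraf.conf
render_telegraf_conf() {
    local balancer=$1
    cat <<EOF
[agent]
  interval = "10s"

[[outputs.influxdb]]
  urls = ["http://${balancer}:7076"]
  database = "telegraf"
  skip_database_creation = true

[[inputs.cpu]]
  percpu = true
  totalcpu = true
[[inputs.mem]]
[[inputs.disk]]
[[inputs.diskio]]
[[inputs.net]]
[[inputs.system]]
EOF
}
```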

InfluxDB node1 OS performance

InfluxDB node2 OS performance

Load balancer node OS performance

6. How to Deploy

- Prepare three Ubuntu Xenial nodes with working networking and Internet access

- Temporarily allow SSH access for the root user

- Unpack influx_ha_deployment.tar

- Set the SSH_PASSWORD variable in influx_ha/deploy_influx_ha.sh

- Set the node IP variables and run the deployment script, for example:

```bash
INFLUX1=172.20.9.29 INFLUX2=172.20.9.19 BALANCER=172.20.9.27 bash -xe influx_ha/deploy_influx_ha.sh
```
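After the script finishes, a quick smoke test can confirm that writes are mirrored. The sketch below is not part of the original deployment: the helper names, the database `prometheus` and the measurement `ha_check` are placeholders. It creates the database on each InfluxDB node directly (the relay only mirrors `/write`, and the balancer allows only `GET` on `/query`), writes one point through the balancer, and then counts it on each node.

```bash
#!/usr/bin/env bash
# Sketch of a post-deployment check; helper names, DB and measurement are placeholders.
INFLUX1=${INFLUX1:-172.20.9.29}
INFLUX2=${INFLUX2:-172.20.9.19}
BALANCER=${BALANCER:-172.20.9.27}
DB=${DB:-prometheus}

# 1. Schema changes are not mirrored, so create the database on every node.
create_db_on_nodes() {
    local node
    for node in "$@"; do
        curl -s -XPOST "http://${node}:8086/query" --data-urlencode "q=CREATE DATABASE \"${DB}\""
    done
}

# 2. Write one point through the balancer (-> relay -> all InfluxDB nodes).
write_via_balancer() {
    curl -s -i -XPOST "http://${BALANCER}:7076/write?db=${DB}" \
        --data-binary 'ha_check,source=smoke_test value=1'
}

# 3. Query a node directly; each node should report the point.
count_on_node() {
    curl -sG "http://$1:8086/query" --data-urlencode "db=${DB}" \
        --data-urlencode 'q=SELECT count("value") FROM "ha_check"'
}

# create_db_on_nodes "$INFLUX1" "$INFLUX2"
# write_via_balancer
# count_on_node "$INFLUX1"; count_on_node "$INFLUX2"
```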

7. Appendix

1) InfluxDB HA Deployment Script

```bash
#!/bin/bash -xe
# Deploys InfluxDB HA: two InfluxDB + influxdb-relay nodes and one Nginx balancer.

INFLUX1=${INFLUX1:-172.20.9.29}
INFLUX2=${INFLUX2:-172.20.9.19}
BALANCER=${BALANCER:-172.20.9.27}

SSH_PASSWORD="r00tme"
SSH_USER="root"
SSH_OPTIONS="-o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null"

type sshpass || { echo "sshpass is not installed"; exit 1; }

# Run a command on a remote node: ssh_exec NODE COMMAND...
ssh_exec() {
    node=$1
    shift
    sshpass -p "${SSH_PASSWORD}" ssh ${SSH_OPTIONS} "${SSH_USER}@${node}" "$@"
}

# Copy a local file to a remote node: scp_exec NODE SRC DST
scp_exec() {
    node=$1
    src=$2
    dst=$3
    sshpass -p "${SSH_PASSWORD}" scp ${SSH_OPTIONS} "${src}" "${SSH_USER}@${node}:${dst}"
}

# Install InfluxDB and influxdb-relay on one node: prepare_influx NODE RELAY_TEMPLATE
prepare_influx() {
    node=$1
    relay_conf=$2
    # Xenial's apt needs apt-transport-https for the https repository; the key URL
    # is the one InfluxData published at the time and may have changed since.
    ssh_exec "$node" "apt-get update && apt-get install -y apt-transport-https wget"
    ssh_exec "$node" "wget -qO- https://repos.influxdata.com/influxdb.key | apt-key add -"
    ssh_exec "$node" "echo 'deb https://repos.influxdata.com/ubuntu xenial stable' > /etc/apt/sources.list.d/influxdb.list"
    ssh_exec "$node" "apt-get update && apt-get install -y influxdb"
    scp_exec "$node" conf/influxdb.conf /etc/influxdb/influxdb.conf
    ssh_exec "$node" "service influxdb restart"

    # Build influxdb-relay with go get (needs git); the binary lands in $GOPATH/bin
    ssh_exec "$node" "echo 'GOPATH=/root/gocode' >> /etc/environment"
    ssh_exec "$node" "apt-get install -y golang-go git && mkdir -p /root/gocode"
    ssh_exec "$node" "source /etc/environment && export GOPATH && go get -u github.com/influxdata/influxdb-relay"

    scp_exec "$node" "$relay_conf" /root/relay.toml
    ssh_exec "$node" "sed -i -e 's/influx1_ip/${INFLUX1}/g' -e 's/influx2_ip/${INFLUX2}/g' /root/relay.toml"
    # Detach the relay completely so the ssh session can return
    ssh_exec "$node" "nohup /root/gocode/bin/influxdb-relay -config /root/relay.toml > /var/log/influxdb-relay.log 2>&1 < /dev/null &"
}

# prepare influx1 and influx2:
prepare_influx "$INFLUX1" conf/relay_1.toml
prepare_influx "$INFLUX2" conf/relay_2.toml

# prepare balancer:
ssh_exec "$BALANCER" "apt-get update && apt-get install -y nginx"
scp_exec "$BALANCER" conf/influx-loadbalancer.conf /etc/nginx/sites-enabled/influx-loadbalancer.conf
ssh_exec "$BALANCER" "sed -i -e 's/influx1_ip/${INFLUX1}/g' -e 's/influx2_ip/${INFLUX2}/g' /etc/nginx/sites-enabled/influx-loadbalancer.conf"
ssh_exec "$BALANCER" "nginx -t && service nginx reload"

echo "INFLUX HA SERVICE IS AVAILABLE AT http://${BALANCER}:7076"
```

Configuration bundle (used by the deployment script):

influx_ha_deployment.tar (https://docs.openstack.org/developer/performance-docs/_downloads/influx_ha_deployment.tar)
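Since the deployment script copies its configuration files with relative paths, a quick pre-flight check can catch an incomplete bundle. This is a minimal sketch: the file list is taken from the script's `scp_exec` calls, and `check_bundle` and `REQUIRED_FILES` are hypothetical names. Run it from the directory you will launch the script from.

```bash
#!/usr/bin/env bash
# Sketch: verify that every file the deployment script copies is present.
REQUIRED_FILES=(
    conf/influxdb.conf
    conf/relay_1.toml
    conf/relay_2.toml
    conf/influx-loadbalancer.conf
)

# Usage: check_bundle DIR -> lists missing files, non-zero exit status if any
check_bundle() {
    local dir=$1 missing=0 f
    for f in "${REQUIRED_FILES[@]}"; do
        if [ ! -f "${dir}/${f}" ]; then
            echo "missing: ${f}"
            missing=1
        fi
    done
    return "$missing"
}

# check_bundle . && INFLUX1=... INFLUX2=... BALANCER=... bash -xe influx_ha/deploy_influx_ha.sh
```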

2) InfluxDB Configuration

```toml
reporting-disabled = false
bind-address = ":8088"

[meta]
dir = "/var/lib/influxdb/meta"
retention-autocreate = true
logging-enabled = true

[data]
dir = "/var/lib/influxdb/data"
wal-dir = "/var/lib/influxdb/wal"
query-log-enabled = true
cache-max-memory-size = 1073741824
cache-snapshot-memory-size = 26214400
cache-snapshot-write-cold-duration = "10m0s"
compact-full-write-cold-duration = "4h0m0s"
max-series-per-database = 0
max-values-per-tag = 100000
trace-logging-enabled = false

[coordinator]
write-timeout = "10s"
max-concurrent-queries = 0
query-timeout = "0s"
log-queries-after = "0s"
max-select-point = 0
max-select-series = 0
max-select-buckets = 0

[retention]
enabled = true
check-interval = "30m0s"

[shard-precreation]
enabled = true
check-interval = "10m0s"
advance-period = "30m0s"

[admin]
enabled = false
bind-address = ":8083"
https-enabled = false
https-certificate = "/etc/ssl/influxdb.pem"

[monitor]
store-enabled = true
store-database = "_internal"
store-interval = "10s"

[subscriber]
enabled = true
http-timeout = "30s"
insecure-skip-verify = false
ca-certs = ""
write-concurrency = 40
write-buffer-size = 1000

[http]
enabled = true
bind-address = ":8086"
auth-enabled = false
log-enabled = true
write-tracing = false
pprof-enabled = true
https-enabled = false
https-certificate = "/etc/ssl/influxdb.pem"
https-private-key = ""
max-row-limit = 10000
max-connection-limit = 0
shared-secret = ""
realm = "InfluxDB"
unix-socket-enabled = false
bind-socket = "/var/run/influxdb.sock"

[[graphite]]
enabled = false
bind-address = ":2003"
database = "graphite"
retention-policy = ""
protocol = "tcp"
batch-size = 5000
batch-pending = 10
batch-timeout = "1s"
consistency-level = "one"
separator = "."
udp-read-buffer = 0

[[collectd]]
enabled = false
bind-address = ":25826"
database = "collectd"
retention-policy = ""
batch-size = 5000
batch-pending = 10
batch-timeout = "10s"
read-buffer = 0
typesdb = "/usr/share/collectd/types.db"
security-level = "none"
auth-file = "/etc/collectd/auth_file"

[[opentsdb]]
enabled = false
bind-address = ":4242"
database = "opentsdb"
retention-policy = ""
consistency-level = "one"
tls-enabled = false
certificate = "/etc/ssl/influxdb.pem"
batch-size = 1000
batch-pending = 5
batch-timeout = "1s"
log-point-errors = true

[[udp]]
enabled = false
bind-address = ":8089"
database = "udp"
retention-policy = ""
batch-size = 5000
batch-pending = 10
read-buffer = 0
batch-timeout = "1s"
precision = ""

[continuous_queries]
log-enabled = true
enabled = true
run-interval = "1s"
```
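The two byte-valued cache settings in the `[data]` section are easier to read in MiB. In InfluxDB 1.x, `cache-max-memory-size` is the size at which a shard's in-memory cache starts rejecting writes, and `cache-snapshot-memory-size` is the size at which the cache is snapshotted to a TSM file. The worked arithmetic below uses a hypothetical `to_mib` helper.

```bash
#!/usr/bin/env bash
# Worked check of the [data] cache settings:
#   cache-max-memory-size      = 1073741824 bytes = 1024 MiB (1 GiB = 1024^3)
#   cache-snapshot-memory-size = 26214400 bytes   = 25 MiB (25 * 1024^2)

# Usage: to_mib BYTES -> size in MiB (integer division)
to_mib() {
    echo $(( $1 / 1024 / 1024 ))
}

echo "cache-max-memory-size:      $(to_mib 1073741824) MiB"
echo "cache-snapshot-memory-size: $(to_mib 26214400) MiB"
```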

3) Influx-Relay Configuration

First instance (conf/relay_1.toml)

```toml
[[http]]
# Name of the HTTP server, used for display purposes only
name = "influx-http"
# TCP address to bind to, for HTTP server
bind-addr = "influx1_ip:9096"
# Array of InfluxDB instances to use as backends for Relay
# name: name of the backend, used for display purposes only.
# location: full URL of the /write endpoint of the backend
# timeout: Go-parseable time duration. Fail writes if incomplete in this time.
# skip-tls-verification: skip verification for HTTPS location. WARNING: it's insecure. Don't use in production.
output = [
    { name="local-influx1", location = "http://127.0.0.1:8086/write", timeout="10s" },
    { name="remote-influx2", location = "http://influx2_ip:8086/write", timeout="10s" },
]

[[udp]]
# Name of the UDP server, used for display purposes only
name = "influx-udp"
# UDP address to bind to
bind-addr = "127.0.0.1:9096"
# Socket buffer size for incoming connections
read-buffer = 0 # default
# Precision to use for timestamps
precision = "n" # Can be n, u, ms, s, m, h
# Array of InfluxDB UDP instances to use as backends for Relay
# name: name of the backend, used for display purposes only.
# location: host and port of backend.
# mtu: maximum output payload size
# The [[udp]] listener in influxdb.conf (port 8089) is disabled, so these
# backends only receive data if it is enabled there.
output = [
    { name="local-influx1-udp", location="127.0.0.1:8089", mtu=512 },
    { name="remote-influx2-udp", location="influx2_ip:8089", mtu=512 },
]
```

Second instance (conf/relay_2.toml)

```toml
[[http]]
# Name of the HTTP server, used for display purposes only
name = "influx-http"
# TCP address to bind to, for HTTP server
bind-addr = "influx2_ip:9096"
# Array of InfluxDB instances to use as backends for Relay
# name: name of the backend, used for display purposes only.
# location: full URL of the /write endpoint of the backend
# timeout: Go-parseable time duration. Fail writes if incomplete in this time.
# skip-tls-verification: skip verification for HTTPS location. WARNING: it's insecure. Don't use in production.
output = [
    { name="local-influx2", location = "http://127.0.0.1:8086/write", timeout="10s" },
    { name="remote-influx1", location = "http://influx1_ip:8086/write", timeout="10s" },
]

[[udp]]
# Name of the UDP server, used for display purposes only
name = "influx-udp"
# UDP address to bind to
bind-addr = "127.0.0.1:9096"
# Socket buffer size for incoming connections
read-buffer = 0 # default
# Precision to use for timestamps
precision = "n" # Can be n, u, ms, s, m, h
# Array of InfluxDB UDP instances to use as backends for Relay
# name: name of the backend, used for display purposes only.
# location: host and port of backend.
# mtu: maximum output payload size
# The [[udp]] listener in influxdb.conf (port 8089) is disabled, so these
# backends only receive data if it is enabled there.
output = [
    { name="local-influx2-udp", location="127.0.0.1:8089", mtu=512 },
    { name="remote-influx1-udp", location="influx1_ip:8089", mtu=512 },
]
```
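The two relay files differ only in which node is local. The sketch below generalises the `[[http]]` section to N InfluxDB nodes (for example the four-instance layout mentioned in section 2): each node's relay writes to its local InfluxDB and to every peer, so a write accepted by any relay reaches all nodes. `render_relay_http` is a hypothetical helper; the backend names follow the `local-influxN` / `remote-influxN` pattern used above.

```bash
#!/usr/bin/env bash
# Sketch: render the [[http]] relay section for node SELF out of N InfluxDB nodes.

# Usage: render_relay_http SELF_INDEX IP1 IP2 [IP3...]   (SELF_INDEX is 1-based)
render_relay_http() {
    local self=$1
    shift
    local ips=("$@") i
    echo '[[http]]'
    echo 'name = "influx-http"'
    echo "bind-addr = \"${ips[self-1]}:9096\""
    echo 'output = ['
    # Local InfluxDB first, then every peer
    echo "    { name=\"local-influx${self}\", location = \"http://127.0.0.1:8086/write\", timeout=\"10s\" },"
    for i in "${!ips[@]}"; do
        if [ $((i + 1)) -ne "$self" ]; then
            echo "    { name=\"remote-influx$((i + 1))\", location = \"http://${ips[i]}:8086/write\", timeout=\"10s\" },"
        fi
    done
    echo ']'
}

# render_relay_http 1 172.20.9.29 172.20.9.19 > /root/relay.toml
```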

4) Nginx Configuration

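```nginx
client_max_body_size 20M;

upstream influxdb {
    server influx1_ip:8086;
    server influx2_ip:8086;
}

upstream relay {
    server influx1_ip:9096;
    server influx2_ip:9096;
}

server {
    listen 7076;

    # Reads go straight to an InfluxDB instance (GET only)
    location /query {
        limit_except GET {
            deny all;
        }
        proxy_pass http://influxdb;
    }

    # Writes go to an influxdb-relay, which mirrors them to every InfluxDB instance (POST only)
    location /write {
        limit_except POST {
            deny all;
        }
        proxy_pass http://relay;
    }
}

# UDP load-balancing example (disabled). A stream {} block must live at the top
# level of nginx.conf, not in a sites-enabled file included inside http {}.
# stream {
#     upstream test {
#         server server1:8003;
#         server server2:8003;
#     }
#
#     server {
#         listen 7003 udp;
#         proxy_pass test;
#         proxy_timeout 1s;
#         proxy_responses 1;
#     }
# }
```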

5) Grafana InfluxDB Dashboard

The Grafana dashboard used to visualize InfluxDB is available as InfluxDB_Dashboard.json (https://docs.openstack.org/developer/performance-docs/_downloads/InfluxDB_Dashboard.json).
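The dashboard JSON can be loaded through the Grafana UI or, as sketched below, through Grafana's HTTP API (`POST /api/dashboards/db`), which expects the dashboard model wrapped in a `{"dashboard": ..., "overwrite": ...}` object. `GRAFANA_URL`, the credentials and `import_dashboard` are placeholders; the sketch assumes a plain dashboard model whose `id` is `null`. If the file was exported "for sharing" (it contains an `__inputs` section), use the UI import instead.

```bash
#!/usr/bin/env bash
# Sketch: upload a dashboard JSON file to Grafana.
# GRAFANA_URL and admin:admin credentials are placeholders (assumptions).
GRAFANA_URL=${GRAFANA_URL:-http://admin:admin@127.0.0.1:3000}

# Usage: import_dashboard FILE
import_dashboard() {
    local file=$1
    printf '{"dashboard": %s, "overwrite": true}' "$(cat "$file")" |
        curl -s -XPOST "${GRAFANA_URL}/api/dashboards/db" \
            -H 'Content-Type: application/json' --data-binary @-
}

# import_dashboard InfluxDB_Dashboard.json
```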

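8. Conclusion

InfluxDB's own clustering solution is currently closed source, and open-source InfluxDB does not support highly available clusters. Since Prometheus itself is not recommended as a long-term data store, influxdb-relay offers a fairly complete and reliable way to build a highly available monitoring setup.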

References:

- https://docs.openstack.org/developer/performance-docs/methodologies/monitoring/influxha.html#influxdbha-deployment-script

- https://yeya24.github.io/post/influxdb_ha/

- https://github.com/influxdata/influxdb-relay

- https://github.com/vente-privee/influxdb-relay